Are AI Studio's 1.0-pro-vision-001 and API's gemin...


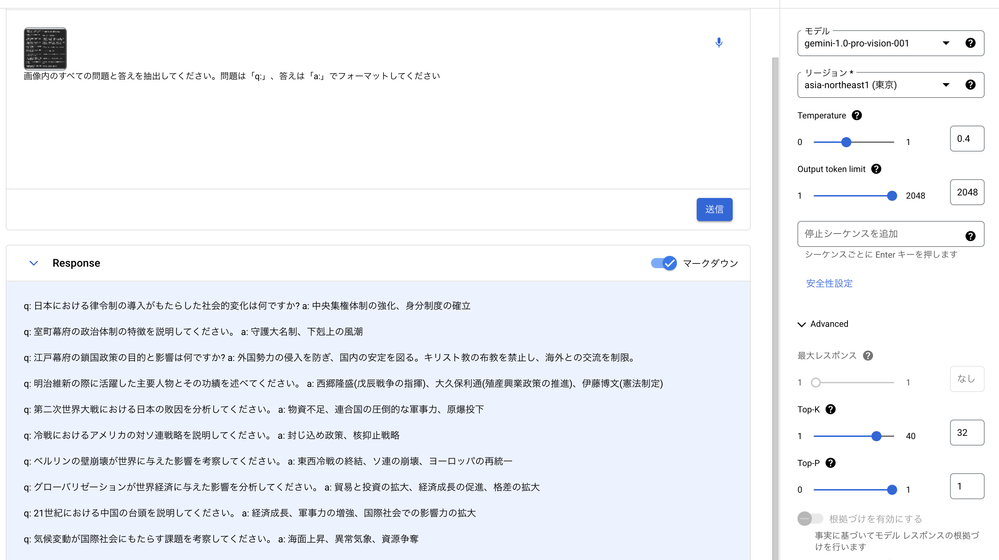

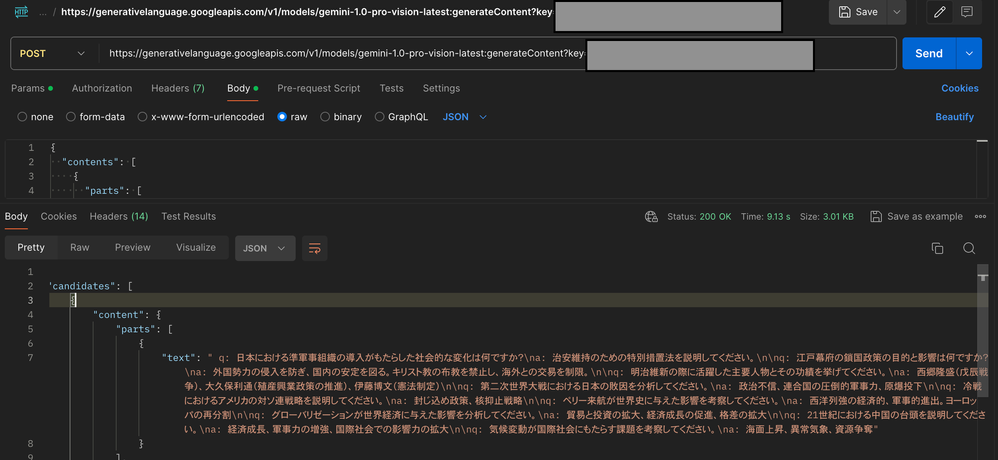

With Vertex AI Studio I get the expected response, but through the API the response is sometimes lower in quality, or simply wrong and different from what AI Studio returns. As far as I can tell, I am using the same model (1.0-pro-vision-001) and the same request parameters. Is the model different internally?

The image above is an example in Japanese. When I asked Vertex AI Studio to extract the questions and answers from this image, it extracted everything correctly, but when I sent the same request through the API (using Postman), some parts were extracted incorrectly.

In Postman, we use pro-vision-latest, which we understood to be the same as Vertex AI's 001 model.

Why does the quality of the answers change?

Is it the same 001 model but different internally?

Thank you in advance.


Hi @Yuma,

Thanks for reaching out! We're here to help you with your inquiries.

It sounds like you're concerned about the different results you're getting from the pro-vision models. Both models share the same core functionality, but versioning can play a role. The three-digit suffix in the model name (1.0-pro-vision-001) identifies a stable version, which is likely why you're seeing better results with it. The 'latest' version (1.0-pro-vision-latest) may still be under development, receiving ongoing bug fixes and improvements that can cause slight variations in output for some prompts.

A stable version of a Gemini model does not change and continues to be available for approximately six months after the release date of the next version of the model.

You can identify the version of a stable model by the three-digit number that's appended to the model name. For example, gemini-1.0-pro-001 is version number one of the stable release of the Gemini 1.0 Pro model.
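The naming convention described above can be expressed as a small check, which is handy for confirming which kind of model ID a request actually uses. This is a minimal sketch that assumes only the rule stated here (a three-digit suffix means a stable version, a `-latest` suffix means the periodically updated version); `classify_model_id` is a hypothetical helper, not part of any Google SDK.

```python
import re
from typing import Optional, Tuple

# Hypothetical helper based only on the naming convention described above:
#   "<base>-NNN"    -> stable version NNN
#   "<base>-latest" -> periodically updated version, not guaranteed stable
_STABLE = re.compile(r"^(?P<base>.+)-(?P<version>\d{3})$")
_LATEST = re.compile(r"^(?P<base>.+)-latest$")


def classify_model_id(model_id: str) -> Tuple[str, str, Optional[int]]:
    """Return (kind, base_name, version_number) for a model ID."""
    m = _STABLE.match(model_id)
    if m:
        # e.g. "gemini-1.0-pro-001" -> version 1 of the stable release
        return "stable", m.group("base"), int(m.group("version"))
    m = _LATEST.match(model_id)
    if m:
        # Updated over time, so output for a given prompt may drift.
        return "latest", m.group("base"), None
    # No suffix: the convention above does not say which version this uses.
    return "unspecified", model_id, None


for name in ["gemini-1.0-pro-001", "gemini-1.0-pro-vision-latest", "gemini-1.0-pro"]:
    print(name, "->", classify_model_id(name))
```

Comparing the two IDs from the question this way makes it clear that `...-001` and `...-latest` are different kinds of identifiers, even when the base name matches.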

The latest version of a model is updated periodically and includes incremental updates and improvements. These changes might result in subtle differences in the output over time for a given prompt. The latest version of a model is not guaranteed to be stable.
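To make API output comparable with Vertex AI Studio, one option is to send the same stable model ID and the same generation parameters that Studio shows, rather than a `-latest` alias. The sketch below only builds the request; it assumes the Vertex AI v1 REST `generateContent` endpoint for Google publisher models and its camelCase JSON fields. `build_pinned_request` is a hypothetical helper, and the project, location, and parameter values in the usage note are placeholders, not Studio's actual defaults. Even with identical settings, responses can still vary between calls when temperature is above zero.

```python
import base64
import json
import re

# Assumption: Vertex AI v1 REST endpoint for Google publisher models.
ENDPOINT = ("https://{location}-aiplatform.googleapis.com/v1/projects/{project}"
            "/locations/{location}/publishers/google/models/{model}:generateContent")


def build_pinned_request(project, location, model_id, image_bytes, mime_type,
                         prompt, temperature, top_p, top_k, max_output_tokens):
    """Hypothetical helper: return (url, json_body) for a pinned stable model."""
    # Reject "-latest" and unsuffixed IDs so the request cannot silently drift.
    if not re.search(r"-\d{3}$", model_id):
        raise ValueError(f"{model_id!r} is not a pinned stable version")
    url = ENDPOINT.format(location=location, project=project, model=model_id)
    body = {
        "contents": [{
            "role": "user",
            "parts": [
                # The image is sent inline as base64, followed by the text prompt.
                {"inlineData": {"mimeType": mime_type,
                                "data": base64.b64encode(image_bytes).decode("ascii")}},
                {"text": prompt},
            ],
        }],
        # Copy these values from the parameters panel used in Vertex AI Studio.
        "generationConfig": {
            "temperature": temperature,
            "topP": top_p,
            "topK": top_k,
            "maxOutputTokens": max_output_tokens,
        },
    }
    # ensure_ascii=False keeps Japanese prompt text readable in the payload.
    return url, json.dumps(body, ensure_ascii=False)
```

For example, `build_pinned_request("my-project", "us-central1", "gemini-1.0-pro-vision-001", image_bytes, "image/png", prompt, 0.4, 1.0, 32, 2048)` returns a URL and JSON body that can be pasted into Postman; the values shown are placeholders.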

I hope I was able to provide you with useful insights.
