Balancing Agility and Cost in a Kubernetes Environment with Google Kubernetes Engine

If your organization is embarking on a digital transformation journey and planning to modernize its application workloads, it’s important to evaluate and ask a few key questions.

- Is my company looking to deliver software at scale?
- Do I need to improve business agility and enable automation and portability of enterprise workloads?
- Does my current environment have a bunch of legacy systems where I’m facing scalability issues?
- Do I need to replace siloed operational models with cloud-centric ones that facilitate organizational outcomes?

If the answer to all of the above questions is “yes,” you need a platform where you can use agile services and run containerized applications at scale in a portable fashion, while keeping costs low and management simple.

As outlined in the Google Cloud Architecture Framework, Operational Excellence and Cost Optimization are key pillars in every organization’s IT or business strategy, but it’s important that cost reduction does not come at the expense of user experience or pose any risks to the business or its customers.

For customers adopting innovative cloud technologies for their application development and hosting, it can be challenging to implement cost optimization best practices, while also ensuring there are no negative effects on their applications’ performance and stability, or the end user experience.

Therefore, it’s important to maintain a balance between operational costs and agility in your cloud environment. Let’s discuss how we can achieve our desired performance and cost optimization goals in the Kubernetes environment on Google Cloud.

Agility and cost optimization with Google Kubernetes Engine

Google Kubernetes Engine (GKE) provides a managed environment for deploying, managing, and scaling your containerized applications using Google infrastructure.

GKE offers a range of features and capabilities to help customers balance operational excellence with cost optimization.

- GKE accelerates organizational agility by reducing deployment times to as little as 10 seconds. Without a managed platform like GKE, scaling containerized workloads can be difficult and expensive.
- Customers benefit by eliminating CAPEX and moving to an OPEX-based consumption model on a modern container management platform.
- Single-click cluster creation, with support for up to 15,000 nodes in a single cluster.
- AI-driven autoscaling, auto-repair, and auto-upgrade features keep clusters healthy and highly responsive to resource spikes.
- Support for GPUs and TPUs for specialized workloads such as machine learning, video transcoding, and image processing.
- A single-pane view with fine-grained visibility into your clusters, broken down by namespaces and labels, so you can drill down into resource usage statistics.
- Native integration with security features such as Kubernetes network policies, providing a safe hosting environment for mission-critical applications.

Best practices for cost optimization in GKE environments

Cost optimization is the combination of cost control and cost visibility, and in Google Cloud, we have numerous services that cater to both requirements to help achieve the desired results.

It’s important to understand that cost optimization in a GKE environment is a shared responsibility between Google Cloud as a service provider and the end customer.

Cloud cost optimization in the shared responsibility model

When it comes to balancing agility with cost optimization in the Kubernetes environment in Google Cloud, there are some tasks where the customer is responsible, and others where Google Cloud is responsible.

The customer’s responsibility for cost optimization in a GKE environment

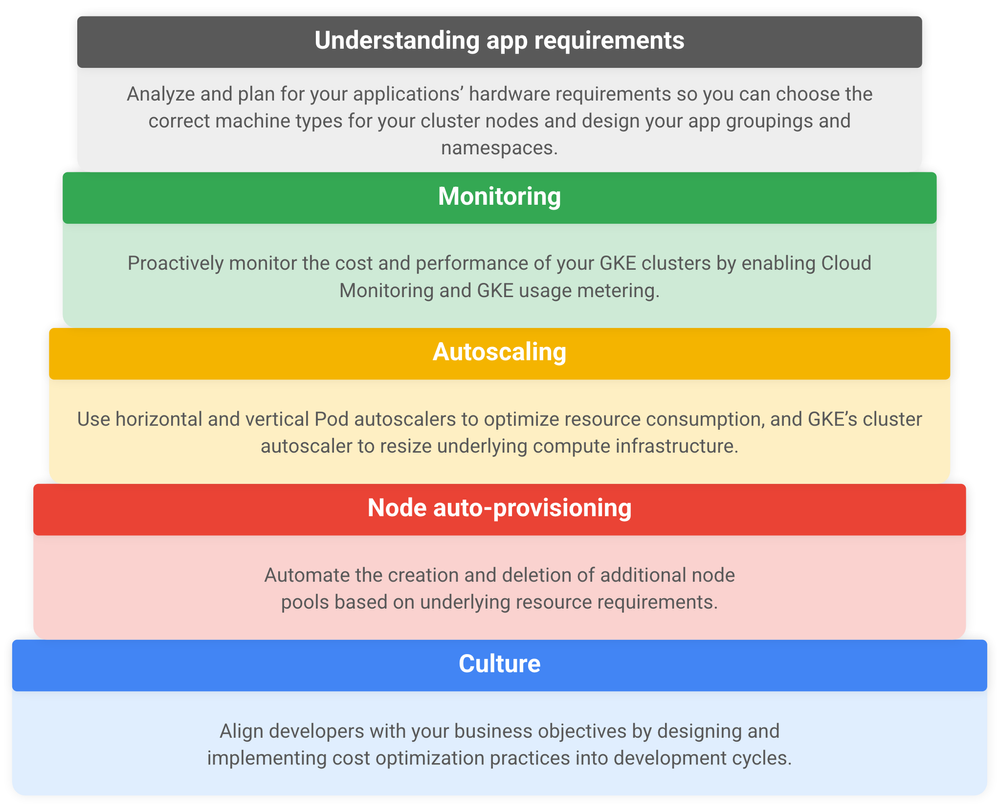

The customer’s responsibility for cost optimization in a GKE environment can be summarized by the image below:

Understanding application hardware requirements

Cloud architects or developers who are planning to develop or deploy their applications into a Kubernetes environment in Google Cloud need to first analyze and plan for their application’s hardware requirements based on the following factors:

- Number of users. Determine whether the application will serve a known, fixed number of users or an unbounded number of users, as is typical when it is deployed for internet users.
- Application category. Determine whether the application is external-facing or an internal enterprise application.
- Usage frequency. Determine whether the application is used heavily every day or only occasionally, such as at the end of a month or quarter.
- Application nature. Determine whether the application is CPU-intensive, memory-intensive, or GPU-intensive.
- Storage requirements. Identify the storage requirements of each application.

An accurate analysis of your application’s hardware requirements lets you choose the right machine types for your cluster nodes and design your application groupings and namespaces. When selecting node types, you can reduce costs further by using preemptible nodes. Because Compute Engine can stop preemptible VMs at any time, and they run for at most 24 hours, this option is not suited for applications that need to be up and running all the time.

Note: GKE uses E2 machine types by default. For details on the machine types available, see the Compute Engine machine families documentation.
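To show why matching the machine type to the application’s profile matters, the following minimal Python sketch estimates how many nodes of a given shape a workload needs. It packs replicas onto each node according to their CPU and memory requests. The node shapes, their allocatable figures, and the `nodes_needed` helper are hypothetical. Real allocatable capacity is lower than a machine’s nominal size because GKE reserves some resources for system components. The sketch also ignores per-node pricing, DaemonSets, and scheduling constraints.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeShape:
    """Allocatable capacity of one node (hypothetical figures, not official GKE values)."""
    name: str
    cpu_millicores: int
    memory_mib: int


@dataclass(frozen=True)
class Workload:
    """Per-replica resource requests plus the number of replicas to run."""
    cpu_millicores: int
    memory_mib: int
    replicas: int


def nodes_needed(shape: NodeShape, workload: Workload) -> int:
    """Estimate how many identical nodes are needed to fit every replica.

    Each node holds as many replicas as its scarcer resource (CPU or memory)
    allows; system Pods, DaemonSets, and scheduling constraints are ignored.
    """
    per_node = min(shape.cpu_millicores // workload.cpu_millicores,
                   shape.memory_mib // workload.memory_mib)
    if per_node == 0:
        raise ValueError(f"a single replica does not fit on a {shape.name} node")
    return math.ceil(workload.replicas / per_node)


# Hypothetical shapes: same CPU per node, different memory per node.
standard = NodeShape("standard", cpu_millicores=3900, memory_mib=13000)
highmem = NodeShape("highmem", cpu_millicores=3900, memory_mib=28000)

# A memory-intensive application: memory, not CPU, limits packing.
memory_heavy = Workload(cpu_millicores=500, memory_mib=3000, replicas=12)

print(nodes_needed(standard, memory_heavy))  # 3 (4 replicas per node)
print(nodes_needed(highmem, memory_heavy))   # 2 (7 replicas per node)
```

With these figures, the memory-heavy workload needs three `standard` nodes but only two `highmem` nodes. Whether that is actually cheaper depends on the per-node price of each machine type.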

Monitoring GKE clusters

Proactive monitoring is key to managing the costs of Google Cloud resources in an effective manner. To monitor your Kubernetes environment and optimize costs effectively:

- Enable Cloud Monitoring for your GKE clusters to track node resource consumption, which helps GKE administrators plan the correct node sizing.
- Enable GKE usage metering to export resource usage, broken down by Kubernetes namespaces and labels, to BigQuery. There you can view the data and attribute usage to meaningful entities such as teams or products. This supports effective cost management and decision making.
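Once usage metering data is in BigQuery, attributing costs mostly comes down to grouping usage by namespace or by label. The following minimal Python sketch shows that grouping on in-memory records. The `UsageRecord` type and its fields are hypothetical simplifications, not the actual export table schema. In practice you would run the equivalent `GROUP BY` query in BigQuery.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, NamedTuple, Tuple


class UsageRecord(NamedTuple):
    """Hypothetical, simplified usage row (not the real export schema)."""
    namespace: str
    labels: Dict[str, str]
    resource_name: str  # for example "cpu" or "memory"
    amount: float       # usage in the resource's unit


def usage_by(records: Iterable[UsageRecord],
             key_fn: Callable[[UsageRecord], Hashable]) -> Dict[Tuple[Hashable, str], float]:
    """Sum usage per (group key, resource), like a GROUP BY in BigQuery."""
    totals: Dict[Tuple[Hashable, str], float] = defaultdict(float)
    for record in records:
        totals[(key_fn(record), record.resource_name)] += record.amount
    return dict(totals)


records = [
    UsageRecord("checkout", {"team": "payments"}, "cpu", 2.0),
    UsageRecord("checkout", {"team": "payments"}, "cpu", 1.5),
    UsageRecord("search", {"team": "discovery"}, "cpu", 4.0),
    UsageRecord("search", {}, "memory", 8.0),
]

# Attribute usage to namespaces.
print(usage_by(records, lambda r: r.namespace))
# Attribute usage to teams through a label, with a bucket for unlabeled usage.
print(usage_by(records, lambda r: r.labels.get("team", "unlabeled")))
```

Grouping by a label such as `team` only works if workloads are labeled consistently. That is one reason to settle on a labeling convention early.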

Autoscaling

GKE provides two types of autoscaling options to support cloud cost and performance optimization:

- Pod autoscaling: GKE supports Pod autoscaling through the Horizontal Pod Autoscaler (HPA), which changes the number of Pod replicas, and the Vertical Pod Autoscaler (VPA), which adjusts the CPU and memory requests of Pods. Based on resource consumption (CPU or memory), Kubernetes administrators can set thresholds and scale Pods horizontally or vertically. Right-sizing Pods this way improves node resource utilization and helps avoid spinning up unnecessary nodes, saving their cost.

Note: VPA must first be enabled at the cluster level (for example, in the cluster settings in the Google Cloud Console), whereas HPA needs no cluster-level setting. Both are then configured per workload, such as per Deployment.

- Cluster autoscaler (CA): GKE’s cluster autoscaler automatically resizes the underlying compute infrastructure of a GKE cluster. CA adds nodes for Pods that don’t have a place to run in the cluster and removes underutilized nodes.

Note: GKE’s cluster autoscaler is cost-aware. If a cluster has node pools with different machine types, CA attempts to expand the least expensive node pool that can run the pending workloads.
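The following minimal Python sketch illustrates the idea behind cost-aware scale-up: among the node pools whose nodes could host a pending Pod, pick the one with the lowest node price. It is a simplification, not the cluster autoscaler’s actual algorithm, which also simulates scheduling constraints and considers many pending Pods at once. The pool names, sizes, and prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class NodePool:
    """A node pool's per-node allocatable capacity and a hypothetical price."""
    name: str
    cpu_millicores: int
    memory_mib: int
    hourly_price: float  # hypothetical USD per node-hour, not a real GKE price


@dataclass(frozen=True)
class PendingPod:
    """Resource requests of a Pod that could not be scheduled."""
    cpu_millicores: int
    memory_mib: int


def cheapest_fitting_pool(pools: Sequence[NodePool], pod: PendingPod) -> Optional[NodePool]:
    """Return the least expensive pool whose node could host the Pod, or None."""
    fitting = [
        pool for pool in pools
        if pool.cpu_millicores >= pod.cpu_millicores and pool.memory_mib >= pod.memory_mib
    ]
    return min(fitting, key=lambda pool: pool.hourly_price, default=None)


pools = [
    NodePool("small", cpu_millicores=1900, memory_mib=6000, hourly_price=0.05),
    NodePool("highmem", cpu_millicores=3900, memory_mib=28000, hourly_price=0.12),
    NodePool("large", cpu_millicores=7900, memory_mib=30000, hourly_price=0.20),
]

print(cheapest_fitting_pool(pools, PendingPod(1000, 4000)).name)   # small
print(cheapest_fitting_pool(pools, PendingPod(2000, 20000)).name)  # highmem
print(cheapest_fitting_pool(pools, PendingPod(16000, 1000)))       # None
```

The second Pod would also fit on a `large` node, but the cost-aware choice is the cheaper `highmem` pool. No pool can host the third Pod, which is the situation node auto-provisioning is meant to handle.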

We recommend enabling cluster autoscaler with HPA or VPA.
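On the horizontal side, the Kubernetes HPA documentation describes the core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). No scaling happens when that ratio is within a tolerance (0.1 by default) of 1.0. The following minimal Python sketch reproduces the rule and clamps the result to the HPA’s minimum and maximum replica counts. It omits stabilization windows, scaling policies, and the handling of Pods that are not yet ready. The default `min_replicas` and `max_replicas` values are arbitrary illustrations.

```python
import math


def hpa_desired_replicas(current_replicas: int,
                         current_metric: float,
                         target_metric: float,
                         min_replicas: int = 1,
                         max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Core HPA rule: ceil(current_replicas * current_metric / target_metric).

    No change is made when the ratio is within `tolerance` of 1.0, and the
    result is clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Target: 60% average CPU utilization across the Pods.
print(hpa_desired_replicas(4, 90, 60))   # 6: load is 1.5x the target
print(hpa_desired_replicas(4, 63, 60))   # 4: within the 10% tolerance
print(hpa_desired_replicas(10, 20, 60))  # 4: scale in
print(hpa_desired_replicas(8, 120, 60))  # 10: capped by max_replicas
```

Scaling in (the third call) frees requested capacity on the nodes. That freed capacity is what lets the cluster autoscaler remove underutilized nodes, which is why the two work well together.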

Node auto-provisioning

With node auto-provisioning, GKE can automatically create and delete node pools based on the resource requirements of pending workloads, which helps optimize costs as application demand changes. Node auto-provisioning can be enabled in the Automation settings in the Cloud Console, either when you create the cluster or afterward.

Culture

In addition to the various Google Cloud services and configuration options to consider, it’s important to remember your people and processes. Develop a cost-conscious culture early in the planning and development stages, and align your cost optimization goals and best practices with your development cycles. This includes, but is not limited to:

- Designing and establishing a monitoring and capacity management strategy
- Extending cost-saving practices to how you design cloud-native applications for your GKE environment
- Reviewing the resource requirements of each application and finalizing Kubernetes resource quotas accordingly
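Kubernetes ResourceQuota objects cap the total resources a namespace can request. A Pod is rejected if its requests would push the namespace’s total past a hard limit such as `requests.cpu` or `requests.memory`. The following minimal Python sketch models that admission check to show why quotas should be derived from each application’s reviewed requirements. The `NamespaceQuota` class is a hypothetical illustration, not a Kubernetes API.

```python
from dataclasses import dataclass


@dataclass
class NamespaceQuota:
    """Hypothetical model of a namespace's requests.cpu / requests.memory quota."""
    hard_cpu_millicores: int
    hard_memory_mib: int
    used_cpu_millicores: int = 0
    used_memory_mib: int = 0

    def try_admit(self, cpu_millicores: int, memory_mib: int) -> bool:
        """Admit a Pod only if the namespace's total requests stay within the hard limits."""
        if (self.used_cpu_millicores + cpu_millicores > self.hard_cpu_millicores
                or self.used_memory_mib + memory_mib > self.hard_memory_mib):
            return False  # Kubernetes would reject the Pod with an exceeded-quota error
        self.used_cpu_millicores += cpu_millicores
        self.used_memory_mib += memory_mib
        return True


quota = NamespaceQuota(hard_cpu_millicores=2000, hard_memory_mib=4096)
print(quota.try_admit(1000, 2048))  # True
print(quota.try_admit(1000, 2048))  # True: exactly at the limit
print(quota.try_admit(500, 512))    # False: would exceed the CPU quota
```

A quota set well above what the applications need offers little cost control. One set below it blocks legitimate deployments. Reviewing requirements first keeps the limit meaningful.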

Google Cloud’s responsibility for cost optimization in a GKE environment

Google Cloud offers services and discounts to support cost optimization in a GKE environment.

GKE zonal cluster

If you’re evaluating GKE for development purposes, you can benefit from a zonal cluster (a cluster whose control plane runs in a single zone). The GKE free tier effectively waives the cluster management fee for one zonal cluster per billing account, which reduces platform costs for development environments.

Note: This free tier applies only to the cluster management fee of $0.10 per cluster per hour (charged in one-second increments). The GKE free tier provides $74.40 in monthly credits per billing account, which are applied to zonal or Autopilot clusters. For more pricing details, see the “Cluster management fee and free tier” section of the GKE pricing page.
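The $74.40 figure matches one cluster running for a full 31-day month. At $0.10 per hour, 744 hours of runtime accrue $0.10 × 744 = $74.40 in management fees, so the credit covers one cluster running continuously. The following minimal Python sketch does that arithmetic with per-second billing. It assumes every cluster is eligible for the credit and ignores node (Compute Engine) costs, which the free tier does not cover. The helper names are hypothetical.

```python
from decimal import Decimal
from typing import Iterable

HOURLY_FEE = Decimal("0.10")       # cluster management fee per cluster per hour
MONTHLY_CREDIT = Decimal("74.40")  # free tier credit per billing account per month
SECONDS_PER_HOUR = 3600


def management_fee(cluster_seconds: int) -> Decimal:
    """Management fee for one cluster, billed per second of runtime."""
    return HOURLY_FEE * cluster_seconds / SECONDS_PER_HOUR


def monthly_amount_due(seconds_per_cluster: Iterable[int]) -> Decimal:
    """Total management fees after the free tier credit (assumes all clusters are eligible)."""
    total = sum((management_fee(s) for s in seconds_per_cluster), Decimal("0"))
    return max(total - MONTHLY_CREDIT, Decimal("0"))


full_31_day_month = 31 * 24 * SECONDS_PER_HOUR  # 744 hours

print(management_fee(full_31_day_month))                           # 74.40
print(monthly_amount_due([full_31_day_month]))                     # fully covered
print(monthly_amount_due([full_31_day_month, full_31_day_month]))  # 74.40
```

`Decimal` is used instead of `float` so that currency amounts such as 0.10 are represented exactly.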

Usage discounts

Google Cloud offers committed use discounts, which are well-suited for application workloads with predictable resource needs. With a combination of preemptible VMs in node pools and committed use discounts for application workloads, organizations can benefit from significant cost savings at scale.

To recap, to achieve the strategic business objectives described in Google Cloud’s Architecture Framework, it’s important to maintain the right balance between operational cost and agility in your digital transformation journey. If you have any questions about what you’ve read in this blog or, more generally, about balancing agility and cloud cost optimization, please feel free to leave a comment below.
