How to Maximize Service Reliability with the Google Cloud Architecture Framework

Your services and applications need to be reliable to provide a great customer experience. But how can you make sure this is the case?

This is the primary question we set out to answer in our latest Architecture Framework Ask Me Anything event.

During this session, @tusharguptag, Customer Engineer and Infrastructure Modernization Specialist, and @Vivek_Rau, Customer Reliability Engineer and Google veteran, shared core reliability principles, provided practical implementation recommendations, and explained how to leverage Google Cloud products and features that support reliability best practices.

In this blog, we share key takeaways from the presentation, along with the session recording, written questions and answers, and supporting documentation, so you can refer back to them at any time.

If you have any further questions, please add a comment below and we’d be happy to help!

With this series, it's our goal to provide a trusted space where you can receive support and guidance along your cloud journey. So if you have any feedback or topic requests for our next sessions, please let us know in the comments, or by submitting the feedback form. You can keep an eye on upcoming sessions from the Cloud Events page in the Community. Thank you!

Session recording and slides

Watch the recording: https://youtu.be/tBZ7eESci0I

Core reliability principles

By definition, reliability is the probability that a system will meet certain performance standards and yield a desired output for a specified period of time. Ultimately, a system becomes more reliable if it has fewer, shorter, and smaller outages.

To maximize system reliability, we recommend adhering to the four core principles of reliability, as outlined in the Google Cloud Architecture Framework:

- Reliability is your top feature: Although new product features can be a priority in the short term, reliability is your top product feature in both the short and long term. Your users aren’t happy if your service is too slow or unavailable over a long period of time, which makes other product features irrelevant.

- Reliability is defined by the user: For user-facing workloads, measure the user experience. The user must be happy with how your service performs. For example, in banking, you should measure how much time it takes for a customer to complete a transaction, not just server metrics like CPU usage.

  For batch and streaming workloads, you might need to measure key performance indicators (KPIs) for data throughput, such as rows scanned per time window, instead of server metrics such as disk usage.

- 100% reliability is the wrong target: It’s important to set and measure reliability targets that allow for an affordable amount of downtime. Your systems should be reliable enough that users are happy, but not so reliable that the investment is unjustified. Define Service Level Objectives (SLOs) that set the reliability threshold you want, then use error budgets to manage the appropriate rate of change (more on SLOs and error budgets is in the “Measuring service reliability” section below).

- Reliability and rapid innovation are complementary: Use error budgets to achieve a balance between system stability and developer agility. The following guidance helps you determine when to move fast or slow:

  - When an adequate error budget is available, you can innovate rapidly and improve the product or add product features.

  - When the error budget is diminished, slow down and focus on reliability features.

Measuring service reliability

Now that we’ve covered the core principles of reliability, let’s dive a little deeper into how you can actually measure the reliability of your services, with an explanation of key terms.

-

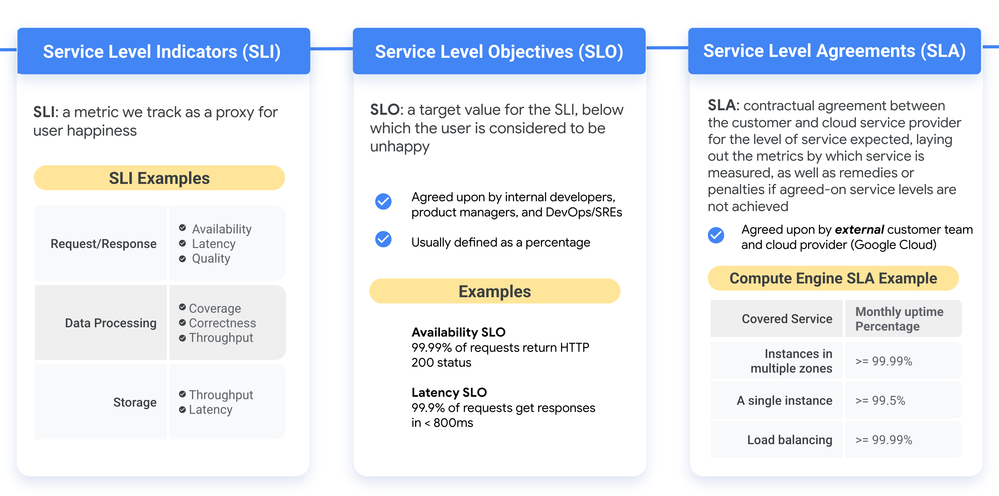

Service Level Indicator (SLI): A carefully defined quantitative measure of some aspect of the level of service that is being provided. It’s a metric (not a target) and is used as a way to measure user satisfaction.

-

Service Level Objective (SLO): Specifies a target level for the reliability of your service. The SLO is a target value for an SLI. When the SLI is at or better than this value, the service is considered to be "reliable enough." Because SLOs are key to making data-driven decisions about reliability, they’re the focal point of site reliability engineering (SRE) practices.

-

Service Level Agreement (SLA): An explicit or implicit contract with your users that includes consequences if you miss the SLOs referenced in the contract.

Error budgets, error budget policies, and critical user journeys go hand in hand with SLIs, SLOs, and SLAs.

-

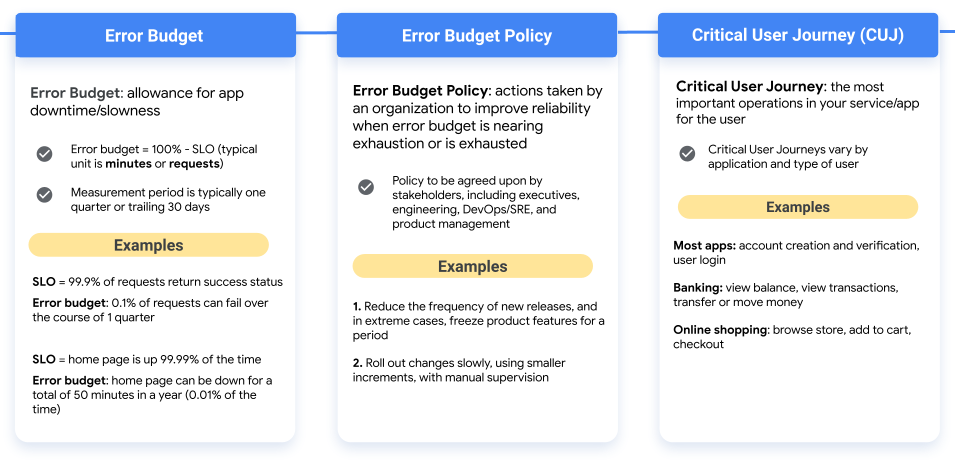

Error budget: Calculated as: 100% - SLO over a period of time. It indicates if your system has been more or less reliable than what is needed over a certain time period and how many minutes of downtime are allowed during that period.

For example, if your availability SLO is 99.9%, your error budget over a 30-day period is (1 - 0.999) x 30 days x 24 hours x 60 minutes = 43.2 minutes. So if your system has had 10 minutes of downtime in the past 30 days and started the 30-day period with the full error budget of 43.2 minutes unutilized, then the remaining error budget would be 33.2 minutes.

But what happens if your error budget is nearing exhaustion, or is exhausted? Establish a plan of action with error budget policies. -

Error budget policies: Actions taken by your organization to improve reliability. For example, reduce the frequency of new releases and in extreme cases, freeze product features for a certain period.

-

Critical user journeys: The most important operations in your service or application for the end user. Critical user journeys vary depending on the type of application and the type of user. For example, critical user journeys in online shopping include “browse store,” “add to cart,” and “checkout.” While in banking, it includes “view balance,” “view transactions,” and “transfer money.” Prioritize defining SLOs for critical user journeys, not for every possible user operation.
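To make the error budget arithmetic above concrete, here is a minimal Python sketch that reproduces the 99.9% example. The function names and the 25% "slow down" threshold in `policy_action` are our own illustrative assumptions, not part of the Architecture Framework; a real error budget policy would be agreed on by your organization.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Allowed downtime in minutes for an availability SLO over the window."""
    window_minutes = window_days * 24 * 60
    return (1.0 - slo) * window_minutes


def remaining_budget_minutes(slo: float, downtime_minutes: float,
                             window_days: int = 30) -> float:
    """Error budget left after subtracting downtime already incurred."""
    return error_budget_minutes(slo, window_days) - downtime_minutes


def policy_action(remaining: float, budget: float) -> str:
    """Hypothetical error budget policy; the thresholds are assumptions."""
    if remaining <= 0:
        return "freeze feature releases"
    if remaining / budget < 0.25:
        return "slow down releases"
    return "ship normally"


budget = error_budget_minutes(0.999)             # about 43.2 minutes
remaining = remaining_budget_minutes(0.999, 10)  # about 33.2 minutes
print(f"budget={budget:.1f} min, remaining={remaining:.1f} min, "
      f"action={policy_action(remaining, budget)}")
```

With 10 minutes of downtime, roughly three quarters of the budget is still available, so this sketch would let releases continue as usual.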

Configuring dashboards and alerts for SLOs and error budgets can be challenging because the monitoring configuration will need to implement arithmetic, involving ratios and consumption rates. Google Cloud provides an SLO Monitoring feature that hides this complexity by treating SLOs and error budgets as basic building blocks integrated into the monitoring framework. With SLO Monitoring, the user selects the desired SLIs (reliability metrics) and SLOs (target values), and the framework handles the mathematical manipulations required to monitor SLOs and error budgets.
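To give a feel for the ratios and consumption rates mentioned above, here is a small Python sketch of the underlying arithmetic. It is not the SLO Monitoring API and does not show how the feature is implemented; the function names and the request counts are illustrative assumptions. It uses the common SRE definition of burn rate: the observed error rate divided by the error rate the SLO allows.

```python
def availability_sli(good_requests: int, total_requests: int) -> float:
    """Request-based SLI: the fraction of requests that were served well."""
    if total_requests == 0:
        return 1.0  # no traffic means nothing failed
    return good_requests / total_requests


def burn_rate(sli: float, slo: float) -> float:
    """How fast the error budget is being consumed.

    1.0 means the budget would last exactly the SLO window;
    2.0 means it would be exhausted in half the window.
    """
    return (1.0 - sli) / (1.0 - slo)


# Hypothetical numbers: 99,800 good requests out of 100,000, SLO of 99.9%.
sli = availability_sli(99_800, 100_000)
print(f"SLI={sli:.4f}, burn rate={burn_rate(sli, 0.999):.1f}")
```

In this example the service fails 0.2% of requests while the SLO allows 0.1%, so the budget burns at about twice the sustainable rate.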

Reliability questions and answers

1. When designing my architecture, how do I know what is too much? For example, should I include Apigee? API Gateway? A JWT?

From the perspective of the Google Cloud Architecture Framework, you want to consider whether the cost of any option is worth it based on your business requirements for reliability.

So let’s say you’re looking at redundancy across multiple regions. This will, of course, add cost because you require more resources. The question to ask yourself is: does my business really need such a high Service Level Objective? Does it need such high availability? A region goes down very rarely, and for some applications it’s okay to be down for a short period of time without significant impact on your end users or your business.

Ultimately, compare the cost to your business due to downtime versus the cost to your business of the architecture itself.
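As a rough illustration of that comparison, the sketch below estimates the monthly cost of downtime at two availability levels and checks whether the savings exceed the extra architecture cost. All names and figures are hypothetical, and the calculation covers only tangible revenue loss; intangible costs such as brand reputation would need to be weighed separately.

```python
WINDOW_MINUTES = 30 * 24 * 60  # 30-day window


def expected_downtime_cost(availability: float,
                           revenue_per_minute: float) -> float:
    """Expected revenue lost to downtime over the window."""
    return (1.0 - availability) * WINDOW_MINUTES * revenue_per_minute


def redundancy_is_worth_it(current: float, improved: float,
                           revenue_per_minute: float,
                           extra_monthly_cost: float) -> bool:
    """True if the downtime cost avoided exceeds the added architecture cost."""
    savings = (expected_downtime_cost(current, revenue_per_minute)
               - expected_downtime_cost(improved, revenue_per_minute))
    return savings > extra_monthly_cost


# Hypothetical: moving from 99.9% to 99.99% at $200 of revenue per minute
# avoids about 38.88 minutes of downtime, or roughly $7,776 per month.
print(redundancy_is_worth_it(0.999, 0.9999, 200.0, 5_000.0))
print(redundancy_is_worth_it(0.999, 0.9999, 200.0, 10_000.0))
```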


2. Is site reliability applicable for analytical use cases, such as building a data warehouse/data lake with different ETL pipelines? If yes, what are the things we need to consider for that?

In the case of data processing, ETL pipelines, data warehouses, etc., the typical Service Level Indicators are similar to those for user-facing services, but not exactly the same. Latency is one example.

Latency for a pipeline is how long it takes for data to get from one end of the pipeline to the other and to come out as the final output. So you can implement monitoring where you inject some synthetic data and then see at what point the pipeline produces the desired output - which can be used to determine your latency Service Level Indicators and Service Level Objectives.

Other Service Level Indicators to consider for data pipelines include:

- Availability: How often and for how long is your data pipeline down?

- Coverage: Is it covering the full set of data that you feed to it? What percentage is it?

- Correctness: What percentage of the data that goes through the pipeline is accurate?

- Throughput: What volume of data can you get through the pipeline within a certain unit of time?

- Freshness: How recent is the data that came through the pipeline? If it’s a day old for example, that likely means your pipeline is running too slow.
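The pipeline indicators above can be sketched as a few small Python functions. This is a minimal illustration under our own assumptions: the function names, the record counts, and the timestamps are hypothetical, and in practice the inputs would come from your pipeline's own metrics.

```python
from datetime import datetime, timedelta


def coverage(records_in: int, records_out: int) -> float:
    """Fraction of input records that the pipeline actually processed."""
    return records_out / records_in if records_in else 1.0


def correctness(records_out: int, records_valid: int) -> float:
    """Fraction of output records that passed validation."""
    return records_valid / records_out if records_out else 1.0


def throughput(records_out: int, window: timedelta) -> float:
    """Records processed per second over the measurement window."""
    return records_out / window.total_seconds()


def freshness(now: datetime, newest_event_time: datetime) -> timedelta:
    """Age of the most recent data that has come through the pipeline."""
    return now - newest_event_time


def probe_latency(injected_at: datetime, observed_at: datetime) -> timedelta:
    """End-to-end latency of a synthetic record injected into the pipeline."""
    return observed_at - injected_at


now = datetime(2024, 1, 1, 12, 0)
print(coverage(1000, 990), correctness(990, 980))
print(throughput(990, timedelta(minutes=5)))
print(freshness(now, datetime(2024, 1, 1, 11, 45)))
print(probe_latency(datetime(2024, 1, 1, 11, 50), datetime(2024, 1, 1, 11, 58)))
```

Each value can then be compared against an SLO, for example "freshness stays under one hour for 99% of checks."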

Additionally, we recommend that you use managed services from Google Cloud (such as Cloud Data Fusion or Cloud Composer) to build your ETL pipelines so that your pipelines benefit from the reliability built into those services.

3. Is it a best practice to use SLOs for all types of projects and applications? Or should we use it only for critical and important applications?

If the application is important to your business - even if it’s not critical - it should have a Service Level Objective (SLO). The SLO may be very loose/flexible in one case and very tight/strict in another. You may have a 99.9% SLO for something that is less critical and a 99.99% SLO for critical, high availability applications. Keep your critical user journeys in mind when deciding which SLOs to prioritize.

In addition, make sure there is a team committed and responsible for owning and maintaining the SLO before you set it up.

4. Can Google Cloud SQL perform automatic instance failover across zones?

Yes, Cloud SQL can perform automatic instance failover across zones, but you need to make sure that the configuration is enabled.

Note, however, that there is no automatic failover across regions. Cross-region failover (versus cross-zone failover) will require restoring backups to a Cloud SQL instance in a secondary region if the primary region goes down for any reason. More information on this can be found in the Cloud SQL documentation.

5. How do I balance cost optimization with the price of redundancy and reliability?

Suppose your service is down for five minutes - how much revenue do you lose? What is the impact on brand reputation? Customer satisfaction? There are both tangible and intangible costs to consider from an outage.

Define your service’s critical user journeys and determine if the cost of high availability is necessary and worth it to achieve the desired SLOs. More information and recommendations on designing for scale and high availability are available in the Cloud Architecture Center.

6. What is the best way to achieve high availability for my organization’s applications? They contain sensitive information and must reside inside my organization’s data centers. I was thinking of using Google Cloud Load Balancing to direct requests to my servers. Is this possible? Or the best option?

You can use Google Cloud Load Balancing, along with Cloud Interconnect, to direct requests to your on-premises servers. The Cloud Load Balancing documentation describes how this works.

It is also possible to set up load balancing in a hybrid mode where some requests are sent to your Cloud servers and other requests are sent to your on-premises servers. However, we do not recommend a complex configuration of this type unless it's necessary, as it adds to your egress costs via the hybrid connectivity (Interconnect or VPN) and may also increase latency, which impacts service performance.

7. If my service goes down, how do I know how much and what data I’ve lost? Is it recoverable?

If you use a fully managed service from Google Cloud, you won’t lose any data at all because the backend service has your data. If that service itself goes down because perhaps an entire region is down and disks are damaged beyond repair, then that’s when you rely on your backups.

After an event like this where your data is lost or corrupted, you need to restore data from backups and bring up all services again using the freshly restored data.

You should rehearse this scenario with data recovery tests. Based on the Google Cloud Architecture Framework, we use three criteria for judging the success or failure of a recovery test: data integrity, RTO, and RPO.

- Data integrity: The requirement that the restored data must be consistent. You must verify that the data backups contain consistent snapshots of the data so that the restored data is immediately usable for bringing the application back into service.

- Recovery Time Objective (RTO): The time it takes to restore the data after an incident. When you perform data recovery tests, make sure that the time it takes to restore is in line with your SLO targets.

- Recovery Point Objective (RPO): An upper bound on data loss from the incident. You can’t take backups all the time, so you’re not able to restore system state up to the last instant before an outage. What you can do is take backups on a regular schedule so that you have an upper limit on the number of minutes or hours of transactions that are lost when you need to restore data from backup. You can think of RPO as the maximum interval between backup operations, with the actual interval ideally being much smaller.
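To illustrate how the RPO and RTO criteria could be checked during a recovery test, here is a minimal Python sketch. The function names, the backup schedule, and the objectives are hypothetical assumptions; the worst-case data loss is taken as the longest gap between consecutive backups, following the description of RPO above.

```python
from datetime import datetime, timedelta


def worst_case_data_loss(backup_times: list[datetime]) -> timedelta:
    """Longest gap between consecutive backups, i.e. the most data at risk."""
    if len(backup_times) < 2:
        raise ValueError("need at least two backups to measure an interval")
    ordered = sorted(backup_times)
    return max(later - earlier for earlier, later in zip(ordered, ordered[1:]))


def meets_rpo(backup_times: list[datetime], rpo: timedelta) -> bool:
    """True if no gap between backups exceeds the Recovery Point Objective."""
    return worst_case_data_loss(backup_times) <= rpo


def meets_rto(restore_started: datetime, restore_finished: datetime,
              rto: timedelta) -> bool:
    """True if the restore completed within the Recovery Time Objective."""
    return restore_finished - restore_started <= rto


# Hypothetical backup schedule with an 8-hour gap in the afternoon.
day = datetime(2024, 1, 1)
backups = [day + timedelta(hours=h) for h in (0, 6, 12, 20)]
print(worst_case_data_loss(backups))
print(meets_rpo(backups, timedelta(hours=6)))
print(meets_rto(day, day + timedelta(minutes=45), timedelta(hours=1)))
```

Here the schedule would miss a 6-hour RPO because of the 8-hour gap, even though most backups are 6 hours apart.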

8. If I’m using a load balancer to distribute traffic across servers, what happens if the load balancer goes down or is offline? Doesn’t that mean I still have a single point of failure?

If you use Cloud Load Balancing, a single load balancer instance going down will never affect your application because we run many replicas of all components of Cloud Load Balancing. Redundancy is a core design principle for reliability in the Architecture Framework. Therefore, if you run your own load balancer instances using products like nginx or HAProxy, make sure you run several replicas distributed across VMs, zones, and regions, so that loss of a single VM, loss of an entire zone, or even a region-wide outage will not disrupt incoming traffic.
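A back-of-the-envelope way to see why replicas help is shown below. This sketch assumes that replicas fail independently, which is a simplification: replicas in the same zone or region can fail together, which is exactly why we suggest distributing them. The function name and the 99% figure are illustrative assumptions.

```python
def combined_availability(replica_availability: float, replicas: int) -> float:
    """Probability that at least one replica is up.

    Assumes independent failures, so all replicas are down at once
    with probability (1 - a) ** n.
    """
    if replicas < 1:
        raise ValueError("need at least one replica")
    return 1.0 - (1.0 - replica_availability) ** replicas


# Hypothetical: each self-managed load balancer replica is up 99% of the time.
for n in (1, 2, 3):
    print(n, f"{combined_availability(0.99, n):.6f}")
```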

9. What is the option for managed services for Dataflow and Composer to achieve reliability?

Dataflow is a fully managed data processing service from Google Cloud with built-in features to provide reliable services. These features include horizontal and vertical autoscaling of underlying worker nodes based on utilization, as well as automatic partitioning of data inputs for enhancing the data pipeline performance. In addition, SLO Monitoring is built in to identify and mitigate performance bottlenecks.

Cloud Composer on the other hand, is a managed workflow orchestration service built on Apache Airflow. It comes with easy-to-use charts for proactive monitoring of workflows and troubleshooting in case of an issue.

Supporting resources

- The Reliability Pillar of the Google Cloud Architecture Framework (Documentation)

- Site Reliability Engineering (Online Book)

- The Site Reliability Workbook (Online Book)

- Metrics That Matter (CACM Article)

- Building Secure & Reliable Systems (Online Book)
