Demystifying Pub/Sub: An Introduction to Asynchron...

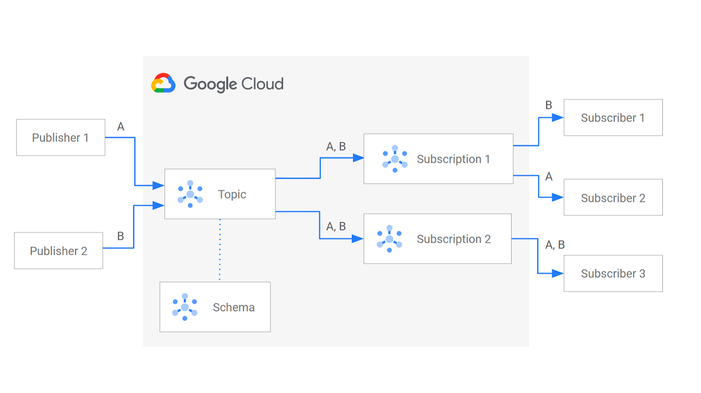

Modern applications depend on services that can exchange data reliably without waiting on one another. Pub/Sub, short for publish-subscribe, is a widely used messaging pattern for asynchronous communication between applications and services. Google Cloud Pub/Sub is Google's managed service built on this pattern. This blog post walks through the core components of Pub/Sub and shows where it fits in modern software architectures.

The Essence of Pub/Sub

Pub/Sub decouples producers (publishers), which generate messages, from consumers (subscribers), which process those messages. Producers send each message to a named channel rather than to a specific recipient. As a result, producers and consumers never need direct connections to each other and can be developed, deployed, and scaled independently.

Table of Contents

- Unveiling the Core Components of the Pub/Sub Architecture

  - Topics

  - Publishers

  - Subscribers

  - Messages

  - Subscriptions

  - Schemas

- Advantages of Embracing Pub/Sub

- Applications of Pub/Sub

- Harnessing the Power of Google Cloud Pub/Sub with ABAP SDK for Google Cloud

Unveiling the Core Components of the Pub/Sub Architecture

Topics

In Google Cloud Pub/Sub, a topic is a named resource that represents a feed of messages. Topics are used to organize and categorize messages so that subscribers can receive messages that are relevant to their interests.

Key Characteristics of Google Cloud Pub/Sub Topics:

- Durability: Pub/Sub stores each published message persistently. It delivers the message to every subscription attached to the topic at the time of publishing, even if the subscribing application is not currently connected. By default, messages published before a subscription is created are not delivered to that subscription.

- Security: Access to topics is controlled with Identity and Access Management (IAM) policies, so only authorized principals can publish to a topic or attach subscriptions to it.

- Scalability: Topics can handle large volumes of messages and subscribers, making them suitable for high-throughput applications.

- Decoupling: Topics decouple publishers and subscribers, allowing them to operate independently, enhancing flexibility and resilience.

- Filtering: A topic can have many subscriptions, and each subscription can define a filter so that its subscriber receives only relevant messages. This reduces processing overhead and improves efficiency.

Applications of Topics:

Data Distribution:

Topics facilitate seamless data distribution from publishers to multiple subscribers. Publishers generate and send messages to specific topics, and subscribers register subscriptions to receive messages from the topics they are interested in. This mechanism ensures that data is routed to the appropriate subscribers, enabling real-time data consumption and utilization.

Event Triggering:

Topics act as powerful event triggers, initiating actions in other systems based on published messages. When a publisher sends a message to a topic, subscribed systems receive the message and can trigger corresponding actions or workflows. This capability enables event-driven architectures, where systems respond to events as they occur.

Change Tracking:

Topics provide a valuable mechanism for tracking changes to data in a system. By publishing messages whenever data changes occur, publishers can inform subscribers of the updates, enabling them to maintain consistent and up-to-date data representations across various systems.

Example: Customer Order Processing

Consider a company using a topic called "customer-orders" to manage customer orders. Publishers send messages to this topic whenever new orders are placed. The subscribers are a warehouse management system, a billing system, and a customer service system. Each one receives every order message through its own subscription and takes the appropriate action:

- The warehouse management system initiates the order picking process.

- The billing system generates an invoice for the order.

- The customer service system sends a confirmation email to the customer.

This example demonstrates how topics facilitate real-time data exchange and synchronized action execution among interconnected systems.
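The fan-out in this example can be sketched with a toy in-memory model. This is not the Google Cloud client library. `InMemoryPubSub`, its methods, and the order fields are hypothetical names for illustration only. The point it shows is that each subscription attached to a topic gets its own queue. As a result, the warehouse, billing, and customer service systems each see every order, and the publisher never references any of them.

```python
from collections import deque


class InMemoryPubSub:
    """Toy broker (hypothetical, for illustration): one queue per subscription."""

    def __init__(self):
        self.subscriptions = {}  # subscription name -> (topic name, queue)

    def create_subscription(self, name, topic):
        self.subscriptions[name] = (topic, deque())

    def publish(self, topic, data):
        # Fan out: every subscription attached to the topic gets the message
        # in its own queue, so consumers never compete for it.
        for sub_topic, queue in self.subscriptions.values():
            if sub_topic == topic:
                queue.append(data)

    def pull(self, subscription):
        # Drain whatever is waiting; each subscriber consumes at its own pace.
        _, queue = self.subscriptions[subscription]
        messages = list(queue)
        queue.clear()
        return messages


broker = InMemoryPubSub()
for name in ("warehouse", "billing", "customer-service"):
    broker.create_subscription(name, "customer-orders")

# The publisher only knows the topic name, not who consumes the order.
broker.publish("customer-orders", {"order_id": "A-1001", "total": 42.50})

actions = {
    "warehouse": lambda o: f"pick items for {o['order_id']}",
    "billing": lambda o: f"invoice {o['order_id']} for {o['total']:.2f}",
    "customer-service": lambda o: f"email confirmation for {o['order_id']}",
}
for name, act in actions.items():
    for order in broker.pull(name):
        print(name, "->", act(order))
```

If the three systems instead shared a single subscription, each order would go to only one of them. That is why each system in the example gets its own subscription.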

Publishers

Publishers are the originators of data, responsible for generating messages and publishing them to designated topics. They are responsible for the accuracy and integrity of the data they publish, while Pub/Sub takes care of delivering it across the network.

Google Cloud Pub/Sub publishers can be any application or system that produces data, ranging from microservices to IoT devices. They interact with the Pub/Sub service using various client libraries or APIs to publish messages containing the intended data.

Key Responsibilities of Google Cloud Pub/Sub Publishers:

- Data Generation: Publishers are responsible for generating the data that will be published to topics. This data can be structured, unstructured, or binary in nature.

- Message Creation: Publishers create messages that encapsulate the data to be transmitted. Messages can carry additional metadata as key-value attributes (and, optionally, an ordering key) to provide context and facilitate processing. The message ID and publish time are assigned by Pub/Sub when it accepts the message.

- Topic Selection: Publishers choose the appropriate topic to which they will publish messages. Topics represent categories of data, allowing subscribers to filter and receive only relevant information.

- Message Publication: Publishers publish messages to the selected topics using the Pub/Sub API or client libraries. This involves sending the message data along with any associated metadata.

- Error Handling: Publishers handle errors and exceptions that may occur during publication, for example by retrying transient failures with backoff. This minimizes data loss and keeps the system stable.
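The publisher responsibilities above (bytes payload, attributes, publish, retry on error) can be sketched as a retry wrapper with exponential backoff. Treat this as an assumption-laden illustration. `publish_fn` and `TransientPublishError` are hypothetical stand-ins for a real publish call and its retryable errors. The official Google Cloud client libraries already include their own configurable retry settings, so this only makes the idea explicit.

```python
import random
import time


class TransientPublishError(Exception):
    """Hypothetical stand-in for a retryable failure such as a timeout."""


def publish_with_retry(publish_fn, data, attributes=None, max_attempts=5,
                       base_delay=0.1, sleep=time.sleep):
    """Call publish_fn(data, attributes), retrying transient failures with
    exponential backoff plus jitter. Returns whatever publish_fn returns
    (for example, a server-assigned message ID)."""
    if not isinstance(data, bytes):
        raise TypeError("message data must be bytes")
    attributes = attributes or {}
    for attempt in range(1, max_attempts + 1):
        try:
            return publish_fn(data, attributes)
        except TransientPublishError:
            if attempt == max_attempts:
                raise  # give up; the caller decides how to avoid losing the data
            # Delays grow as base_delay, 2x, 4x, ... plus up to 10% random jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))


# Usage: a fake publish call that fails twice before succeeding.
calls = []


def flaky_publish(data, attributes):
    calls.append(data)
    if len(calls) < 3:
        raise TransientPublishError("simulated timeout")
    return "message-id-3"


print(publish_with_retry(flaky_publish, b'{"order_id": "A-1001"}',
                         {"source": "web"}, sleep=lambda s: None))  # prints message-id-3
```

The `sleep` parameter is injectable so that the backoff can be tested without actually waiting.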

Subscribers

Google Cloud Pub/Sub subscribers are the applications that consume messages from specific topics. They play a crucial role in the Pub/Sub system, responsible for receiving, processing, and acting upon the data contained within messages.

Responsibilities of Google Cloud Pub/Sub Subscribers:

- Subscription Registration: Subscribers register subscriptions to receive messages from specific topics. Subscriptions establish virtual connections between subscribers and topics, enabling selective data filtering.

- Message Reception: Subscribers receive messages using the Pub/Sub client libraries, the Pub/Sub API, or the gcloud command-line tool. Depending on the subscription configuration, subscribers either pull messages or have them pushed to an HTTPS endpoint.

- Message Processing: Subscribers process the received messages, extracting the data and performing the necessary actions based on the information contained within.

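- Message Acknowledgment: Subscribers acknowledge each message after processing it, telling Pub/Sub it can stop delivering that message. A message that is not acknowledged before its acknowledgment deadline expires is redelivered. Acknowledging only after successful processing therefore prevents messages from being lost.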
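In Pub/Sub, a pulled message is leased to the subscriber until its acknowledgment deadline (10 seconds by default). If the subscriber does not acknowledge it in time, the message is delivered again. The toy model below simulates that behavior with an explicit clock so it can be reasoned about step by step. `PullSubscription` and its methods are hypothetical names, not the client library API.

```python
import itertools


class PullSubscription:
    """Toy pull subscription (hypothetical): a pulled message is leased until its
    ack deadline; if it is not acknowledged by then, it becomes available again."""

    def __init__(self, ack_deadline_seconds=10):
        self.ack_deadline = ack_deadline_seconds
        self.available = []   # messages waiting to be pulled
        self.leased = {}      # ack_id -> (message, deadline)
        self._ack_ids = itertools.count(1)

    def add(self, message):
        self.available.append(message)

    def pull(self, now, max_messages=10):
        # Leases whose deadline has passed go back to the front of the queue.
        expired = [ack_id for ack_id, (_, deadline) in self.leased.items() if now >= deadline]
        self.available = [self.leased.pop(ack_id)[0] for ack_id in expired] + self.available
        batch = self.available[:max_messages]
        self.available = self.available[max_messages:]
        received = []
        for message in batch:
            ack_id = f"ack-{next(self._ack_ids)}"  # a fresh ack ID per delivery
            self.leased[ack_id] = (message, now + self.ack_deadline)
            received.append((ack_id, message))
        return received

    def ack(self, ack_id):
        # Acknowledged messages are removed for good.
        self.leased.pop(ack_id, None)


sub = PullSubscription(ack_deadline_seconds=10)
sub.add("order A-1001")
sub.add("order A-1002")

first = sub.pull(now=0)
sub.ack(first[0][0])  # A-1001 processed and acknowledged
# A-1002 is never acknowledged (for example, the subscriber crashed).
print([m for _, m in sub.pull(now=5)])   # [] : still leased
print([m for _, m in sub.pull(now=10)])  # ['order A-1002'] : redelivered
```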

Examples of Google Cloud Pub/Sub Subscribers:

- A customer relationship management (CRM) system: The system could subscribe to a topic for order confirmation messages and update customer records accordingly.

- A data analytics platform: The platform could subscribe to a topic for stock market updates and perform real-time analysis.

- An anomaly detection system: The system could subscribe to a topic for sensor data and identify potential anomalies or deviations from normal patterns.

Messages

Google Cloud Pub/Sub messages are the units of data exchanged within the Pub/Sub system. They encapsulate the information being communicated between publishers and subscribers and play a crucial role in real-time data exchange.

Key Characteristics of Google Cloud Pub/Sub Messages:

- Data Encapsulation: Messages contain the actual data being communicated, which can be structured data, unstructured data, or even binary data.

- Metadata: Messages can include metadata, such as attributes and message IDs, providing additional context and information about the data.

- Immutability: Once published, messages are immutable, meaning their content cannot be modified. This ensures data integrity and consistency.

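- Ordering: By default, Pub/Sub does not guarantee that messages are delivered in the order they were published. When publishers set an ordering key and the subscription has message ordering enabled, messages with the same ordering key are delivered in the order Pub/Sub received them.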

Structure of Google Cloud Pub/Sub Messages:

A Pub/Sub message consists of two primary components:

- Data: The message payload, a sequence of bytes that can hold text, JSON, or binary content. In the JSON REST API the payload is base64-encoded.

- Attributes: Optional key-value pairs that provide additional context about the message, such as labels or application-level timestamps. Both keys and values are strings. Separately, Pub/Sub assigns every message a message ID and a publish time when it is published.
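The two-part structure of a message (data and string attributes) is visible in the JSON body of a REST publish request, `POST https://pubsub.googleapis.com/v1/projects/PROJECT_ID/topics/TOPIC_ID:publish`. The body carries a `messages` array whose entries have a base64-encoded `data` field, an optional `attributes` map, and an optional `orderingKey`. The helper below is a minimal sketch that builds such a body without sending it. `to_rest_message` and the order payload are hypothetical.

```python
import base64
import json


def to_rest_message(data: bytes, attributes=None, ordering_key=None):
    """Build one entry of the "messages" array in a Pub/Sub REST publish request.
    In JSON, the binary payload travels base64-encoded; attribute values are strings."""
    attributes = attributes or {}
    if not all(isinstance(k, str) and isinstance(v, str) for k, v in attributes.items()):
        raise TypeError("attribute keys and values must be strings")
    message = {"data": base64.b64encode(data).decode("ascii")}
    if attributes:
        message["attributes"] = attributes
    if ordering_key:
        message["orderingKey"] = ordering_key
    return message


payload = json.dumps({"order_id": "A-1001", "total": 42.5}).encode("utf-8")
body = {"messages": [to_rest_message(payload, {"source": "web", "priority": "2"})]}
print(json.dumps(body))
```

Note that `messageId` and `publishTime` do not appear in the request. Pub/Sub fills them in, and they show up on the messages that subscribers receive.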

Subscriptions

Google Cloud Pub/Sub subscriptions are virtual connections between subscribers and topics. They enable subscribers to filter and receive only relevant messages from specific topics, reducing processing overhead and improving efficiency.

Key Characteristics of Google Cloud Pub/Sub Subscriptions:

- Topic Association: Subscriptions are associated with a specific topic, indicating the source of messages the subscriber wants to receive.

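- Message Filtering: A subscription can define a filter on message attributes (for example, attributes.region = "eu") so that only matching messages are delivered. Filters cannot inspect the message data. Messages that do not match are acknowledged automatically and never reach the subscriber.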

- Delivery Types: Subscriptions support two delivery types: pull and push. In pull subscriptions, subscribers actively request messages from the Pub/Sub system. In push subscriptions, the Pub/Sub system initiates requests to deliver messages to subscribers.

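- Dead Letter Queue (DLQ) Handling: Pub/Sub implements the DLQ pattern with dead-letter topics. A subscription can be configured with a maximum number of delivery attempts (between 5 and 100). Once a message exceeds that limit, Pub/Sub forwards it to the dead-letter topic instead of retrying it indefinitely. This prevents data loss and allows for later processing or debugging.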

- Message Acknowledgment: Each subscription tracks which messages its subscriber has acknowledged. Unacknowledged messages are redelivered after the acknowledgment deadline expires, which is how Pub/Sub avoids losing messages when a subscriber fails mid-processing.
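Two of these subscription features, attribute filtering and dead-lettering, can be sketched together in a toy model. Here the filter is a plain Python predicate standing in for a Pub/Sub filter expression such as `attributes.region = "eu"`. The redelivery loop stands in for Pub/Sub redelivering a message each time processing fails. `FilteredSubscription`, `deliver`, and the in-memory dead-letter list are hypothetical names. The default of 5 attempts mirrors the minimum `max_delivery_attempts` value, but the model is not the service's implementation.

```python
class FilteredSubscription:
    """Toy subscription (hypothetical) with an attribute filter and dead-lettering."""

    def __init__(self, attribute_filter, max_delivery_attempts=5):
        self.attribute_filter = attribute_filter
        self.max_delivery_attempts = max_delivery_attempts
        self.dead_letter_topic = []  # messages forwarded after too many failed attempts
        self.processed = []

    def deliver(self, message, handler):
        # Messages that do not match the filter never reach the subscriber.
        if not self.attribute_filter(message["attributes"]):
            return "filtered"
        for attempt in range(1, self.max_delivery_attempts + 1):
            try:
                handler(message)
            except Exception:
                continue  # processing failed: not acknowledged, so it is redelivered
            self.processed.append(message)
            return f"acked after {attempt} attempt(s)"
        self.dead_letter_topic.append(message)
        return "dead-lettered"


sub = FilteredSubscription(lambda attrs: attrs.get("region") == "eu")


def handle(message):
    if message["data"] == b"corrupt":
        raise ValueError("cannot parse payload")


print(sub.deliver({"data": b"ok", "attributes": {"region": "us"}}, handle))       # filtered
print(sub.deliver({"data": b"ok", "attributes": {"region": "eu"}}, handle))       # acked after 1 attempt(s)
print(sub.deliver({"data": b"corrupt", "attributes": {"region": "eu"}}, handle))  # dead-lettered
```

The corrupt message is a "poison" message. Without a dead-letter topic it would be redelivered until the retention period ran out.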

In addition to these core components, Pub/Sub systems may also include other features such as:

- Acknowledgments: Acknowledgments are signals sent by subscribers to the broker indicating that they have successfully received and processed a message, so the broker can stop redelivering it. Messages that are never acknowledged are redelivered. Most systems therefore provide at-least-once delivery, and subscribers should tolerate occasional duplicates.

- Schema enforcement: Schema enforcement ensures that messages adhere to a standardized format. This helps prevent data corruption and simplifies data processing for subscribers.

- Dead letter queues: Dead letter queues (DLQs) are queues where messages that cannot be delivered or processed are stored. This helps prevent data loss and allows for later processing or debugging.

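- Retention policies: Retention policies specify how long messages are stored before they expire and are deleted. This helps manage storage usage and ensures that only relevant data is retained. In Google Cloud Pub/Sub, subscriptions retain unacknowledged messages for 7 days by default (configurable from 10 minutes to 7 days). Topics can optionally retain messages for up to 31 days.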
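A retention policy boils down to comparing each message's age with a retention window. The sketch below applies a 7-day window (the Pub/Sub default for unacknowledged messages in a subscription) to a hypothetical backlog. `expire_unacked` and the message dictionaries are illustrative names, not part of any Pub/Sub API.

```python
from datetime import datetime, timedelta, timezone

# Pub/Sub's default retention for unacknowledged messages in a subscription.
DEFAULT_RETENTION = timedelta(days=7)


def expire_unacked(messages, now, retention=DEFAULT_RETENTION):
    """Split unacknowledged messages into (kept, expired) by age since publish time."""
    kept, expired = [], []
    for msg in messages:
        (expired if now - msg["publish_time"] > retention else kept).append(msg)
    return kept, expired


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
backlog = [
    {"id": "1", "publish_time": datetime(2024, 1, 1, tzinfo=timezone.utc)},  # 9 days old
    {"id": "2", "publish_time": datetime(2024, 1, 8, tzinfo=timezone.utc)},  # 2 days old
]
kept, expired = expire_unacked(backlog, now)
print([m["id"] for m in kept], [m["id"] for m in expired])  # ['2'] ['1']
```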

Choosing the Right Subscription Type

Push Subscriptions:

With push subscriptions, Pub/Sub delivers messages by sending HTTPS POST requests to an endpoint that the subscriber provides. The endpoint acknowledges a message by returning a success status code. This approach suits webhooks and serverless services such as Cloud Run, where running a long-lived client that requests messages is impractical.

Pull Subscriptions:

With pull subscriptions, subscribers request messages from Pub/Sub and then acknowledge them explicitly. The request can be an individual Pull call or a long-lived StreamingPull connection, which the high-level client libraries use. This approach gives subscribers control over how fast they consume messages (flow control) and is well suited to high-throughput workloads.

The choice between push and pull subscriptions depends on the specific requirements of the application. Push is convenient when messages should go to an existing HTTPS endpoint without running a dedicated consumer process. Pull is the better fit when the subscriber needs control over message flow and throughput, or cannot expose a public endpoint.
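For push subscriptions, each HTTPS request carries a JSON envelope with a `message` object (base64 `data`, `attributes`, `messageId`, `publishTime`) and the `subscription` name. Returning a success status acknowledges the message, and an error status leads Pub/Sub to retry later. The handler below is a framework-independent sketch of that contract. `handle_push`, the `process` callback, and the project and subscription names are hypothetical. In a real service this function would sit behind a web framework route.

```python
import base64
import json


def handle_push(request_body: bytes, process) -> int:
    """Handle one Pub/Sub push request and return the HTTP status to send back.
    A success status acknowledges the message; an error status means Pub/Sub
    will retry delivery later."""
    try:
        envelope = json.loads(request_body)
        message = envelope["message"]
        data = base64.b64decode(message.get("data", ""))
        attributes = message.get("attributes", {})
    except (ValueError, KeyError, TypeError):
        return 400  # malformed request: not acknowledged
    try:
        process(data, attributes, message.get("messageId"))
    except Exception:
        return 500  # processing failed: not acknowledged, so it will be redelivered
    return 204  # processed successfully: acknowledge


received = []
body = json.dumps({
    "message": {
        "data": base64.b64encode(b"order A-1001 placed").decode(),
        "attributes": {"source": "web"},
        "messageId": "123",
    },
    "subscription": "projects/my-project/subscriptions/orders-push",
}).encode()
print(handle_push(body, lambda d, a, mid: received.append((d, a, mid))))  # 204
```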

Schemas

Google Cloud Pub/Sub schemas are optional components that define the structure and format of data exchanged within the Pub/Sub system. They provide a contract between publishers and subscribers, ensuring that messages adhere to a standardized format, which facilitates data validation, interpretation, and processing.

Benefits of Google Cloud Pub/Sub Schemas:

- Data Integrity: Schemas enforce data integrity by validating messages against the defined structure, preventing data corruption and anomalies.

- Interoperability: Schemas promote interoperability between publishers and subscribers, ensuring that data can be interpreted and processed consistently across different applications.

- Self-Documenting Data: Schemas provide self-documenting data, making it easier for subscribers to understand the meaning and context of the data.

- Error Prevention: Schemas help prevent errors by identifying invalid or inconsistent data early in the processing pipeline.

- Simplified Data Processing: Schemas simplify data processing by providing a standardized format, reducing the need for custom parsing and interpretation.

When to Use Google Cloud Pub/Sub Schemas:

Schemas are particularly beneficial when:

- Data consistency and integrity are crucial: Schemas ensure that data remains consistent and accurate throughout the Pub/Sub system.

- Data is exchanged between multiple publishers and subscribers: Schemas establish a common understanding of data structure across different applications.

- Data is used for downstream processing: Schemas provide a standardized format for downstream processing, reducing errors and simplifying data integration.

Creating and Using Google Cloud Pub/Sub Schemas:

- Schema Definition: Schemas are defined using either Avro or Protocol Buffers, two well-established schema languages.

- Schema Association: Schemas are associated with specific topics, ensuring that messages published to those topics adhere to the defined schema.

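- Message Validation: Pub/Sub validates every message published to a topic against the topic's schema and rejects messages that do not conform. Publishers can also test a message against a schema before publishing, for example with the gcloud pubsub schemas validate-message command.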

- Schema Evolution: Schemas can evolve over time to accommodate changes in data structure, ensuring that the Pub/Sub system remains flexible and adaptable.
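To make the schema contract concrete, here is an Avro record schema of the kind that could be attached to the "customer-orders" topic, together with a deliberately simplified validator. The validator is a hypothetical stand-in covering only flat records with a few primitive types. Pub/Sub performs real validation on the server, and a complete client-side check would use a full Avro library. `ORDER_SCHEMA`, `validate`, and the field names are illustrative assumptions.

```python
import json

# An Avro record schema; its JSON text is what would be registered as the schema definition.
ORDER_SCHEMA = {
    "type": "record",
    "name": "Order",
    "fields": [
        {"name": "order_id", "type": "string"},
        {"name": "quantity", "type": "int"},
        {"name": "total", "type": "double"},
    ],
}
SCHEMA_DEFINITION = json.dumps(ORDER_SCHEMA)

# Simplified mapping of a few Avro primitive types to Python types.
AVRO_TO_PY = {"string": str, "int": int, "long": int, "double": float, "boolean": bool}


def validate(record: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the record conforms.
    Handles only flat records with primitive fields (a deliberate simplification)."""
    problems = []
    expected = {field["name"]: field["type"] for field in schema["fields"]}
    for name, avro_type in expected.items():
        if name not in record:
            problems.append(f"missing field {name!r}")
            continue
        value = record[name]
        py_type = AVRO_TO_PY[avro_type]
        if isinstance(value, bool) and py_type is not bool:
            ok = False  # bool is a subclass of int in Python; reject it for numeric fields
        elif py_type is float:
            ok = isinstance(value, (int, float))  # accept whole numbers for double
        else:
            ok = isinstance(value, py_type)
        if not ok:
            problems.append(f"field {name!r} should be {avro_type}")
    for name in sorted(record.keys() - expected.keys()):
        problems.append(f"unexpected field {name!r}")
    return problems


print(validate({"order_id": "A-1001", "quantity": 2, "total": 42.5}, ORDER_SCHEMA))  # []
print(validate({"order_id": "A-1001", "quantity": "two"}, ORDER_SCHEMA))
# ["field 'quantity' should be int", "missing field 'total'"]
```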

Advantages of Embracing Pub/Sub

- Scalability: Pub/Sub inherently scales horizontally, allowing for the addition of more publishers and subscribers without compromising performance.

- Asynchronous Communication: Decoupling producers and consumers eliminates the need for real-time synchronization, enhancing overall system responsiveness.

- Fault Tolerance: Pub/Sub systems are designed to handle failures gracefully, ensuring message delivery even in the face of disruptions.

- Reliability: Messages are persisted until consumers acknowledge them or the retention period expires, and unacknowledged messages are redelivered. This gives at-least-once delivery.

- Flexibility: Message payloads are arbitrary bytes, so any format (JSON, Avro, Protocol Buffers, plain text) can be carried. Clients can publish and pull over gRPC or REST, or receive messages by push over HTTPS.
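By default, Pub/Sub's reliability guarantee is at-least-once delivery (exactly-once delivery is an optional feature of pull subscriptions). Subscribers should therefore be prepared to see the same message more than once. A common approach is an idempotent consumer that remembers the message IDs it has already processed. The sketch below uses an in-memory set, which is a simplifying assumption: a production service would need durable storage shared across instances and a way to bound its size. `IdempotentConsumer` and `on_message` are hypothetical names.

```python
class IdempotentConsumer:
    """Skip redelivered messages by remembering IDs that were processed successfully."""

    def __init__(self, handler):
        self.handler = handler
        self.seen_ids = set()  # in-memory only; a simplification for this sketch

    def on_message(self, message_id, data):
        if message_id in self.seen_ids:
            return False  # duplicate: acknowledge again but do no work
        self.handler(data)
        self.seen_ids.add(message_id)  # recorded only after the handler succeeds
        return True


invoices = []
consumer = IdempotentConsumer(invoices.append)
for message_id, data in [("m1", "A-1001"), ("m2", "A-1002"), ("m1", "A-1001")]:
    consumer.on_message(message_id, data)
print(invoices)  # ['A-1001', 'A-1002']
```

Recording the ID only after the handler succeeds means a crash mid-processing leads to a retry rather than a silently skipped message.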

Applications of Pub/Sub

Pub/Sub is a powerful messaging service that can be used for a variety of applications. Here are some of the most common use cases:

- Real-time data streaming: Pub/Sub can be used to stream data in real time, such as stock tickers or social media feeds. This data can be used to power real-time dashboards and analytics, or to trigger alerts and notifications.

- Event-driven architectures: Pub/Sub can be used to build event-driven architectures, where events are published to a topic and then consumed by one or more subscribers. This can be used to decouple components of an application, and to make it more scalable and resilient.

- Asynchronous workflows: Pub/Sub can be used to orchestrate asynchronous workflows, where tasks are triggered by events. This can be used to improve performance and scalability, and to make it easier to manage complex workflows.

- Data integration pipelines: Pub/Sub can be used to build data integration pipelines, where data is transferred between disparate systems. This can be used to consolidate data from multiple sources, and to make it easier to analyze and visualize data.

- IoT communication: Pub/Sub can be used to enable communication between IoT devices and cloud applications. This can be used to collect data from IoT devices, and to trigger actions based on that data.

These are just a few of the many applications of Pub/Sub. With its scalability, reliability, and ease of use, Pub/Sub is a powerful tool that can be used to solve a variety of problems.

Harnessing the Power of Google Cloud Pub/Sub with ABAP SDK for Google Cloud

The ABAP SDK for Google Cloud provides a comprehensive client library that simplifies the integration of Google Cloud Pub/Sub into SAP applications. This integration offers several benefits:

- Seamless Integration: The ABAP SDK seamlessly integrates with existing SAP systems, enabling developers to leverage Google Cloud Pub/Sub without disrupting existing workflows.

- Simplified Development: The client library provides pre-built classes and methods, streamlining the development of Pub/Sub-based applications in ABAP.

- Robust Error Handling: The ABAP SDK includes robust error handling mechanisms, ensuring the reliability and stability of Pub/Sub applications.

- Performance Optimization: The client library is designed for performance, optimizing message transmission and processing for SAP environments.

Conclusion

Google Cloud Pub/Sub has emerged as a transformative tool for real-time data exchange, enabling businesses to build scalable, resilient, and event-driven applications. The ABAP SDK for Google Cloud extends its reach to SAP, letting ABAP developers use Pub/Sub for asynchronous communication between their existing SAP applications and the Google Cloud platform. Pub/Sub decouples services, scales with demand, and delivers messages reliably. Together, Pub/Sub and the ABAP SDK are a strong choice for building modern, resilient enterprise applications.

Embrace the dynamic ABAP SDK for Google Cloud Community, a thriving ecosystem where fellow ABAP developers leveraging Google Cloud converge to share knowledge, collaborate, and explore. Join us as we shape the future of ABAP SDK for Google Cloud and unleash a universe of possibilities.

Embark on your innovation journey with us:

Happy Learning! Happy Innovating!
