GenAI and API Management: Part 2a - The Need to Monetize LLM APIs

on 12-02-2024 05:00 AM - edited on 01-09-2025 10:44 AM by RakeshTalanki

This is part 2 of the series of articles on the topic of GenAI and API Management. Please refer to Part 1 here.

Why Monetize LLMs?

The rise of AI-powered chatbots, AI-powered customer service, automated content generation, productivity applications, and many other applications built with LLM APIs has opened up exciting new possibilities for businesses across all industries.

However, deploying and monetizing these powerful models can be a complex and challenging process. Usage of LLM APIs keeps rising, which leads to higher costs, and there is a growing need to track LLM usage adequately. These challenges are driving enterprises to explore various monetization strategies for their LLM APIs.

This article will explore these challenges and demonstrate how Apigee, Google Cloud's API management platform, can help you unlock the true potential of your AI/ML investments.

Challenges

Some of the common obstacles we have seen are slow time-to-market, security concerns, lack of business intelligence, integration difficulties, scaling challenges, discovery issues, limited self-service options, and inadequate monetization tools.

Strategies to Monetize LLMs

Monetizing LLM APIs can be approached in several ways, depending on your business goals. Here are some strategies we have come across:

1. Usage-based pricing:

- Charge per API call: This is a common model where you charge users for each request they make to your API. You can set different prices based on the complexity or resource intensiveness of the request.

- Charge per token: Many LLMs charge based on the number of tokens processed in the request and response. This model is particularly relevant for text-based LLMs.

- Tiered pricing: Offer different subscription tiers with varying levels of access and usage limits. This allows users to choose the plan that best suits their needs and budget.

Apigee customers using monetization in this category are: AccuWeather APIs, Mapquest APIs.
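The three usage-based models above differ mainly in how a charge is computed from metered usage. The following is a minimal Python sketch of that arithmetic, not Apigee configuration. All prices, tier boundaries, and function names are hypothetical and chosen only for illustration, and the tiered example assumes graduated pricing, where each tier's rate applies only to the tokens that fall inside that tier.

```python
from decimal import Decimal

# Hypothetical prices, for illustration only.
PRICE_PER_CALL = Decimal("0.002")       # flat charge per API request
PRICE_PER_1K_TOKENS = Decimal("0.03")   # charge per 1,000 tokens processed

# Hypothetical volume tiers: (upper bound in tokens, price per 1,000 tokens).
# None marks the final, unbounded tier.
TOKEN_TIERS = [
    (1_000_000, Decimal("0.03")),
    (10_000_000, Decimal("0.02")),
    (None, Decimal("0.01")),
]


def per_call_charge(calls: int) -> Decimal:
    """Charge per API call: every request costs the same."""
    return PRICE_PER_CALL * calls


def per_token_charge(prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Charge per token: request and response tokens are both counted."""
    total = prompt_tokens + completion_tokens
    return PRICE_PER_1K_TOKENS * total / 1000


def tiered_token_charge(total_tokens: int) -> Decimal:
    """Graduated tiers: each rate applies only to tokens inside its tier."""
    charge = Decimal("0")
    lower = 0
    for upper, price in TOKEN_TIERS:
        if total_tokens <= lower:
            break
        tier_end = total_tokens if upper is None else min(total_tokens, upper)
        charge += price * (tier_end - lower) / 1000
        lower = tier_end
    return charge
```

With these assumed numbers, 2,500,000 tokens in a billing period would be charged at the first-tier rate for the first million tokens and at the second-tier rate for the remaining 1.5 million. `Decimal` is used instead of `float` so that currency amounts do not pick up binary rounding errors.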

2. Value-added services:

- Fine-tuning: Offer custom fine-tuning of your LLM for specific use cases or industries. This allows businesses to create a more tailored and effective AI solution.

- Data enrichment: Provide services to help users enhance their data for better performance with your LLM. This could include data cleaning, formatting, or annotation.

Apigee customers using monetization in this category are: Pitney Bowes APIs, Telstra APIs.

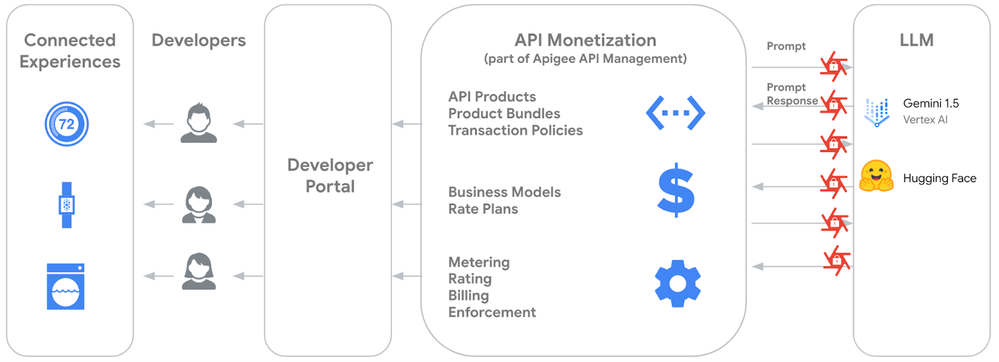

Solving the Monetization Problem with Apigee

By leveraging Apigee, enterprises can achieve their business goals around LLM usage by packaging LLM access into API products and monetizing their usage effectively. Monetization in Apigee is straightforward. Here are some scenarios.

Use Case 1: Internal Chargeback for API Usage

Scenario: A large enterprise has different business units (e.g., Credit Card Division, Retail Banking Division, Infrastructure Division) that consume each other's APIs. They want to track API usage and implement internal chargeback to ensure fair cost allocation and encourage efficient resource utilization.

Apigee Implementation ➡️

- API Product: Create separate API products for each business unit's APIs.

- Rate Plans: Define rate plans with pricing models for internal consumption. This could include:

  - Fixed fees: A flat fee per month or year for access to an API.

  - Usage-based pricing: Charge per API call, tiered pricing based on usage volume, or a combination of both.

  - Cost-plus pricing: Add a markup to the actual cost of providing the API to cover infrastructure and maintenance expenses.

- Developer Portal: Onboard internal teams as developers on the Apigee Developer Portal.

- Analytics and Reporting: Utilize Apigee analytics to track API usage by each department.

- Billing: Generate reports and invoices for each department based on their API consumption.
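To make the chargeback flow above concrete, here is a minimal Python sketch that aggregates usage per business unit and applies cost-plus pricing. It assumes usage has already been exported as simple records; the record fields, the cost figure, and the 15% markup are all hypothetical and do not come from Apigee.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical figures, for illustration only.
COST_PER_1K_TOKENS = Decimal("0.02")  # assumed actual cost of serving 1,000 tokens
MARKUP = Decimal("0.15")              # assumed 15% cost-plus markup


def chargeback(usage_records):
    """Sum tokens per business unit, then bill each unit at cost plus markup."""
    tokens_by_unit = defaultdict(int)
    for record in usage_records:
        tokens_by_unit[record["business_unit"]] += record["tokens"]

    invoices = {}
    for unit, tokens in tokens_by_unit.items():
        cost = COST_PER_1K_TOKENS * tokens / 1000
        # Round the billed amount to whole cents.
        invoices[unit] = (cost * (1 + MARKUP)).quantize(Decimal("0.01"))
    return invoices


# Hypothetical usage export: one record per API call.
records = [
    {"business_unit": "credit-card", "tokens": 400_000},
    {"business_unit": "retail-banking", "tokens": 200_000},
    {"business_unit": "credit-card", "tokens": 100_000},
]
invoices = chargeback(records)
```

In this example the Credit Card Division is billed for 500,000 tokens and the Retail Banking Division for 200,000, each at cost plus the assumed markup.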

Use Case 2: Monetizing AI-Powered Features

Scenario: A media company wants to offer its customers the ability to generate AI-powered summaries of their content using an LLM. They want to allow customers to use their own API keys or purchase access through the media company's portal.

Apigee Implementation ➡️

- API Product: Create an API product for the summarization feature.

- Rate Plans: Define multiple rate plans:

  - BYOK (Bring Your Own Key): Free plan for customers using their own LLM API keys.

  - Paid Plans: Tiered pricing based on usage (e.g., number of summaries generated).

- Key Management: Implement a mechanism for customers to securely store and manage their API keys within Apigee.

- Developer Portal: Enable customers to subscribe to plans and manage their API usage through the Developer Portal.

- Integration with Billing System: Integrate Apigee with a billing system to automate invoicing and payment processing.
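The difference between the BYOK and paid plans comes down to which LLM key is used for the upstream call and whether the call is billable. A minimal Python sketch of that decision follows. The `Subscription` type, the plan names, and the function are hypothetical illustrations, not Apigee APIs, and secure key storage is deliberately out of scope here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Subscription:
    """Hypothetical record of the plan a customer subscribed to."""
    plan: str                               # "byok" or "paid"
    customer_llm_key: Optional[str] = None  # supplied only on the BYOK plan


def resolve_upstream_key(sub: Subscription, company_llm_key: str) -> Tuple[str, bool]:
    """Return (key to send to the LLM, whether the call is billable)."""
    if sub.plan == "byok":
        if not sub.customer_llm_key:
            raise ValueError("BYOK plan requires the customer's own LLM key")
        # The customer pays the LLM provider directly, so nothing is billed here.
        return sub.customer_llm_key, False
    if sub.plan == "paid":
        # The media company's key is used, so the summary counts toward billing.
        return company_llm_key, True
    raise ValueError(f"unknown plan: {sub.plan!r}")
```

Keeping this decision in one place means the billing path only ever sees calls flagged as billable, regardless of how many paid tiers are added later.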

Use Case 3: API Access Control and Revenue Sharing

Scenario: A healthcare provider wants to give third-party developers access to its patient data API (with proper consent and security measures) and share revenue generated from the use of this API.

Apigee Implementation ➡️

- API Product: Create an API product for the patient data API.

- Rate Plan: Define a revenue-sharing model where the healthcare provider and the developer each receive an agreed percentage of the revenue generated.

- OAuth 2.0: Implement OAuth 2.0 for secure API access control and authorization.

- Developer Portal: Allow developers to register, subscribe to the API product, and agree to the revenue-sharing terms.

- Analytics and Reporting: Track API usage and revenue generated by each developer.

- Automated Payouts: Set up automated payouts to developers based on the agreed revenue share.
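The payout step above is simple arithmetic once revenue per developer is known. Below is a minimal Python sketch, assuming a single fixed developer share of 30%. That percentage, the function name, and the input shape are hypothetical, and a real agreement might use different shares per developer.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical share, for illustration only.
DEVELOPER_SHARE = Decimal("0.30")


def split_revenue(revenue_by_developer):
    """Split each developer's generated revenue between developer and provider."""
    payouts = {}
    for developer, revenue in revenue_by_developer.items():
        developer_amount = (revenue * DEVELOPER_SHARE).quantize(
            Decimal("0.01"), rounding=ROUND_HALF_UP
        )
        payouts[developer] = {
            "developer": developer_amount,
            # The provider keeps the remainder, so both parts always sum to revenue.
            "provider": revenue - developer_amount,
        }
    return payouts
```

Computing the provider's amount as the remainder, rather than rounding both parts independently, avoids a one-cent mismatch between the payouts and the revenue collected.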

Use Case 4: Charging for Premium API Features

Scenario: A SaaS company offers a basic API for free but wants to charge for premium features like higher rate limits, access to real-time data, or priority support.

Apigee Implementation ➡️

- API Products: Create separate API products for basic and premium features.

- Rate Plans:

  - Free Plan: Offer a free rate plan with limited access to the basic API.

  - Premium Plans: Offer tiered pricing for premium features, with higher tiers providing more benefits.

- Developer Portal: Allow users to upgrade to premium plans through the Developer Portal.

- Quota Management: Use Apigee policies to enforce rate limits and access controls based on the user's subscription plan.
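In Apigee, this enforcement is configured through policies rather than written by hand. The logic being enforced can still be sketched in a few lines of Python: look up the limit for the caller's plan, then allow or reject the request. The plan names, limits, and `QuotaTracker` class below are hypothetical, and the sketch uses a simple fixed window that the caller resets.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical requests allowed per window for each plan.
PLAN_QUOTAS = {"free": 100, "premium": 10_000}


@dataclass
class QuotaTracker:
    """Counts requests per app within the current window."""
    used: Dict[str, int] = field(default_factory=dict)

    def allow(self, app_id: str, plan: str) -> bool:
        """Return True and count the request if the app is under its plan's limit."""
        limit = PLAN_QUOTAS[plan]
        count = self.used.get(app_id, 0)
        if count >= limit:
            return False
        self.used[app_id] = count + 1
        return True

    def reset(self) -> None:
        """Start a new window, e.g. at the top of each hour or month."""
        self.used.clear()
```

An app on the free plan would be rejected once it reaches its limit within a window, while the same app upgraded to premium would be evaluated against the higher limit on its next request.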

How does Apigee help?

- Adaptable Pricing Models: Apigee accommodates diverse pricing strategies, encompassing subscriptions, pay-as-you-go, tiered plans, and revenue sharing.

- Robust Security: Apigee ensures API and data protection through API key management, OAuth 2.0 support, and threat prevention measures.

- Scalable Performance: Apigee manages high API traffic volumes and transaction levels, guaranteeing dependable performance even during peak usage periods.

- Streamlined Integration: Apigee integrates with various billing platforms and backend services to simplify monetization processes.

- Centralized Developer Hub: Apigee's Developer Portal offers a unified space for developers to explore, subscribe to, and manage APIs.

- Actionable Insights: Apigee delivers comprehensive analytics and reporting tools for tracking API usage, revenue, and other essential metrics.

Conclusion

Monetizing LLMs through Apigee provides a powerful and efficient way to unlock the value of your AI investments. By combining the capabilities of LLMs with Apigee's API management platform, businesses can create new revenue streams, optimize costs, and deliver innovative solutions to the market. A token-based billing approach, coupled with Apigee's flexible rate plans and robust infrastructure, enables granular control, scalability, and a seamless developer experience. As LLMs continue to evolve, Apigee helps businesses stay ahead of the curve and capitalize on the transformative potential of AI.
