Network and Envoy Proxy Configuration to manage mT...

Introduction

In this two-part series, we discuss the network configuration required to consume APIs on Apigee X using mutual TLS (mTLS).

In particular, this article details the necessary network components on Google Cloud Platform (GCP): Load Balancer (LB), Network Endpoint Group (NEG) and GKE cluster.

We assume that an Apigee X organization is already provisioned and operational.

Requirements

The requirements are as follows:

- External client applications must use mTLS to access APIs hosted on Apigee X

- The client certificate used during the TLS handshake must be propagated to the API proxies on Apigee X, but not to the target endpoint

- Internal client applications can access the Apigee X runtime using one-way TLS

Architecture overview

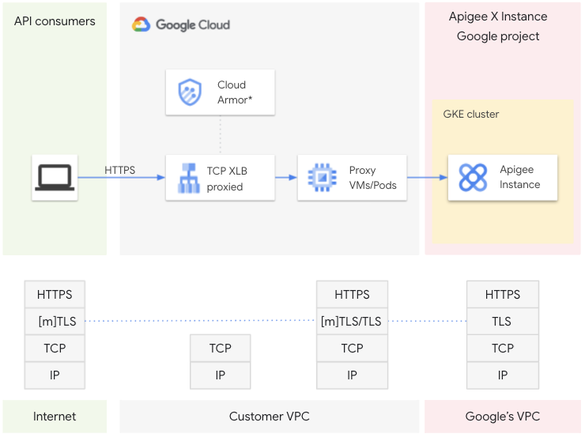

As mentioned above, this article covers the case where external client applications use mTLS to access APIs exposed on Apigee X.

An external TCP Proxy load balancer is used as a global entry point to access APIs hosted on Apigee X. TCP Proxy Load Balancing lets you use a single IP address for all users worldwide.

TLS termination, and therefore the mTLS handshake, is performed by dedicated Envoy proxies running on Pods or VMs.

The only way for external client applications to access APIs hosted on Apigee X is the TCP Proxy Load Balancer.

In this article, we run the Envoy proxies as Pods on a GKE cluster. In this case, a Network Endpoint Group (NEG) is required; it is used when configuring the backend service of the TCP Proxy LB.

An alternative to a GKE cluster, Pods and a NEG is to use Virtual Machines (VMs) in a Managed Instance Group (MIG).

If you are interested in this MIG-based alternative, please refer to the Google Apigee Git repository, which provides Terraform scripts to perform the same kind of installation.

We do not discuss Google Cloud Armor in this article, but it is the option to consider if you want to enforce Web App and API Protection (WAAP) on an external Google Cloud load balancer (HTTPS or TCP/SSL Proxy).

Last but not least, please note that Apigee hybrid natively supports mTLS on its Istio ingress, as documented here.

Google Cloud and Apigee X Configuration details

From this section onward, we assume that Apigee X has been installed for internal access only. No global HTTPS load balancer needs to be installed, because the API traffic must be secured using mTLS, which is not yet supported on this type of load balancer.

If you need to install a trial version of Apigee X, you can follow the instructions in the Apigee DevRel GitHub repository, which provides a dedicated provisioning tool (Apigee X Trial Provisioning), or use the Apigee Terraform module as described here.

Environment Variables

This article uses the following environment variables:

- VPC_NET: name of the consumer VPC

- SUBNET: subnet of the consumer VPC

- ZONE: zone in which the GKE cluster will be installed

- CLUSTER_NAME: name of the GKE cluster that will host envoy proxies

- APIGEE_ENDPOINT: the IP address of your Apigee X runtime ingress

- RUNTIME_MTLS_HOST_ALIAS: host alias of the Apigee environment group for mTLS based access
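Several of the commands below read these values from the environment. Here is a minimal bash sketch for exporting them once and checking that none is empty; every value is a hypothetical placeholder to replace with your own, and check_required_vars is an illustrative helper, not part of any Google Cloud tooling.

```bash
#!/usr/bin/env bash
# Hypothetical placeholder values: replace them with your own.
export VPC_NET="my-vpc"
export SUBNET="my-subnet"
export ZONE="europe-west1-b"
export CLUSTER_NAME="envoy-mtls-cluster"
export APIGEE_ENDPOINT="10.0.0.2"
export RUNTIME_MTLS_HOST_ALIAS="mtls.api.example.com"

# Illustrative helper: reports every variable (passed by name) that is unset
# or empty. Returns 1 if at least one is missing, 0 otherwise.
check_required_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name
    if [[ -z "${!name:-}" ]]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

check_required_vars VPC_NET SUBNET ZONE CLUSTER_NAME \
  APIGEE_ENDPOINT RUNTIME_MTLS_HOST_ALIAS
```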

Envoy Proxies

This section provides the gcloud and kubectl commands required to create the Envoy proxies on a GKE cluster. These proxies are responsible for handling the mTLS handshake.

GKE Cluster

First, enable the container API:

```shell
gcloud services enable container.googleapis.com
```

In GKE, clusters can be distinguished by the way they route traffic from one Pod to another. A cluster that uses alias IP address ranges is called a VPC-native cluster.

A VPC-native cluster is required to create a zonal NEG:

```shell
gcloud container clusters create $CLUSTER_NAME \
  --zone=$ZONE \
  --machine-type=e2-standard-4 \
  --enable-ip-alias \
  --network=$VPC_NET \
  --subnetwork=$SUBNET
```

You can get the cluster credentials using the following command:

```shell
gcloud container clusters get-credentials $CLUSTER_NAME --zone $ZONE
```

Envoy Proxies configuration

Envoy proxies are installed on the GKE cluster previously created.
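Because the zonal NEG created later requires a VPC-native cluster, you may want to confirm that property before deploying the proxies. The following is a minimal sketch: is_vpc_native is a hypothetical helper, and it assumes that gcloud reports the cluster's ipAllocationPolicy.useIpAliases field as True for a VPC-native cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if the GKE cluster uses alias IP ranges
# (VPC-native). Assumes CLUSTER_NAME and ZONE are set as described above.
is_vpc_native() {
  local use_ip_aliases
  # With the value() format, gcloud prints the boolean field as True or False
  use_ip_aliases=$(gcloud container clusters describe "$CLUSTER_NAME" \
    --zone "$ZONE" \
    --format="value(ipAllocationPolicy.useIpAliases)") || return 1
  [[ "$use_ip_aliases" == "True" ]]
}

# Usage:
#   is_vpc_native && echo "VPC-native" || echo "not VPC-native: no zonal NEG"
```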

In this example, the proxies and related components are installed in the apigee namespace:

```shell
kubectl create namespace apigee
```

Here is the configuration of the Envoy proxies (config.yaml):

Envoy Config (config.yaml)

```yaml
static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8443
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          # Only takes effect when forward_client_cert_details is
          # APPEND_FORWARD or SANITIZE_SET (the default is SANITIZE)
          set_current_client_cert_details:
            subject: true
          route_config:
            name: local_route
            virtual_hosts:
            - name: local_service
              domains: ["*"]
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: apigeex
                  host_rewrite_literal: $RUNTIME_MTLS_HOST_ALIAS
                # Forward the client certificate to Apigee X.
                # append: false overwrites any value sent by the client itself.
                request_headers_to_add:
                - header:
                    key: "x-apigee-tls-client-raw-cert"
                    value: "%DOWNSTREAM_PEER_CERT%"
                  append: false
          http_filters:
          # Router
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
      transport_socket:
        name: envoy.transport_sockets.tls
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext
          require_client_certificate: true
          common_tls_context:
            validation_context:
              trusted_ca:
                filename: /ca-cert/tls.crt
            tls_certificates:
            - certificate_chain:
                filename: /certs/tls.crt
              private_key:
                filename: /certs/tls.key
  clusters:
  - name: apigeex
    connect_timeout: 30s
    type: LOGICAL_DNS
    dns_lookup_family: V4_ONLY
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: apigeex
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: $APIGEE_ENDPOINT
                port_value: 443
    transport_socket:
      name: envoy.transport_sockets.tls
      typed_config:
        "@type": type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext
```

In the config.yaml file, the client certificate is forwarded to Apigee X in the x-apigee-tls-client-raw-cert request header, using the %DOWNSTREAM_PEER_CERT% command operator, which Envoy expands to the client certificate in URL-encoded PEM format. The list of all available command operators can be found in the Envoy documentation.

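Since Envoy URL-encodes the PEM certificate before placing it in the x-apigee-tls-client-raw-cert header, whatever consumes the header has to decode it first. When debugging (for example against a test backend that echoes request headers), a minimal bash sketch of that decoding step could look like this; url_decode and HEADER_VALUE are hypothetical names, and this is not how Apigee X itself processes the header.

```bash
#!/usr/bin/env bash
# Hypothetical helper: decode a percent-encoded string, such as the value
# Envoy produces for %DOWNSTREAM_PEER_CERT%, back into PEM text.
# Each "%XX" is rewritten as "\xXX", which printf's %b format then expands.
# Assumes the input contains no literal backslashes (true for PEM data).
url_decode() {
  printf '%b' "${1//%/\\x}"
}

# Usage, e.g. on a header value captured by a test backend:
#   url_decode "$HEADER_VALUE" | openssl x509 -noout -subject
```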
The config.yaml file contains two environment variables that need to be replaced:

- RUNTIME_MTLS_HOST_ALIAS

- APIGEE_ENDPOINT
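The rendering command used in the next step pipes a generated here-document into sh, so every $ and backtick in config.yaml is subject to shell expansion, and both variables must be exported to reach the child shell. If you prefer to substitute only these two placeholders, here is a minimal sketch; render_envoy_config is a hypothetical helper, and it assumes the values contain no |, & or backslash characters, which sed would interpret.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render the Envoy configuration by substituting only
# $RUNTIME_MTLS_HOST_ALIAS and $APIGEE_ENDPOINT, leaving the rest untouched.
# Assumes both values contain no "|", "&" or "\" characters (an IP address
# and a host name do not), since sed would interpret them.
render_envoy_config() {
  local template="$1" output="$2"
  # \$ in a sed regular expression matches a literal dollar sign
  sed -e 's|\$RUNTIME_MTLS_HOST_ALIAS|'"$RUNTIME_MTLS_HOST_ALIAS"'|g' \
      -e 's|\$APIGEE_ENDPOINT|'"$APIGEE_ENDPOINT"'|g' \
      "$template" > "$output" || return 1
  # Fail if any $UPPER_CASE placeholder is still present in the output
  if grep -n '\$[A-Z_]' "$output" >&2; then
    echo "unresolved placeholders in $output" >&2
    return 1
  fi
}

# Usage:
#   render_envoy_config config.yaml standalone-envoy-config.yaml
```

With GNU gettext installed, envsubst '$RUNTIME_MTLS_HOST_ALIAS $APIGEE_ENDPOINT' < config.yaml > standalone-envoy-config.yaml performs the same restricted substitution.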

Once these variables have been set (and exported, since the command below expands them in a child shell), finalize the creation of the Envoy proxy configuration file with the following command:

```shell
( echo "cat <<EOF" ; cat config.yaml ; echo EOF ) | sh > standalone-envoy-config.yaml
```

The ConfigMap containing the configuration of the Envoy proxies is created on the GKE cluster using the following command:

```shell
kubectl create configmap -n apigee standalone-envoy-config \
  --from-file=./standalone-envoy-config.yaml
```

Cryptographic objects for mTLS

At this step, we need to create the Kubernetes secrets used by the Envoy proxies and define the mount points through which the Envoy proxy Kubernetes manifest accesses them.

If you do not already have a CA certificate and a valid Envoy proxy certificate and private key, you can create them with the following openssl commands:

```shell
# Create a self-signed CA certificate and key for the server side
openssl req -newkey rsa:2048 -nodes -keyform PEM -keyout server-ca.key -x509 -days 3650 -outform PEM -out server-ca.crt -subj "/CN=Test Server CA"

# Create the server (Envoy proxy) private key
openssl genrsa -out server.key 2048

# Create the CSR (Certificate Signing Request) for the server
openssl req -new -key server.key -out server.csr -subj "/CN=test.api.example.com"

# Sign the server CSR with the server CA to obtain server.crt
openssl x509 -req -in server.csr -CA server-ca.crt -CAkey server-ca.key -set_serial 100 -days 365 -outform PEM -out server.crt
```

You can also create the client-side cryptographic objects, which you can use later for testing:

```shell
# Client-side mutual authentication setup: self-signed client CA certificate and key
openssl req -newkey rsa:2048 -nodes -keyform PEM -keyout client-ca.key -x509 -days 3650 -outform PEM -out client-ca.crt -subj "/CN=Test Client CA"

# Create the client private key
openssl genrsa -out example-client.key 2048

# Create the client CSR
openssl req -new -key example-client.key -out example-client.csr -subj "/CN=Test Client"

# Sign the client CSR with the client CA
openssl x509 -req -in example-client.csr -CA client-ca.crt -CAkey client-ca.key -set_serial 101 -days 365 -outform PEM -out example-client.crt
```

Now you can create an environment variable for each cryptographic object that has been created:

```shell
# CA cert file (server)
export CA_CERT_FILE=server-ca.crt
# CA private key file (server)
export CA_KEY_FILE=server-ca.key
# CA cert file (client)
export CA_CERT_CLIENT_FILE=client-ca.crt
# CA private key file (client)
export CA_KEY_CLIENT_FILE=client-ca.key
# Envoy proxy certificate file
export ENVOY_PROXY_CERT_FILE=server.crt
# Envoy proxy private key file
export ENVOY_PROXY_KEY_FILE=server.key
# Client app certificate file
export CLIENTAPP_CERT_FILE=example-client.crt
# Client app private key file
export CLIENTAPP_KEY_FILE=example-client.key
```

To handle the cryptographic objects more securely, store them as Kubernetes secrets. The Envoy proxies only need the client CA certificate (not its private key) to validate client certificates, so the CA certificate is stored in a generic secret under the tls.crt key, which keeps the /ca-cert/tls.crt path used in config.yaml. The Envoy proxy certificate and private key are stored in a TLS secret:

```shell
kubectl create secret generic -n apigee ca-secret \
  --from-file=tls.crt=$CA_CERT_CLIENT_FILE

kubectl create secret -n apigee tls envoy-secret \
  --cert=$ENVOY_PROXY_CERT_FILE \
  --key=$ENVOY_PROXY_KEY_FILE
```

Envoy Proxy and Network Endpoint Group

You can now create the Kubernetes Deployment, Service and NEG using the following Kubernetes manifest (standalone-envoy-manifest.yaml):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: standalone-envoy
  name: standalone-envoy
  namespace: apigee
spec:
  replicas: 2
  selector:
    matchLabels:
      app: standalone-envoy
  template:
    metadata:
      labels:
        app: standalone-envoy
    spec:
      containers:
      - name: standalone-envoy
        image: "envoyproxy/envoy:v1.18.2"
        imagePullPolicy: IfNotPresent
        ports:
        # Matches the listener port_value (8443) in standalone-envoy-config.yaml
        - containerPort: 8443
        args:
        - -c
        - /config/standalone-envoy-config.yaml
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 10m
            memory: 100Mi
        volumeMounts:
        - mountPath: /config
          name: standalone-envoy-config
          readOnly: true
        - mountPath: /ca-cert
          name: ca-cert
          readOnly: true
        - mountPath: /certs
          name: certs
          readOnly: true
      volumes:
      - name: standalone-envoy-config
        configMap:
          name: standalone-envoy-config
      - name: ca-cert
        secret:
          secretName: ca-secret
      - name: certs
        secret:
          secretName: envoy-secret
---
apiVersion: v1
kind: Service
metadata:
  name: standalone-envoy
  namespace: apigee
  labels:
    app: standalone-envoy
  annotations:
    cloud.google.com/neg: '{"exposed_ports": {"443":{}}}'
spec:
  ports:
  - port: 443
    name: http
    targetPort: 8443
    protocol: TCP
  selector:
    app: standalone-envoy
  type: ClusterIP
```

Please note the two volumeMounts and volumes, ca-cert and certs, which point respectively to the ca-secret and envoy-secret Kubernetes secrets and are used by the Envoy proxies to configure mTLS. The Service exposes port 443 and forwards traffic to targetPort 8443, the port of the Envoy listener defined in config.yaml.
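Before applying the manifest, a quick local check can catch mismatched files: Envoy rejects client certificates that do not chain to the CA mounted at /ca-cert/tls.crt, and it cannot serve a certificate whose public key does not match its private key. Here is a minimal sketch with two hypothetical helpers; openssl verify only approximates the validation Envoy performs, and the usage lines assume the environment variables exported earlier.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the cryptographic objects locally before
# they are stored in Kubernetes secrets and mounted into the Envoy Pods.

# Succeeds if cert_file chains to ca_file (openssl prints "<cert_file>: OK").
verify_against_ca() {
  local ca_file="$1" cert_file="$2"
  openssl verify -CAfile "$ca_file" "$cert_file"
}

# Succeeds if the certificate's public key matches the private key:
# both commands print the public key in the same PEM format.
cert_matches_key() {
  local cert_file="$1" key_file="$2"
  [[ "$(openssl x509 -noout -pubkey -in "$cert_file")" == \
     "$(openssl pkey -pubout -in "$key_file")" ]]
}

# Usage with the files created earlier:
#   verify_against_ca "$CA_CERT_CLIENT_FILE" "$CLIENTAPP_CERT_FILE"
#   verify_against_ca "$CA_CERT_FILE" "$ENVOY_PROXY_CERT_FILE"
#   cert_matches_key "$ENVOY_PROXY_CERT_FILE" "$ENVOY_PROXY_KEY_FILE"
```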

Also note the annotation on the Service: cloud.google.com/neg specifies that port 443 is associated with a NEG.

For more information about load balancing and NEGs on Google Cloud, please refer to the Google Cloud documentation.

Apply the manifest:

```shell
kubectl apply -f standalone-envoy-manifest.yaml
```

You can list the NEGs in your GKE cluster using the following command:

```shell
kubectl get svcneg -n apigee
```

Set the NEG environment variable, which contains the name of the Network Endpoint Group that has just been created:

```shell
# Takes the first NEG listed in the project: assumes it is the one just created
export NEG=$(gcloud compute network-endpoint-groups list --format=json | jq -r '.[0].name')
```

At this step, you have created two Envoy proxy replicas (as defined in the Kubernetes Deployment) based on a dedicated configuration, as well as a NEG.
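The jq command above takes the first NEG returned for the whole project, which is only reliable if no other NEG exists there. A more targeted, minimal sketch reads the name that GKE records in the cloud.google.com/neg-status annotation of the Service; neg_name_for_port is a hypothetical helper, the annotation format shown in the comment is an assumption, and a bash regular expression is used instead of jq only to avoid a dependency (jq is the more robust choice for JSON).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the name of the NEG that GKE created for a given
# Service port, read from the cloud.google.com/neg-status annotation.
# Assumes the annotation has the form:
#   {"network_endpoint_groups":{"443":"k8s1-..."},"zones":["<zone>"]}
neg_name_for_port() {
  local port="$1" status re
  status=$(kubectl get service standalone-envoy -n apigee \
    -o jsonpath='{.metadata.annotations.cloud\.google\.com/neg-status}') || return 1
  # Matches "<port>":"<neg-name>" and captures the NEG name
  re="\"${port}\":\"([^\"]+)\""
  if [[ $status =~ $re ]]; then
    echo "${BASH_REMATCH[1]}"
  else
    echo "no NEG found for port $port" >&2
    return 1
  fi
}

# Usage:
#   export NEG=$(neg_name_for_port 443)
```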

Let’s go on with the creation of the TCP Proxy LB in the second part.


Hi @joel_gauci, in the above code I see that the Envoy proxy is listening on port 8443, but in the Deployment section the container port is 9000. Will this code work if we use a different port on the container?
