- Network and Routing Configuration to manage APIs h...

Introduction

In this two-part article (co-authored with Ibrahima N'doye and Christophe Lalevee), we discuss the network and routing configuration required to use Apigee X to manage APIs that are hosted on-premises. In particular, this article details the necessary network configuration, on both the Google Cloud Platform (GCP) side and the Apigee X side, when the Apigee X managed runtime is used by client applications and backends that are located exclusively on-premises.

In such a situation, the customer’s on-prem network is connected with GCP through Cloud Interconnect or Cloud VPN. This extends customers' on-premises network to their GCP Virtual Private Cloud (VPC) through a highly available, low latency connection.

Requirements

The requirements are the following:

- no internet egress from the Apigee X runtime

- self-managed certificates (own private key and certificate) on the API endpoint

- DNS peering

Architecture overview

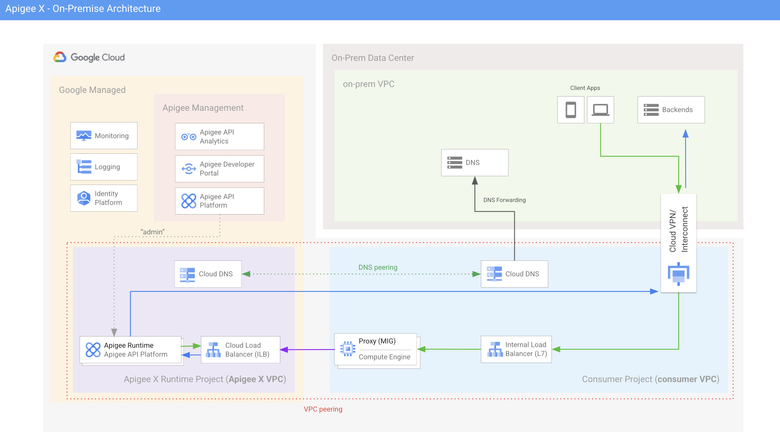

Here is an overview of the architecture.

As mentioned before, the scope of this article is a situation where the client applications and backend APIs only exist in the on-prem data center and VPC.

This single-region architecture highlights three distinct networks:

- the Apigee X Service Network (purple)

- the VPC of the consumer project (blue)

- the network of the on-prem data center (green)

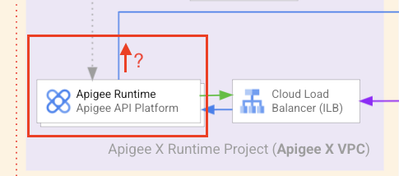

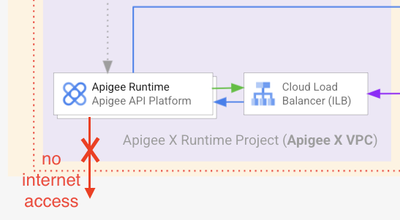

Another particularity of this architecture is that it must not allow access to the internet from the Apigee X runtime.

A dedicated VPC peering allows resources from the consumer VPC to request APIs exposed on the Apigee X runtime. The Apigee X runtime can also reach the resources installed in the consumer VPC.

This is a simplified view of a real production architecture, as many GCP customers are using a Shared VPC instead of one single consumer VPC to peer with the Apigee X VPC.

Please refer to the Apigee X documentation if you want to know more about using shared VPC networks with Apigee X.

Based on this architecture and requirements the following topics are detailed in this article:

- Routes from the Apigee X runtime to backend APIs and DNS servers: how can routes be exported to the Apigee X runtime?

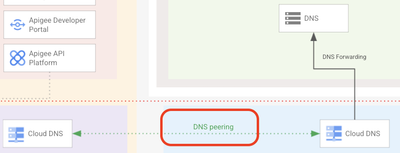

- DNS Peering and DNS forwarding for name resolution on the Apigee X runtime, based on a DNS server that is located on the on-prem network

- Even though the Apigee X ingress is accessible to the on-prem client applications, an HTTPS Internal Load Balancer (ILB L7) is required if specific cryptographic objects (private key and certificate) must be used to secure the communication channel to Apigee X. If that is not the case, the whole part related to the MIG and HTTPS ILB can be skipped

Routes

The Apigee X runtime refers to backend APIs and DNS server(s) that are located on the on-prem network. In order to reach this network, routes (either static or dynamic) must be exported from the consumer VPC to the Apigee X VPC.

Dynamic routes are based on the Border Gateway Protocol (BGP) that is used to exchange routes between the consumer’s VPC network and the on-premises network via Cloud Routers.

DNS Peering / DNS forwarding

The target DNS servers are located on-premise. This means that the name resolution on the Apigee X runtime must be done through these DNS servers such that API proxies can refer to internal hostnames.

HTTPS Internal Load Balancer (L7)

The HTTPS internal load balancer (ILB) is a regional Layer 7 load balancer that enables you to run and scale your services behind an internal IP address.

On an internal HTTP(S) load balancer, you can configure self-managed certificates: being able to configure your own cryptographic objects is often required when Apigee X is used by one of the on-prem applications.

When using an HTTPS ILB, a Managed Instance Group (MIG) is required to send API traffic from the internal HTTPS load balancer to the Apigee Instance.

Google Cloud and Apigee X Configuration details

At this point, we present the Google Cloud configuration required to implement the target architecture presented in the Architecture overview.

In this step, we consider that Apigee X has been installed for internal access only: no global load balancer needs to be installed as the API traffic must be confined to internal use, i.e. from the applications and to the resource servers (backend APIs) of the on-prem infrastructure.

In case you would need to install a trial version of Apigee X, you can follow the instructions provided through the Apigee DevRel GitHub repository, which proposes a dedicated provisioning tool: Apigee X Trial Provisioning or use the Apigee terraform module as described here.

Environment Variables

The article refers to some variables, which are the following:

- MIG_NAME: name of the Managed Instance Group that is defined as the backend of the HTTPS ILB

- VPC_NAME: name of the consumer VPC used to peer your Apigee X organization

- VPC_SUBNET: subnet of the consumer VPC used to peer your Apigee X organization

- REGION: region used for the provisioning of your Apigee X managed runtime

- PROJECT_ID: identifier of the Google Cloud consumer project

- APIGEE_ENDPOINT: the IP address of your Apigee X runtime ingress
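
As a minimal sketch, the variables above could be set and checked in a shell session before running any of the later commands. Every value below is a hypothetical placeholder, and the `missing_vars` helper is only an illustration, not part of any Google tooling.

```shell
#!/bin/sh
# Hypothetical placeholder values: replace each one with the values of your own environment.
export PROJECT_ID="my-consumer-project"
export REGION="europe-west1"
export VPC_NAME="apigee-consumer-vpc"
export VPC_SUBNET="apigee-consumer-subnet"
export MIG_NAME="apigee-mig"
export APIGEE_ENDPOINT="10.0.0.2"

# Print the name of every variable from the list above that is unset or empty.
missing_vars() {
  for name in MIG_NAME VPC_NAME VPC_SUBNET REGION PROJECT_ID APIGEE_ENDPOINT; do
    eval "value=\${$name:-}"
    [ -n "$value" ] || printf '%s\n' "$name"
  done
}

missing="$(missing_vars)"
if [ -n "$missing" ]; then
  # Unquoted on purpose so that the names are printed on one line.
  echo "Missing variables:" $missing >&2
  exit 1
fi
echo "All variables are set."
```

Checking the variables up front avoids running a gcloud command with an empty `--network` or `--project` value.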

Network Route exportation

In order for the Apigee X runtime to be able to find routes to backend APIs located on the on-prem network you must enable the custom route exchange. The network peering is updated in order for the consumer VPC network to export custom routes to the peer network (Apigee X VPC network).

Once the export is enabled, the custom routes defined in the consumer VPC can be used by the Apigee X runtime. This covers both static routes and dynamic routes learned through Cloud VPN or Cloud Interconnect via the Border Gateway Protocol (BGP).

    gcloud compute networks peerings update servicenetworking-googleapis-com \
        --network=$VPC_NAME \
        --project=$PROJECT_ID \
        --export-custom-routes
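
To check the result, the peering and the routes it exports can be listed. The sketch below is a dry run by default (it only prints the commands) and falls back to hypothetical placeholder values for `VPC_NAME`, `PROJECT_ID` and `REGION`. The `run` helper and the `DRY_RUN` switch are illustrative names, not gcloud features.

```shell
#!/bin/sh
# Dry run by default: commands are printed, not executed. Set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical defaults, used only when the variables are not already set.
VPC_NAME="${VPC_NAME:-apigee-consumer-vpc}"
PROJECT_ID="${PROJECT_ID:-my-consumer-project}"
REGION="${REGION:-europe-west1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Show the peerings of the consumer VPC, including their route export settings.
run gcloud compute networks peerings list \
  --network="$VPC_NAME" \
  --project="$PROJECT_ID"

# List the routes that the consumer VPC exports to the Apigee X VPC.
run gcloud compute networks peerings list-routes servicenetworking-googleapis-com \
  --network="$VPC_NAME" \
  --region="$REGION" \
  --direction=OUTGOING \
  --project="$PROJECT_ID"
```

When run with `DRY_RUN=0`, the on-prem prefixes learned through BGP should appear among the outgoing routes once the export is enabled.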

DNS peering

The first step is to configure a Cloud DNS private zone in the consumer project, which forwards DNS requests to your on-prem DNS server.
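
A forwarding zone of this kind could be created roughly as follows. This is a sketch under assumptions: the zone name, the on-prem DNS server address and the default project and VPC names are hypothetical, and the `run` helper only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Dry run by default: the command is printed for review. Set DRY_RUN=0 to execute it.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical defaults, used only when the variables are not already set.
VPC_NAME="${VPC_NAME:-apigee-consumer-vpc}"
PROJECT_ID="${PROJECT_ID:-my-consumer-project}"

ZONE_NAME="onprem-forwarding-zone"   # hypothetical zone name
DNS_SUFFIX="customer.example.com."   # domain served by the on-prem DNS server
ONPREM_DNS_IP="10.10.0.53"           # hypothetical address of the on-prem DNS server

# Print the command in dry-run mode, execute it otherwise.
# The printed form drops shell quoting, so it is meant for review only.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Private zone attached to the consumer VPC that forwards queries for
# DNS_SUFFIX to the on-prem DNS server.
run gcloud dns managed-zones create "$ZONE_NAME" \
  --project="$PROJECT_ID" \
  --description="Forwarding zone for the on-prem DNS server" \
  --dns-name="$DNS_SUFFIX" \
  --visibility=private \
  --networks="$VPC_NAME" \
  --forwarding-targets="$ONPREM_DNS_IP"
```

For on-prem targets reached through Cloud VPN or Cloud Interconnect, Cloud DNS sends the forwarded queries from the 35.199.192.0/19 range, so the on-prem firewall and routing typically need to accept that range and route the replies back to the VPC.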

Then, in order to create a connection between the consumer network and Google managed services, and to manage peered DNS domains for that connection, we can use the following commands:

    # Enable the dns.googleapis.com API in the consumer project
    gcloud services enable dns.googleapis.com

    gcloud services peered-dns-domains create customer-domain \
        --network=$VPC_NAME \
        --dns-suffix=customer.example.com.

This creates a peered DNS domain which forwards DNS queries for the specified namespace originating in the producer network (Apigee X VPC) to the consumer network to be resolved.
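
The peered DNS domains attached to the network can then be listed to confirm that the domain was created. The sketch below assumes that `PROJECT_ID` is the project that owns the VPC (in a Shared VPC setup, the host project). The default values are hypothetical, and the `run` helper only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Dry run by default: the command is printed, not executed. Set DRY_RUN=0 to execute it.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical defaults, used only when the variables are not already set.
VPC_NAME="${VPC_NAME:-apigee-consumer-vpc}"
PROJECT_ID="${PROJECT_ID:-my-consumer-project}"   # project that owns the VPC

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# List the peered DNS domains configured for the service networking connection.
run gcloud services peered-dns-domains list \
  --network="$VPC_NAME" \
  --project="$PROJECT_ID"
```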

Removal of Internet access from the Apigee X VPC

If the Apigee X runtime must not access resources exposed on the Internet you can enable VPC Service Controls (SC) on the VPC peered connection between Apigee X and the consumer VPCs. Thus, access to the internet is disabled: the Apigee runtime will no longer communicate with any public internet target.

To enable VPC SC for the peered connection you can use the following gcloud command:

    gcloud services vpc-peerings enable-vpc-service-controls \
        --network=$VPC_NAME \
        --project=$PROJECT_ID

Once VPC SC has been enabled on the peered connection, the Apigee X runtime no longer has access to the public internet.

In the second part, we discuss the configuration of the MIG and HTTPS ILB.

Comments

This is very helpful and explains the connectivity for Apigee X SaaS. Can we have a similar thread for Apigee X Hybrid ?

Another question I had was, will the whole set up explained here need to be re-done for another Apigee Organization that I wish to set up?


Hello SidDas,

First and foremost, thanks for your feedback and questions!

Yes, you can take a similar approach for Apigee hybrid (Apigee X is in fact Apigee "SaaS"). And yes, for a brand new Apigee organization you will need to set up a new MIG. Depending on the VPC design (shared VPC or not, same region or not for the MIGs), you could reuse part of the HTTPS ILB and just define a new URL map/proxy and SSL certificate. So maybe the easiest way is to have a dedicated set of HTTPS ILB + MIG for each Apigee organization.


Hi,

Thank you for the instructions! We're trying to set up southbound DNS resolution in our shared VPC setup. Our problem was that Apigee X could not resolve the (Cloud) DNS names of our GKE services, while traffic goes through when using the IP address.

--network=$VPC_NAME \

--dns-suffix=customer.example.com.


Hello Jan,

Can you please confirm that you are executing this command on the consumer project related to your Apigee X organization?

Thanks


Hi Joel,

Our mistake was to run the peered-dns-domains command against our Apigee X service project. When the command was run against the project where our shared VPC is located, it was successful.


Thanks for your feedback, Jan. I wish you a very good day.


Thanks for this. On the on-prem network (in green in the diagram), do we need any configuration for routing to work, e.g. a BGP router?


Hello,

If your client apps and backend APIs are set on the on-premise infra, you need to define (on the on-premise network) at least one route to the HTTPS Internal Load Balancer (in GCP). This advertisement is in general managed using BGP sessions that let each router (Cloud Router on GCP) advertise routes to peer networks.

I have not detailed this topic as it is more of a Cloud Interconnect or Cloud VPN discussion.


thanks for the information !


Hi Joel,

We have NAT IPs provisioned for our org; this is needed for vendor communication.

We would now like to enable DNS peering as suggested in the article. However, I would also like to know which IP address must be whitelisted on the on-prem firewall. Will it be the NAT IP or some other IP?

Thank you!


Basically, the IP addresses to allow are those in the IP address range (/22) used to provision the Apigee X platform.

Of course, you can also configure a NAT gateway on the consumer VPC side in order to use dedicated IP addresses before reaching the on-premise infrastructure.


Great article, thanks a lot,

but I am wondering how the VPC SC can restrict application level (not Google API) egress traffic from Apigee message processor through cloud NAT to public internet?
