Performance validation of API gateway deployments


This article offers a structured approach to performance testing an API gateway and validating its key performance metrics. It covers the concepts, the tools and a walkthrough of a real-life scenario, so that you can prepare and run the tests and also understand the systems theory needed to judge whether the outcome is valid. Performance validation is usually a multidimensional problem with many moving parts: you need to know what role each component plays, how to design a valid test and how to interpret the results correctly. I will define performance and latency in the context of API traffic, then guide you through the key differences between testing with fixed-capacity backends, traffic sources and API gateways, and explain what changes when elasticity and autoscaling in the cloud introduce new degrees of freedom. For readers hungry for results, I also provide concise step-by-step instructions on how to run the tests, retrieve the key metrics and interpret them in a practical way.

The article also lists useful resources for readers who have more time and want to drill down into performance engineering and distributed systems theory. It is far from exhaustive, but I hope that you will learn a few new things while reading and experimenting with it, and that it will help you with initial capacity sizing, the validation or tuning of that sizing, bottleneck identification, or fine-tuning an autoscaling configuration, where the specific load generator tool and gateway deployment allow.

The process

A. Test your backend in isolation. If your backend is not available, prepare a mock for it instead. For example:

    ┌────────────────┐───────5k rps────────▶┌─────────────────┐
    │ Load Generator │                      │ backend service │
    └────────────────┘◀───────10 ms─────────└─────────────────┘

B. If you need a mock, you can use the go bench suite to expose one. It lets you prepare a stub that responds with the same latency as your backend service when you don't have the backend or don't want to use it for the tests. You can simulate upstream latency by forcing a synthetic delay in the response with the X-Delay: {delay} request header, optionally for only a fraction of the requests (with the X-Delay-Percent header, an integer from 0 to 100). For example:

    ┌────────────────┐──────5k rps──────▶┌─────────────────┐
    │ Load Generator │                   │ Go Bench Suite  │
    └────────────────┘◀──────10 ms───────└─────────────────┘

C. Assess peak capacity, the response latency profile, breaking points and HTTP response codes. You collect these during and after the test from the output of the load generator tool.

D. Now you are ready to test your API gateway instance and the API proxies it exposes. For example:

    ┌──────────────┐─5k rps─▶┌─────────────┐──▶┌───────┐
    │Load Generator│         │Proxy to test│   │backend│
    └──────────────┘◀──11 ms─└─────────────┘◀──└───────┘

E. Retrieve and analyse the results, then plan the next steps: changing the configuration, the behaviour of the proxy under test or the other test conditions, including sizing. Repeat the tests iteratively with this methodology. For example, with the mock as the backend:

    ┌──────────────┐─5k rps─▶┌─────────────┐──▶┌──────────────┐
    │Load Generator│         │Proxy to test│   │Go Bench Suite│
    └──────────────┘◀──8 ms──└─────────────┘◀──└──────────────┘
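Comparing the direct backend baseline with the run through the proxy can be sketched as below. This is a minimal illustration, not part of any tool mentioned here: the function names and the sample latencies are made up, and subtracting percentiles only approximates the per-request overhead added by the gateway.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list (pct in the range 0-100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Multiply before dividing so integer percentiles stay exact.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(0, rank - 1)]


def gateway_overhead(backend_ms, proxied_ms, pcts=(50, 90, 99)):
    """Latency added by the proxy at each percentile (proxied minus direct)."""
    return {p: percentile(proxied_ms, p) - percentile(backend_ms, p) for p in pcts}


if __name__ == "__main__":
    # Hypothetical latencies in milliseconds, for illustration only.
    backend = [10.0] * 100
    proxied = [11.0] * 98 + [15.0, 30.0]
    print(gateway_overhead(backend, proxied))
```

Looking at several percentiles rather than the average matters because a proxy often adds little at the median and much more in the tail.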

The Coordinated Omission problem

The best description of this problem is in the documentation of the open source performance testing tool wrk2 (described in greater detail later in this article), which I quote verbatim in this section. wrk2 was derived from wrk specifically to address this problem, and its author describes it as a constant throughput, correct latency recording variant of wrk. The two key parts of that description are:

- Generation of a constant throughput test traffic (this will be less trivial than it seems)

- Latency recorded correctly (this will become clearer once you have finished reading this section). The underlying point is that if you ignore this problem, the latency profile recorded during the test will be inaccurate and misleading.

One of wrk2's main modifications to wrk's current (Nov. 2014) measurement model has to do with how request latency is computed and recorded.

wrk's model, which is similar to the model found in many current load generators, computes the latency for a given request as the time from the sending of the first byte of the request to the time the complete response was received.

While this model correctly measures the actual completion time of individual requests, it exhibits a strong Coordinated Omission effect, through which most of the high latency artifacts exhibited by the measured server will be ignored. Since each connection will only begin to send a request after receiving a response, high latency responses result in the load generator coordinating with the server to avoid measurement during high latency periods.

There are various mechanisms by which Coordinated Omission can be corrected or compensated for. For example, HdrHistogram includes a simple way to compensate for Coordinated Omission when a known expected interval between measurements exists. Alternatively, some completely asynchronous load generators can avoid Coordinated Omission by sending requests without waiting for previous responses to arrive. However, this (asynchronous) technique is normally only effective with non-blocking protocols or single-request-per-connection workloads. When the application being measured may involve multiple serial request/response interactions within each connection, or a blocking protocol (as is the case with most TCP and HTTP workloads), this completely asynchronous behavior is usually not a viable option.

The model I chose for avoiding Coordinated Omission in wrk2 combines the use of constant throughput load generation with latency measurement that takes the intended constant throughput into account. Rather than measure response latency from the time that the actual transmission of a request occurred, wrk2 measures response latency from the time the transmission should have occurred according to the constant throughput configured for the run. When responses take longer than normal (arriving later than the next request should have been sent), the true latency of the subsequent requests will be appropriately reflected in the recorded latency stats.

Note: This technique can be applied to variable throughput loaders. It requires a "model" or "plan" that can provide the intended start time of each request. Constant throughput load generators make this trivial to model. More complicated schemes (such as varying throughput or stochastic arrival models) would likely require a detailed model and some memory to provide this information.

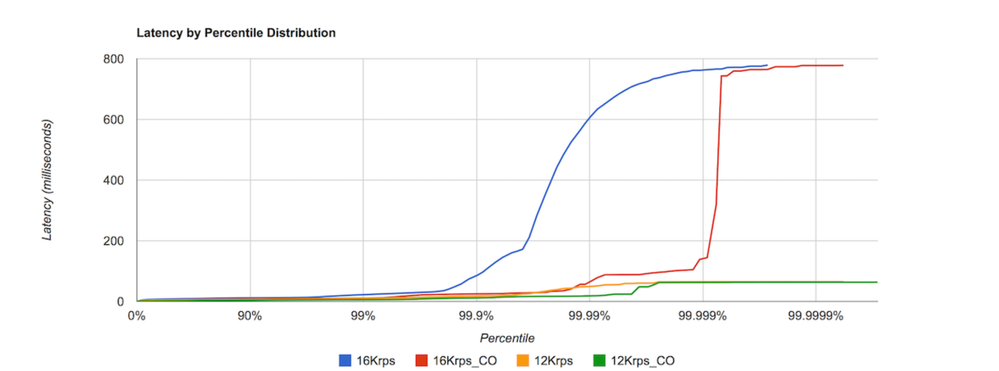

In order to demonstrate the significant difference between the two latency recording techniques, wrk2 also tracks an internal "uncorrected latency histogram" that can be reported on using the --u_latency flag. The following chart depicts the differences between the correct and the "uncorrected" percentile distributions measured during wrk2 runs. (The "uncorrected" distributions are labeled with "CO", for Coordinated Omission)

These differences can be seen in detail in the output provided when the --u_latency flag is used. For example, the output below demonstrates the difference in recorded latency distribution for two runs:

The first ["Example 1" below] is a relatively "quiet" run with no large outliers (the worst case seen was 11 msec), and even with it the 99%'ile exhibits a ~2x ratio between wrk2's latency measurement and that of an uncorrected latency scheme.

The second run ["Example 2" below] includes a single small (1.4sec) disruption (introduced using ^Z on the apache process for simple effect). As can be seen in the output, there is a dramatic difference between the reported percentiles in the two measurement techniques, with wrk2's latency report [correctly] reporting a 99%'ile that is 200x (!!!) larger than that of the traditional measurement technique that was susceptible to Coordinated Omission.
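To make the effect concrete, here is a minimal simulation sketch. It is not wrk2's code: it assumes a single connection, a made-up server with a fixed 1 ms service time that stalls for one second, and a load generator that waits for each response before sending the next request. Each request is measured twice, once from the actual send time (the uncorrected model) and once from the time it should have been sent according to the constant rate (the corrected model).

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list (pct in the range 0-100)."""
    ordered = sorted(samples)
    return ordered[max(0, math.ceil(pct * len(ordered) / 100) - 1)]


def simulate(n_requests=1000, interval_ms=10, service_ms=1,
             stall_start_ms=5000, stall_end_ms=6000):
    """Return (uncorrected, corrected) latency lists in milliseconds."""
    uncorrected, corrected = [], []
    previous_done = 0
    for i in range(n_requests):
        intended = i * interval_ms            # when the plan says to send
        sent = max(intended, previous_done)   # a blocked connection sends late
        if stall_start_ms <= sent < stall_end_ms:
            done = stall_end_ms + service_ms  # held until the stall is over
        else:
            done = sent + service_ms
        uncorrected.append(done - sent)       # measured from the actual send
        corrected.append(done - intended)     # measured from the intended send
        previous_done = done
    return uncorrected, corrected


if __name__ == "__main__":
    unc, cor = simulate()
    print("uncorrected p99:", percentile(unc, 99), "ms")
    print("corrected p99:", percentile(cor, 99), "ms")
```

With these assumed parameters the uncorrected model records a single slow sample out of 1000, so its 99th percentile stays at 1 ms, while the corrected model also charges the stall to the 111 requests that were queued behind it and reports a 99th percentile of 911 ms.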

Which tool do I need?

Before deciding, familiarise yourself with all the sections of this document. You will then know the strengths and weaknesses of each tool you select, and you will be better equipped to understand, present and discuss the test findings, as well as the degrees of freedom available to tune the traffic source, the system under test and its response latency profile under the test conditions you have defined. Once you understand the coordinated omission problem presented in the previous section, and how it can distort your results and mislead their interpretation, you are also less likely to be confused by the choice of test client, its settings, the test environment, the traffic profiles and the sizing of network and compute resources.

You can get started and produce a high volume of high-quality results and reports with a fairly limited investment. Then, over a few iterations, you can add sophisticated controls on the source traffic definition, graphical management interfaces and clustered deployment architectures by picking the most appropriate tool, following the guidance in the rest of this document.

If you would like to start with a fairly complete and easy-to-run command line tool, wrk and its derivative wrk2 are excellent initial choices. If you instead want full control of the test traffic profile and need to build test automation around complex or numerous test scenarios, invest the time in learning Gatling, which also provides a complete user interface to chart a wealth of result dimensions, including response latency and error distributions. You can then reach the next level of mastery by deploying it as a distributed cluster with a multitenant architecture (DistGatling).

Backend mocking

Unless your upstream backend service is readily available with the peak capacity that you need to test and validate (or is a serverless function or a Kubernetes cluster able to scale to meet the demand), you first need to mock it. Without a backend or a mock of it, you can only test an API gateway that produces static responses, not a full-fledged non-transparent reverse proxy with its many policies to inspect, block, manipulate and customise both requests and responses.

For a simple basic test you can start without adding specific logic to the mock. That is enough to introduce the general principles of the testing methodology.

Start by validating the backend capacity. Design tests to retrieve its response latency profile and response code distribution, and to check its stability, i.e. whether its performance stays predictable or acceptable under the target level, shape and duration of traffic expected during normal operations.
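The two outputs named above, the latency profile and the response code distribution, can be summarised from raw samples as in the sketch below. It assumes you have exported one (HTTP status, latency in ms) pair per request from your load generator; the function name and the sample data are hypothetical.

```python
import statistics
from collections import Counter


def summarize(samples, pcts=(50, 90, 99)):
    """Summarise (http_status, latency_ms) pairs from a test run.

    pcts must be whole percentiles between 1 and 99.
    """
    samples = list(samples)
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=100) returns the 99 cut points p1..p99.
    cuts = statistics.quantiles(
        [latency for _, latency in samples], n=100, method="inclusive"
    )
    codes = Counter(status for status, _ in samples)
    errors = sum(count for status, count in codes.items() if status >= 400)
    return {
        "count": len(samples),
        "latency_ms": {p: cuts[p - 1] for p in pcts},
        "status_codes": dict(codes),
        "error_rate": errors / len(samples),
    }


if __name__ == "__main__":
    # Made-up run: 95 fast successes and 5 slow 503 responses.
    run = [(200, 10.0)] * 95 + [(503, 250.0)] * 5
    print(summarize(run))
```

Running the same summary on consecutive time windows of a long test is a simple way to check stability: the percentiles and the error rate should not drift as the test progresses.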

go bench suite

This is an open source tool available here. It is not very sophisticated, but it lets you get started if you have never built a mock to suit your testing needs. Remember that you can't just pick an arbitrary endpoint as the target of your performance tests: you must own it or have obtained formal authorisation upfront. You don't want to find yourself in the uncomfortable position of having to justify yourself afterwards. Once you have built the corresponding Docker container, you can deploy the tool standalone or in a Kubernetes cluster for which you have administration rights. More instructions are on the project website. Here we focus on its useful characteristics and why they are valuable for the tests.

It is written in go, so to get started you can install it from source, then run it locally:

go install && go-bench-suite upstream --addr 127.0.0.1:8000

or, if you have your own certificate and key files, to run an HTTPS web server:

go install && go-bench-suite upstream --addr 127.0.0.1:8443 --certFile ./certs/foo.com.cert.pem --keyFile ./certs/foo.com.key.pem

Once the service is up and running, it exposes a few API endpoints designed with the same approach as https://httpbin.org, with far fewer options. The most useful capability is responding with deterministic or probabilistic (your choice) response times when invoked by an HTTP(S) client.

Among them you have the following methods.

GET /delay/{duration}

Invoking this endpoint returns a zero-length response body after a delay of {duration}. Valid time units are ns (nanoseconds), us or µs (microseconds), ms (milliseconds), s (seconds), m (minutes) and h (hours), although you are unlikely to need the last two.

You can also simulate upstream latency on any other supported endpoint by forcing a synthetic delay with the request header

X-Delay: {delay}

The delay applies to 100% of the requests, or to a fraction of them if you also add the following request header, whose value is an integer from 0 to 100:

X-Delay-Percent: {delay-percent}

GET|POST|PUT|PATCH /size/{size}

Invoking this endpoint with the GET verb returns a payload of random characters of the specified {size}. Note that this is not a valid JSON or XML payload. The size is a human-readable byte string that is converted into a number of bytes; the supported units are B, K, KB, M and MB. For the POST, PUT and PATCH verbs you need to supply the payload in the request.

GET /resource/{id}

Returns a particular resource by its ID. You can combine this with the X-Delay request header.
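A client can build the delay headers described above as in the following sketch. The helper names are hypothetical, and the validation assumes that X-Delay accepts the same duration syntax as /delay/{duration}, which the text above implies but does not state. The address in the example is the local one used earlier in this section.

```python
import re
import urllib.request

# Assumption: X-Delay takes the same units as /delay/{duration}.
_DURATION = re.compile(r"^\d+(\.\d+)?(ns|us|µs|ms|s|m|h)$")


def delay_headers(delay, percent=None):
    """Build the X-Delay (and optional X-Delay-Percent) request headers."""
    if not _DURATION.match(delay):
        raise ValueError(f"unsupported duration: {delay!r}")
    headers = {"X-Delay": delay}
    if percent is not None:
        if not isinstance(percent, int) or not 0 <= percent <= 100:
            raise ValueError("percent must be an integer from 0 to 100")
        headers["X-Delay-Percent"] = str(percent)
    return headers


def call_mock(url, delay, percent=None, timeout=5.0):
    """Send one GET request to the mock and return the HTTP status code."""
    request = urllib.request.Request(url, headers=delay_headers(delay, percent))
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # Requires the mock to be running locally, as started above.
    print(call_mock("http://127.0.0.1:8000/resource/1", "10ms", percent=25))
```

Most load generators in the next section accept extra request headers on the command line, so the same two headers can be passed to them directly.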

Command line performance testing tools

1. hey

Installation

If your platform has homebrew installed, you can install it with the corresponding formula:

brew install hey

Alternatively you can install brew in advance following the steps here or just download the pre-compiled binaries for Linux 64-bit and Mac 64-bit as per the documentation at the github project page.

Basic use

hey supports HTTP/2 endpoints (enabled with the -h2 flag). It sends the requested number of requests (-n) at the requested concurrency level (-c) and prints statistics.


curl https://api.envgroup1.example.com/my-proxy/v1/something

2. wrk

Installation

If your platform has homebrew installed, you can install it with the corresponding formula:

brew install wrk

Alternatively, if you have a CentOS Linux OS:

    sudo yum -y groupinstall 'Development Tools'
    sudo yum -y install openssl-devel git
    git clone https://github.com/wg/wrk.git
    cd wrk
    make WITH_LUAJIT=/usr WITH_OPENSSL=/usr
    sudo cp wrk /usr/local/bin/

For Ubuntu OS, the installation steps are the following:

    sudo apt-get install build-essential libssl-dev git -y
    git clone https://github.com/wg/wrk.git wrk
    cd wrk
    sudo make
    sudo cp wrk /usr/local/bin

For other platforms there are similar installation steps on the project webpage.

Command line options:

-c, --connections: total number of HTTP connections to keep open, with each thread handling N = connections/threads

-d, --duration: duration of the test, e.g. 2s, 2m, 2h

-t, --threads: total number of threads to use

-s, --script: LuaJIT script, see SCRIPTING

-H, --header: HTTP header to add to request, e.g. "User-Agent: wrk"

--latency: print detailed latency statistics

--timeout: record a timeout if a response is not received within this amount of time

Basic use

wrk -t12 -c400 -d30s --latency https://api.example.com/v1/ping

This runs a benchmark for 30 seconds, using 12 threads and keeping 400 HTTP connections open, and prints the detailed latency distribution profile.

Gather the results

    Running 30s test @ http://localhost:8080/index.html
      12 threads and 400 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   635.91us    0.89ms  12.92ms   93.69%
        Req/Sec    56.20k     8.07k   62.00k    86.54%
      Latency Distribution
         50%  250.00us
         75%  491.00us
         90%  700.00us
         99%    5.80ms
      22464657 requests in 30.00s, 17.76GB read
    Requests/sec: 748868.53
    Transfer/sec:    606.33MB
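When you repeat tests iteratively it helps to extract the key figures from this report automatically. The sketch below is a hypothetical helper, not part of wrk: it relies only on the text layout shown above and converts all latencies to milliseconds.

```python
import re

# Multipliers to convert the time units printed by wrk to milliseconds.
_TO_MS = {"us": 0.001, "ms": 1.0, "s": 1000.0, "m": 60_000.0, "h": 3_600_000.0}
_TIME = r"(\d+(?:\.\d+)?)(us|ms|s|m|h)\b"

SAMPLE = (
    "Latency Distribution 50% 250.00us 75% 491.00us 90% 700.00us 99% 5.80ms "
    "22464657 requests in 30.00s, 17.76GB read "
    "Requests/sec: 748868.53 Transfer/sec: 606.33MB"
)


def parse_wrk_output(text):
    """Extract the latency distribution and throughput from a wrk report."""
    latency = {
        float(pct): float(value) * _TO_MS[unit]
        for pct, value, unit in re.findall(r"(\d+(?:\.\d+)?)%\s+" + _TIME, text)
    }
    rps = re.search(r"Requests/sec:\s+(\d+(?:\.\d+)?)", text)
    total = re.search(r"(\d+) requests in " + _TIME, text)
    return {
        "latency_ms": latency,
        "requests_per_sec": float(rps.group(1)) if rps else None,
        "total_requests": int(total.group(1)) if total else None,
    }


if __name__ == "__main__":
    print(parse_wrk_output(SAMPLE))
```

The "+/- Stdev" percentages in the Thread Stats rows are ignored on purpose, because the pattern only matches a percentile that is immediately followed by a time value.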

3. go-wrk

Installation

Prerequisite:

- go language: https://golang.org/doc/install

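go get github.com/tsliwowicz/go-wrk

This downloads and compiles go-wrk and places the binary under your $GOPATH/bin directory. Recent Go releases (1.18 and later) no longer build binaries with go get; with those, use go install github.com/tsliwowicz/go-wrk@latest instead.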

Command line parameters:

./go-wrk -help

Usage: go-wrk <options> <url>

Options:

-H header line, joined with ';' (Default )

-M HTTP method (Default GET)

-T Socket/request timeout in ms (Default 1000)

-body request body string or @filename (Default )

-c Number of goroutines to use (concurrent connections) (Default 10)

-ca CA file to verify peer against (SSL/TLS) (Default )

-cert CA certificate file to verify peer against (SSL/TLS) (Default )

-d Duration of test in seconds (Default 10)

-f Playback file name (Default <empty>)

-help Print help (Default false)

-host Host Header (Default )

-http Use HTTP/2 (Default true)

-key Private key file name (SSL/TLS) (Default )

-no-c Disable Compression - Prevents sending the "Accept-Encoding: gzip" header (Default false)

-no-ka Disable KeepAlive - prevents re-use of TCP connections between different HTTP requests (Default false)

-redir Allow Redirects (Default false)

-v Print version details (Default false)

Basic use

./go-wrk -c 80 -d 5 http://api.example.com/endpoint

This runs a benchmark for 5 seconds, using 80 goroutines (connections).

Gather the results

    Running 10s test @ http://api.example.com/ping
      80 goroutine(s) running concurrently
    142470 requests in 4.949028953s, 19.57MB read
    Requests/sec:       28787.47
    Transfer/sec:       3.95MB
    Avg Req Time:       0.0347ms
    Fastest Request:    0.0340ms
    Slowest Request:    0.0421ms
    Number of Errors:   0

4. wrk2

wrk2 is wrk modified to produce a constant throughput load and accurate latency details to the high 9s (i.e. it can produce an accurate 99.9999%'ile when run long enough). In addition to wrk's arguments, wrk2 takes a throughput argument (in total requests per second) via either the --rate or -R parameter (default is 1000).

Installation

Prerequisite

gcc compiler (compiling it from source, example below on Debian Linux)

    sudo apt install wget libssl-dev unzip libz-dev
    wget https://codeload.github.com/giltene/wrk2/zip/refs/heads/master
    mv master master.zip; unzip master.zip; rm master.zip; cd wrk2-master/
    make

Command line parameters:

./wrk

Basic use

./wrk -t2 -c100 -d30s -R2000 --latency https://api.example.com/ping

This runs a benchmark for 30 seconds, using 2 threads, keeping 100 HTTP connections open, and a constant throughput of 2000 requests per second (total, across all connections combined). It's important to note that wrk2 extends the initial calibration period to 10 seconds (from wrk's 0.5 second), so runs shorter than 10-20 seconds may not present useful information.

Gather the results

    Running 30s test @ https://api.example.com/ping
      2 threads and 100 connections
      Thread calibration: mean lat.: 10087 usec, rate sampling interval: 22 msec
      Thread calibration: mean lat.: 10139 usec, rate sampling interval: 21 msec
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency     6.60ms    1.92ms  12.50ms   68.46%
        Req/Sec     1.04k     1.08k    2.50k    72.79%
      Latency Distribution (HdrHistogram - Recorded Latency)
       50.000%    6.67ms
       75.000%    7.78ms
       90.000%    9.14ms
       99.000%   11.18ms
       99.900%   12.30ms
       99.990%   12.45ms
       99.999%   12.50ms
      100.000%   12.50ms

      Detailed Percentile spectrum:
     Value  Percentile  TotalCount  1/(1-Percentile)

     0.921  0.000000      1      1.00
     4.053  0.100000   3951      1.11
     4.935  0.200000   7921      1.25
     5.627  0.300000  11858      1.43
     6.179  0.400000  15803      1.67
     6.671  0.500000  19783      2.00
     6.867  0.550000  21737      2.22
     7.079  0.600000  23733      2.50
     7.287  0.650000  25698      2.86
     7.519  0.700000  27659      3.33
     7.783  0.750000  29644      4.00
     7.939  0.775000  30615      4.44
     8.103  0.800000  31604      5.00
     8.271  0.825000  32597      5.71
     8.503  0.850000  33596      6.67
     8.839  0.875000  34571      8.00
     9.015  0.887500  35070      8.89
     9.143  0.900000  35570     10.00
     9.335  0.912500  36046     11.43
     9.575  0.925000  36545     13.33
     9.791  0.937500  37032     16.00
     9.903  0.943750  37280     17.78
    10.015  0.950000  37543     20.00
    10.087  0.956250  37795     22.86
    10.167  0.962500  38034     26.67
    10.279  0.968750  38268     32.00
    10.343  0.971875  38390     35.56
    10.439  0.975000  38516     40.00
    10.535  0.978125  38636     45.71
    10.647  0.981250  38763     53.33
    10.775  0.984375  38884     64.00
    10.887  0.985938  38951     71.11
    11.007  0.987500  39007     80.00
    11.135  0.989062  39070     91.43
    11.207  0.990625  39135    106.67
    11.263  0.992188  39193    128.00
    11.303  0.992969  39226    142.22
    11.335  0.993750  39255    160.00
    11.367  0.994531  39285    182.86
    11.399  0.995313  39319    213.33
    11.431  0.996094  39346    256.00
    11.455  0.996484  39365    284.44
    11.471  0.996875  39379    320.00
    11.495  0.997266  39395    365.71
    11.535  0.997656  39408    426.67
    11.663  0.998047  39423    512.00
    11.703  0.998242  39431    568.89
    11.743  0.998437  39439    640.00
    11.807  0.998633  39447    731.43
    12.271  0.998828  39454    853.33
    12.311  0.999023  39463   1024.00
    12.327  0.999121  39467   1137.78
    12.343  0.999219  39470   1280.00
    12.359  0.999316  39473   1462.86
    12.375  0.999414  39478   1706.67
    12.391  0.999512  39482   2048.00
    12.399  0.999561  39484   2275.56
    12.407  0.999609  39486   2560.00
    12.415  0.999658  39489   2925.71
    12.415  0.999707  39489   3413.33
    12.423  0.999756  39491   4096.00
    12.431  0.999780  39493   4551.11
    12.431  0.999805  39493   5120.00
    12.439  0.999829  39495   5851.43
    12.439  0.999854  39495   6826.67
    12.447  0.999878  39496   8192.00
    12.447  0.999890  39496   9102.22
    12.455  0.999902  39497  10240.00
    12.455  0.999915  39497  11702.86
    12.463  0.999927  39498  13653.33
    12.463  0.999939  39498  16384.00
    12.463  0.999945  39498  18204.44
    12.479  0.999951  39499  20480.00
    12.479  0.999957  39499  23405.71
    12.479  0.999963  39499  27306.67
    12.479  0.999969  39499  32768.00
    12.479  0.999973  39499  36408.89
    12.503  0.999976  39500  40960.00
    12.503  1.000000  39500       inf
    #[Mean    =        6.602, StdDeviation   =        1.919]
    #[Max     =       12.496, Total count    =        39500]
    #[Buckets =           27, SubBuckets     =         2048]
    ----------------------------------------------------------
      60018 requests in 30.00s, 19.81MB read
    Requests/sec:   2000.28
    Transfer/sec:    676.18KB
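The detailed percentile spectrum is the most useful part of this report when you validate a latency target. The sketch below shows one way to read it programmatically. The helper names are hypothetical, and the values are assumed to be in milliseconds, which is consistent with the summary lines of the same report.

```python
EXCERPT = (
    "Detailed Percentile spectrum: Value Percentile TotalCount 1/(1-Percentile) "
    "0.921 0.000000 1 1.00 6.671 0.500000 19783 2.00 9.143 0.900000 35570 10.00 "
    "11.207 0.990625 39135 106.67 12.503 1.000000 39500 inf "
    "#[Mean = 6.602, StdDeviation = 1.919]"
)


def parse_percentile_spectrum(text):
    """Return (value_ms, percentile, total_count) rows from a wrk2 report."""
    tokens = text.split()
    if "1/(1-Percentile)" not in tokens:
        raise ValueError("percentile spectrum header not found")
    start = tokens.index("1/(1-Percentile)") + 1
    rows = []
    # Every row has four columns; the last one (1/(1-Percentile)) is ignored.
    for i in range(start, len(tokens) - 3, 4):
        value, pct, count, _inverse = tokens[i:i + 4]
        try:
            rows.append((float(value), float(pct), int(count)))
        except ValueError:
            break  # reached the "#[Mean = ..." footer
    return rows


def latency_at(rows, percentile):
    """Latency of the first spectrum row at or above the given percentile."""
    for value, pct, _count in rows:
        if pct >= percentile:
            return value
    raise ValueError("percentile not covered by the spectrum")


if __name__ == "__main__":
    spectrum = parse_percentile_spectrum(EXCERPT)
    print("p99 is at most", latency_at(spectrum, 0.99), "ms")
```

Because the spectrum is sampled at fixed steps, latency_at returns the value of the nearest row at or above the requested percentile, so it is a slightly conservative reading.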

5. Vegeta

Vegeta is a versatile HTTP load testing tool built out of a need to drill HTTP services with a constant request rate. It can be used both as a command line utility and a library. It can generate graphical reports.

Installation

You can get the pre-compiled binaries here.

Usage

Usage: vegeta [global flags] <command> [command flags]

global flags:

-cpus int

Number of CPUs to use (defaults to the number of CPUs you have)

-profile string

Enable profiling of [cpu, heap]

-version

Print version and exit

attack command:

-body string

Requests body file

-cert string

TLS client PEM encoded certificate file

-chunked

Send body with chunked transfer encoding

-connections int

Max open idle connections per target host (default 10000)

-duration duration

Duration of the test [0 = forever]

-format string

Targets format [http, json] (default "http")

-h2c

Send HTTP/2 requests without TLS encryption

-header value

Request header

-http2

Send HTTP/2 requests when supported by the server (default true)

-insecure

Ignore invalid server TLS certificates

-keepalive

Use persistent connections (default true)

-key string

TLS client PEM encoded private key file

-laddr value

Local IP address (default 0.0.0.0)

-lazy

Read targets lazily

-max-body value

Maximum number of bytes to capture from response bodies. [-1 = no limit] (default -1)

-max-workers uint

Maximum number of workers (default 18446744073709551615)

-name string

Attack name

-output string

Output file (default "stdout")

-proxy-header value

Proxy CONNECT header

-rate value

Number of requests per time unit [0 = infinity] (default 50/1s)

-redirects int

Number of redirects to follow. -1 will not follow but marks as success (default 10)

-resolvers value

List of addresses (ip:port) to use for DNS resolution. Disables use of local system DNS. (comma separated list)

-root-certs value

TLS root certificate files (comma separated list)

-targets string

Targets file (default "stdin")

-timeout duration

Requests timeout (default 30s)

-unix-socket string

Connect over a unix socket. This overrides the host address in target URLs

-workers uint

Initial number of workers (default 10)

encode command:

-output string

Output file (default "stdout")

-to string

Output encoding [csv, gob, json] (default "json")

plot command:

-output string

Output file (default "stdout")

-threshold int

Threshold of data points above which series are downsampled. (default 4000)

-title string

Title and header of the resulting HTML page (default "Vegeta Plot")

report command:

-buckets string

Histogram buckets, e.g.: "[0,1ms,10ms]"

-every duration

Report interval

-output string

Output file (default "stdout")

-type string

Report type to generate [text, json, hist[buckets], hdrplot] (default "text")

A good write-up to get started with load testing using Vegeta is available here.
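Vegeta reads its requests from a targets file (the -targets flag above, in the default "http" format). The sketch below generates such a file for the mock endpoints described earlier. The helper name is hypothetical, and the layout (a request line, optional header lines, and a blank line between targets) follows Vegeta's documented http target format.

```python
def vegeta_targets(requests):
    """Render (method, url, headers) tuples in Vegeta's "http" target format."""
    blocks = []
    for method, url, headers in requests:
        lines = [f"{method.upper()} {url}"]
        lines += [f"{name}: {value}" for name, value in headers.items()]
        blocks.append("\n".join(lines))
    # Targets are separated by a blank line.
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    targets = vegeta_targets([
        ("GET", "http://127.0.0.1:8000/resource/1", {"X-Delay": "10ms"}),
        ("GET", "http://127.0.0.1:8000/delay/5ms", {}),
    ])
    with open("targets.txt", "w", encoding="utf-8") as handle:
        handle.write(targets)
```

You could then run the test with the flags listed above, for example: vegeta attack -targets=targets.txt -rate=50/1s -duration=30s | vegeta report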

Linux Network Performance Parameters

The best starting point for a deep dive is here. You should at least make yourself aware of its content and pay special attention to its "how to monitor" section.

Tools with graphical interfaces

1. Gatling

Start using it straight away with the cheat sheet here. A quickstart is available here and the instructions on the scenario definition are here. A good walkthrough of its features is in this article, which will go through the details of the native graphical interface.

2. Locust.io

If you are interested in a single test instance you can read this section, otherwise you can jump directly to the section on how to deploy locust.io into a GKE cluster. Beforehand you should still learn how to run locust in a distributed environment here.

Installation

Install Python 3.6 or later following the instructions here.

Locust is installed using pip.

pip3 install locust

Validate the installation with

locust -v

Obviously you can also run Locust directly in a Docker container, instead of installing it yourself. This page explains how.

Use

This locust documentation page explains how to increase the load generator performance by swapping the HTTP Client used by locust. Be aware of the compatibility caveats as well.

3. Tsung

Tsung is written in Erlang. It is very versatile and also supports many non-HTTP protocols, so strictly speaking it is not only a tool for API testing.

Installation

Installation documentation is available here - you will need to compile it from source.

Use

It can generate significant load, but the tests generally take more time to set up. Instructions are provided here. It produces granular test data and statistics for reporting, including in graphical form, as described here.

Generate test traffic using GKE

1. Distributed Locust.io

Distributed Load testing in Google Cloud using GKE

2. DistGatling (Gatling on Kubernetes) with UI

3. Gatling on GKE

Gatling on a GKE cluster leveraging DistGatling to test an Apigee hybrid runtime cluster

A. External resources

Further reading

- Your Load Generator is Probably Lying to You - Take the Red Pill and Find Out Why

- How to run a really big load (single node performance tuning) - when your budget is tight

Thanks Nicola, and in particular thanks for going into detail on coordinated omission.. This is a really important topic for those running performance tests
