Build an end-to-end data to AI solution on Google Cloud with BigQuery and Vertex AI

For many organizations today, there's still a barrier between data and machine learning that slows down innovation. Data can be large, multi-formatted, spread across different places, and hard to discover. AI/ML systems are often treated as separate from, and siloed away from, an organization's data warehouses and data lakes, which slows the pace at which the organization can innovate with AI/ML.

In this article, we’ll discuss how to build an end-to-end data to AI solution on Google Cloud, including a practical example of a real-time fraud detection system and the architecture behind it. We’ll also explore how to train, deploy, and monitor machine learning models in production.

This article is based on a recent Cloud OnBoard session, From Data to AI with BigQuery and Vertex AI, which you can register to watch on demand.

If you have any questions, please leave a comment below and someone from the Community or Google Cloud team will be happy to help.

- Introduction to data to AI on Google Cloud

- Data engineering

- Data analysis

- Feature engineering

- Model training

- Model deployment

- MLOps

- Real-time fraud detection scenario

- Data to AI resources

Introduction to data to AI on Google Cloud

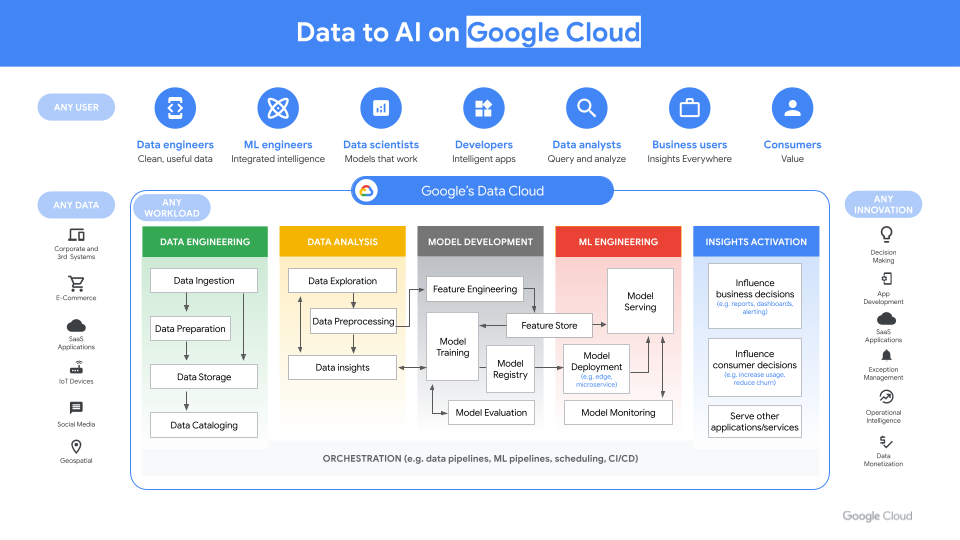

First, we need to have a common understanding of terminology and how data flows from raw data to machine learning and then to downstream insights and activations.

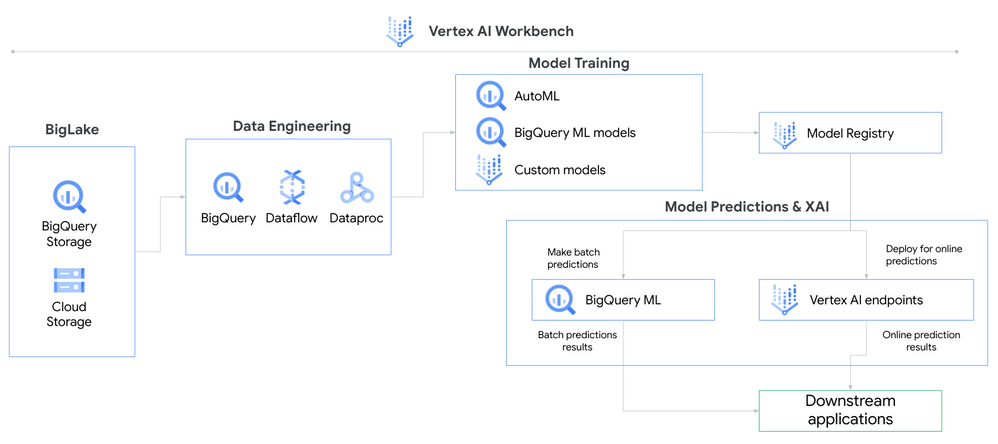

As the diagram shows, data goes through several stages in a data to AI solution: data engineering, data analysis, feature engineering, model training, model deployment, and MLOps. Let’s take a closer look at each of these stages and the Google Cloud products you can use to enable each one.

Data engineering

The first step in building an end-to-end data to AI solution is data engineering: ingesting, processing, and storing data.

This can be done using Google Cloud’s Data Analytics products, including:

- Dataflow, a unified stream and batch data processing solution that’s serverless, fast, and cost-effective

- BigQuery, a fully managed, petabyte-scale data warehouse that makes it easy to analyze large datasets of structured, semi-structured, and unstructured data

You can use Pub/Sub, Google Cloud’s messaging service, together with BigQuery to ingest data from a variety of sources, including on-premises systems, cloud-based applications, and real-time streaming data. You can then process and transform the data, for example with Dataflow, using techniques such as cleaning, filtering, and aggregating. Finally, you can store the data in a system suitable for machine learning, such as a relational database, a NoSQL database, or a data warehouse like BigQuery.
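To make the cleaning, filtering, and aggregating steps concrete, here is a minimal plain-Python sketch over a few in-memory transaction records. The record fields (`tx_id`, `customer_id`, `amount`) are hypothetical, and in a real pipeline this logic would typically run in Dataflow or as SQL in BigQuery rather than in a Python loop.

```python
from collections import defaultdict

# Hypothetical raw records, standing in for messages ingested via Pub/Sub.
raw_transactions = [
    {"tx_id": "t1", "customer_id": "c1", "amount": "25.50"},
    {"tx_id": "t2", "customer_id": "c1", "amount": "bad-value"},
    {"tx_id": "t3", "customer_id": "c2", "amount": "130.00"},
    {"tx_id": "t1", "customer_id": "c1", "amount": "25.50"},  # redelivered duplicate
    {"tx_id": "t4", "customer_id": "c2", "amount": "-5.00"},
]


def clean(records):
    """Cleaning: parse amounts, skip unparseable rows, de-duplicate on tx_id."""
    seen = set()
    for rec in records:
        if rec["tx_id"] in seen:
            continue
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        seen.add(rec["tx_id"])
        yield {**rec, "amount": amount}


def keep_valid(records):
    """Filtering: keep only positive amounts."""
    return (r for r in records if r["amount"] > 0)


def total_by_customer(records):
    """Aggregating: total spend per customer."""
    totals = defaultdict(float)
    for r in records:
        totals[r["customer_id"]] += r["amount"]
    return dict(totals)


summary = total_by_customer(keep_valid(clean(raw_transactions)))
print(summary)  # {'c1': 25.5, 'c2': 130.0}
```

De-duplication matters in practice because a messaging system such as Pub/Sub can deliver the same message more than once.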

Data analysis

Once you’ve ingested, processed, and stored your data, you can begin data analysis (exploring and understanding your data) before getting started with machine learning models.

BigQuery has a built-in query editor that lets you run SQL directly in BigQuery. You can also use Looker, a business intelligence solution for analyzing, exploring, and visualizing your data, or Vertex AI Workbench, a Jupyter notebook-based development environment whose BigQuery and Cloud Storage integrations let you access and explore your data from within a notebook.

Once you’ve explored the data, identified patterns, and understood the relationships between different variables, you can use this information to choose the features you want to use for machine learning, which brings us to feature engineering.
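As a small illustration of this kind of exploration, the sketch below compares a hypothetical transaction amount across fraudulent and legitimate examples and computes the Pearson correlation between amount and the fraud label. The data is made up; in practice you would run equivalent queries in BigQuery or in a Vertex AI Workbench notebook.

```python
from statistics import mean, pstdev

# Hypothetical labeled sample: (transaction amount, is_fraud flag).
rows = [(20.0, 0), (35.0, 0), (15.0, 0), (900.0, 1), (40.0, 0), (750.0, 1)]
amounts = [a for a, _ in rows]
labels = [y for _, y in rows]


def pearson(xs, ys):
    """Pearson correlation: population covariance divided by both std devs."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    return cov / (pstdev(xs) * pstdev(ys))


# Average amount per class, then the strength of the linear relationship.
avg_by_label = {label: mean(a for a, y in rows if y == label) for label in (0, 1)}
print(avg_by_label)  # {0: 27.5, 1: 825.0}
print(round(pearson(amounts, labels), 3))
```

A strong correlation like this would suggest that amount-based features are worth engineering.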

Feature engineering

Once you’ve explored and understood your data, you can begin to prepare it for machine learning. This process is known as feature engineering (also known as data preprocessing), and it involves converting data into features (measurable properties of your input data) that can be used to train a machine learning model. You can think of feature engineering as helping the model understand the data set in the same way you do.
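To illustrate what converting data into features can look like, the sketch below derives a few per-customer features (transaction count, average and maximum amount) from raw transactions, plus one derived feature comparing a new transaction to the customer’s history. The feature names and data are hypothetical, not taken from FraudFinder.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical historical transactions: (customer_id, amount).
history = [("c1", 20.0), ("c1", 30.0), ("c2", 500.0), ("c1", 25.0), ("c2", 700.0)]


def customer_features(transactions):
    """Aggregate raw transactions into per-customer features."""
    amounts = defaultdict(list)
    for customer_id, amount in transactions:
        amounts[customer_id].append(amount)
    return {
        customer_id: {
            "tx_count": len(values),     # how active the customer is
            "avg_amount": mean(values),  # typical spend
            "max_amount": max(values),   # largest spend seen so far
        }
        for customer_id, values in amounts.items()
    }


def amount_ratio(features, customer_id, amount):
    """Derived feature: a new amount relative to the customer's average spend."""
    return amount / features[customer_id]["avg_amount"]


features = customer_features(history)
print(features["c1"])                       # {'tx_count': 3, 'avg_amount': 25.0, 'max_amount': 30.0}
print(amount_ratio(features, "c1", 100.0))  # 4.0
```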

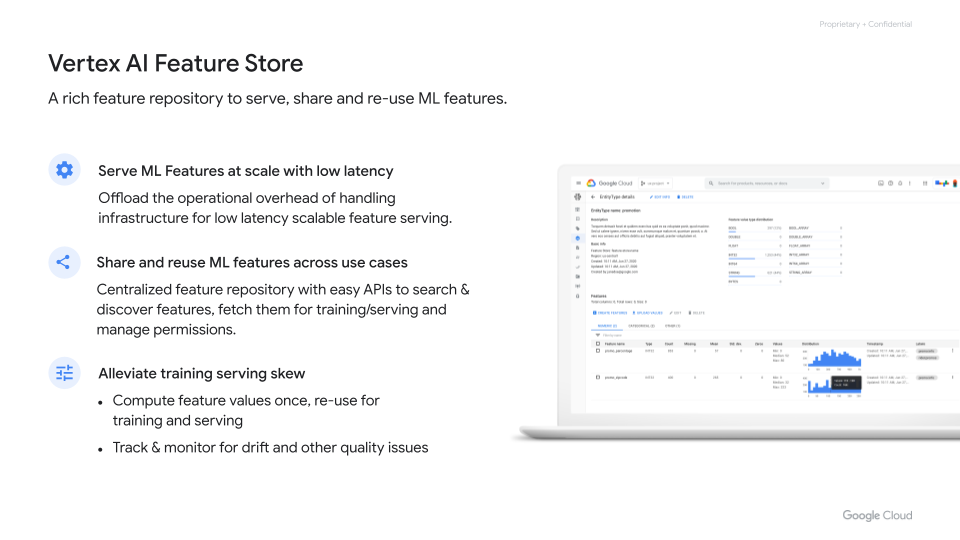

Vertex AI Feature Store is a fully managed solution that provides a central repository for organizing, storing, and serving your features. With a central feature store, you can focus on the feature computation logic instead of worrying about the challenges of deploying features into production. End result? You can more efficiently share, discover, and re-use ML features at scale, which can increase the velocity of developing and deploying new ML applications.

Feature serving is the process of exporting stored feature values for downstream training, re-training, or inference. Vertex AI Feature Store offers two methods for serving features: batch and online.

- Batch (offline) serving provides high-throughput access to large volumes of data for offline processing, such as model training or batch prediction.

- Online serving provides low-latency retrieval of small batches of data for real-time processing, such as online prediction.
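The difference between the two serving modes can be sketched with a toy in-memory store. `InMemoryFeatureStore` and its methods are hypothetical and are not the Vertex AI Feature Store API: batch serving exports every stored value (for example, to assemble a training set), while online serving returns only the latest values for a single entity.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryFeatureStore:
    """Toy stand-in for a feature store (NOT the Vertex AI Feature Store API)."""

    # entity_id -> list of (timestamp, feature values)
    rows: dict = field(default_factory=dict)

    def ingest(self, entity_id, timestamp, values):
        """Store one timestamped set of feature values for an entity."""
        self.rows.setdefault(entity_id, []).append((timestamp, values))

    def batch_serve(self):
        """Offline serving: export every stored value, e.g. to build a training set."""
        return [
            (entity_id, ts, values)
            for entity_id, history in self.rows.items()
            for ts, values in history
        ]

    def online_serve(self, entity_id):
        """Online serving: return only the latest values for one entity, or None."""
        history = self.rows.get(entity_id)
        if not history:
            return None
        return max(history, key=lambda row: row[0])[1]


store = InMemoryFeatureStore()
store.ingest("c1", 1, {"avg_amount": 20.0})
store.ingest("c1", 2, {"avg_amount": 25.0})
store.ingest("c2", 1, {"avg_amount": 600.0})
training_rows = store.batch_serve()   # all three stored rows
latest_c1 = store.online_serve("c1")  # {'avg_amount': 25.0}
```

A managed feature store adds what this toy omits, such as persistence, point-in-time lookups, and scaling, but the read patterns are the same.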

Model training

Once you’ve prepared your data, you can start to train a machine learning model. To train machine learning models on Google Cloud, there are three options:

- AutoML: Automated machine learning. Create and train models on image, tabular, text, and video datasets without writing code.

- BigQuery ML: Train models directly in BigQuery using SQL, then register them directly in Vertex AI Model Registry to manage them alongside your other models.

- Custom training: Bring your own code and train models using OSS frameworks (scikit-learn, TensorFlow, PyTorch, XGBoost, etc.), or bring your own container and train using Vertex AI Training.

Regardless of whether you choose AutoML, BigQuery ML, or custom training, you can use Vertex AI Model Registry as a central repository to manage and govern the lifecycle of your machine learning models.
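For the BigQuery ML path, training and registration can happen in a single SQL statement. The sketch below only builds that statement as a string. It assumes a logistic regression model, and the project, dataset, table, label column (`is_fraud`), and model IDs are hypothetical placeholders. `MODEL_REGISTRY` and `VERTEX_AI_MODEL_ID` are `CREATE MODEL` options in BigQuery ML, but check the current BigQuery ML reference before relying on the exact option list.

```python
def create_model_sql(project, dataset, model, training_table, label_col, vertex_model_id):
    """Build a BigQuery ML CREATE MODEL statement that also registers the model
    in Vertex AI Model Registry. All identifiers are placeholders; pass only
    trusted values, since they are interpolated directly into the SQL text."""
    return (
        f"CREATE OR REPLACE MODEL `{project}.{dataset}.{model}`\n"
        "OPTIONS (\n"
        "  model_type = 'LOGISTIC_REG',\n"
        f"  input_label_cols = ['{label_col}'],\n"
        "  model_registry = 'VERTEX_AI',\n"
        f"  vertex_ai_model_id = '{vertex_model_id}'\n"
        ") AS\n"
        f"SELECT * FROM `{project}.{dataset}.{training_table}`"
    )


sql = create_model_sql(
    "my-project", "fraud_demo", "fraud_model", "training_data", "is_fraud", "fraud-model"
)
print(sql)
```

To run it, you could paste the statement into the BigQuery editor or submit it with the `google-cloud-bigquery` client, for example `bigquery.Client().query(sql).result()`.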

Model deployment

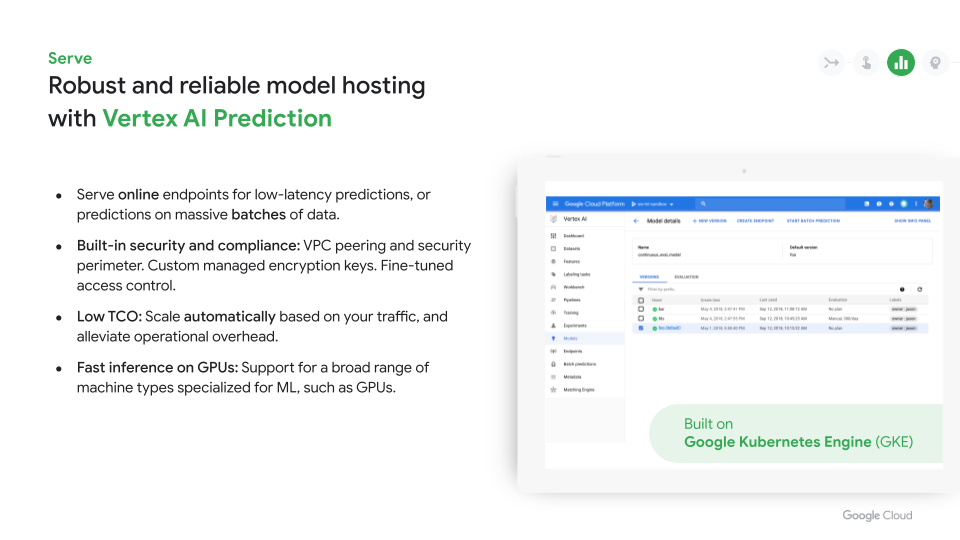

Once you’ve trained a machine learning model, you can use Vertex AI Prediction to serve your models and start getting predictions.

There are two types of predictions: online and batch.

Online predictions are synchronous requests made to a model endpoint. Use online predictions when you are making requests in response to application input or in situations that require timely inference.

You must deploy a model to an endpoint before that model can be used to serve online predictions. Deploying a model associates physical resources with the model so it can serve online predictions with low latency.

You can deploy more than one model to an endpoint, and you can deploy a model to more than one endpoint. For more information about options and use cases for deploying models, see Considerations for deploying models.

On the other hand, batch predictions are asynchronous requests. You request batch predictions directly from the model resource without needing to deploy the model to an endpoint. Use batch predictions when you don't require an immediate response and want to process accumulated data by using a single request.

Note that BigQuery ML only supports batch predictions for your models. To get online predictions, you can train your models in BigQuery ML and deploy them to Vertex AI endpoints through Vertex AI Model Registry.
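A batch prediction with a BigQuery ML model is itself a query. The sketch below builds an `ML.PREDICT` query string. For a classification model such as logistic regression, `ML.PREDICT` returns `predicted_<label>` and `predicted_<label>_probs` columns alongside the input columns. The table names and the `tx_id` column are hypothetical.

```python
def batch_predict_sql(project, dataset, model, input_table, label_col):
    """Build an ML.PREDICT query that scores every row of input_table in one request.
    Identifiers are placeholders and are interpolated directly into the SQL text."""
    return (
        f"SELECT tx_id, predicted_{label_col}, predicted_{label_col}_probs\n"
        "FROM ML.PREDICT(\n"
        f"  MODEL `{project}.{dataset}.{model}`,\n"
        f"  (SELECT * FROM `{project}.{dataset}.{input_table}`))"
    )


sql = batch_predict_sql("my-project", "fraud_demo", "fraud_model", "new_transactions", "is_fraud")
print(sql)
```

Because the whole input table is scored in one request, this pattern fits the batch use case; for per-transaction scoring, the model would instead be deployed to a Vertex AI endpoint.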

The diagram below provides an overview of the data to AI flow using BigQuery ML models in Vertex AI.

MLOps

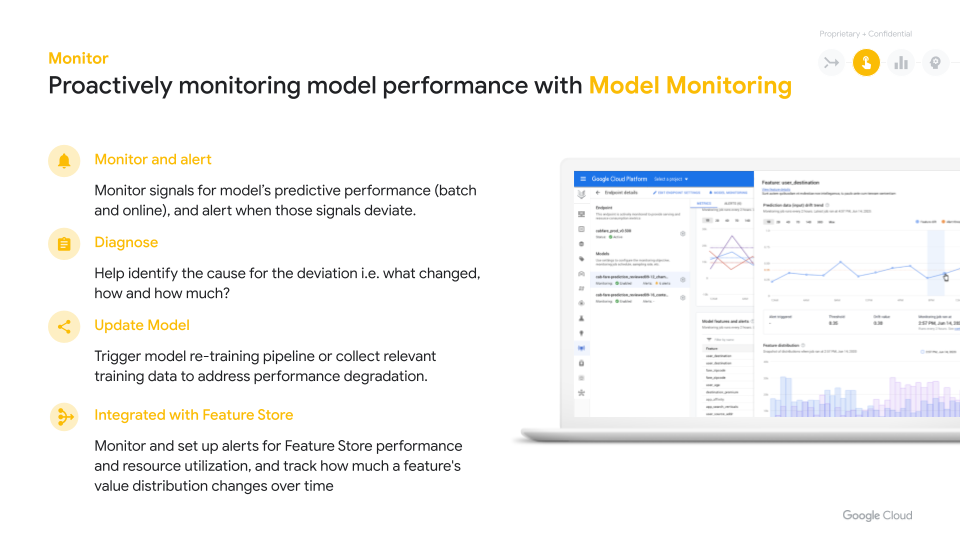

After you start serving your machine learning models, it's essential to verify regularly and proactively that model performance doesn't decay. Monitoring model quality and performance in production has therefore become one of the most important elements of MLOps.

Because models don't operate in a static environment, ML model performance can degrade over time. As the properties of input data change over time, the data starts to deviate from the data that was used for training and evaluating the model.
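To make "deviate from the training data" concrete, here is a minimal drift check that bins one numeric feature and compares the training and serving distributions with Jensen-Shannon divergence. It is similar in spirit to the distribution-distance scores a monitoring service computes, but it is not Vertex AI Model Monitoring’s implementation; the bucket edges, sample values, and alert threshold are hypothetical.

```python
import math


def histogram(values, edges):
    """Fraction of values in each bucket [edges[i], edges[i + 1]).
    Values outside the outermost edges are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            if edges[i] <= v < edges[i + 1]:
                counts[i] += 1
                break
    total = sum(counts) or 1
    return [c / total for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence between two discrete distributions.
    Uses base-2 logs, so the result lies in [0, 1]."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]

    def kl(a, b):
        # Terms with a_i == 0 contribute nothing; b_i > 0 whenever a_i > 0 here.
        return sum(ai * math.log2(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Hypothetical transaction amounts seen at training time vs. in production.
edges = [0, 50, 100, 500, math.inf]
training_amounts = [10, 20, 30, 60, 70, 200]
serving_amounts = [300, 400, 450, 600, 700, 800]

drift_score = js_divergence(histogram(training_amounts, edges), histogram(serving_amounts, edges))
ALERT_THRESHOLD = 0.3  # hypothetical; tune per feature
print(f"drift={drift_score:.3f}, alert={drift_score > ALERT_THRESHOLD}")  # drift=0.730, alert=True
```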

Vertex AI provides a range of solutions to monitor and govern your models, all of which help drive successful and responsible AI deployment. In particular, Vertex AI Model Monitoring offers anomaly and drift detection, reporting, alerting, and recommendation capabilities. Together, these solutions help you ensure that:

- The data that is being collected and used for model training and evaluation is accurate, unbiased, and used appropriately without any data privacy violations

- The models are evaluated and validated against effectiveness and quality measures and fairness indicators, so that they are fit for deployment in production

- The models are interpretable, and their outcomes are explainable (if needed)

- The performance of deployed models is monitored using continuous evaluation and the models’ performance metrics are tracked and reported

- Potential issues in model training or prediction serving are traceable, debuggable, and reproducible

Vertex AI Model Monitoring can be integrated with other Vertex AI services, such as Vertex AI Pipelines, to help you automate deploying and monitoring your models. For example, you can use Vertex AI Pipelines to automate retraining your model when Model Monitoring detects an anomaly.
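The decision logic behind such automation can be sketched as a small function that compares per-feature drift scores with thresholds and calls a retraining hook when any feature drifts. `check_and_trigger` and the hook are hypothetical. In a real setup, the hook might submit a Vertex AI Pipelines run (for example, through the Vertex AI SDK’s `PipelineJob`), but that wiring is not shown here.

```python
from typing import Callable, Dict, List


def check_and_trigger(
    drift_scores: Dict[str, float],
    thresholds: Dict[str, float],
    default_threshold: float,
    trigger_retraining: Callable[[List[str]], None],
) -> List[str]:
    """Return the features whose drift score exceeds their threshold, and call
    the (hypothetical) retraining hook once if any feature drifted."""
    drifted = sorted(
        name
        for name, score in drift_scores.items()
        if score > thresholds.get(name, default_threshold)
    )
    if drifted:
        trigger_retraining(drifted)
    return drifted


# Usage: record hook calls in a list instead of launching a real pipeline.
calls: List[List[str]] = []
result = check_and_trigger(
    {"amount": 0.73, "tx_count": 0.05}, {"amount": 0.3}, 0.1, calls.append
)
print(result, calls)  # ['amount'] [['amount']]
```

Keeping the threshold check separate from the retraining hook makes it easy to test the policy without running a pipeline.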

Real-time fraud detection scenario

Now that we’ve provided an overview of building an end-to-end data to AI solution, let’s look at a real-world example and the architecture behind it.

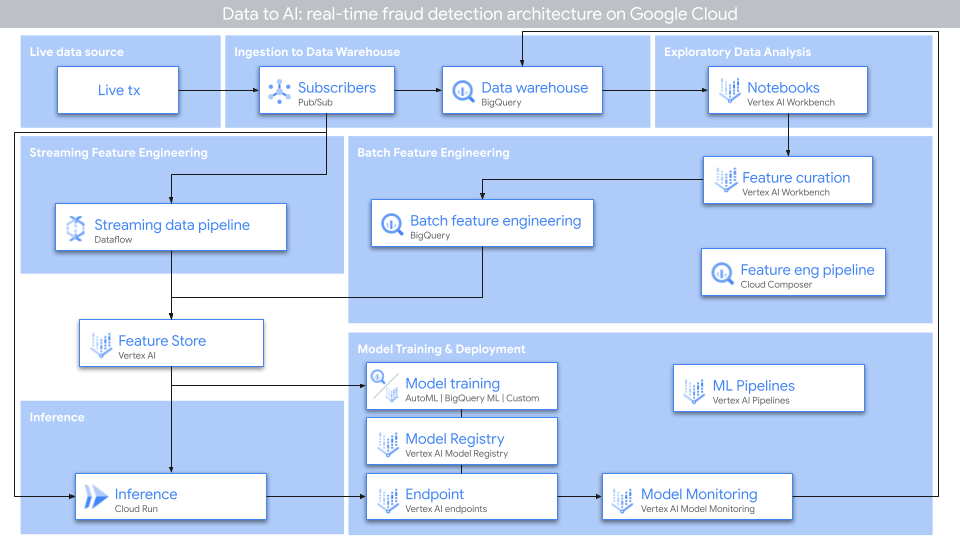

Imagine you’re a data scientist at a bank and you need to create a real-time fraud detection system to ensure the safety and legitimacy of your online bank transactions. The architecture diagram for this scenario shows the workflow behind a real-time fraud detection solution built on Google Cloud, from data to AI. So how does this work?

Data is collected from live transaction logs and ingested into BigQuery via Pub/Sub. The data is then processed and analyzed in Vertex AI Workbench, so you can identify the features to use for prediction. From there, you can choose batch or streaming feature engineering to prepare your data for machine learning. In this case, we’ll use Dataflow for streaming feature engineering, with Vertex AI Feature Store to organize, store, and serve our features.
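Streaming feature engineering usually means aggregating over a moving time window. The sketch below keeps, per customer, the transactions from the last `window_seconds` and recomputes a count and a sum on every event. It is a plain-Python stand-in: Dataflow pipelines are written with Apache Beam, where this would typically be expressed with Beam’s windowing, and the class and feature names here are hypothetical.

```python
from collections import defaultdict, deque


class SlidingWindowFeatures:
    """Per-customer features over the last `window_seconds` of a transaction stream.
    Hypothetical stand-in for streaming aggregation in Dataflow (Apache Beam)."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # customer_id -> deque of (ts, amount)

    def update(self, customer_id, ts, amount):
        """Add one event (assumed to arrive in timestamp order) and return
        features computed over the window (ts - window_seconds, ts]."""
        events = self._events[customer_id]
        events.append((ts, amount))
        # Evict events that have fallen out of the window.
        while events and events[0][0] <= ts - self.window_seconds:
            events.popleft()
        amounts = [a for _, a in events]
        return {"tx_count_window": len(amounts), "amount_sum_window": sum(amounts)}


window = SlidingWindowFeatures(window_seconds=60)
window.update("c1", 0, 10.0)
window.update("c1", 30, 20.0)
print(window.update("c1", 61, 5.0))  # {'tx_count_window': 2, 'amount_sum_window': 25.0}
```

Each returned feature dict is what a pipeline like this would write to the feature store for online serving.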

Using BigQuery ML with Vertex AI Model Registry, we’ll train a machine learning model on this data to predict the probability that a transaction is fraudulent. The model is then deployed to production on a Vertex AI endpoint. At prediction time, online serving supplies the latest feature values to the model, whose predictions flag fraudulent transactions in real time. The system is monitored on an ongoing basis with Vertex AI Model Monitoring and updated as needed to maintain and improve its performance.
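The prediction step can be sketched as follows: combine the customer’s online features with features of the incoming transaction, compute a fraud probability, and compare it with a threshold. The sketch assumes a logistic-regression-style model. The article does not state the model type, and the weights, bias, feature names, and 0.5 threshold are all hypothetical.

```python
import math

# Hypothetical model parameters; a real model would be trained in BigQuery ML.
WEIGHTS = {"amount_ratio": 1.2, "tx_count_window": 0.4}
BIAS = -4.0


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fraud_probability(features):
    """Logistic-regression score: sigmoid(bias + sum of weight * feature value).
    Missing features default to 0."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return sigmoid(z)


def is_fraud(features, threshold=0.5):
    """Flag the transaction when the predicted probability reaches the threshold."""
    return fraud_probability(features) >= threshold


# Online features for the customer merged with features of the incoming transaction.
typical = {"amount_ratio": 1.0, "tx_count_window": 1}
suspicious = {"amount_ratio": 4.0, "tx_count_window": 5}
print(round(fraud_probability(typical), 3), is_fraud(typical))
print(round(fraud_probability(suspicious), 3), is_fraud(suspicious))
```

The threshold is a business decision: lowering it catches more fraud at the cost of more false alarms.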

To automate this process moving forward, we can use Vertex AI Pipelines to help accelerate retraining, deploying, and monitoring our models.

The GitHub repo for this reference architecture, which we’ve called FraudFinder, includes a series of labs that walk through the full Data to AI journey on Google Cloud using the real-time fraud detection use case.

This is just one example of how Google Cloud can be used to build end-to-end data to AI solutions. Data scientists, data engineers, and machine learning engineers can use Google Cloud to build a variety of AI solutions, including recommender systems, image classification systems, natural language processing systems, and more. See how some of our customers are powering their business use cases using Vertex AI solutions.

Data to AI resources

We hope you found this helpful, as you explore using AI/ML to power your business processes. If you have any questions, please refer to the following resources, or feel free to leave a comment below and someone from the Community or Google Cloud team will be happy to help.

- Cloud OnBoard: From Data to AI with BigQuery and Vertex AI

- FraudFinder GitHub repo

- Vertex AI documentation and resources

- Vertex AI glossary

- Introduction to BigQuery ML

- MLOps: Continuous delivery and automation pipelines in machine learning

- Customer stories using Vertex AI

Special thank you to Polong Lin and Erwin Huizenga for delivering the original content of this article during the Cloud OnBoard: From Data to AI with BigQuery and Vertex AI session.

![Cloud OnBoard: FraudFinder Data to AI Golden Workshop](https://www.googlecloudcommunity.com/gc/image/serverpage/image-id/79599i1C9DEADEBBA3B0B6/image-size/large?v=v2&px=999)