Building event-driven architectures on Google Cloud

Organizations today are collecting data to the tune of hundreds of thousands or even millions of events per second. How can you make sense of all this data for the benefit of your business and customers?

In this article, we explore an event-driven approach to solution design and walk through an example of an event-driven architecture on Google Cloud that lets you act on your data in real time and at scale.

This article is based on a recent session from the 2023 Cloud Technical Series. Register here to watch on demand!

If you have any questions, please leave a comment below and someone from the Community or Google Cloud team will be happy to help.

Why use an event-driven architecture?

An event-driven architecture is a software design pattern that uses events to trigger and communicate between decoupled services or applications, enabling us to derive actionable insights from these events as they happen.

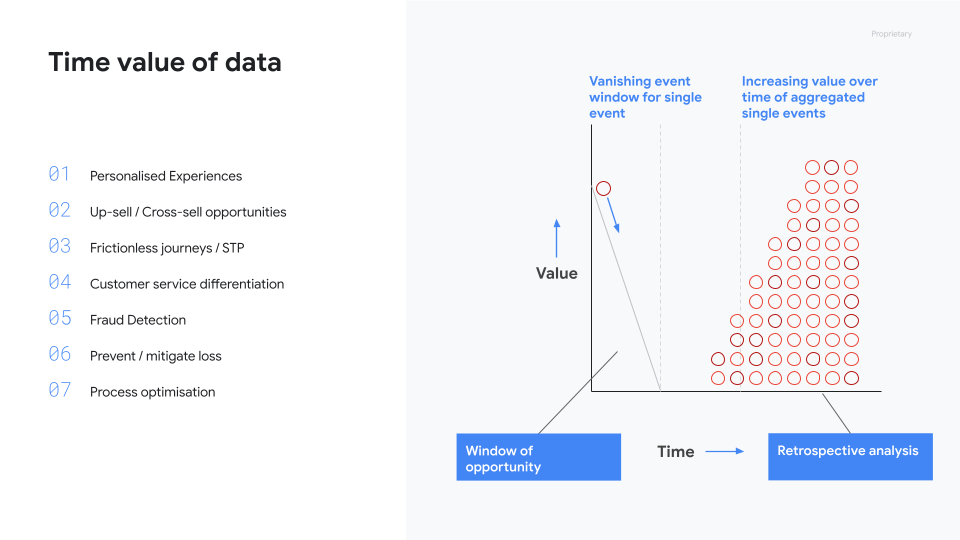

There’s a wide range of opportunities where event-driven solutions can deliver immense value, such as customer experience differentiation, straight-through processing journeys, fraud detection, process optimization, or solutions that help prevent or mitigate loss.

While these opportunities are of high value, there is a limited time window associated with them. As the image below illustrates, the value of insights about an event degrades over time.

Event-driven architectures address the vanishing window of opportunity presented by these events by helping us derive actionable insights from the events as they happen.

Requirements of an event-driven architecture

Traditionally, event-driven analytics solutions have been perceived as complex and challenging to implement. They need to process events at scale, scale the entire solution up and down seamlessly as the event rate changes, perform complex event processing on streaming data, and serve machine learning models and features at low latency for stream analytics.

For organizations to effectively utilize an event-driven architecture, they need:

- A robust ingestion service to reliably ingest data/event streams at high volume and velocity and deliver them to multiple subscribers.

- A serverless architecture that can seamlessly scale up and down as the number of events per second rises and falls over time. Elasticity is key to real-time solutions, as floods of data can hit unexpectedly, and tuning and provisioning for spikes is resource-intensive.

- Unified stream and batch processing to ease the architectural burden of a traditional approach that requires maintaining separate pathways for batch processing and stream processing (i.e., the Lambda architecture).

- A comprehensive set of analysis tools. Stitching together disparate technologies is difficult; stream analytics needs to integrate with the data warehouse, machine learning, and online applications.

- Flexibility for users. Platforms that build on users' existing skill sets deliver results sooner.

Let's look at how event-driven architecture patterns on Google Cloud address these challenges and requirements.

Event-driven architecture with Google Cloud

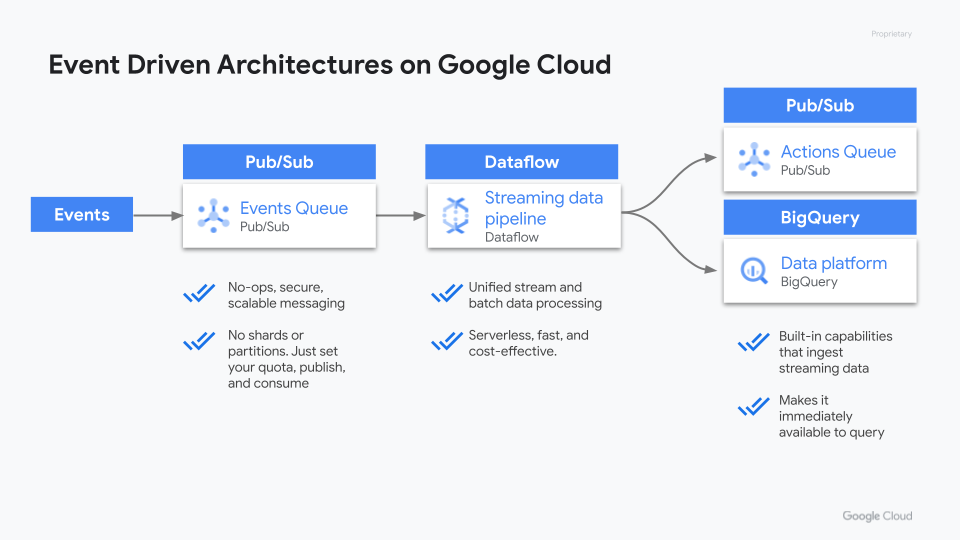

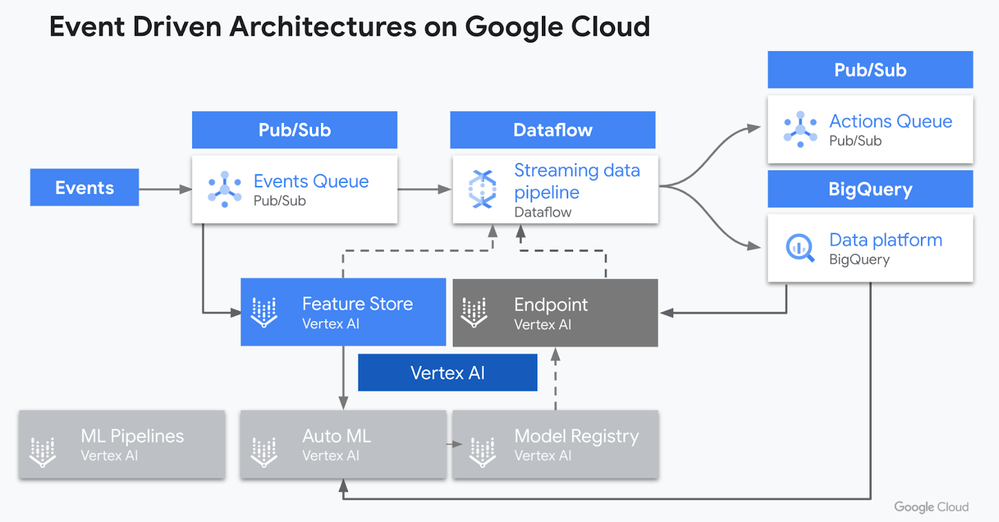

Google Cloud offers enterprise-class solutions to meet your event-driven architecture requirements, while reducing the complexity and operational overhead. The following image represents a typical event-driven architecture pattern that’s implemented for stream processing on Google Cloud.

We have the events on the left feeding into an asynchronous messaging service called Pub/Sub. Dataflow processes these events, performing stream transformations and windowed calculations. The processed data and actionable insights are written to two sinks (event consumers): BigQuery, which acts as the data platform, and another Pub/Sub topic that acts as our actions queue.

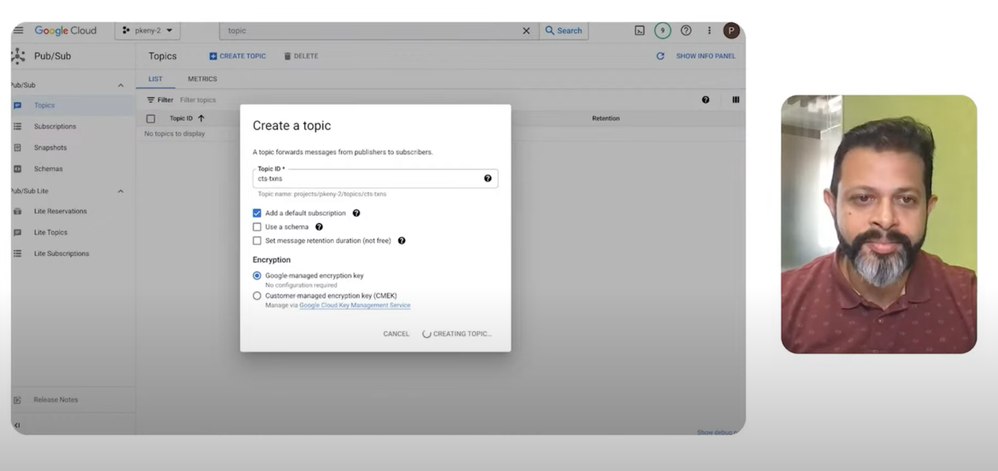
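The windowed calculation in this pipeline can be illustrated with a minimal sketch in plain Python. It is not Dataflow or Apache Beam code. The event shape `(account_id, event_time_seconds, amount)`, the 60-second window size, and the function names are assumptions made for illustration; the idea shown is fixed (tumbling) windows, where each event falls into exactly one window based on its timestamp.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # assumed window size, not taken from the article


def window_start(event_time: int, size: int = WINDOW_SECONDS) -> int:
    """Return the start of the fixed window that contains event_time."""
    return event_time - (event_time % size)


def sum_per_window(events, size: int = WINDOW_SECONDS) -> dict:
    """Total the amount per (account, window start) pair.

    Each event is a hypothetical (account_id, event_time_seconds, amount) tuple.
    """
    totals = defaultdict(float)
    for account_id, event_time, amount in events:
        totals[(account_id, window_start(event_time, size))] += amount
    return dict(totals)


events = [
    ("acct-1", 5, 20.0),
    ("acct-1", 42, 30.0),
    ("acct-1", 61, 5.0),   # lands in the next window
    ("acct-2", 59, 7.5),
]
totals = sum_per_window(events)
```

A managed stream processor also has to handle late and out-of-order events, which this sketch ignores.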

Let’s break down each of these solutions a bit further. With Pub/Sub, there’s no provisioning required: it’s completely serverless, with no shards, partitions, or brokers to manage. You just create a topic, publish, and start consuming. Pub/Sub scales global data delivery automatically from zero to millions of messages per second.
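To make the topic, publish, and consume flow concrete, here is a toy in-memory model of a topic that delivers each message to multiple independent subscriptions. It is a conceptual sketch only and does not use the Pub/Sub client library; the class `InMemoryTopic`, its methods, and the subscription names are hypothetical.

```python
from collections import deque


class InMemoryTopic:
    """Toy stand-in for a topic with independent subscriptions."""

    def __init__(self):
        self._queues = {}

    def subscribe(self, subscription: str) -> None:
        # Each subscription gets its own queue of pending messages.
        self._queues.setdefault(subscription, deque())

    def publish(self, message: dict) -> None:
        # Fan out: every subscription receives its own copy of the message.
        for queue in self._queues.values():
            queue.append(dict(message))

    def pull(self, subscription: str, max_messages: int = 10) -> list:
        # Return up to max_messages pending messages and remove them.
        queue = self._queues.get(subscription, deque())
        batch = []
        while queue and len(batch) < max_messages:
            batch.append(queue.popleft())
        return batch


topic = InMemoryTopic()
topic.subscribe("analytics")
topic.subscribe("alerts")
topic.publish({"txn_id": "t-1", "amount": 42.0})

analytics_batch = topic.pull("analytics")
alerts_batch = topic.pull("alerts")
```

Because each subscription keeps its own queue, one consumer reading a message does not affect what the other consumer sees.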

Dataflow offers unified stream and batch processing that’s also completely serverless, fast, and cost-effective. Importantly, Dataflow separates the logical design of the pipeline from the optimal execution, which allows your team to focus on the business logic, rather than having to manage the infrastructure or clusters. It takes care of autoscaling resources and even dynamically rebalances work across workers in the middle of a job.
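The idea behind unified stream and batch processing can be sketched in plain Python: the business logic is written once and applied to both a bounded input (batch) and an unbounded one (stream). This is an analogy, not Dataflow code; `flag_large`, the threshold, and `endless_stream` are hypothetical names chosen for the example.

```python
from itertools import islice
from typing import Iterable, Iterator


def flag_large(transactions: Iterable[dict], threshold: float = 1000.0) -> Iterator[dict]:
    """Yield each transaction with an added 'large' flag; works lazily on any iterable."""
    for txn in transactions:
        yield {**txn, "large": txn["amount"] >= threshold}


# Bounded input (batch): a list that is fully available up front.
batch = [{"id": 1, "amount": 50.0}, {"id": 2, "amount": 2500.0}]
batch_result = list(flag_large(batch))


def endless_stream() -> Iterator[dict]:
    """Hypothetical unbounded source that never ends."""
    txn_id = 0
    while True:
        txn_id += 1
        yield {"id": txn_id, "amount": 600.0 * txn_id}


# Unbounded input (stream): the same logic, consuming only the first three results.
stream_result = list(islice(flag_large(endless_stream()), 3))
```

Only the source differs between the two runs; the transformation itself is unchanged.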

Dataflow is based on Apache Beam, and one of the important tenets of Beam is interoperability between different SDKs and runners. You can choose among Java, Python, Go, or SQL for developing your pipelines, and among runners such as Spark, Flink, or Dataflow on Google Cloud.

Then with BigQuery, you can ingest data at extremely high volumes and speeds, and the data becomes immediately available for querying.

To see an event-driven architecture in action using Pub/Sub, Dataflow, and BigQuery, register here to watch the “Event-driven Architecture and Analytics” session on demand. Google Cloud Customer Engineer Prasanna Keny demonstrates step by step how to build an event-driven streaming pipeline like the one pictured above, based on a financial transactions scenario.

Streaming analytics

What we have looked at so far is a pipeline that performs stream transformations. When we add stream analytics (i.e., real-time scoring of these events using a machine learning (ML) model), additional considerations come into the picture. Let's consider the following architecture diagram that enhances the one we looked at earlier.

First, we need low-latency ML model serving and the ability to autoscale the model serving infrastructure with the event rate. Here, Vertex AI Endpoints offers you low-latency predictions, the ability to scale automatically based on traffic, model monitoring, model explainability, and more.

Second, the incoming event may not have all the features required by the model. For example, in the case of a transaction fraud detection solution, the incoming transaction may carry only attributes related to the specific transaction, whereas the model may require additional features related to the demographics, behavior, or transaction history of the payer and payee. Therefore, there's a need for a layer that can serve features to the event pipeline at low latency. The Vertex AI Feature Store offers you a managed solution for online serving at scale and the ability to share features across the organization and across multiple ML projects.
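The enrichment step described here can be sketched as a lookup that merges stored features into the raw event before scoring. This is a plain-Python illustration, not the Vertex AI Feature Store API: the `ONLINE_FEATURES` table, the feature names, and the `score` rule (a placeholder standing in for a real model) are all assumptions.

```python
# Hypothetical online feature table keyed by entity ID.
ONLINE_FEATURES = {
    "payer-7": {"payer_txn_count_30d": 12, "payer_avg_amount_30d": 80.0},
    "payee-3": {"payee_chargeback_rate": 0.02},
}


def enrich(event: dict, features: dict = ONLINE_FEATURES) -> dict:
    """Merge the raw event with stored features for its payer and payee."""
    row = dict(event)
    for key in ("payer_id", "payee_id"):
        row.update(features.get(event[key], {}))
    return row


def score(row: dict) -> float:
    """Placeholder rule standing in for a model prediction; not a real model."""
    avg = row.get("payer_avg_amount_30d", 0.0)
    return 1.0 if avg and row["amount"] > 10 * avg else 0.0


event = {"txn_id": "t-9", "payer_id": "payer-7", "payee_id": "payee-3", "amount": 950.0}
row = enrich(event)
risk = score(row)
```

The raw event alone has no notion of the payer's typical spend; the placeholder rule only becomes computable after enrichment.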

Note that the Feature Store itself may need to have streaming updates. Taking the example of the transaction fraud detection solution again, the model may require features associated with transaction patterns in the last few minutes. Vertex AI Feature Store offers you streaming ingestion, enabling real-time updates to feature values.
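As an illustration of a feature that needs streaming updates, the sketch below maintains a per-payer count of transactions in the last few minutes and refreshes it on every event. It is plain Python rather than Feature Store code; the class `RecentTxnCounter`, the 300-second window, and the assumption that events arrive in timestamp order are all choices made for the example.

```python
from collections import defaultdict, deque


class RecentTxnCounter:
    """Tracks, per payer, how many transactions occurred in the last `window` seconds."""

    def __init__(self, window: int = 300):
        self.window = window
        self._times = defaultdict(deque)

    def update(self, payer_id: str, event_time: int) -> int:
        """Record one transaction and return the refreshed feature value.

        Assumes events for a payer arrive in non-decreasing timestamp order.
        """
        times = self._times[payer_id]
        times.append(event_time)
        # Evict timestamps that have fallen out of the window.
        while times and times[0] <= event_time - self.window:
            times.popleft()
        return len(times)


counter = RecentTxnCounter(window=300)
counts = [counter.update("payer-7", t) for t in (0, 100, 250, 310, 700)]
```

Each returned value is what would be written back as the latest feature value for that payer.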

Next, if you observe the architecture diagram above, we're also using the Feature Store to supply data for model training (represented, in this case, by Vertex AI AutoML). This addresses the traditional challenge of training-serving skew. Training-serving skew occurs when the feature data distribution that you use in production differs from the feature data distribution that was used to train your model, resulting in discrepancies between a model's performance during training and its performance in production.

Feature Store also provides point-in-time lookups to fetch historical data for training. With these lookups, you can mitigate data leakage by fetching only the feature values that were available before a prediction and not after.
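A point-in-time lookup can be sketched with a sorted history and a binary search. This is a conceptual example, not the Feature Store API: the `HISTORY` data and `value_as_of` are hypothetical, and the sketch assumes "before a prediction" means strictly earlier than the prediction timestamp.

```python
from bisect import bisect_left

# Hypothetical feature history: (timestamp, value) pairs sorted by timestamp.
HISTORY = {
    "payer-7": [(100, 1), (200, 4), (300, 9)],
}


def value_as_of(entity_id: str, prediction_time: int, history: dict = HISTORY):
    """Return the latest value recorded strictly before prediction_time, else None."""
    rows = history.get(entity_id, [])
    timestamps = [ts for ts, _ in rows]
    # Index of the first row at or after prediction_time; everything before it is eligible.
    idx = bisect_left(timestamps, prediction_time)
    return rows[idx - 1][1] if idx > 0 else None


training_value = value_as_of("payer-7", 250)
```

A training row for a prediction made at time 250 gets the value recorded at 200 and never the later value from 300, which is how leakage from the future is avoided.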

For additional information on event-driven architectures, please refer to the following resources:

- Event-driven architectures (documentation)

- Create an ecommerce streaming pipeline (tutorial)

- Build an end-to-end data to AI solution on Google Cloud with BigQuery and Vertex AI (blog)

- Real-time stream analytics with Dataflow (video)

- Building resilient streaming analytics systems on Google Cloud (course)
