Dataflow Observability, Monitoring, and Troubleshooting: Solving 7 Data Pipeline Challenges

Based on customer feedback, observability is a key area where the Dataflow team continues to invest, delivering the visibility into job state and performance that business-critical production pipelines depend on.

In a recent Community event, data analytics experts Ashwin Kamath, Konrad Janica, and Wei Hsia demonstrated:

- Dataflow observability capabilities and the latest new features

- Common issues and scenarios reported by customers for streaming pipelines

- How to use Dataflow observability features to troubleshoot

In this article, we share key takeaways from the presentation, the session recording, written questions and answers, and links to supporting resources so you can easily get back to them at any time.

- Session recording

- Core Dataflow observability features

- Job visualizers: Understand how your data pipeline is being executed

- Job metrics: Monitor the state and performance of your jobs with metrics and logs

- Cloud Error Reporting: Identify and debug Dataflow job errors

- Cloud Profiler: Troubleshoot performance issues

- NEW! Dataflow Insights: Improve job performance and reduce costs

- NEW! Datadog dashboards and monitors: Integrate with tools of your choice

- Troubleshooting 7 common data pipeline challenges

- 1. Job slows down with increase in data volume

- 2. Quota issues, throttling

- 3. OOMs (out of memory)

- 4. Hot keys, uneven CPU load across workers

- 5. User code issues

- 6. Job failed to start

- 7. Job is not autoscaling as expected

- Resource summary

If you have any further questions, please add a comment below and we’d be happy to help! If you have ideas or feedback on Dataflow observability capabilities, please take this short survey to help us understand your needs better and continue to improve.

Session recording

Tip 👉 Use the chapter links with timestamps in the YouTube description to quickly get to the topics you care about most.

Core Dataflow observability features

Here’s an at-a-glance overview of core Dataflow observability features and how they help meet your data pipeline challenges and business goals.

For more detail on each of these features, see the blog post Pro tools for Pros: Industry leading observability capabilities for Dataflow, or click the timestamp links for each feature below.

Job visualizers: Understand how your data pipeline is being executed

Job graph: illustrates the steps involved in the execution of your job

Execution details: has all the information to help you understand and debug the progress of each stage within your job

Job metrics: Monitor the state and performance of your jobs with metrics and logs

View Dataflow job metrics to understand how your code is impacting job performance, including:

- Dataflow (service) metrics, such as the latency of the job, throughput per stage, user processing time spent, etc.

- Worker (Compute Engine VM) metrics, such as CPU utilization, memory usage, etc.

- Apache Beam custom metrics, such as the number of errors, RPCs made, etc.

- Custom log-based metrics:

  - Job logs: startup tasks, fusion operations, autoscaling events, worker allocation

  - Worker logs: logs for the work processed by each worker within each step in your pipeline, including custom logs

- NEW! Metrics for Streaming Engine jobs, such as backlog seconds and data freshness by stage, so you can review stages or parts of your pipeline for issues and create SLO-specific alerting

| Category | Metrics |
| --- | --- |
| Data Freshness, Latency | /job/per_stage_data_watermark_age |
| | /job/per_stage_system_lag |
| Backlog | /job/backlog_bytes |
| | /job/backlog_elements |
| | /job/estimated_backlog_processing_time |
| Duplicates | /job/duplicates_filtered_out_count |
| Processing | /job/processing_parallelism_keys |
| | /job/estimated_bytes_produced_count |
| | /job/bundle_user_processing_latencies |
| | /job/elements_produced_count |
| Timers | /job/timers_processed_count |
| | /job/timers_pending_count |
| Persistence | /job/streaming_engine/persistent_state/write_bytes_count |
| | /job/streaming_engine/persistent_state/read_bytes_count |
| | /job/streaming_engine/persistent_state/write_latencies |
| BigQuery | /job/bigquery/write_count |
| Pub/Sub | /job/pubsub/streaming_pull_connection_status |
| Worker memory | /job/memory_capacity |
| | /worker/memory/total_limit |
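To chart or alert on one of the metrics listed above, you typically need its full metric type in a Cloud Monitoring filter. The sketch below builds such a filter string. It assumes the metrics live under the `dataflow.googleapis.com` prefix and that jobs are reported on the `dataflow_job` monitored resource with a `job_name` label; the helper name `build_metric_filter` and the job name are hypothetical, so check the filter against the Cloud Monitoring metrics list before relying on it.

```python
# Assumption: Dataflow metrics are published under this prefix.
DATAFLOW_METRIC_PREFIX = "dataflow.googleapis.com"


def build_metric_filter(metric_path: str, job_name: str) -> str:
    """Return a Cloud Monitoring filter string for one Dataflow job metric.

    `metric_path` is a path from the table above, with or without the
    leading slash, for example "/job/backlog_bytes".
    """
    metric_type = f"{DATAFLOW_METRIC_PREFIX}/{metric_path.lstrip('/')}"
    return (
        f'metric.type = "{metric_type}" '
        # Assumption: the monitored resource type and label name below.
        f'AND resource.type = "dataflow_job" '
        f'AND resource.labels.job_name = "{job_name}"'
    )


# "my-streaming-job" is a placeholder job name.
print(build_metric_filter("/job/backlog_bytes", "my-streaming-job"))
```

The resulting string can be pasted into a Metrics Explorer query or passed to a monitoring client when you define an SLO-specific alert.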

Cloud Error Reporting: Identify and debug Dataflow job errors

With Dataflow’s native integration with Google Cloud Error Reporting, you can identify and manage errors that impact your job’s performance - whether it’s with your code, data, or something else.

The Diagnostics tab in the Logs panel on the Job details page tracks the most frequently occurring errors.

21:06 Cloud Error Reporting integration

Cloud Profiler: Troubleshoot performance issues

With Dataflow’s native integration with Cloud Profiler, you can understand where there are performance bottlenecks in your pipeline, such as which part of your code is taking more time to process data or which operations are consuming more CPU/memory. Learn more in the guide: Monitoring pipeline performance.

22:19 Cloud Profiler integration

NEW! Dataflow Insights: Improve job performance and reduce costs

Enabled by default on your batch and streaming jobs, Dataflow Insights are generated by auto-analyzing your job’s executions, providing recommendations that help improve job performance and reduce costs, such as:

- Enabling autoscaling

- Increasing maximum workers

- Increasing parallelism

23:33 Dataflow insights (recommendations)

NEW! Datadog dashboards and monitors: Integrate with tools of your choice

If you’re already using Datadog, you can now leverage out-of-the-box Dataflow dashboards and recommended monitors to monitor your Dataflow jobs alongside other applications within the Datadog console. Learn more in the blog: Monitor your Dataflow pipelines with Datadog

Troubleshooting 7 common data pipeline challenges

The following are our recommendations if you’re having trouble building or running your Dataflow pipeline.

Have your own solutions or tips? Please help others in the Community and add them in the comments below!

1. Job slows down with increase in data volume

When a large volume of data is coming in and the job is running slower than you expect, use the Cloud Monitoring for Dataflow view to identify bottlenecks. Relevant Dataflow metrics include:

- Backlog seconds

- Throughput

- CPU utilization

- User processing latencies

- Parallelism

- Duplicates

- Pub/Sub metrics

- Timers

- Sink metrics

Learn more at Troubleshoot slow-running pipelines or lack of output.
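One way to read a backlog metric is to look at its trend rather than a single value. The following is a minimal sketch, assuming you have already exported evenly spaced backlog-seconds samples from Cloud Monitoring; the function names and the `min_slope` threshold are hypothetical and not part of any Dataflow API.

```python
from statistics import mean
from typing import Sequence


def backlog_trend(samples: Sequence[float]) -> float:
    """Least-squares slope of the samples, in backlog seconds per interval."""
    n = len(samples)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = mean(samples)
    numerator = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(samples))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator


def is_falling_behind(samples: Sequence[float], min_slope: float = 1.0) -> bool:
    """True when the backlog grows by at least `min_slope` per interval."""
    return backlog_trend(samples) >= min_slope


# Illustrative values only: backlog seconds sampled once per minute.
print(is_falling_behind([10, 20, 30, 40]))  # steadily growing backlog
print(is_falling_behind([12, 11, 12, 11]))  # stable backlog
```

A steadily positive slope suggests the pipeline is not keeping up with its input, which is the point at which the throughput, CPU, and user processing latency charts become worth comparing stage by stage.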

2. Quota issues, throttling

Dataflow exercises various components of Google Cloud, such as BigQuery, Cloud Storage, Pub/Sub, and Compute Engine. These and other Google Cloud services use quotas to restrict how much of a particular shared Google Cloud resource your Cloud project can use, including hardware, software, and network components. If your Dataflow job is slowing down, check your quota limits for the following and request an increase if needed:

- CPUs

- In-use IP addresses

- Persistent disk read/write limits

- Regional managed instance groups

- Pub/Sub (learn more)

- BigQuery (learn more)
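As a rough illustration of how the CPU and in-use IP address quotas cap a job, the sketch below estimates how many workers fit in the remaining quota. It assumes each worker consumes a fixed number of vCPUs and, when workers have public IPs, one in-use IP address; the `RegionalQuota` class and the numbers are hypothetical, so read the real limits from your project's quota page.

```python
from dataclasses import dataclass


@dataclass
class RegionalQuota:
    """Hypothetical container for two of the quotas listed above."""
    cpus: int
    in_use_ip_addresses: int


def max_workers_within_quota(
    quota: RegionalQuota,
    vcpus_per_worker: int,
    cpus_in_use: int = 0,
    ips_in_use: int = 0,
    public_ips: bool = True,
) -> int:
    """Estimate how many workers fit in the remaining CPU and IP quota."""
    cpu_bound = (quota.cpus - cpus_in_use) // vcpus_per_worker
    if not public_ips:
        # Assumption: workers without public IPs do not use this quota.
        return max(cpu_bound, 0)
    ip_bound = quota.in_use_ip_addresses - ips_in_use
    return max(min(cpu_bound, ip_bound), 0)


quota = RegionalQuota(cpus=24, in_use_ip_addresses=8)
print(max_workers_within_quota(quota, vcpus_per_worker=4))
```

If the estimate is below the maximum number of workers you configured, the job cannot scale to that maximum until the limiting quota is raised.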

3. OOMs (out of memory)

Workers can fail when they run out of memory. Although the job might still finish, these errors can also slow down performance or prevent the job from completing successfully.

In this scenario, consider the following options:

- Enable Vertical Autoscaling, a feature in Dataflow Prime that dynamically scales the memory available to workers according to the demands of the job.

- Manually increase the amount of memory available to workers.

- Reduce the amount of memory required by profiling memory usage (more info here).
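For Python pipelines, a quick local check of the last option is to measure how much memory a per-element function allocates before you run it on Dataflow. The sketch below uses the standard library `tracemalloc` module; the two sample functions are hypothetical stand-ins for user code, and this complements the profiling guide linked above rather than replacing it.

```python
import tracemalloc


def peak_memory_bytes(func, *args, **kwargs):
    """Run func and return (result, peak bytes traced during the call)."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _current, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def build_full_list(n):
    # Materializes every intermediate value in memory before summing.
    return sum([i * i for i in range(n)])


def stream_values(n):
    # Produces the same total while holding one value at a time.
    return sum(i * i for i in range(n))


for fn in (build_full_list, stream_values):
    total, peak = peak_memory_bytes(fn, 100_000)
    print(f"{fn.__name__}: total={total}, peak={peak} bytes")
```

Comparing the two peaks shows how replacing a materialized collection with a generator lowers the per-call footprint, which is the kind of change that can remove the need for larger workers.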

4. Hot keys, uneven CPU load across workers

A hot key is a key with so many values that its work cannot be spread evenly across the worker machines. Hot keys lead to uneven resource load across workers, which can negatively impact pipeline performance.

To resolve this issue, check that your data is evenly distributed. If a key has disproportionately many values, consider the following courses of action:

- Rekey your data. Apply a ParDo transform to output new key-value pairs.

- For Java jobs, use the Combine.PerKey.withHotKeyFanout transform.

- For Python jobs, use the CombinePerKey.with_hot_key_fanout transform.

- Enable Dataflow Shuffle.
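The rekeying and hot-key fanout options above share one idea: split a hot key into several sub-keys, combine each sub-key separately, then merge the partial results. The following pure-Python sketch simulates that idea for a sum. It is an illustration of the technique, not how Apache Beam implements it, and `salted_sum` is a hypothetical helper.

```python
import random
from collections import defaultdict


def salted_sum(pairs, fanout, seed=0):
    """Sum values per key in two stages, spreading each key over sub-keys.

    Returns (totals per original key, number of intermediate sub-keys).
    """
    rng = random.Random(seed)

    # Stage 1: attach a random salt to each key and pre-aggregate per
    # (key, salt), so one hot key becomes up to `fanout` smaller groups.
    partial = defaultdict(int)
    for key, value in pairs:
        partial[(key, rng.randrange(fanout))] += value

    # Stage 2: drop the salt and merge the partial sums per original key.
    totals = defaultdict(int)
    for (key, _salt), subtotal in partial.items():
        totals[key] += subtotal

    return dict(totals), len(partial)


pairs = [("hot", 1)] * 1000 + [("cold", 1)] * 10
totals, groups = salted_sum(pairs, fanout=8)
print(totals, groups)
```

In a real pipeline the first stage runs in parallel across workers, so the hot key's 1,000 values no longer land on a single worker. This only works for combiners whose partial results can be merged, such as sums, counts, and maximums.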

5. User code issues

If your job looks stuck due to code issues or errors, consider the following:

- Custom sources. If you have custom source dependencies, implement ‘getProgress()’. Backlog metrics rely on the return value of your custom source’s ‘getProgress()’ method to activate, so without implementing ‘getProgress()’, system latency, backlog, and other metrics could be reporting incorrectly.

- System latency is growing. System latency shows the delays from your input sources and how long elements have been waiting before being processed. If your system latency is constantly growing, this is a symptom of blocked I/O, which might need to be optimized in user code (for example, by addressing a hot key or batching API calls).

- User processing latency is growing. User processing latency is the time the workers spend processing user code. If high latency is detected in a particular stage, use the Execution Details tab to find the related steps in the user code, which can then be debugged using Cloud Profiler.

- High fan-out. High fan-out occurs when a stage in your pipeline creates many more output elements than it consumes. When such a stage is fused with the steps that follow it, those steps run with lower parallelism than they otherwise could. If Dataflow detects that a job has one or more transforms with a high fan-out, you can insert a Reshuffle step, which prevents fusion, checkpoints the data, and performs deduplication of records. Learn more about fusion optimization here.

6. Job failed to start

A common issue with new Dataflow jobs is that the job fails to start. The first thing to check is that the user has the correct permissions. To view jobs in the UI or to create job requests using the APIs, the user must have a ‘Dataflow admin’ or ‘Dataflow writer’ role.

For debugging, ‘Cloud Monitoring Viewer’ and ‘Cloud Logging Viewer’ roles are recommended so that the UI will be fully populated. The logs will indicate any missing service account permissions.

Likewise, the Dataflow Worker role should be added to the default Compute Engine service account, because Dataflow uses Compute Engine to automatically manage VMs for you. The same applies to jobs that are started with a custom compute service account, and in that case the user also needs the correct permissions on that service account.

If your workers need to communicate with an external service, you’ll need to configure the firewall for the machines. This is often the case when downloading public packages in Python. A more reliable option is to download the libraries within your container image.

Other issues preventing jobs from starting include quota issues or having an out-of-date Beam version, which is indicated next to the version number in the UI.

7. Job is not autoscaling as expected

Dataflow includes several autotuning features that can dynamically optimize your Dataflow job while it is running. These features include Horizontal Autoscaling, Vertical Autoscaling, and Dynamic Work Rebalancing.

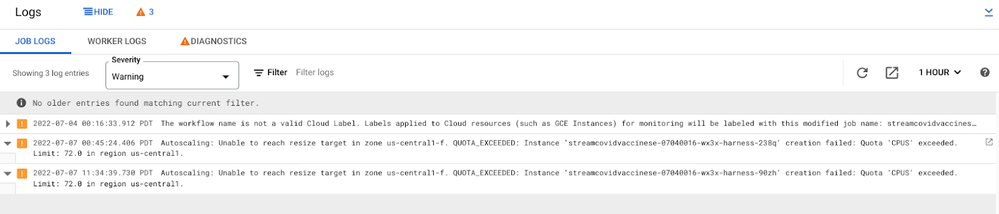

If your job isn’t autoscaling as expected, first check in the Job logs panel on the Job details page that autoscaling is enabled.

The scaling algorithm is different for streaming vs batch. For streaming, the autoscaler looks at backlog vs CPU utilization to determine scaling decisions. Within certain thresholds, upscaling or downscaling decisions are triggered. The decisions are then represented on the autoscaling chart which shows the current number of workers vs the target number of workers.

Common scenarios to debug include aggressive upscaling, stuck downscaling, and frequent scaling:

- Aggressive upscaling can occur because of an overestimation of backlog or an underestimation of throughput. One possible cause is that the pipeline is I/O bound and is bottlenecked waiting for external dependencies. The job eventually downscales automatically once the backlog stabilizes. If the upscaling is a problem, parallelize the I/O better or adjust maxNumWorkers.

- Downscaling requires low CPU and low backlog for a few minutes. Even if these requirements are met, the job might not downscale due to disk rebalancing ranges. Streaming Engine improves on this by enabling a larger scaling range.

- Frequent autoscaling events without traffic spikes are usually a symptom of worker problems. When the autoscaler reduces the number of workers, the remaining workers could hit out-of-memory issues due to the increased memory pressure, or they could encounter IP throttling on external services.

Streaming Engine improves the performance of autoscaling and often solves a range of common problems without changing any code.
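When you review the autoscaling chart, it can help to read backlog and CPU utilization together, since the scenarios above differ mainly in how those two signals combine. The sketch below is a hypothetical triage helper, not the Dataflow autoscaling algorithm; every threshold in it is an assumption chosen for illustration.

```python
def triage(
    backlog_seconds: float,
    cpu_utilization: float,
    backlog_high: float = 60.0,  # assumed threshold, in seconds
    cpu_high: float = 0.8,       # assumed threshold, fraction of CPU
    cpu_low: float = 0.3,        # assumed threshold, fraction of CPU
) -> str:
    """Classify one (backlog, CPU) observation into a rough diagnosis."""
    backlogged = backlog_seconds >= backlog_high
    if backlogged and cpu_utilization >= cpu_high:
        return "compute-bound: more workers are likely to help"
    if backlogged and cpu_utilization <= cpu_low:
        return "possibly I/O-bound: check external dependencies"
    if not backlogged and cpu_utilization <= cpu_low:
        return "downscale candidate: low backlog and low CPU"
    return "steady: no clear scaling signal"


# Illustrative observations only.
for backlog, cpu in [(120, 0.9), (120, 0.1), (5, 0.1), (5, 0.5)]:
    print(backlog, cpu, "->", triage(backlog, cpu))
```

A high backlog combined with low CPU is the case worth checking first, because adding workers there raises cost without addressing the external dependency the pipeline is waiting on.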

Resource summary

- Dataflow quickstarts

- Using the Dataflow monitoring interface

- Building production-ready data pipelines using Dataflow: Monitoring data pipelines

- Pipeline troubleshooting and debugging

- Common Dataflow errors and suggestions

- Pro tools for Pros: Industry leading observability capabilities for Dataflow

- Beam College: Dataflow Monitoring

- Beam College: Dataflow Logging

- Beam College: Troubleshooting and debugging Apache Beam and GCP Dataflow
