
Function calling: A native framework to connect Gemini to external systems, data, and APIs


Introduction

When working with generative models, you’ve probably run into this situation before: you send a prompt to a large language model (LLM), and it responds with:

“As an AI language model, I don't have access to real-time data. However, as of my last update in September 2021…”.

Because LLMs and other generative models are "frozen" at training time, they cannot inherently reach out to external APIs and services. Instead, they are constrained to the information and knowledge they were trained on, which can frustrate end users who are trying to use your chatbot or generative AI app to work with the most up-to-date information from external systems.

How do we handle this situation and connect LLMs to the real world? Traditional approaches involve either injecting information into prompts to augment the model's responses, or exhaustive prompt engineering to coax structured data out of the model so that it can be passed to the next stage of the process.

What if there was a better way to work with LLMs, teach them to use various tools, and get structured output so we can carry on with our generative AI app development? Function calling in Gemini does exactly this and helps you connect generative models to real-world data via API calls.

Function calling: The bridge to real-time data

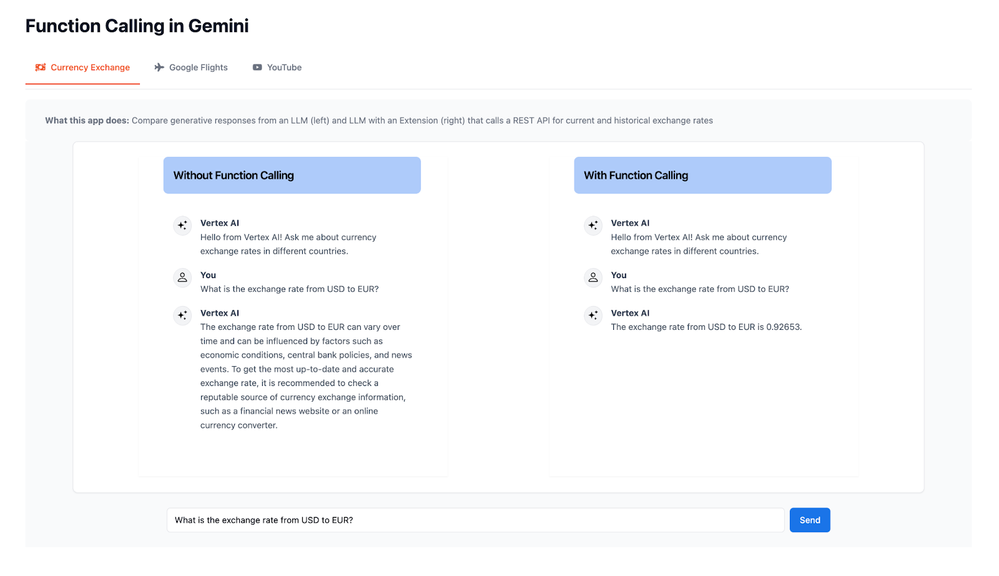

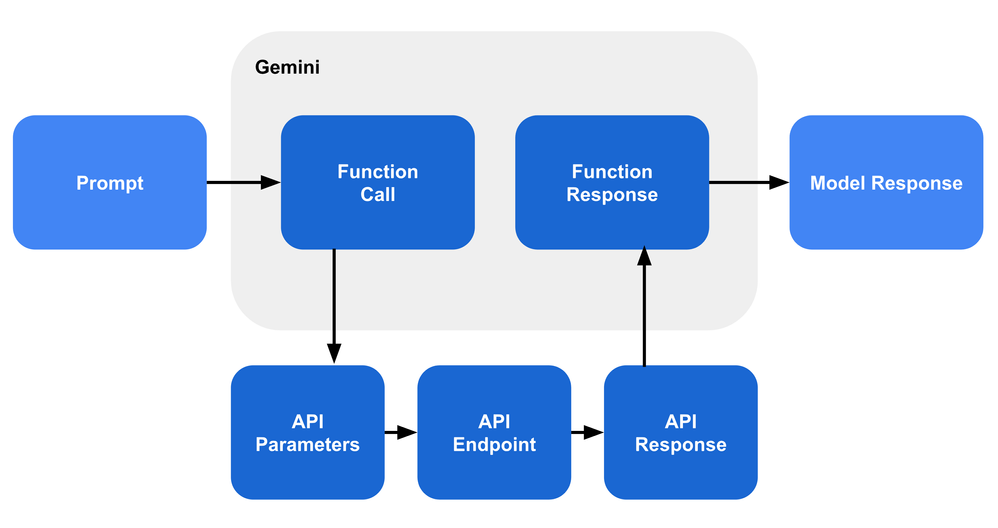

Function calling lets developers define custom functions so that a generative model can return structured data, which your application then uses to invoke external APIs. This enables LLMs to access real-time information and interact with various services, such as SQL databases, customer relationship management systems, document repositories, and anything else with an API! The following diagram illustrates the end-to-end flow of the code that we'll be writing in the next section:

Following along in the diagram above, functions are described using function declarations and grouped into tools, which help the generative model understand the purpose and parameters of each function. When you send a prompt to a generative model along with these tools, the model returns a structured object (i.e., a function call) that includes the names of relevant functions and the parameters (e.g., API parameters) extracted from the user's prompt. You can then use the returned function name and parameters in your application code to call your desired API endpoint in any language, library, or framework that you'd like.
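Once the model returns a function call, your application decides what actually runs. A minimal sketch of that routing step, assuming a plain dictionary that maps each declared function name to a Python callable; FunctionCall, fetch_weather, and the canned weather values are hypothetical stand-ins rather than Vertex AI SDK types, so no real API is called:

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class FunctionCall:
    """Hypothetical stand-in for the model's function call: a name plus arguments."""
    name: str
    args: Dict[str, Any] = field(default_factory=dict)


def fetch_weather(location: str) -> Dict[str, Any]:
    # Hypothetical handler with canned data; a real app would call a weather API here.
    return {"location": location, "forecast": "snow showers", "temp_f": 35}


# Map each declared function name to the code that implements it.
HANDLERS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_current_weather": fetch_weather,
}


def dispatch(call: FunctionCall) -> Dict[str, Any]:
    """Look up the handler by name and call it with the extracted arguments."""
    handler = HANDLERS.get(call.name)
    if handler is None:
        raise ValueError(f"Model requested an undeclared function: {call.name}")
    return handler(**call.args)


result = dispatch(FunctionCall("get_current_weather", {"location": "Boston"}))
print(result["forecast"])  # snow showers
```

Keeping the registry explicit means the model can only trigger functions you have deliberately exposed.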

After receiving an API response, you’ll pass this information to Gemini as a function response, and then Gemini will either answer the original prompt by summarizing the results in the model response, or Gemini will output a subsequent function call to get more information for your query. Depending on the available functions and the original prompt, Gemini might return one or more function calls in sequence until it has gathered enough information to generate a summary of the results.
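The back-and-forth described above is a loop in your application code. The sketch below assumes a scripted stand-in for Gemini (ScriptedModel, hypothetical) that simply replays fixed turns, plus a hypothetical get_user_city function, so it runs offline; with the real SDK, each send would be a chat message and each result a function response. The max_steps cap is an assumed safeguard against a model that keeps requesting functions:

```python
from typing import Any, Callable, Dict, List, Union

# A model turn is either a function call {"name": ..., "args": {...}} or a text answer.
ModelTurn = Union[Dict[str, Any], str]


class ScriptedModel:
    """Hypothetical stand-in for Gemini that replays a fixed list of turns."""

    def __init__(self, turns: List[ModelTurn]) -> None:
        self._turns = list(turns)
        self.received: List[Any] = []  # everything the app sent to the "model"

    def send(self, message: Any) -> ModelTurn:
        self.received.append(message)
        return self._turns.pop(0)


def run_until_answer(
    model: ScriptedModel,
    prompt: str,
    handlers: Dict[str, Callable[..., Any]],
    max_steps: int = 5,
) -> str:
    """Run each requested function and send its result back until the model answers in text."""
    turn = model.send(prompt)
    calls = 0
    while not isinstance(turn, str):
        if calls == max_steps:
            raise RuntimeError("model kept requesting functions; giving up")
        result = handlers[turn["name"]](**turn["args"])
        # Send the result back, tagged with the function name it answers.
        turn = model.send({"name": turn["name"], "response": result})
        calls += 1
    return turn  # final text answer for the user


handlers = {
    "get_user_city": lambda: {"city": "Boston"},
    "get_current_weather": lambda location: {"forecast": f"Snow showers in {location}"},
}
model = ScriptedModel([
    {"name": "get_user_city", "args": {}},
    {"name": "get_current_weather", "args": {"location": "Boston"}},
    "It is snowing in Boston right now.",
])
answer = run_until_answer(model, "What's the weather where I am?", handlers)
```

Here the two function calls happen in sequence before the final text answer, mirroring the multi-step case described above.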

A step-by-step example of function calling

Let’s walk through the design-time and run-time steps for function calling in Gemini. First, you’ll need to install the latest version of the Vertex AI SDK for Python (the google-cloud-aiplatform package), import the necessary modules, and initialize the Gemini Pro model:

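```python
import vertexai
from vertexai.preview.generative_models import (
    FunctionDeclaration,
    GenerativeModel,
    Part,
    Tool,
)

# Placeholder values: replace with your own Google Cloud project ID and region.
vertexai.init(project="your-project-id", location="us-central1")

model = GenerativeModel("gemini-pro")
```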

Then, you'll define a function declaration and a tool so that the model knows which functions it can call and how to call them. Let’s define a function called get_current_weather that takes one input parameter, the user's location, and then wrap it in a tool named weather_tool so that Gemini can use it:

Function declaration → Tool

```python
get_current_weather_func = FunctionDeclaration(
    name="get_current_weather",
    description="Get the current weather in a given location",
    parameters={
        "type": "object",
        "properties": {"location": {"type": "string", "description": "Location"}},
    },
)

weather_tool = Tool(
    function_declarations=[get_current_weather_func],
)
```
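Gemini fills in arguments according to the parameters schema, but it can still be worth checking them before calling a real API. A minimal sketch, assuming flat schemas with primitive types only; validate_args is a hypothetical helper, not part of the SDK, and it also honors an optional required list, which the declaration above does not use:

```python
from typing import Any, Dict, List

# The same parameter schema passed to FunctionDeclaration above.
WEATHER_PARAMETERS = {
    "type": "object",
    "properties": {"location": {"type": "string", "description": "Location"}},
}

# Assumed mapping from schema type names to Python types (a small subset only).
_PY_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def validate_args(schema: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the args look usable."""
    problems: List[str] = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required argument: {name}")
    for name, value in args.items():
        if name not in properties:
            problems.append(f"unexpected argument: {name}")
            continue
        expected = _PY_TYPES.get(properties[name].get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"{name} should be {properties[name]['type']}")
    return problems


print(validate_args(WEATHER_PARAMETERS, {"location": "Boston"}))  # []
```

If the list is non-empty, one option is to skip the API call and report the problem back to the model or the user instead.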

Next, you'll send a prompt to the model along with the weather_tool so that it can generate a function call with the appropriate function name and parameters:

Generative model → Tool selection → Function call with parameters

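```python
prompt = "What is the weather like in Boston?"

# Use a chat session so the function response can be sent back in the same conversation.
chat = model.start_chat()
response = chat.send_message(
    prompt,
    generation_config={"temperature": 0},
    tools=[weather_tool],
)
```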

Now you can use the returned function call and parameters to make an API request so that you can retrieve the latest information from an external system. This is the point in your application code where you actually call an external API endpoint in any language, library, or framework that you'd like, whether it’s Python, Java, C#, or your favorite SOAP API client:

Function call with parameters → API endpoint → API response

```
name: "get_current_weather"
args {
  fields {
    key: "location"
    value {
      string_value: "Boston"
    }
  }
}
```

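```python
import requests

# Turn the function call's arguments into a plain dict, e.g. {"location": "Boston"}.
params = dict(response.candidates[0].content.parts[0].function_call.args)
url = f"http://www.my-weather-forecast-api.com/{params['location']}"  # placeholder API
api_response = requests.get(url)
```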
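Because location is extracted from free-form user text, values such as "New York" or "São Paulo" contain characters that are not safe in a URL path. A minimal sketch using the standard library's urllib.parse.quote; build_weather_url is a hypothetical helper, and the base URL is the same placeholder endpoint as above:

```python
from urllib.parse import quote

# Placeholder endpoint from the example above; not a real weather service.
BASE_URL = "http://www.my-weather-forecast-api.com"


def build_weather_url(location: str) -> str:
    """Percent-encode the model-extracted location before putting it in the URL path."""
    # safe="" also encodes "/", so a value like "a/b" cannot add extra path segments.
    return f"{BASE_URL}/{quote(location, safe='')}"


print(build_weather_url("New York"))  # http://www.my-weather-forecast-api.com/New%20York
```

The resulting URL could then be fetched with requests.get(url, timeout=10) so that a slow API does not block the app indefinitely.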

Finally, you'll pass the API response back to Gemini so that it can generate a response to the initial prompt:

API response → Generative model → Response to user

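```python
response = chat.send_message(
    Part.from_function_response(
        name="get_current_weather",
        response={
            # Pass the response body (a string), not the requests.Response object.
            "content": api_response.text,
        },
    ),
    tools=[weather_tool],
)
```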

```python
response.candidates[0].content.parts[0]
```

```
text: "The current weather in Boston is blustery with snow showers. Temperatures are steady in the mid 30s. Winds from the north at 20 to 30 mph. There is a 50% chance of snow today."
```

Once we pass the API response back to the model, it responds to the original prompt using relevant information from the API response. In this case, the model's answer includes details about the current weather in Boston that it got back from the weather API.
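Part.from_function_response takes a dictionary for its response argument, so the raw API body has to be turned into one first. A minimal sketch of one way to do that, assuming JSON bodies should be parsed so the model sees structured fields and plain-text bodies should be trimmed to an arbitrary 2,000 characters; to_function_response is a hypothetical helper, not part of the SDK:

```python
import json
from typing import Any, Dict


def to_function_response(body: str, max_chars: int = 2000) -> Dict[str, Any]:
    """Wrap a raw API body in the {"content": ...} shape used in the example."""
    try:
        parsed: Any = json.loads(body)  # keep structure when the API returns JSON
    except json.JSONDecodeError:
        parsed = body[:max_chars]  # plain text: trim very long bodies
    return {"content": parsed}


print(to_function_response('{"temp_f": 35}'))  # {'content': {'temp_f': 35}}
```

Its return value could be passed directly as the response argument, for example response=to_function_response(api_response.text).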

Why developers love function calling

Developers have given us feedback about how and why they are implementing function calling in their apps, and here are some reasons that they love working with function calling in Gemini:

Full control in development: Compared to traditional approaches that make use of extensive prompting and injecting data into prompts, function calling provides you with complete control over your implementation. You can define functions, tools, parameters, and API calls according to your specific needs and let Gemini handle the hard part of selecting an appropriate function and extracting parameters from user prompts.

Less boilerplate code: Function calling is a native feature in the Gemini API that provides developers with a structured approach for defining functions and API calls in their application code without requiring the use of prompt templates or parsing strings to obtain the correct parameters. Function calling provides a light and elegant approach that does not require the creation of additional YAML files or the inclusion of additional boilerplate code.

Fast prototyping: The ability to quickly connect LLMs with external systems allows you to iterate faster and explore new ideas without waiting on us to release a connector for a specific service or API. Rather, you can connect Gemini to any available APIs or systems in your own application code.

No opinionated stack: Developers who want a simple way to connect their LLMs to external systems can do so natively in Gemini with function calling, without the need to use LangChain, ReAct, or any additional frameworks. Developers who want to use LangChain, LlamaIndex, or other orchestration frameworks can continue to do so and only use function calling in Gemini to help with function selection and parameter extraction.

Function calling use cases

Let’s review some example use cases of how developers and customers are building new apps and capabilities using function calling in Gemini:

- Workflow automation: Workflows based on environmental triggers to automate processes with little to no user input. LLM reasoning can replace costly manual processes and focus human effort where it’s most valuable.

- Data analysis and visualization: Many organizations have data siloed away in CSV files, Sheets, BigQuery tables, or Cloud Storage that their business users need, but these domain experts are not familiar with data science tooling and are unable to explore that structured data on their own.

- Search through documents: Enable context-aware Q&A systems by integrating with search and vector database services such as Vertex AI Search and Vertex AI Vector Search to build retrieval-augmented generation implementations on document repositories.

- Enhance chatbot capabilities: Extend the capabilities of chatbots to include real-time information retrieval or transactions, such as fetching currency exchange rates or booking flights.

- Support ticket routing: Automate the assignment of support tickets based on their content, logs, and context-aware rules.

Summary

Function calling in Gemini helps you connect generative models to real-world data via API calls to accelerate the development of AI agents. By connecting LLMs to external systems and providing you with full flexibility and control in development, function calling enables you to build “online” generative AI applications that always have access to the latest data and information.

To get started with function calling in Gemini, you can walk through the steps in the interactive codelab, take a look at the documentation and code samples, or refer to the notebook with code samples. And check out the video below for live demos and Q&A!

We look forward to having you try things out and hearing your feedback in the Google Cloud Community forums!
