Unlocking visual intelligence with Google Cloud's Vision API

What is Google Cloud’s Vision API?

The Vision API, part of Google Cloud's Vision AI suite of products, is an advanced image analysis tool that utilizes machine learning models to recognize and understand the contents of images.

It allows developers to integrate powerful image analysis capabilities into their applications without the need for extensive machine learning expertise.

Developers and businesses across diverse industries have integrated Vision AI into their applications to enhance user experiences and offer more sophisticated image-related features. It's been leveraged in e-commerce for product recognition, in healthcare for analyzing medical images, in entertainment for content moderation, and in various other sectors to obtain valuable insights from visual content.

The ease of use, seamless integration through APIs, and the accuracy of image analysis have made the Vision API a go-to solution for businesses seeking advanced image recognition and understanding capabilities.

Why use the Vision API?

The Vision API offers the following capabilities:

- Helps assign labels to images and quickly classify them into millions of predefined categories

- Helps to detect objects, read printed and handwritten text, and build valuable metadata into your image catalog

- Enables developers to easily integrate vision detection features within their applications

- Supports a variety of image formats, including JPEG, PNG, GIF, BMP, and more

- Can be used to perform feature detection on a local image file or on a remote image file that is located in Cloud Storage or on the web. Images can be supplied either as direct binary data or as accessible URLs.

- Formats responses in JSON based on the features you request and the information detected in the image

Supported file types by the Vision API

- JPEG

- PNG8

- PNG24

- GIF

- Animated GIF (first frame only)

- BMP

- WEBP

- RAW

- ICO

- TIFF

Client library languages supported by the Vision API

- Python

- C++

- Go

- Node.js

- PHP

- Ruby

Features provided by the Vision API

The Vision API currently allows you to use the following features:

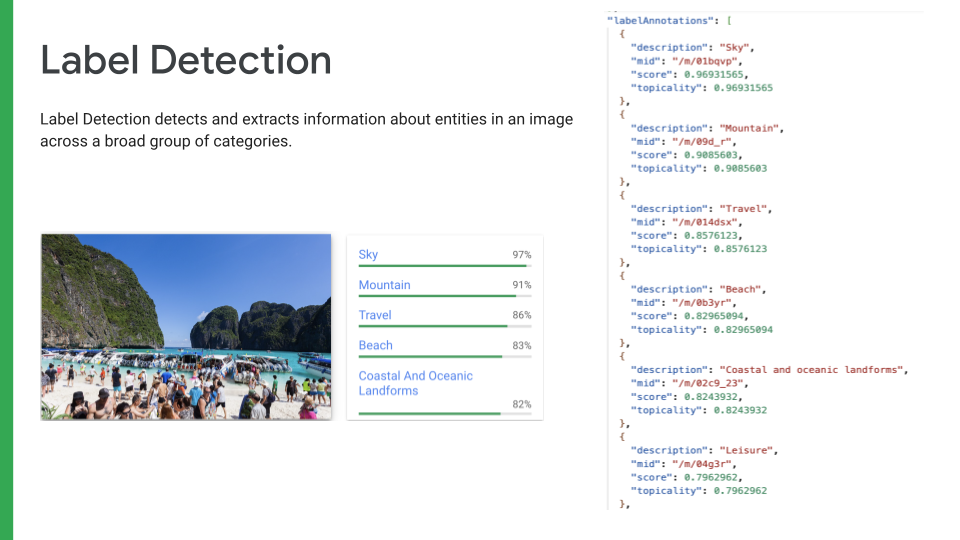

1. Label Detection

Labels can identify general objects, locations, activities, animal species, products, and more.

A LABEL_DETECTION response includes the detected labels, their score, topicality, and an opaque label ID, where:

- mid - if present, contains a machine-generated identifier corresponding to the entity's Google Knowledge Graph entry.

- description - the label description.

- score - the confidence score, which ranges from 0 (no confidence) to 1 (very high confidence).

- topicality - the relevancy of the ICA (Image Content Annotation) label to the image. It measures how important/central a label is to the overall context of a page.

If you need targeted custom labels, Cloud AutoML Vision allows you to train a custom machine learning model to classify images.

Labels are returned in English only.

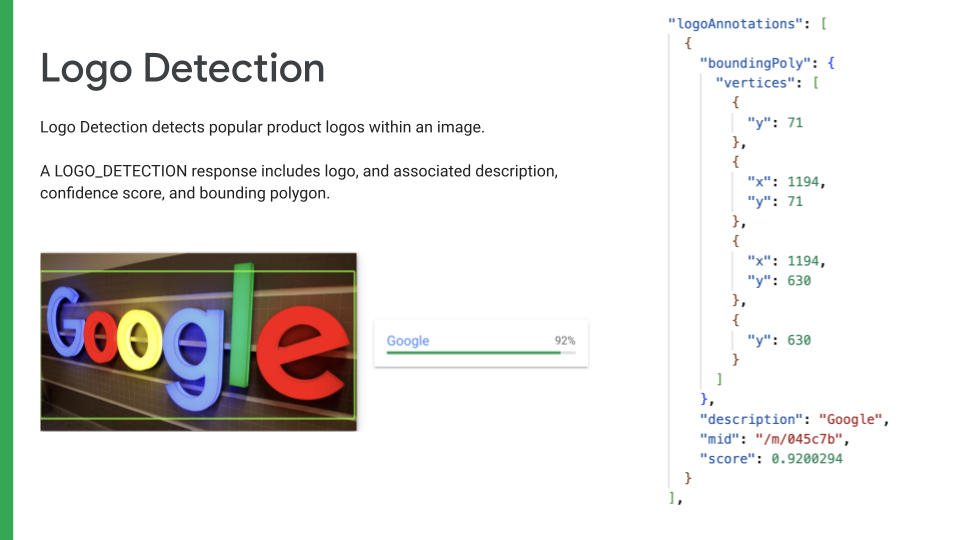

2. Logo Detection

Google Cloud's Vision API is trained to recognize a wide range of popular logos across various industries, and is capable of detecting multiple logos within a single image.

A LOGO_DETECTION response includes the logo and associated description, confidence score, and bounding polygon.

For each logo detected, the API provides:

- Description - name or a description of the logo identified

- Confidence score - indicates the likelihood of the detected logo being correct

- Bounding polygon - information that's useful for determining the precise position and size of the logo within the image
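As a rough illustration of how the bounding polygon can be used, the sketch below turns a polygon into a left/top/width/height box. It assumes the JSON shape of a `boundingPoly` (a `vertices` list of `x`/`y` points) and that a coordinate equal to 0 may be missing from the JSON; the function name `bounding_box` and the sample values are made up for this example.

```python
def bounding_box(bounding_poly):
    """Return (left, top, width, height) for a boundingPoly dict."""
    # Assumption: a coordinate of 0 can be omitted from the JSON, so default to 0.
    xs = [vertex.get("x", 0) for vertex in bounding_poly["vertices"]]
    ys = [vertex.get("y", 0) for vertex in bounding_poly["vertices"]]
    left, top = min(xs), min(ys)
    return left, top, max(xs) - left, max(ys) - top


# Hand-written sample polygon (illustrative values, not real API output).
sample_poly = {
    "vertices": [
        {"x": 10, "y": 20},
        {"x": 110, "y": 20},
        {"x": 110, "y": 70},
        {"x": 10, "y": 70},
    ]
}
print(bounding_box(sample_poly))
```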

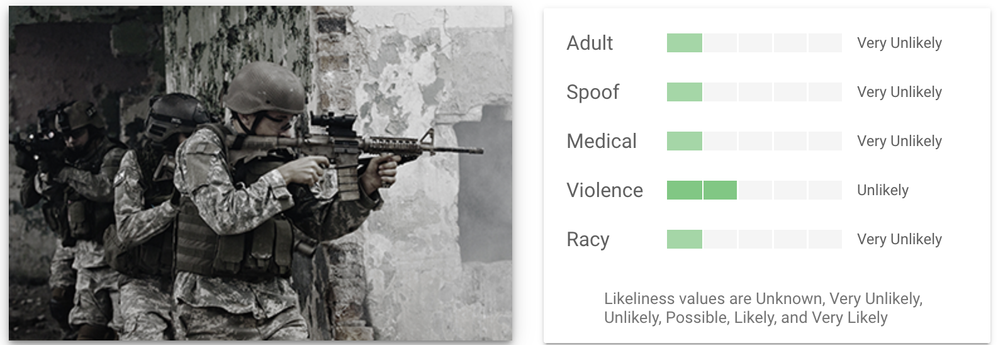

3. SafeSearch Detection

SafeSearch Detection categorizes content into the following categories, and returns the likelihood that each is present in a given image.

- Adult

- Spoof

- Medical

- Violence

- Racy

The likelihood levels indicate the degree to which the Vision API believes the content falls into each category. The likelihood levels include the following values:

- VERY_UNLIKELY: The content is highly unlikely to be within the specified category

- UNLIKELY: The content is unlikely to be within the specified category

- POSSIBLE: The content may or may not be within the specified category

- LIKELY: The content is likely to be within the specified category

- VERY_LIKELY: The content is highly likely to be within the specified category

More information on each category can be found in the SafeSearchAnnotation reference documentation.
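Because the likelihood levels are ordered, a moderation rule can be written as a simple threshold. The sketch below is one possible way to do that on a plain dictionary shaped like a `safeSearchAnnotation` result; the helper name `flagged_categories`, the default threshold, and the sample values are assumptions for illustration. `UNKNOWN` is included as the lowest level.

```python
# Likelihood names ordered from least to most likely.
LIKELIHOOD_ORDER = [
    "UNKNOWN",
    "VERY_UNLIKELY",
    "UNLIKELY",
    "POSSIBLE",
    "LIKELY",
    "VERY_LIKELY",
]


def flagged_categories(safe_search, threshold="LIKELY"):
    """Return the categories whose likelihood is at or above the threshold."""
    limit = LIKELIHOOD_ORDER.index(threshold)
    return sorted(
        category
        for category, level in safe_search.items()
        if LIKELIHOOD_ORDER.index(level) >= limit
    )


# Hand-written sample annotation (illustrative values, not real API output).
sample_safe_search = {
    "adult": "VERY_UNLIKELY",
    "spoof": "POSSIBLE",
    "medical": "LIKELY",
    "violence": "VERY_LIKELY",
    "racy": "UNLIKELY",
}
print(flagged_categories(sample_safe_search))
```

Which threshold is appropriate depends on the application; a stricter policy might flag anything at `POSSIBLE` or above.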

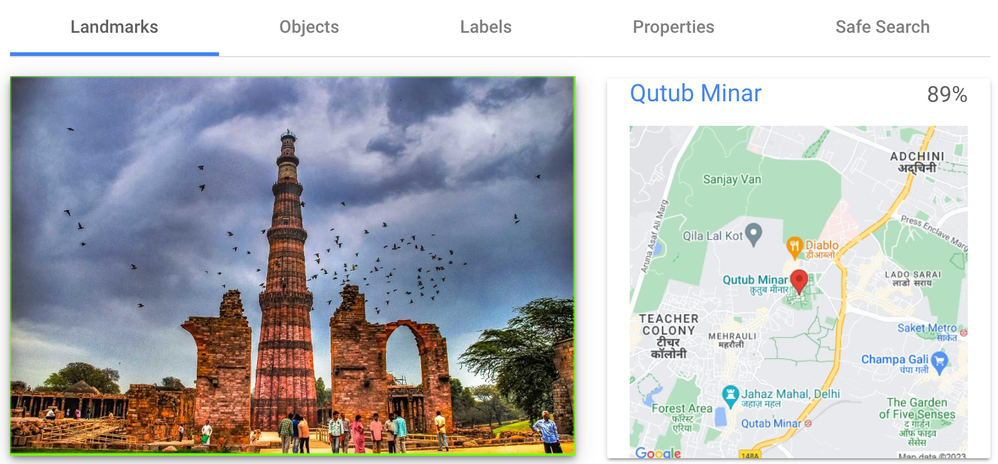

4. Landmark Detection

Landmark detection allows you to analyze images to identify specific landmarks, such as buildings, natural features, and other recognizable locations.

The Vision API recognizes landmarks and provides information about them, including their name, location, and other relevant details.
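To give a feel for the location information, here is a small sketch that pulls the name and coordinates out of a list shaped like the `landmarkAnnotations` field of a JSON response. It assumes each entry carries a `description` and a `locations` list with `latLng` values; the helper name and the sample entry are hypothetical.

```python
def landmark_locations(landmark_annotations):
    """Return (description, latitude, longitude) for each landmark location."""
    results = []
    for landmark in landmark_annotations:
        for location in landmark.get("locations", []):
            lat_lng = location.get("latLng", {})
            results.append(
                (
                    landmark.get("description", ""),
                    lat_lng.get("latitude"),
                    lat_lng.get("longitude"),
                )
            )
    return results


# Hand-written sample entry (illustrative values, not real API output).
sample_landmarks = [
    {
        "description": "Eiffel Tower",
        "score": 0.9,
        "locations": [{"latLng": {"latitude": 48.8584, "longitude": 2.2945}}],
    }
]
print(landmark_locations(sample_landmarks))
```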

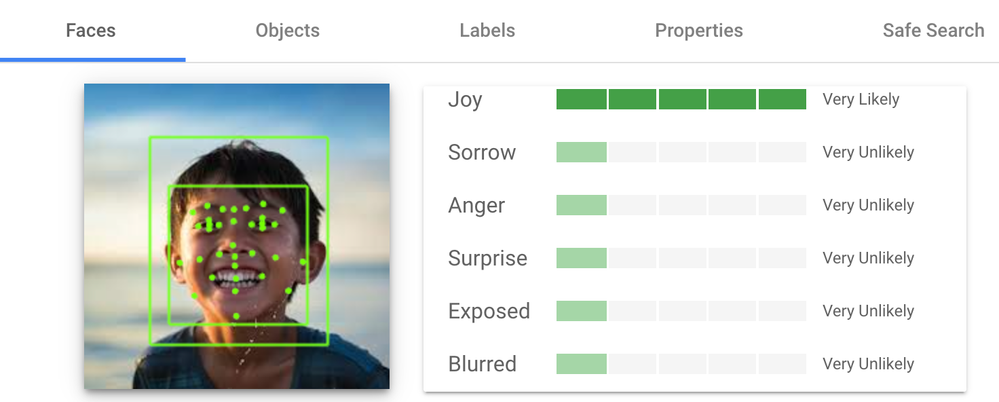

5. Face Detection

Face Detection helps locate faces with bounding polygons, and identifies specific facial "landmarks," such as eyes, ears, nose, mouth, etc., along with their corresponding confidence values.

It returns likelihood ratings for emotions like joy, sorrow, anger, surprise, and general image properties like underexposed, blurred, and headwear present.

Likelihood ratings are expressed as 6 different values: UNKNOWN, VERY_UNLIKELY, UNLIKELY, POSSIBLE, LIKELY, or VERY_LIKELY.

6. Web Detection

The Vision API's Web Detection feature searches the web for references to an image and for images that are identical or similar to it. The Web Detection feature returns the six types of information listed below:

- Web entities: returns a list of recommended tags associated with the image. These tag values can be Label, Brand, or Logo depending on the extracted information from the image.

- Full matching images: identifies images identical to the image sent in the request

- Partial matching images: identifies images partially similar to the image sent in the request

- Pages with matching images: returns the web pages that contain images identical to the image sent in the request

- Visually similar images: returns a list of images that are similar to the image

- Best guess labels: returns a label that can be used to identify the image
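The six result types above can be read out of a response with a few list comprehensions. The sketch below works on a plain dictionary shaped like the `webDetection` field of a JSON response; the helper name `summarize_web_detection`, the output keys, and the sample URLs are assumptions made for this example.

```python
def _urls(items):
    """Collect the url field from a list of image or page entries."""
    return [item["url"] for item in items if item.get("url")]


def summarize_web_detection(web_detection):
    """Flatten a webDetection dict into simple lists of strings."""
    return {
        "entities": [
            entity["description"]
            for entity in web_detection.get("webEntities", [])
            if entity.get("description")
        ],
        "full_matches": _urls(web_detection.get("fullMatchingImages", [])),
        "partial_matches": _urls(web_detection.get("partialMatchingImages", [])),
        "pages": _urls(web_detection.get("pagesWithMatchingImages", [])),
        "similar": _urls(web_detection.get("visuallySimilarImages", [])),
        "best_guess": [
            guess["label"]
            for guess in web_detection.get("bestGuessLabels", [])
            if guess.get("label")
        ],
    }


# Hand-written sample (illustrative values, not real API output).
sample_web = {
    "webEntities": [{"entityId": "/m/0bt9lr", "score": 0.9, "description": "Dog"}],
    "fullMatchingImages": [{"url": "https://example.com/dog.jpg"}],
    "pagesWithMatchingImages": [{"url": "https://example.com/dogs.html"}],
    "bestGuessLabels": [{"label": "dog", "languageCode": "en"}],
}
print(summarize_web_detection(sample_web))
```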

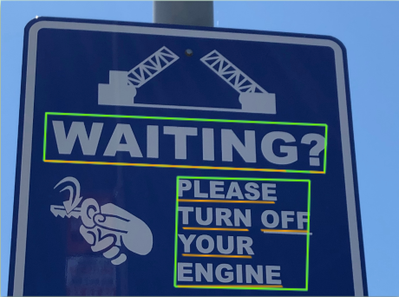

7. Optical Character Recognition

The Vision API can detect and extract text from images. There are two annotation features that support optical character recognition (OCR):

- TEXT_DETECTION detects and extracts text from any image. The response from Vision API includes the detected phrase, its bounding box, and individual words and their bounding boxes.

- DOCUMENT_TEXT_DETECTION also extracts text from an image or file, but the response is optimized for dense text and documents. The JSON includes page, block, paragraph, word, and break information. One specific use of document text detection is to detect handwriting in an image.
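To show how the page, block, paragraph, word, and break information fits together, the sketch below rebuilds paragraph text from a dictionary shaped like the `fullTextAnnotation` field of a DOCUMENT_TEXT_DETECTION JSON response. It assumes each word holds a list of `symbols` and that a symbol may carry a `property.detectedBreak.type` value; the helper name, the break-to-character mapping, and the sample input are choices made for this example.

```python
# Characters to append after a symbol for each detected break type.
# Break types not listed here (for example HYPHEN) add nothing in this sketch.
BREAK_TEXT = {
    "SPACE": " ",
    "SURE_SPACE": " ",
    "EOL_SURE_SPACE": "\n",
    "LINE_BREAK": "\n",
}


def paragraphs_from_full_text(full_text_annotation):
    """Return one string per paragraph, rebuilt symbol by symbol."""
    paragraphs = []
    for page in full_text_annotation.get("pages", []):
        for block in page.get("blocks", []):
            for paragraph in block.get("paragraphs", []):
                parts = []
                for word in paragraph.get("words", []):
                    for symbol in word.get("symbols", []):
                        parts.append(symbol.get("text", ""))
                        detected = symbol.get("property", {}).get("detectedBreak", {})
                        parts.append(BREAK_TEXT.get(detected.get("type"), ""))
                paragraphs.append("".join(parts).strip())
    return paragraphs


def _word(text, break_type):
    """Build a hand-written word entry whose last symbol carries the break."""
    symbols = [{"text": char} for char in text]
    symbols[-1]["property"] = {"detectedBreak": {"type": break_type}}
    return {"symbols": symbols}


# Hand-written sample (illustrative, not real API output).
sample_annotation = {
    "pages": [
        {"blocks": [{"paragraphs": [{"words": [_word("Hi", "SPACE"), _word("all", "LINE_BREAK")]}]}]}
    ]
}
print(paragraphs_from_full_text(sample_annotation))
```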

JSON request format sent to Vision API

The body of your POST request is a JSON object with a single requests list, which contains one or more objects of type AnnotateImageRequest.

Every request:

- Must contain a requests list

Within the requests list:

- image specifies the image file. It can be sent as a base64-encoded string, a Cloud Storage file location, or as a publicly-accessible URL. See Providing the image for details.

- features lists the types of annotation to perform on the image. You can specify one or many types, as well as the maxResults to return for each

- imageContext specifies hints to the service to help with annotation: bounding boxes, languages, and aspect ratios for crop hints

{
  "requests": [
    {
      "image": {
        "content": "/9j/7QBEUGhvdG9...image contents...eYxxxzj/Coa6Bax//Z"
      },
      "features": [
        {
          "type": "LABEL_DETECTION",
          "maxResults": 1
        }
      ]
    }
  ]
}
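A request body like the one above can be assembled with the Python standard library alone. The sketch below is a minimal example, not the client library's own approach: the function name `build_annotate_request` and its parameters are hypothetical, and placeholder bytes stand in for a real image file.

```python
import base64
import json


def build_annotate_request(image_bytes, feature_types, max_results=10):
    """Build a JSON-serializable request body with one AnnotateImageRequest."""
    # The image content is sent as a base64-encoded ASCII string.
    content = base64.b64encode(image_bytes).decode("ascii")
    features = [
        {"type": feature_type, "maxResults": max_results}
        for feature_type in feature_types
    ]
    return {"requests": [{"image": {"content": content}, "features": features}]}


# Placeholder bytes; in practice these would come from open(path, "rb").read().
body = build_annotate_request(b"not-a-real-image", ["LABEL_DETECTION"], max_results=1)
print(json.dumps(body, indent=2))
```

Passing several feature types in one call produces a single request that asks for all of them, which avoids uploading the same image more than once.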

JSON response format from Vision API

The response from the Vision API contains a list of Image Annotation results with a score associated with each entity.

The annotate request receives a JSON response of type AnnotateImageResponse. Although the requests are similar for each feature type, the responses for each feature type can be quite different.

For example, the response below is returned for an image of a dog:

{
  "responses": [
    {
      "labelAnnotations": [
        {
          "mid": "/m/0bt9lr",
          "description": "dog",
          "score": 0.97346616
        },
        {
          "mid": "/m/09686",
          "description": "vertebrate",
          "score": 0.85700572
        },
        {
          "mid": "/m/01pm38",
          "description": "clumber spaniel",
          "score": 0.84881884
        },
        {
          "mid": "/m/04rky",
          "description": "mammal",
          "score": 0.847575
        },
        {
          "mid": "/m/02wbgd",
          "description": "english cocker spaniel",
          "score": 0.75829375
        }
      ]
    }
  ]
}
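Once a response like this has been parsed into a dictionary (for example with `json.loads`), the labels can be filtered by score. The sketch below is one way to do it; the helper name `labels_above` and the 0.85 cut-off are arbitrary choices for illustration, and the sample reuses three of the labels from the response above.

```python
def labels_above(response_body, min_score=0.8):
    """Return (description, score) pairs with a score of at least min_score."""
    results = []
    for response in response_body.get("responses", []):
        for label in response.get("labelAnnotations", []):
            if label.get("score", 0.0) >= min_score:
                results.append((label["description"], label["score"]))
    return results


# A shortened copy of the response shown above.
sample_response = {
    "responses": [
        {
            "labelAnnotations": [
                {"mid": "/m/0bt9lr", "description": "dog", "score": 0.97346616},
                {"mid": "/m/09686", "description": "vertebrate", "score": 0.85700572},
                {"mid": "/m/04rky", "description": "mammal", "score": 0.847575},
            ]
        }
    ]
}
print(labels_above(sample_response, min_score=0.85))
```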

Providing the image

You can provide the image in your request in one of three ways:

- As a base64-encoded image string. If the image is stored locally, you can convert it into a string and pass it as the value of image.content.

- As a Cloud Storage URI. Pass the full URI as the value of image.source.imageUri.

  - The file in Cloud Storage must be accessible to the authentication method you're using.

  - If you're using an API key, the file must be publicly accessible.

  - If you're using a service account, the file must be accessible to the user who created the service account.

- As a publicly accessible HTTP or HTTPS URL.

  - Pass the URL as the value of image.source.imageUri.

  - When fetching images from HTTP/HTTPS URLs, Google cannot guarantee that the request will be completed.

  - Your request may fail if the specified host denies the request (e.g. due to request throttling or DoS prevention), or if Google throttles requests to the site for abuse prevention.

  - As a best practice, don't depend on externally hosted images for production applications.

Implementing the Vision API using Python

Next, we will look into how to use the Vision API to extract information from an image and store the results in BigQuery using the Python Client library provided by the Vision API.

Prerequisites

1. Create or select a Google Cloud project with billing enabled.

2. Create a BigQuery Dataset

export REGION=<bigquery_location>
export BIGQUERY_DATASET=<bigquery_dataset_name>
bq mk -d --location=${REGION} ${BIGQUERY_DATASET}

3. Enable Vision API

gcloud services enable vision.googleapis.com

4. Set up authentication and access control

- In the overview page for the Cloud Vision API, in the top right corner of the screen, click “Create Credentials”

- Now you will be able to create a key so that you can authenticate yourself when you try to connect to the Cloud Vision API.

- Choose a service account name, and set your role to “Owner”. Set the key type to JSON. Click continue. You will now be able to download a JSON file containing your credentials.

5. Install the Vision API client library for Python. The simplest way is with pip. The script below also imports the BigQuery client library, so install both.

pip install google-cloud-vision google-cloud-bigquery
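With the JSON key from step 4 downloaded, the client libraries still need to know where it is. One common option is the `GOOGLE_APPLICATION_CREDENTIALS` environment variable, which Application Default Credentials reads. The sketch below sets it from Python before any client is created; the helper name `use_service_account_key` and the example path are hypothetical.

```python
import os


def use_service_account_key(key_path):
    """Point Application Default Credentials at a downloaded JSON key file."""
    if not os.path.isfile(key_path):
        raise FileNotFoundError("Service account key not found: {}".format(key_path))
    # Store an absolute path so the setting survives a change of working directory.
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = os.path.abspath(key_path)
    return os.environ["GOOGLE_APPLICATION_CREDENTIALS"]


# Example call (hypothetical path), to be run before creating any API client:
# use_service_account_key("/path/to/key.json")
```

Setting the variable in the shell before launching the script works equally well and keeps the key path out of the code.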

Writing the script

Now we will write a Python script to detect features on an image using Cloud Vision API and store the results into BigQuery.

1. Import the required libraries

In this step, you import necessary libraries to interact with both the Vision API and BigQuery.

google.cloud.vision_v1 is used for the Vision API, and google.cloud.bigquery is used for BigQuery interactions.

import json
from google.cloud import bigquery
from google.cloud import vision_v1

2. Create a function that takes an image path, builds a Vision API image object from it, and sends the request to the Vision API.

This function accepts the path to the input image. It can be a publicly accessible image URL, a Cloud Storage URI, or the path to a local image file.

It uses the ImageAnnotatorClient from the Vision API to create a client. The image is read and converted into a Vision API image object. Features to be detected (e.g. face detection, label detection) can be specified. If not specified, Vision API considers all the features that are supported.

A request is made to the Vision API using the specified features, and it returns an AnnotateImageResponse object with features that are detected from the image.

def annotate(image_path: str):
    # Create a client for the Google Cloud Vision API.
    client = vision_v1.ImageAnnotatorClient()

    # A path starting with "http" or "gs:" refers to a remote image;
    # anything else is treated as a local file.
    if image_path.startswith("http") or image_path.startswith("gs:"):
        # If it's a remote image, create an Image object with an image URI.
        image_src = vision_v1.Image()
        image_src.source.image_uri = image_path
    else:
        # If it's not a remote image, it's assumed to be a local file.
        # Open the image file in binary read ('rb') mode and read its content.
        with open(image_path, "rb") as image_file:
            content = image_file.read()
        # Create an Image object with the image's content.
        image_src = vision_v1.Image(content=content)

    # Create a request to annotate the image using the Vision API.
    request = vision_v1.AnnotateImageRequest(image=image_src)

    # Send the request to the Vision API and receive a response.
    response = client.annotate_image(request)

    # Check if the response contains an error message. If so, raise an exception with the error message.
    if response.error.message:
        raise Exception(
            "{}\nFor more info on error messages, check: "
            "https://cloud.google.com/apis/design/errors".format(response.error.message)
        )

    # Return the response, which contains annotations for the processed image.
    return response

3. Once we have the annotated image response from the Vision API, we write the results to a BigQuery table.

Before inserting, prepare the annotated response as needed. The function below expects a dictionary keyed by feature name rather than the raw AnnotateImageResponse object, and inserts the structured data into a BigQuery table.

def write_to_bigquery(image_path, annotated_image_response, table_id):
    # annotated_image_response is expected to be a dictionary prepared from the
    # Vision API response, with one key per feature as used below.
    print("Inserting the record into the table for image", image_path)

    record = {
        "image_data": image_path,
        "label_annotations": annotated_image_response["label_detection"],
        "logo_annotations": annotated_image_response["logo_detection"],
        "text_annotations": annotated_image_response["text_detection"],
        "landmark_annotations": annotated_image_response["landmark_detection"],
        "face_annotations": annotated_image_response["face_detection"],
    }

    bigquery_client = bigquery.Client()
    # insert_rows_json returns a list of per-row errors, which is empty on success.
    errors = bigquery_client.insert_rows_json(table_id, [record])
    if errors:
        raise RuntimeError("BigQuery insert failed: {}".format(errors))
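The preparation step mentioned above is left open, so here is one possible sketch of it. It assumes the response has first been converted to a plain dictionary with snake_case keys (one way to get that is `vision_v1.AnnotateImageResponse.to_dict(response)`), and it keeps only a few fields per annotation. The helper name `prepare_response`, the choice of kept fields, and the sample values are all assumptions; the BigQuery table schema would need to match whatever is kept.

```python
# Maps response keys to the record keys read by write_to_bigquery, together
# with the fields kept for each annotation (an arbitrary selection).
FIELD_MAP = {
    "label_annotations": ("label_detection", ("description", "score")),
    "logo_annotations": ("logo_detection", ("description", "score")),
    "text_annotations": ("text_detection", ("description",)),
    "landmark_annotations": ("landmark_detection", ("description", "score")),
    "face_annotations": ("face_detection", ("detection_confidence",)),
}


def prepare_response(response_dict):
    """Reduce a response dictionary to the keys and fields to be stored."""
    prepared = {}
    for source_key, (target_key, kept_fields) in FIELD_MAP.items():
        prepared[target_key] = [
            {name: item[name] for name in kept_fields if name in item}
            for item in response_dict.get(source_key, [])
        ]
    return prepared


# Hand-written sample (illustrative values, not real API output).
sample_dict = {
    "label_annotations": [
        {"mid": "/m/0bt9lr", "description": "dog", "score": 0.97, "topicality": 0.97}
    ],
    "face_annotations": [{"detection_confidence": 0.9, "joy_likelihood": 4}],
}
print(prepare_response(sample_dict))
```

The result can then be passed as the second argument of write_to_bigquery.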

Thanks for reading! This content was based on a recent Google Cloud Community event, Unlocking visual intelligence: A Google Cloud showcase of Vision AI. You can see the session recording here for additional details on the topic.

If you have any questions, please leave a comment below. Thank you!
