Data Fusion BigQuery plugin

Hi,

I have a pipeline that is failing in the BigQuery plugin. I created another pipeline with just that plugin and the problem is the same: simply reading the data triggers the bug.

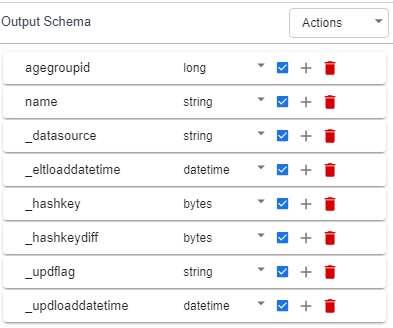

My schema only has supported data types.

This is the log:

    java.lang.ClassCastException: org.apache.avro.generic.GenericData$Record cannot be cast to org.apache.avro.generic.GenericRecord
        at io.cdap.plugin.gcp.bigquery.source.BigQuerySource.transform(BigQuerySource.java:202)
        at io.cdap.cdap.etl.common.plugin.WrappedBatchSource.lambda$transform$2(WrappedBatchSource.java:71)
        at io.cdap.cdap.etl.common.plugin.Caller$1.call(Caller.java:30)
        at io.cdap.cdap.etl.common.plugin.WrappedBatchSource.transform(WrappedBatchSource.java:70)
        at io.cdap.cdap.etl.common.plugin.WrappedBatchSource.transform(WrappedBatchSource.java:35)
        at io.cdap.cdap.etl.common.TrackedTransform.transform(TrackedTransform.java:80)
        at io.cdap.cdap.etl.spark.function.BatchSourceFunction.call(BatchSourceFunction.java:57)
        at io.cdap.cdap.etl.spark.function.BatchSourceFunction.call(BatchSourceFunction.java:34)
        at org.apache.spark.api.java.JavaRDDLike.$anonfun$flatMap$1(JavaRDDLike.scala:125)
        at scala.collection.Iterator$anon$11.nextCur(Iterator.scala:484)
        at scala.collection.Iterator$anon$11.hasNext(Iterator.scala:490)
        at scala.collection.Iterator$anon$11.hasNext(Iterator.scala:489)
        at scala.collection.Iterator$anon$11.hasNext(Iterator.scala:489)
        at scala.collection.Iterator$anon$10.hasNext(Iterator.scala:458)
        at org.apache.spark.internal.io.SparkHadoopWriter$.$anonfun$executeTask$1(SparkHadoopWriter.scala:135)
        at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1473)
        at org.apache.spark.internal.io.SparkHadoopWriter$.executeTask(SparkHadoopWriter.scala:134)
        at org.apache.spark.internal.io.SparkHadoopWriter$.$anonfun$write$1(SparkHadoopWriter.scala:88)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
        at org.apache.spark.scheduler.Task.run(Task.scala:131)
        at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:497)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1439)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:500)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:750)

Thanks for your help.

Labels: BigQuery, Cloud Data Fusion


The log you provided indicates that the BigQuery plugin in Google Cloud Data Fusion is encountering a ClassCastException. This error typically arises when there's a mismatch between the expected Avro schema and the actual data format being read from BigQuery.

To address this issue, consider the following steps:

- Verify BigQuery Schema: Ensure that the schema of the data in BigQuery matches what the BigQuery plugin expects. You can check the column names and types with the following SQL query: `SELECT column_name, data_type FROM $dataset.INFORMATION_SCHEMA.COLUMNS WHERE table_name = '$table'`. Replace `$dataset` and `$table` with the names of your dataset and table in BigQuery.

- Use SPECIFIC_AVRO_READER_CONFIG: If your data conforms to a known Avro schema, set the `SPECIFIC_AVRO_READER_CONFIG` property to `true`. This instructs the BigQuery plugin to use a specific Avro reader for deserialization. Add this to your pipeline configuration: `"config": {"SPECIFIC_AVRO_READER_CONFIG": true}`

- Use GENERIC_AVRO_READER_CONFIG: For more flexibility, especially with frequently changing schemas, use a generic Avro reader by setting `GENERIC_AVRO_READER_CONFIG` to `true`. This can be slower but offers more adaptability: `"config": {"GENERIC_AVRO_READER_CONFIG": true}`

- Update Plugins and Dependencies: Ensure all plugins and dependencies in Data Fusion, including the BigQuery plugin, are up to date and compatible with each other.

- Enhance Logging and Debugging: Increase the logging level in your Data Fusion pipeline for more detailed error messages, which can provide further insights into the issue.
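For the schema check in the first step, here is a minimal Python sketch. It builds a query against BigQuery's `INFORMATION_SCHEMA.COLUMNS` view and flags columns whose base type is not in a list you supply. The helper names `columns_query` and `unexpected_types` are hypothetical, and the allowed-type set in the usage lines is only a placeholder, not the plugin's actual list of supported types; check that against the plugin documentation. Running the query (with the `bq` CLI or a client library) is left to you.

```python
import re

# Conservative check so dataset/table names can be embedded in the SQL text.
_IDENTIFIER = re.compile(r"[A-Za-z0-9_.\-]+")


def columns_query(dataset: str, table: str) -> str:
    """Build a query that lists column names and types for one table."""
    for value in (dataset, table):
        if not _IDENTIFIER.fullmatch(value):
            raise ValueError(f"unexpected characters in identifier: {value!r}")
    return (
        "SELECT column_name, data_type "
        f"FROM `{dataset}.INFORMATION_SCHEMA.COLUMNS` "
        f"WHERE table_name = '{table}' "
        "ORDER BY ordinal_position"
    )


def unexpected_types(rows, allowed):
    """Return (column_name, data_type) pairs whose base type is not allowed.

    `rows` is an iterable of (column_name, data_type) pairs as returned by
    the query above; parameterized types such as ARRAY<STRING> or
    NUMERIC(10, 2) are reduced to their base name before the comparison.
    """
    flagged = []
    for name, data_type in rows:
        base = re.split(r"[<(]", data_type, maxsplit=1)[0].strip().upper()
        if base not in allowed:
            flagged.append((name, data_type))
    return flagged


# Usage with made-up rows and a placeholder allowed set (both assumptions).
sample_rows = [("id", "INT64"), ("tags", "ARRAY<STRING>"), ("loc", "GEOGRAPHY")]
print(columns_query("my_dataset", "my_table"))
print(unexpected_types(sample_rows, {"INT64", "STRING", "ARRAY"}))
```

If the flagged list comes back empty, the schema is unlikely to be the cause, and the plugin version becomes the next thing to look at.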


Thank you for your answer. The easiest solution was to change the BigQuery plugin's version back to the previous one (0.21), which was working a couple of days ago. Another solution that worked with plugin version 0.22 is to activate the pushdown option in the pipeline configuration. Everything suggests this is a BigQuery plugin bug.
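For anyone who prefers to pin the version in an exported pipeline JSON rather than in the UI, here is a rough Python sketch. It assumes the export keeps its stages under `config.stages`, each with a `plugin.artifact.version` field, and that the BigQuery source plugin is named `BigQueryTable`; verify both against your own export. The function name `pin_plugin_version` is hypothetical, and the version strings are the short forms used in this thread (real artifact versions usually carry a patch number).

```python
import copy


def pin_plugin_version(pipeline: dict, plugin_name: str, version: str) -> dict:
    """Return a copy of the pipeline with matching stages pinned to `version`.

    Only stages whose plugin name equals `plugin_name` are changed; the
    input dictionary is left untouched.
    """
    pinned = copy.deepcopy(pipeline)
    for stage in pinned.get("config", {}).get("stages", []):
        plugin = stage.get("plugin", {})
        if plugin.get("name") == plugin_name:
            plugin.setdefault("artifact", {})["version"] = version
    return pinned


# Usage with a made-up, heavily trimmed pipeline export (an assumption).
exported = {
    "config": {
        "stages": [
            {
                "name": "BigQuery",
                "plugin": {
                    "name": "BigQueryTable",
                    "type": "batchsource",
                    "artifact": {"name": "google-cloud", "version": "0.22"},
                },
            }
        ]
    }
}
pinned = pin_plugin_version(exported, "BigQueryTable", "0.21")
print(pinned["config"]["stages"][0]["plugin"]["artifact"])
```

Load the export with `json.load`, pass it through the function, write it back with `json.dump`, and re-import the pipeline.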


I am glad to hear that you were able to resolve the issue by reverting to an earlier version of the BigQuery plugin and by activating the pushdown option in the pipeline configuration.

Your finding that these solutions worked while the latest version of the plugin did not suggests a possible bug or compatibility issue in the newest version of the BigQuery plugin. It would be worth reporting this to Google Cloud support, if you haven't already, so that it can be addressed in a future release and the tool improved for all users.
