- Re: Firestore -> BigQuery

Hi Folks,

My company has a mobile app with lots of data stored in Firestore collections. We'd like to run data analytics on the dataset, but I'm not clear on the most efficient way to get the Firestore data to BigQuery for analysis. We'd like to get the data into BigQuery and automatically keep it up to date periodically to run analytic reports and create dashboards based on various aggregations.

I'm technical but not a professional developer, so I prefer to work with no-code or low-code tools as much as feasible.

The dataset would probably be up to several million rows, by a dozen or so columns for a typical collection.

Any advice on the best way to move Firestore data to BigQuery tables continuously?

Thank you in advance!

Labels: BigQuery


As shown in the Firestore to BigQuery – Everything You Need to Know article, there are several ways to move your data from Firestore to BigQuery.

The "How to automate Firestore to BigQuery export" section of that article explains that exporting your data and its updates in real time is called streaming, and that there is a Firebase extension that streams your Firestore data directly to BigQuery.

How to stream Firestore data to BigQuery

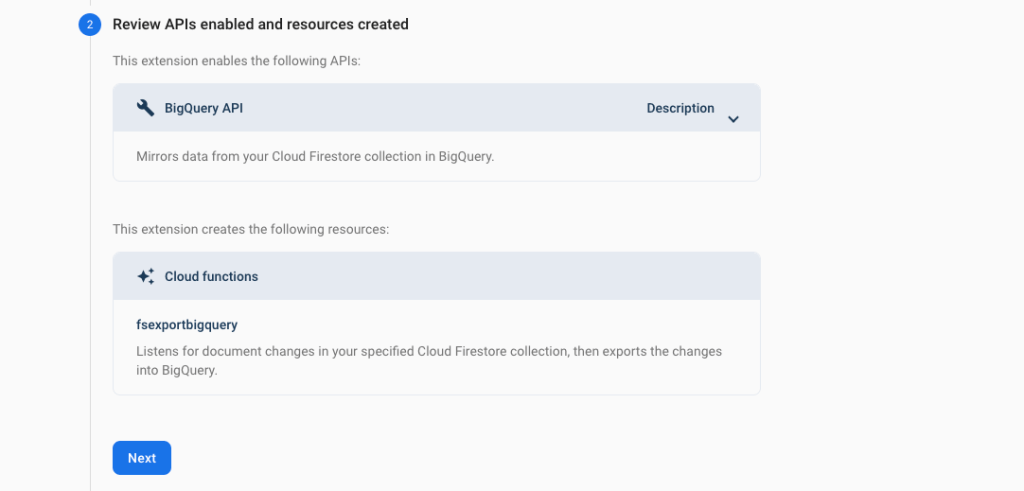

In order to stream Firestore data to BigQuery and update your BigQuery data when a document or a collection changes, you can use the “Export Collections to BigQuery” Firebase extension.

This extension exports the documents in a Cloud Firestore collection to BigQuery incrementally and in real time. It watches for document changes in the collection and automatically sends each change (document creation, update, or deletion) to BigQuery. To install this extension:

- Go to the extension page.

- If you have already set up billing, hit “Next”.

- Review which APIs will be enabled and hit “Next”.

- Review all the access rights provided to the extension and hit “Next”.

- Enter the configuration information:

  - Cloud Functions location: Usually the same location as your Firestore database and BigQuery dataset.

  - BigQuery dataset location: The location where the BigQuery dataset can be found.

  - Collection path: The collection you want to stream. You may use {wildcard} notation to match a subcollection of all documents in a collection, for example, businesses/{locationid}/restaurants. Read more about wildcards.

  - Dataset Id: The BigQuery dataset where your table will be placed.

  - Table Id: The BigQuery table where your data will be streamed.

  - BigQuery SQL table partitioning option: Set this if you need your BigQuery table partitioned.

- Click “Install extension”.
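To make the {wildcard} notation in the collection path more concrete, here is a minimal Python sketch of how such a path could be matched against document paths. It only illustrates the semantics described above and is not the extension's own code; the function names `collection_path_to_regex` and `match_document` are made up for this example.

```python
import re


def collection_path_to_regex(collection_path: str) -> re.Pattern:
    """Turn a path such as 'businesses/{locationid}/restaurants' into a regex
    that matches the paths of documents directly inside that collection.

    Hypothetical helper for illustration only.
    """
    parts = []
    for segment in collection_path.strip("/").split("/"):
        if segment.startswith("{") and segment.endswith("}"):
            # A {wildcard} segment stands for exactly one document ID.
            name = segment[1:-1]
            parts.append(f"(?P<{name}>[^/]+)")
        else:
            # A literal collection name must match exactly.
            parts.append(re.escape(segment))
    # The trailing group is the ID of the document inside the collection.
    return re.compile("^" + "/".join(parts) + "/(?P<document_id>[^/]+)$")


def match_document(collection_path: str, document_path: str):
    """Return the captured wildcard values, or None if the document
    does not belong to the configured collection path."""
    m = collection_path_to_regex(collection_path).match(document_path)
    return m.groupdict() if m else None


if __name__ == "__main__":
    pattern = "businesses/{locationid}/restaurants"
    print(match_document(pattern, "businesses/nyc/restaurants/r1"))
    print(match_document(pattern, "businesses/nyc/cafes/c1"))
```

With the pattern from the example above, a restaurant document under any business matches, while documents in a sibling subcollection do not.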

Backfill your BigQuery dataset

This extension only sends the content of documents that have been changed – it does not export your full dataset of existing documents into BigQuery. So, to backfill your BigQuery dataset with all the documents in your collection, you can run the import script provided by this extension.

Important: Run the import script over the entire collection after installing this extension, otherwise all writes to your database during the import might be lost.
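As a rough illustration of how backfilled rows and streamed changes can be combined into a current view of the collection, the sketch below replays a small change log in plain Python. The row shape (`document_id`, `timestamp`, `operation`, `data`) and the operation names are assumptions made for this example, so check the schema of the table the extension actually creates before relying on them.

```python
from typing import Any, Dict, Iterable


def latest_state(changelog: Iterable[Dict[str, Any]]) -> Dict[str, Any]:
    """Reduce a change log to the most recent data of each live document.

    Assumed (hypothetical) row shape:
      {"document_id": str, "timestamp": int,
       "operation": "IMPORT" | "CREATE" | "UPDATE" | "DELETE",
       "data": dict or None}
    """
    latest: Dict[str, Dict[str, Any]] = {}
    for row in changelog:
        current = latest.get(row["document_id"])
        # Keep only the newest row per document, whatever the arrival order.
        if current is None or row["timestamp"] >= current["timestamp"]:
            latest[row["document_id"]] = row
    # A document whose newest row is a DELETE no longer exists.
    return {
        doc_id: row["data"]
        for doc_id, row in latest.items()
        if row["operation"] != "DELETE"
    }


if __name__ == "__main__":
    rows = [
        # Backfilled row, given an early timestamp in this sketch.
        {"document_id": "b", "timestamp": 0, "operation": "IMPORT", "data": {"n": 9}},
        # Streamed changes captured after the extension was installed.
        {"document_id": "a", "timestamp": 1, "operation": "CREATE", "data": {"n": 1}},
        {"document_id": "a", "timestamp": 3, "operation": "UPDATE", "data": {"n": 2}},
        {"document_id": "c", "timestamp": 2, "operation": "CREATE", "data": {"n": 5}},
        {"document_id": "c", "timestamp": 4, "operation": "DELETE", "data": None},
    ]
    print(latest_state(rows))
```

Because the newest row per document wins, a write that is streamed while the import is still running is not overwritten by the older backfilled copy, which is consistent with the advice to install the extension first and run the import afterwards.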


I have two different projects: Firestore is set up in Project A and BigQuery in Project B. I am getting a "dataset not found" error in my collection logs. How can I resolve that?


I got this error: Entity "9WkeuAde52Qvd1dcfo9e" was of unexpected kind "locations". How can I solve it?
