Not able to run Dataflow

Hi!

Recently I have been trying to create a dataflow for a batch job copying data from MongoDB into BigQuery. I am using the Google provided template for this with no additional configuration but the required ones.

At first I got the error code:

Failed to start the VM, launcher-XXX, used for launching because of status code: INVALID_ARGUMENT, reason: Invalid Error:

Message: Invalid value for field 'resource.networkInterfaces[0]': '{ "network": "global/networks/default", "accessConfigs": [{ "type": "ONE_TO_ONE_NAT", "name"...'. No default subnetwork was found in the region of the instance.

HTTP Code: 400.

I did not specify either network or subnetwork in the config. My understanding is that if this is not specified the worker will use the default network and subnet created when the project is created. However, my default network did not have any subnet connected to it.

The day after, when I logged in to GCP a subnetwork had appeared under my default network, and when I retried my job with the exact same configuration I got a new error, namely:

"Failed to start the VM, launcher-XXX, used for launching because of status code: UNAVAILABLE, reason: One or more operations had an error: 'operation-XXX': [UNAVAILABLE] 'HTTP_503'.."

When I researched this new error, it seemed to be caused by a region mismatch. However, I have made sure that everything runs in the same region, europe-west3: my subnet, Cloud Storage bucket and target dataset are all located there.

Any help is appreciated.

---

The first error (INVALID_ARGUMENT) indicates that Dataflow couldn't find a subnet of your default network in the europe-west3 region. Dataflow needs a subnet in the job's region to attach its launcher and worker VMs to. The second error (UNAVAILABLE, HTTP 503) is broader: it signifies that Compute Engine could not complete the VM creation request, which is often a temporary condition.

The absence of a subnet in europe-west3 directly caused the first error. Even without explicit network settings, Dataflow requires a subnet in the job's region to operate.

The second error points to a problem on the Compute Engine side in europe-west3 rather than in your configuration. Even though the new subnet resolved the first error, capacity or availability problems in that region could still be causing the UNAVAILABLE error.

Here are some troubleshooting steps to address these errors:

Network and Subnet Verification

- Confirm Subnet Existence: Ensure that your default network now includes a subnet in the europe-west3 region.

- Subnet Configuration: Verify that the subnet's IP range is large enough to accommodate the worker VMs that Dataflow will create.

- Permissions: Check that the Dataflow service account has the necessary permissions (Compute Network User and Compute Instance Admin) to operate within the subnet.
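
The subnet and permission checks above can also be run from Cloud Shell. This is a minimal sketch rather than an official tool: the network, region, project and service account e-mail are placeholders you pass in, and the filters assume the usual output fields of `gcloud compute networks subnets list` and `gcloud projects get-iam-policy`.

```shell
#!/usr/bin/env bash
# Sketch: check for a subnet in a region and list a service account's roles.
# All arguments are placeholders supplied by the caller.

# Print "NAME<TAB>RANGE" for each subnet of NETWORK in REGION.
list_region_subnets() {
  local network="$1" region="$2"
  gcloud compute networks subnets list \
    --network="$network" \
    --filter="region:$region" \
    --format="value(name,ipCidrRange)"
}

# Return success only if NETWORK has at least one subnet in REGION.
has_region_subnet() {
  [ -n "$(list_region_subnets "$1" "$2")" ]
}

# Print the project-level roles bound to a service account e-mail.
roles_for_service_account() {
  local project="$1" sa_email="$2"
  gcloud projects get-iam-policy "$project" \
    --flatten="bindings[].members" \
    --filter="bindings.members:$sa_email" \
    --format="value(bindings.role)"
}
```

For example, `has_region_subnet default europe-west3 && echo "subnet found"`, or `roles_for_service_account PROJECT_ID PROJECT_NUMBER-compute@developer.gserviceaccount.com` for the Compute Engine default service account (its e-mail uses the project number).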

Try an Alternative Region

- Temporary Workaround: If UNAVAILABLE errors persist, consider running your Dataflow job in a different region to determine whether the issue is specific to europe-west3. Note that changing regions can affect data residency and transfer costs.

Inspect Compute Engine Quotas

- VM Instances: Review whether you are nearing any Compute Engine quotas, particularly for the number of VM instances in europe-west3. Quota information can be found in the Google Cloud Console, where you can also request increases if necessary.
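
The same quota figures are available from the command line. A sketch, assuming the `quotas` list (with `metric`, `limit` and `usage` fields) that `gcloud compute regions describe` returns; metric names such as `CPUS` or `N2_CPUS` are whatever the API reports for your region:

```shell
#!/usr/bin/env bash
# Sketch: inspect regional Compute Engine quotas from Cloud Shell.

# Print a table of every regional quota: metric, limit and current usage.
show_region_quotas() {
  local region="$1"
  gcloud compute regions describe "$region" \
    --flatten="quotas[]" \
    --format="table(quotas.metric,quotas.limit,quotas.usage)"
}

# Print the remaining headroom (limit - usage) for one metric, e.g. CPUS.
quota_headroom() {
  local region="$1" metric="$2"
  gcloud compute regions describe "$region" \
    --flatten="quotas[]" \
    --format="value(quotas.metric,quotas.limit,quotas.usage)" |
    awk -v m="$metric" '$1 == m { print $2 - $3 }'
}
```

Quota headroom only tells you what your project is allowed to use; it says nothing about whether a zone currently has capacity for a given machine type.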

Advanced Troubleshooting

- Firewall Rules: Confirm that your firewall rules permit the internal TCP and UDP traffic that Dataflow worker VMs need, allowing both inbound and outbound connections between them.

- Metadata Limits: If your pipeline uses a large number of staged JAR files or complex options, consider simplifying these to avoid hitting metadata limits.
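
For the firewall item, the Dataflow documentation describes an ingress rule that lets worker VMs (which carry the `dataflow` network tag) reach each other on TCP ports 12345 and 12346. The sketch below lists a network's rules and creates such a rule; the rule name is a hypothetical placeholder. On the auto-created default network, the `default-allow-internal` rule normally covers this traffic already, so the extra rule matters mainly if that rule was removed or the network is custom.

```shell
#!/usr/bin/env bash
# Sketch: inspect firewall rules and allow Dataflow worker-to-worker traffic.

# List the firewall rules attached to NETWORK.
list_network_rules() {
  local network="$1"
  gcloud compute firewall-rules list \
    --filter="network:$network" \
    --format="table(name,direction,priority,sourceRanges.list())"
}

# Allow TCP 12345-12346 between VMs tagged "dataflow" on NETWORK.
create_dataflow_internal_rule() {
  local rule_name="$1" network="$2"
  gcloud compute firewall-rules create "$rule_name" \
    --network="$network" \
    --action=ALLOW \
    --direction=INGRESS \
    --target-tags=dataflow \
    --source-tags=dataflow \
    --priority=0 \
    --rules=tcp:12345-12346
}
```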

Additional Considerations

- Explicit Network Specification: For better control, and to avoid relying on default settings, explicitly specify your network and subnet in your Dataflow pipeline options. Refer to the Dataflow documentation for instructions on how to set these parameters.
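
With gcloud, the network and subnetwork are flags of `gcloud dataflow flex-template run`. A minimal sketch, assuming the Google-hosted template path the original poster uses later in this thread (`gs://dataflow-templates-REGION/latest/flex/MongoDB_to_BigQuery`); the job name, project, network, subnet and parameter string are placeholders. The short subnetwork form `regions/REGION/subnetworks/SUBNET` works within the same project; a Shared VPC subnet needs the full URL instead.

```shell
#!/usr/bin/env bash
# Sketch: launch the MongoDB_to_BigQuery flex template on an explicit subnet.
# Usage: run_with_explicit_network JOB PROJECT REGION NETWORK SUBNET PARAMS
run_with_explicit_network() {
  local job="$1" project="$2" region="$3" network="$4" subnet="$5" params="$6"
  gcloud dataflow flex-template run "$job" \
    --project="$project" \
    --region="$region" \
    --template-file-gcs-location="gs://dataflow-templates-$region/latest/flex/MongoDB_to_BigQuery" \
    --network="$network" \
    --subnetwork="regions/$region/subnetworks/$subnet" \
    --parameters="$params"
}
```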

---

Hello, thanks for the reply!

- I have made sure that the subnet does exist in europe-west3.

- I don't know how large the range should be but I am using the default range.

- I have made sure that the <project_id>-compute@developer.gserviceaccount.com has the correct permissions. Might have added more than necessary now but they include:

- Cloud Dataflow Service Agent

- Compute Instance Admin (v1)

- Compute Network User

- Dataflow Admin

- Dataflow Worker

- Storage Object Admin

- My organisation restricts which regions can be used, so we had to open up a new region, which we have done, but that region does not have a subnetwork connected to it. Will the new subnet be created automatically, or do I have to create it myself? If so, what IPv4 range should be used? We are using the auto-created network and subnetworks.

- For Compute Engine quotas, we are still far from any limits. This is the first thing we are doing in this project.

- The firewall rules should, to my very limited understanding of this, allow the necessary internal TCP and UDP traffic.

- I don't know about the metadata limits.

- I have tried explicitly stating the subnetwork with the full link without success.

Is there anything else I can do in order to troubleshoot further?

Thanks again

---

Hi @jonte393 ,

Since your organization uses an auto-mode network, subnets should indeed automatically be created in all regions available to your project. However, if you've opened a new region and the subnet hasn't appeared yet, you might need to either wait a bit (as there can sometimes be a delay in the auto-creation process) or create it manually to expedite the process.

Creating a Subnet Manually

If you decide to manually create a subnet:

- Go to VPC networks in the Google Cloud Console.

- Select your network and then choose "Subnets".

- Click "Create subnet" and select the new region.

- IPv4 Range: For the IP range, you can use a private range typical for internal networks, such as 10.0.0.0/20. This provides 4,092 usable IP addresses (Google Cloud reserves four addresses in every subnet's primary range), which is sufficient for most uses. Ensure this range doesn't overlap with other subnets in your network; in an auto mode network, that means staying outside 10.128.0.0/9, which auto mode uses for its own subnets.
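
The range arithmetic is easy to check before creating the subnet. A minimal bash sketch, assuming Google Cloud's four reserved addresses per primary range and the 10.128.0.0/9 block used by auto mode subnets; the subnet name you pass to `create_subnet` is your own choice.

```shell
#!/usr/bin/env bash
# Sketch: size a candidate primary range and check it for overlaps before
# creating a subnet. Google Cloud reserves four addresses in each primary range.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Usable addresses in a primary range with the given prefix length.
usable_addresses() {
  echo $(( (1 << (32 - $1)) - 4 ))
}

# Return success if CIDR blocks $1 and $2 share any address.
cidrs_overlap() {
  local a_size=$(( 1 << (32 - ${1#*/}) )) b_size=$(( 1 << (32 - ${2#*/}) ))
  # Align each start address down to its block boundary.
  local a_start=$(( $(ip_to_int "${1%/*}") / a_size * a_size ))
  local b_start=$(( $(ip_to_int "${2%/*}") / b_size * b_size ))
  (( a_start < b_start + b_size && b_start < a_start + a_size ))
}

# Create SUBNET_NAME in NETWORK and REGION with the given primary range.
create_subnet() {
  local name="$1" network="$2" region="$3" range="$4"
  gcloud compute networks subnets create "$name" \
    --network="$network" --region="$region" --range="$range"
}
```

For example, `cidrs_overlap 10.0.0.0/20 10.128.0.0/9 || create_subnet SUBNET_NAME default REGION 10.0.0.0/20` only creates the subnet when the range is clear of the auto mode block.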

It seems you’ve assigned comprehensive permissions to your service account, which is good. Over-permissioning isn't ideal for security practices (principle of least privilege), but it can help rule out permission issues in troubleshooting phases.

If the firewall settings are a concern, you can specifically check that the rules allow:

- All internal traffic within the VPC (sourceRanges set to internal IP ranges like 10.0.0.0/8).

- Necessary external traffic, if your workers need to access Google services or other external endpoints.

Metadata limits typically involve limits on the size and number of items (like JAR files, temporary files) that can be handled by the system. These are not often a concern unless your job configuration is unusually large or complex.

Since specifying the subnet explicitly didn't resolve the issue, this suggests the problem might not be with the subnet settings per se but perhaps with how the resources are being provisioned or interacted with.

Additional Troubleshooting Steps

- Logs and Error Messages: Look at the detailed logs in Dataflow and Compute Engine. The logs often contain more specific error messages or warnings than the general errors you see on the surface.

- Networking Test: Run a smaller, simpler job that uses similar resources to see whether the issue lies in the specific configuration of your main job or in the basic network setup.

- Google Cloud Support: Given your organization's restrictions and the complexity of your setup, engaging Google Cloud Support might provide insights specific to your configuration and into backend issues that are not visible through the console.
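
Logs can be pulled with `gcloud logging read` as well as in the console. A sketch, assuming Dataflow job logs use the `dataflow_step` resource type with a `job_id` label, and that a stockout error contains the literal text `ZONE_RESOURCE_POOL_EXHAUSTED` somewhere in the log entry (the global text search is an assumption about where the string is logged); the project and job ID are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: read recent log entries that help explain a failed Dataflow launch.

# Warnings and errors logged for one Dataflow job over the last 7 days.
dataflow_job_problems() {
  local project="$1" job_id="$2"
  gcloud logging read \
    "resource.type=\"dataflow_step\" AND resource.labels.job_id=\"$job_id\" AND severity>=WARNING" \
    --project="$project" --freshness=7d --limit=50
}

# Entries anywhere in the project that mention a zonal resource stockout.
resource_exhaustion_entries() {
  local project="$1"
  gcloud logging read '"ZONE_RESOURCE_POOL_EXHAUSTED"' \
    --project="$project" --freshness=7d --limit=20
}
```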

---

Thanks for the reply once again @ms4446!

So, just like you said, I had to wait a bit for the new subnet to be created in the new region. I then tried running the job in the new region but ran into the same issue.

I looked into the logs of the Compute Engine which gave me the error message:

---

I also tried different machine types: n1-standard-1, n1-standard-2 and n2d-standard-2. The same issue persists...

---

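Dealing with the "ZONE_RESOURCE_POOL_EXHAUSTED" error can indeed be challenging. This error means that the zone in which Compute Engine is trying to create your VM doesn't currently have enough resources (like CPUs or memory) for the requested machine type. It reflects capacity on Google Cloud's side rather than your project's quota, which would produce a different (quota exceeded) error. Here are some steps and considerations to help address this issue: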

Strategies to Handle Resource Exhaustion

- Wait and Retry: Sometimes simply waiting and retrying later is effective, because the capacity available in a zone changes over time.

- Try Other Zones: A Dataflow job runs in a single region and places its workers in one zone, so a single job cannot be spread across zones or regions. If your configuration pins a zone, try another zone in the same region, or run the job in a different region, to work around a single zone's resource exhaustion.

- Contact Support: If this issue is persistent and impacting your operations, contacting Google Cloud Support can provide insights into when resources might be available or whether there's an ongoing capacity issue in the region.
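
To see which zones can currently supply a machine type, you can do what the original poster later does by hand: try to create a throwaway VM in each zone. A sketch, assuming the hypothetical instance name `capacity-probe` is unused in your project (each successful probe briefly creates a real, billed VM) and that gcloud's error output contains the text `RESOURCE_POOL_EXHAUSTED` on a stockout. Once a zone reports `ok`, `gcloud dataflow flex-template run` accepts `--worker-zone` to pin the workers there; whether that also places the launcher VM is an assumption to verify.

```shell
#!/usr/bin/env bash
# Sketch: probe which zones can currently create a VM of a given machine type.
# Usage: probe_zones MACHINE_TYPE ZONE [ZONE...]
probe_zones() {
  local machine_type="$1"; shift
  local zone err
  for zone in "$@"; do
    # Capture stderr only; stdout is discarded.
    if err="$(gcloud compute instances create capacity-probe \
        --zone="$zone" --machine-type="$machine_type" --quiet 2>&1 >/dev/null)"; then
      echo "$zone: ok"
      # Delete the probe VM straight away.
      gcloud compute instances delete capacity-probe --zone="$zone" --quiet >/dev/null 2>&1
    elif grep -q RESOURCE_POOL_EXHAUSTED <<<"$err"; then
      echo "$zone: exhausted"
    else
      echo "$zone: error"
    fi
  done
}
```

For example, `probe_zones n1-standard-1 europe-north1-a europe-north1-b europe-north1-c`.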

Trying Different Combinations of Regions/Zones

- Persistent Issue Across Regions: Since you've already tried multiple zones and regions without success, continuing to try random combinations is unlikely to be the most efficient use of your time.

- Strategic Selection of Regions: Instead of picking regions at random, consider regions known for higher availability, or newer regions that might have more free capacity. This can require adjusting your network settings to include those regions.

External Connections and Firewall Settings

For your connection to an external MongoDB database:

- Default Firewall Settings: Every VPC network has an implied rule that allows all egress traffic, so outgoing connections to an external database are allowed unless a higher-priority firewall rule or firewall policy denies them. The workers also need a path to the internet (external IP addresses, which Dataflow uses by default, or Cloud NAT), and the MongoDB server itself must accept connections from those addresses.

- Creating Firewall Rules: If egress is blocked in your network, make sure that outgoing traffic to the IP address (and port) of your MongoDB server is explicitly allowed. Here's how you can do it:

  - Go to the VPC network section in the Google Cloud Console.

  - Click Firewall and then Create Firewall Rule.

  - Set the direction of traffic to Egress, and specify the targets (e.g., all instances in the network), the destination IP ranges (your MongoDB server's address), and the required ports (usually 27017 for MongoDB).

  - Save and apply the rule.
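
The equivalent gcloud command, as a sketch: the rule name and MongoDB address range are placeholders, the rule targets the `dataflow` network tag that Dataflow puts on its workers, and it only takes effect if its priority number is lower than that of the rule denying egress (1000 is just a default here).

```shell
#!/usr/bin/env bash
# Sketch: allow Dataflow workers to open connections to a MongoDB server.
# Only useful when a higher-priority rule denies egress; otherwise the implied
# allow-egress rule already permits this traffic.
# Usage: create_mongodb_egress_rule RULE_NAME NETWORK MONGO_CIDR [PRIORITY]
create_mongodb_egress_rule() {
  local rule_name="$1" network="$2" mongo_cidr="$3" priority="${4:-1000}"
  gcloud compute firewall-rules create "$rule_name" \
    --network="$network" \
    --direction=EGRESS \
    --action=ALLOW \
    --target-tags=dataflow \
    --destination-ranges="$mongo_cidr" \
    --rules=tcp:27017 \
    --priority="$priority"
}
```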

Considerations for Different Machine Types

- Machine Type Impacts: If changing machine types didn't resolve the issue, the cause is less likely to be the compute requirements of the instances and more likely to be overall regional resource availability.

- Use Custom Machine Types: If standard machine types are not available, you might try custom machine types, whose configurations (like different CPU-to-memory ratios) might be more readily available.
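
Custom machine types are requested by name. A sketch of building that name, assuming the documented patterns `custom-VCPUS-MEMORY_MB` for N1 and `FAMILY-custom-VCPUS-MEMORY_MB` for families such as n2 or n2d; it checks only that memory is a multiple of 256 MB, not the per-family limits on vCPU counts or memory per vCPU.

```shell
#!/usr/bin/env bash
# Sketch: build a custom machine type name (memory in MB).
# Usage: custom_machine_type FAMILY VCPUS MEMORY_MB
custom_machine_type() {
  local family="$1" vcpus="$2" memory_mb="$3"
  if (( memory_mb % 256 != 0 )); then
    echo "memory must be a multiple of 256 MB" >&2
    return 1
  fi
  if [ "$family" = "n1" ]; then
    echo "custom-${vcpus}-${memory_mb}"
  else
    echo "${family}-custom-${vcpus}-${memory_mb}"
  fi
}
```

The result can be passed wherever a machine type is expected, for example `--worker-machine-type="$(custom_machine_type n2 2 8192)"`.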

Next Steps

- Review and Adjust Quotas: Double-check that you have enough quota in the desired regions for CPUs and other resources. Even if the region has capacity, your project's quota could be a limiting factor.

- Scheduled Deployment: Consider scheduling your job to run during off-peak hours when the demand for resources might be lower.

If you continue to face these issues despite following these strategies, engaging with Google Cloud's customer support might provide more specific assistance and possibly escalate the issue if it's indicative of a larger systemic problem.

---

Hello again, I let this rest during the weekend and have now spent the entirety of today with the problem.

- I let it wait during the weekend and am still running into the same issues.

- I thought that Dataflow Jobs for MongoDB did not allow multi-region, but have asked my GCP-admin to try and open up multi-region.

- For machine types, I've tried many different configurations. What I noticed in the logs, though, is that even though I specify a machine type different from the default one in the Dataflow console, it still gives me this error message.

I did successfully run the word-count job with a custom-machine type but when I tried running my MongoDB-job in the same region with the same machines it did not work.

I did check our quotas and we are not exceeding any of them.

- For the Firewall-rules, if this was the problem would I not be getting a different error message then?

---

Generally, Dataflow jobs are regional, not multi-regional. This means that all resources used by a job, including temporary and staging locations, need to be in the same region as the Dataflow job. Expanding access to multiple regions won't typically help unless you're specifically aiming to distribute different jobs or increase your chances of finding available resources.

If the logs indicate that the system is defaulting to a different machine type than you specify, there might be a misconfiguration in how the machine type is being set in the job’s parameters. Double-check that the machine type is correctly specified in your job configuration.

The fact that the word-count job succeeded suggests the setup and basic configurations are correct. The difference in outcomes between the word-count and MongoDB jobs could point to specific resource or configuration needs unique to the MongoDB job.

If the firewall were blocking your MongoDB connections, you would typically see timeout errors or specific connection refusal errors, not the ZONE_RESOURCE_POOL_EXHAUSTED error. This error strictly relates to resource availability within the Google Cloud infrastructure.

To eliminate any doubts regarding firewall settings, you might set up a simple VM in the same network and try to connect to your MongoDB instance using the same credentials and connection string. This can help confirm if the issue is network-related or specific to how Dataflow handles the connection.
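
From such a VM, a quick reachability check needs no MongoDB client. A sketch using bash's built-in `/dev/tcp` redirection and the coreutils `timeout` command; the host is a placeholder, and for a `mongodb+srv://` URI you would test one of the host names its SRV record points to. A successful connection only shows that the network path and port are open, not that authentication will succeed.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a TCP connection to HOST:PORT opens within SECONDS.
# Usage: can_connect HOST [PORT] [SECONDS]   (PORT defaults to MongoDB's 27017)
can_connect() {
  local host="$1" port="${2:-27017}" secs="${3:-5}"
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example, `can_connect MONGO_HOST && echo reachable || echo "not reachable"`.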

Since you successfully ran a word-count job, try modifying the MongoDB job to simplify it (e.g., reduce data volumes or complexity). See if there’s a particular aspect of the MongoDB job (like large data pulls) that could be causing the resource exhaustion.

Dive deeper into the logs. Look not just for errors but also for warnings or informational messages that might give clues about what’s happening when the MongoDB job is initiated.

Given the persistent nature of your issue and the complexity involved, getting direct support from Google Cloud might yield faster resolution. They can provide insights specific to your project's resource usage and configuration.

---

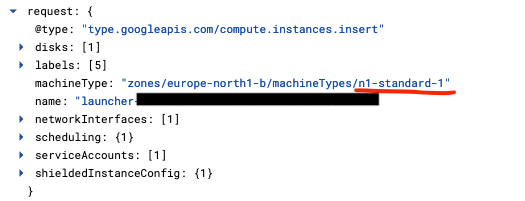

Okay, so we have done further troubleshooting and I believe it has to do with the machine-type.

We are not able to create a VM-instance with the n1-standard-1 machine type in europe-north1 due to resource exhaustion. However, we are able to create an n2-standard-2 machine type.

When I ran the word-count job, it was not able to create the VM instance with n1-standard-1 in either europe-north1 or europe-west3, regardless of the zone I chose. But we could run the job with the n2-standard-2 machine type.

When I try to start the MongoDB job and set the machine type to either a custom machine type or n2-standard-2, the request still tries to create an n1-standard-1 machine. I have tried both Cloud Shell and the console, but neither actually changes the request to the machine type I specified; it still tries to start an n1-standard-1.

My Cloud Shell code:

```shell
gcloud dataflow flex-template run XXX \
  --project=XXX \
  --region=europe-north1 \
  --template-file-gcs-location=gs://dataflow-templates-europe-north1/latest/flex/MongoDB_to_BigQuery \
  --parameters \
"outputTableSpec=XXX,\
mongoDbUri=XXX,\
database=XXX,\
collection=XXX,\
userOption=NONE,\
workerMachineType=n2-standard-2"
```

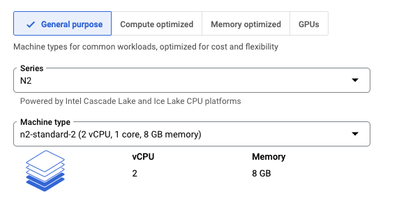

In the console I'm specifying the machine-type under the Optional Parameters:

This is the request in the logs for the VM-instance

I don't know whether this is a bug in the MongoDB to BigQuery template or something else, but I've opened a GitHub issue for it as well.

---

So after some digging around I found this in the terraform script for the template:

```hcl
variable "launcher_machine_type" {
  type        = string
  description = "The machine type to use for launching the job. The default is n1-standard-1."
  default     = null
}

variable "machine_type" {
  type        = string
  description = "The machine type to use for the job."
  default     = null
}
```

The launcher_machine_type is a parameter I can't find a way of setting in either Cloud Shell or the console, and I assume it is the reason the job always falls back to the n1-standard-1 machine type instead of the machine type I specify in the workerMachineType parameter.
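
The Terraform variable above mirrors a launch setting that gcloud also exposes: `gcloud dataflow flex-template run` has a `--launcher-machine-type` flag (documented default n1-standard-1) for the launcher VM named in the original error, alongside `--worker-machine-type` for the workers. A sketch of the command above with both flags set, assuming your gcloud release includes them (check `gcloud dataflow flex-template run --help`); the XXX values remain placeholders, and using one machine type for both is only to keep the sketch short.

```shell
#!/usr/bin/env bash
# Sketch: launch MongoDB_to_BigQuery with explicit launcher and worker types.
# Usage: launch_mongodb_to_bq JOB PROJECT REGION MACHINE_TYPE PARAMS
launch_mongodb_to_bq() {
  local job="$1" project="$2" region="$3" machine_type="$4" params="$5"
  gcloud dataflow flex-template run "$job" \
    --project="$project" \
    --region="$region" \
    --template-file-gcs-location="gs://dataflow-templates-$region/latest/flex/MongoDB_to_BigQuery" \
    --launcher-machine-type="$machine_type" \
    --worker-machine-type="$machine_type" \
    --parameters="$params"
}

# Example with the placeholders from the original command:
# launch_mongodb_to_bq XXX XXX europe-north1 n2-standard-2 \
#   "outputTableSpec=XXX,mongoDbUri=XXX,database=XXX,collection=XXX,userOption=NONE"
```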

---

It looks like the issue you're encountering might be related to how the Dataflow job configuration is managed in the MongoDB to BigQuery template, specifically around the handling of machine type parameters. The details you provided about the launcher_machine_type and machine_type variables in the Terraform script suggest a potential gap in how these parameters are exposed or used.

Steps and Considerations to Address This Issue

- Confirm Template Configuration:

  - Review the GitHub repository for the MongoDB to BigQuery template to make sure you understand its default settings and mandatory parameters, especially those related to machine types.

  - Templates are sometimes configured with defaults that override user settings if those settings are not passed the way the template expects.

- Set the Machine Types in the Terraform Configuration:

  - If you launch the job with that Terraform configuration, you can set launcher_machine_type and machine_type to types that are available in your region, for example by changing the default of launcher_machine_type to n2-standard-2 or another appropriate type (or by passing `-var="launcher_machine_type=n2-standard-2"` to `terraform apply`):

    ```hcl
    variable "launcher_machine_type" {
      type        = string
      description = "The machine type to use for launching the job."
      default     = "n2-standard-2"
    }
    ```

  - Because these are input variables of the launch configuration, they change how the job is started rather than the template itself, so you should not need to host a modified copy of the template in your own Cloud Storage bucket.

- Explicitly Specify Machine Types:

  - Ensure that the workerMachineType parameter is correctly specified, as you have done.

  - You might also need to specify the launcher machine type. The Flex Template launch API has a launcherMachineType setting, and recent gcloud releases expose it as --launcher-machine-type on gcloud dataflow flex-template run; check gcloud dataflow flex-template run --help to confirm that your version has it.

- Contact Google Cloud Support:

  - Since this issue could indicate a bug or a missing feature in the template or in how the Dataflow service handles it, reaching out to Google Cloud Support might provide more direct and detailed assistance.

  - They can offer guidance specific to your project and resource configuration.

- Monitor and Follow Up on the GitHub Issue:

  - Since you've already opened a GitHub issue, keep an eye on it for updates from the template maintainers.

  - Maintainer responses and community feedback may include fixes or workarounds that other users have found effective.

- Test with Different Configurations:

  - As a temporary workaround, test the job with regions or machine types that are not facing resource exhaustion.

  - This can help isolate whether the issue is strictly related to machine type handling or to a broader configuration or template issue.

If after these steps the issue persists, you might need to consider alternative ways to orchestrate your workload, possibly using a custom setup or another template that allows more granular control over the execution environment.

---

Hey there,

I'm having similar frustrating challenges except with postgres.

Just wondering if you ever managed to solve this?

---

No, nothing unfortunately. I posted on their GitHub but have not received a reply there, so I went a different route and ultimately used BigQuery Data Transfer instead.

---

Hi @jonte393 the errors you’re facing seem to stem from network and region configuration issues with your Dataflow job. Let me walk you through the root causes and provide solutions to get things running smoothly:

Error 1: INVALID_ARGUMENT - No Default Subnetwork

This error happens when your default network doesn’t have a subnetwork in the specified region. While GCP typically creates a default network and subnets for you, some configurations or project setups might skip this.

Solution: Create a Subnetwork

- Go to VPC Network > Subnets in your GCP Console.

- Create a new subnetwork in the required region (e.g., europe-west3).

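- Update your Dataflow job configuration to use this subnetwork explicitly with the --subnetwork parameter, given either as regions/REGION/subnetworks/SUBNET or as the full URL https://www.googleapis.com/compute/v1/projects/PROJECT/regions/REGION/subnetworks/SUBNET.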

Error 2: UNAVAILABLE - HTTP_503

This error usually indicates resource constraints in the region, such as insufficient compute resources or temporary service disruptions.

Solution Options:

Retry the Job

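These errors are often transient and may resolve if you resubmit the job after some time; retrying here means resubmitting the job, either by hand or from your own script or scheduler.

Flags such as --maxNumWorkers=3 --autoscalingAlgorithm=THROUGHPUT_BASED cap how many worker VMs the job requests once it is running. They control autoscaling rather than retries, and they do not affect the launcher VM that failed to start.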

Switch to a Nearby Region

If retries don’t work, consider switching to a region with better resource availability, such as europe-west1 or europe-west4.

Alternative Approach: Use Windsor.ai for MongoDB to BigQuery Transfers

If configuring Dataflow proves too challenging or time-consuming, consider using a platform like Windsor.ai.

Why Windsor.ai?

- Simpler Setup: No need to deal with complex network configurations.

- Automated Pipelines: Supports incremental updates for efficient data transfers.

- User-Friendly Interface: Perfect for both batch and real-time migrations.

Hope this helps!
