- Google Cloud

- Cloud Forums

- Data Analytics

- Re: Using BigQueryTemplate for streaming ongoing i...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

From a java application (spring) i need to stream (insert) ongoing data (1TB per day).

Can i use for this BigQueryTemplate?

Or for streaming i must use Storage Write Api?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Each API offers advantages under different scenarios:

- JsonStreamWriter: Ideal for fine-tuning performance with asynchronous capabilities and automated schema updates.

- BigQueryWriteClient: More straightforward for simpler streaming workflows and manual asynchronous control.

Since your primary goal is optimization, the JsonStreamWriter might be a better fit due to its asynchronous design.

AppendRowsRequest Reuse Unfortunately, you cannot reuse the AppendRowsRequest.build() object. Each request encapsulates a unique batch of data. While there's some overhead in constructing these requests, it's usually less significant than network and serialization factors. Focus on optimizing batch size for better performance.

BigQueryWriteClient with Multiple Tables Yes! It's perfectly fine to reuse the BigQueryWriteClient across multiple tables in parallel. The key is to manage separate write streams for each table to ensure data integrity.

Transforming Rows with ProtoData The .map(row -> AppendRowsRequest.ProtoData.newBuilder()...) section converts rows into Google's Protobuf format. This is essential for BigQuery's efficient data transfer. However, since your source data is already in JSON, using JsonStreamWriter for direct JSON ingestion would streamline the process.

JSON Input and JsonStreamWriter To simplify your process, I highly recommend using JsonStreamWriter directly with your JSON data. It eliminates the Protobuf conversion step, simplifying your code.

API Methods and Versions Please double-check your google-cloud-bigquerystorage library version for compatibility. Method availability can vary across versions.

Additional Tips

- Error Handling: Implement robust error handling and retries for resilience.

- Monitoring: Establish logging and metrics for visibility into your streaming operations.

- Security: Always prioritize data security and compliance best practices.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Based on the volume and requirements you described, the BigQuery Storage Write API is highly recommended. This tool is specifically engineered for high-volume, low-latency streaming, ensuring efficient and cost-effective data management.

Key Benefits:

- High Throughput: Seamlessly manages large-scale data transfers.

- Low Latency: Ensures data is quickly available for querying.

- Cost-Effective: Designed to minimize costs at large scales.

The BigQueryTemplate might not meet your needs effectively due to its limitations with high-throughput streaming.

Implementation Tips:

- Batch Processing: Group data into batches or micro-batches to optimize throughput and control costs.

- Data Serialization with Avro: Utilizes a compact format that enhances schema management—critical for handling large datasets. Implementing Avro serialization involves encoding your data structures into a byte format that BigQuery can process efficiently.

- Schema Management: Regular updates and compatibility checks with your BigQuery schema are essential for maintaining data integrity.

- Cost Management: Keep a close watch on your expenses; consider fixed pricing plans or dedicated query slots as economical solutions.

- Integration Options: Utilize Apache Beam or Spring Cloud Data Flow to enhance your data processing workflows, providing flexibility and scalability for complex scenarios.

Example:

import com.google.cloud.bigquery.storage.v1.AppendRowsRequest;

import com.google.cloud.bigquery.storage.v1.BigQueryWriteClient;

import com.google.cloud.bigquery.storage.v1.TableName;

import com.google.protobuf.ByteString;

import java.util.List;

import java.util.stream.Collectors;

public class BigQueryStreamExample {

public static void main(String[] args) throws Exception {

try (BigQueryWriteClient writeClient = BigQueryWriteClient.create()) {

String projectId = "your-project-id";

String datasetName = "your-dataset-name";

String tableName = "your-table-name";

TableName parentTable = TableName.of(projectId, datasetName, tableName);

List<ByteString> rows = prepareDataRows(); // Serialize data, ideally using Avro

AppendRowsRequest request = AppendRowsRequest.newBuilder()

.setWriteStream(parentTable.toString())

.addAllRows(rows.stream()

.map(row -> AppendRowsRequest.ProtoData.newBuilder().setRows(row).build())

.collect(Collectors.toList()))

.build();

writeClient.appendRowsCallable().call(request);

}

}

private static List<ByteString> prepareDataRows() {

// Placeholder for data serialization logic

return null;

}

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for detailed reply, some follow up questions:

you are using different api then I assumed,

I was thinking about

com.google.cloud.bigquery.storage.v1.JsonStreamWriter;

as it allows , as I understand, asynchronous execution and other tweaks.

can you elaborate which one is better?

Also from your code,

should I crate

AppendRowsRequest.build()

for each mcro-batch(row)? or I can reuse it?

If I can't reuse, is the build heavy operation?

In case I have multiple tables can I reuse

BigQueryWriteClient

in parallel?

Wha is the reason for:

map(row -> AppendRowsRequest.ProtoData.newBuilder().setRows(row).build())

?

Why I need ProtoBuf?

FYI: my source comes in json format

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

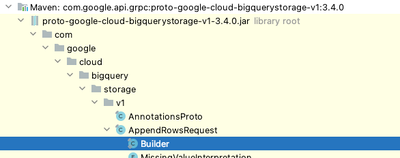

Also there is no method addAllRows in the builder (3.4.0)

and writeClient.appendRowsCallable().call() - not accepting AppendRowRequest

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Each API offers advantages under different scenarios:

- JsonStreamWriter: Ideal for fine-tuning performance with asynchronous capabilities and automated schema updates.

- BigQueryWriteClient: More straightforward for simpler streaming workflows and manual asynchronous control.

Since your primary goal is optimization, the JsonStreamWriter might be a better fit due to its asynchronous design.

AppendRowsRequest Reuse Unfortunately, you cannot reuse the AppendRowsRequest.build() object. Each request encapsulates a unique batch of data. While there's some overhead in constructing these requests, it's usually less significant than network and serialization factors. Focus on optimizing batch size for better performance.

BigQueryWriteClient with Multiple Tables Yes! It's perfectly fine to reuse the BigQueryWriteClient across multiple tables in parallel. The key is to manage separate write streams for each table to ensure data integrity.

Transforming Rows with ProtoData The .map(row -> AppendRowsRequest.ProtoData.newBuilder()...) section converts rows into Google's Protobuf format. This is essential for BigQuery's efficient data transfer. However, since your source data is already in JSON, using JsonStreamWriter for direct JSON ingestion would streamline the process.

JSON Input and JsonStreamWriter To simplify your process, I highly recommend using JsonStreamWriter directly with your JSON data. It eliminates the Protobuf conversion step, simplifying your code.

API Methods and Versions Please double-check your google-cloud-bigquerystorage library version for compatibility. Method availability can vary across versions.

Additional Tips

- Error Handling: Implement robust error handling and retries for resilience.

- Monitoring: Establish logging and metrics for visibility into your streaming operations.

- Security: Always prioritize data security and compliance best practices.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you please post the maven dependency compatible with the above code you posted?

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To work with BigQuery, specifically utilizing essential components like JsonStreamWriter or BigQueryWriteClient, you'll need to integrate Google's google-cloud-bigquerystorage library into your Maven project. Let's see how to configure your pom.xml file:

<dependencies>

<dependency>

<groupId>com.google.cloud</groupId>

<artifactId>google-cloud-bigquerystorage</artifactId>

<version>3.4.0</version>

</dependency>

<dependency>

<groupId>io.grpc</groupId>

<artifactId>grpc-netty-shaded</artifactId>

<version>1.47.0</version> </dependency>

<dependency>

<groupId>com.google.cloud</groupId>

<artifactId>google-cloud-bigquery</artifactId>

<version>2.8.0</version> </dependency>

</dependencies> Points to Note:

- Version Compatibility: Double-check that the versions of

google-cloud-bigquerystorageand other dependencies play nicely together. Mismatches can cause problems during execution. - Updates: Stay on top of the latest library versions for access to new features and enhancements. Find these on the Maven Central Repository or the official Google Cloud Java Client for Google BigQuery Storage GitHub page (https://cloud.google.com/java/docs/reference/google-cloud-bigquerystorage/latest/com.google.cloud.bi...).

- Additional Libraries: Your application might require extra dependencies (e.g., Google's authentication libraries) for seamless operational handling.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Still,

com.google.cloud.bigquery.storage.v1.Builder class doesn't have addAllRows

I also don't see in javadocs

Not sure what I am doing wrong.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Fadi_dr ,

To clarify the process and address the confusion around the addAllRows functionality.

Key Concepts:

- Protobuf and Data Representation: Data in the BigQuery Storage API must be structured using Protocol Buffers (protobufs). Each row should conform to a protobuf message definition that you've created.

- Data Batching: Group the protobuf representations of your rows into batches. This approach optimizes data transmission and management.

AppendRowsRequest: Encapsulate these batches withinAppendRowsRequestobjects.BigQueryWriteClient: Use theBigQueryWriteClientto send theseAppendRowsRequestobjects to the BigQuery Storage API.

Illustrative Code Example:

// ... (Import statements)

// Assuming you have a protobuf definition called 'MyRow'

List<MyRow> rows = ...; // Populate with your data

// Create a BigQueryWriteClient

BigQueryWriteClient client = BigQueryWriteClient.create();

// Specify your BigQuery table details

WriteStream stream = WriteStream.newBuilder()

.setTableName("project_id:dataset.table")

.build();

AppendRowsRequest.Builder requestBuilder = AppendRowsRequest.newBuilder().setWriteStream(stream);

// Add rows to the request (adjust batching as needed)

// **Comment: Control batch size here for optimization**

for (MyRow row : rows) {

requestBuilder.addProtoRows(row.toByteString());

}

// Send the request

client.appendRows(requestBuilder.build()); Important Notes:

- Error Handling: Implement robust error handling mechanisms (try-catch blocks, etc.) to manage potential issues during data transmission.

- Schema Management: Ensure your protobuf schema aligns perfectly with your BigQuery table schema to prevent mismatches and errors.

- Alternative Methods: For larger datasets or continuous data flows, consider exploring BigQuery's streaming insert capabilities, which can be found at Google's BigQuery streaming data documentation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks.

still there is no method addProtoRows

Not very important because i will go with with JsonStreamWriter

-

Analytics General

408 -

Apache Kafka

6 -

BigQuery

1,353 -

Business Intelligence

91 -

Cloud Composer

100 -

Cloud Data Fusion

103 -

Cloud PubSub

195 -

Data Catalog

91 -

Data Transfer

169 -

Dataflow

216 -

Dataform

325 -

Dataprep

27 -

Dataproc

122 -

Datastream

49 -

Google Data Studio

81 -

Looker

130

- « Previous

- Next »

Twitter

Twitter