- Re: Persistent GKE Metrics Agent Errors Following ...


Hello,

I recently upgraded both the cluster and node pool manually on my GKE instance. Since the upgrade, the gke-metrics-agent has been consistently logging errors when attempting to scrape the gpu-maintenance-handler endpoint. This is particularly strange since none of my workloads use GPUs.

Previous Version: 1.25.8-gke.1000

Current Version: 1.26.5-gke.1200

These errors did not appear in the log history prior to the upgrade, and I have not identified any performance issues or disruptions associated with them.

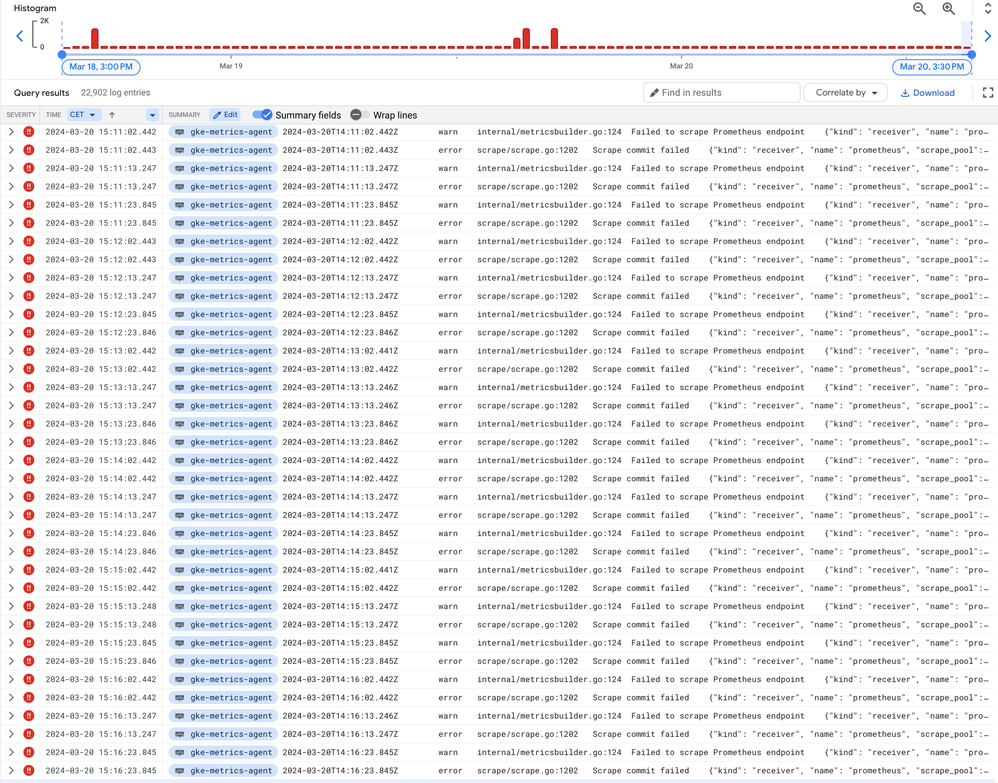

Here are the errors in the order they appear:

{

"insertId": "insertId-value",

"labels": {

"compute.googleapis.com/resource_name": "gke-generic-cluster-generic-node-pool-hash",

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/controller-revision-hash": "controller-revision-hash-value",

"k8s-pod/k8s-app": "gke-metrics-agent",

"k8s-pod/pod-template-generation": "pod-template-generation-value"

},

"logName": "projects/generic-project/logs/stderr",

"receiveTimestamp": "2023-06-21T14:35:27.987750145Z",

"resource": {

"labels": {

"cluster_name": "generic-cluster",

"container_name": "gke-metrics-agent",

"location": "europe-west3",

"namespace_name": "kube-system",

"pod_name": "gke-metrics-agent-generic",

"project_id": "generic-project"

},

"type": "k8s_container"

},

"severity": "ERROR",

"textPayload": "2023-06-21T14:35:26.486Z\twarn\tinternal/metricsbuilder.go:124\tFailed to scrape Prometheus endpoint\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_timestamp\": 1687358126485, \"target_labels\": \"map[__name__:up gke_component_name:nodes/gpu_maintenance_handler instance:127.0.0.1:8526 job:gpu-maintenance-handler]\"}",

"timestamp": "2023-06-21T14:35:26.486549967Z"

}

{

"insertId": "insertId-value",

"labels": {

"compute.googleapis.com/resource_name": "gke-generic-cluster-generic-node-pool-hash",

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/controller-revision-hash": "controller-revision-hash-value",

"k8s-pod/k8s-app": "gke-metrics-agent",

"k8s-pod/pod-template-generation": "pod-template-generation-value"

},

"logName": "projects/generic-project/logs/stderr",

"receiveTimestamp": "2023-06-21T14:35:27.987750145Z",

"resource": {

"labels": {

"cluster_name": "generic-cluster",

"container_name": "gke-metrics-agent",

"location": "europe-west3",

"namespace_name": "kube-system",

"pod_name": "gke-metrics-agent-generic",

"project_id": "generic-project"

},

"type": "k8s_container"

},

"severity": "ERROR",

"textPayload": "2023-06-21T14:35:26.486Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_pool\": \"gpu-maintenance-handler\", \"target\": \"http://127.0.0.1:8526/metrics\", \"err\": \"process_start_time_seconds metric is missing\"}",

"timestamp": "2023-06-21T14:35:26.486608287Z"

}

{

"insertId": "insertId-value",

"labels": {

"compute.googleapis.com/resource_name": "gke-generic-cluster-generic-node-pool-hash",

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/controller-revision-hash": "controller-revision-hash-value",

"k8s-pod/k8s-app": "gke-metrics-agent",

"k8s-pod/pod-template-generation": "pod-template-generation-value"

},

"logName": "projects/generic-project/logs/stderr",

"receiveTimestamp": "2023-06-21T14:35:34.993277578Z",

"resource": {

"labels": {

"cluster_name": "generic-cluster",

"container_name": "gke-metrics-agent",

"location": "europe-west3",

"namespace_name": "kube-system",

"pod_name": "gke-metrics-agent-generic",

"project_id": "generic-project"

},

"type": "k8s_container"

},

"severity": "ERROR",

"textPayload": "2023-06-21T14:35:30.523Z\twarn\tinternal/metricsbuilder.go:124\tFailed to scrape Prometheus endpoint\t{\"kind\": \"receiver\", \"name\": \"prometheus/nostarttime\", \"scrape_timestamp\": 1687358130522, \"target_labels\": \"map[__name__:up instance:127.0.0.1:10231 job:netd]\"}",

"timestamp": "2023-06-21T14:35:30.523522336Z"

}
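To see at a glance which endpoints fail and why, the `textPayload` of the "Scrape commit failed" entries can be reduced to a target/error pair. The following is a minimal sketch, assuming the payloads have already been decoded to plain text (real tab characters, unescaped quotes); the function name `extract_scrape_failures` is hypothetical and not part of any Google tooling.

```bash
# Read decoded textPayload lines on stdin and print "<target> | <err>" for
# each "Scrape commit failed" entry. Lines without both fields are ignored.
extract_scrape_failures() {
  sed -n 's/.*"target": "\([^"]*\)", "err": "\([^"]*\)".*/\1 | \2/p'
}

# Sample payload copied from the second log entry above (tabs restored).
sample="$(printf '2023-06-21T14:35:26.486Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t%s' \
  '{"kind": "receiver", "name": "prometheus", "scrape_pool": "gpu-maintenance-handler", "target": "http://127.0.0.1:8526/metrics", "err": "process_start_time_seconds metric is missing"}')"

printf '%s\n' "$sample" | extract_scrape_failures
# prints: http://127.0.0.1:8526/metrics | process_start_time_seconds metric is missing
```

One possible way to feed it, assuming `gcloud` is configured for the project: `gcloud logging read 'resource.labels.container_name="gke-metrics-agent" severity>=ERROR' --freshness=1h --format='value(textPayload)' | extract_scrape_failures | sort | uniq -c`.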

So far, I've considered adding an exclusion filter for these errors, but I wanted to understand them better before doing so. Has anyone else experienced similar issues after an upgrade? Does anyone have insights about what might be causing these errors, or any potential impacts on my cluster that I should be aware of?

Any help or advice would be greatly appreciated.

Thanks!


I'd go ahead and add the exclusion filters for now as they won't cause any issues. This issue has been reported internally and the team is currently figuring out the appropriate fix.


Hi all,

[Need latest info/update regarding solution for GKE Metrics error - related to prometheus]

We are using GKE version 1.26.10-gke.1101000 on the Stable release channel.

We frequently get the errors below in gke-metrics-agent, but we couldn't find the cause.

Is there any way to suppress these error logs, or is there a fix or workaround available?

error scrape/scrape.go:1202 Scrape commit failed {"kind": "receiver", "name": "prometheus", "scrape_pool": "gpu-maintenance-handler", "target": "http://127.0.0.1:8526/metrics", "err": "process_start_time_seconds metric is missing"}

warn internal/metricsbuilder.go:124 Failed to scrape Prometheus endpoint {"kind": "receiver", "name": "prometheus", "scrape_timestamp": 1712227367385, "target_labels": "map[__name__:up gke_component_name:nodes/gpu_maintenance_handler instance:127.0.0.1:8526 job:gpu-maintenance-handler]"}

Thanks in advance.


> I'd go ahead and add the exclusion filters

Here is an example that excludes gke-metadata-server INFO logs:

```bash
gcloud logging sinks update _Default --add-exclusion=name=exclude-unimportant-gke-metadata-server-logs,filter='
  resource.type = "k8s_container"
  resource.labels.namespace_name = "kube-system"
  resource.labels.pod_name =~ "gke-metadata-server-.*"
  resource.labels.container_name = "gke-metadata-server"
  severity <= "INFO"
'
```

You can adapt this filter to exclude the spammy entries reported above: match the container name gke-metrics-agent and a payload containing "gpu-maintenance-handler".

- https://cloud.google.com/logging/docs/export/configure_export_v2#filter-examples
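As a rough illustration of that adaptation, the sketch below builds such a filter and prints the resulting `gcloud` command so it can be reviewed before anything is changed. The helper names (`build_filter`, `print_update_command`) and the exclusion name are hypothetical; the filter fields come from the log entries in this thread, and `textPayload:"..."` is the Logging query language substring match. Keep in mind that entries excluded from the `_Default` sink are not stored in its bucket.

```bash
# build_filter PAYLOAD_SUBSTRING
# Prints a Logging filter, one comparison per line (implicitly ANDed), that
# matches gke-metrics-agent entries whose textPayload contains the substring.
build_filter() {
  cat <<EOF
resource.type = "k8s_container"
resource.labels.namespace_name = "kube-system"
resource.labels.container_name = "gke-metrics-agent"
textPayload:"$1"
EOF
}

# print_update_command EXCLUSION_NAME PAYLOAD_SUBSTRING
# Prints the command instead of running it; paste it into a shell once reviewed.
print_update_command() {
  printf "gcloud logging sinks update _Default --add-exclusion=name=%s,filter='\n%s\n'\n" \
    "$1" "$(build_filter "$2")"
}

# Hypothetical exclusion name; any unique name should do.
print_update_command exclude-gke-metrics-agent-gpu-maintenance-handler gpu-maintenance-handler
```

The same helper could presumably be reused with a different substring, for example `gke_metadata_server`, for the metadata-server variant of these messages.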


Hi,

Do you have a reference for tracking that issue? Otherwise I guess I will have to open another internal case.

BR Thomas


We are using 1.28.3-gke.1203001 and get this error.

Any update?


Is this a new cluster or an existing cluster which was upgraded?

Also, can you post the log message(s) you are seeing?


The cluster was updated from 1.27 to 1.28 last month, but I only noticed the errors this week, so I am not sure whether they were already there:

{

"textPayload": "2024-01-11T09:20:01.616Z\twarn\tinternal/metricsbuilder.go:124\tFailed to scrape Prometheus endpoint\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_timestamp\": 1704964801615, \"target_labels\": \"map[__name__:up gke_component_name:addons/gke_metadata_server instance:127.0.0.1:989 job:addons]\"}",

"timestamp": "2024-01-11T09:20:01.617190050Z",

"severity": "ERROR",

"labels": {

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/k8s-app": "gke-metrics-agent",

"compute.googleapis.com/resource_name": "gke-default-pool-40924eba-02kp",

"k8s-pod/pod-template-generation": "3",

"k8s-pod/controller-revision-hash": "76c6ff9889"

},

"receiveTimestamp": "2024-01-11T09:20:01.693056134Z"

}

{

"textPayload": "2024-01-11T09:21:01.617Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_pool\": \"addons\", \"target\": \"http://127.0.0.1:989/metricz\", \"err\": \"process_start_time_seconds metric is missing\"}",

"insertId": "a43lb9nc1ty7lxac",

"timestamp": "2024-01-11T09:21:01.617966077Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gke-default-pool-40924eba-02kp",

"k8s-pod/pod-template-generation": "3",

"k8s-pod/controller-revision-hash": "76c6ff9889",

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/k8s-app": "gke-metrics-agent"

},

"receiveTimestamp": "2024-01-11T09:21:01.690544703Z"

}

Do you suggest recreating the cluster from scratch?


You should not need to (re)create it from scratch. Just trying to see where the issue might be.


We just updated to 1.28.3-gke.1286000 and still see this error.


Seeing the same on 1.26.6-gke.1700. Is there a version with a fix for this?


Hello, I'm getting the same errors. Is there an active issue tracking this? I would like to follow along.

To give more context, the errors started when I self-deployed a Prometheus operator. But now, even after removing all Prometheus-related deployments, the error persists. Every few seconds, `gke-metrics-agent` logs two error messages:

{

"textPayload": "2024-02-05T22:38:10.056Z\twarn\tinternal/metricsbuilder.go:124\tFailed to scrape Prometheus endpoint\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_timestamp\": 1707172690055, \"target_labels\": \"map[__name__:up gke_component_name:addons/gke_metadata_server instance:127.0.0.1:989 job:addons]\"}",

"insertId": "9punzz5v2b34nf25",

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "c7e-prod",

"container_name": "gke-metrics-agent",

"location": "us-east4-a",

"pod_name": "gke-metrics-agent-bmss7",

"namespace_name": "kube-system",

"cluster_name": "us-east4-a"

}

},

"timestamp": "2024-02-05T22:38:10.057060435Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gke-us-east4-a-nap-e2-highmem-4-4nbvk-ea1fc62c-plqs",

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/pod-template-generation": "21",

"k8s-pod/k8s-app": "gke-metrics-agent",

"k8s-pod/controller-revision-hash": "6bccd476f"

},

"logName": "projects/c7e-prod/logs/stderr",

"receiveTimestamp": "2024-02-05T22:38:12.868037001Z"

}

{

"textPayload": "2024-02-05T22:38:10.056Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_pool\": \"addons\", \"target\": \"http://127.0.0.1:989/metricz\", \"err\": \"process_start_time_seconds metric is missing\"}",

"insertId": "ce9jqt5ufoogep5p",

"resource": {

"type": "k8s_container",

"labels": {

"location": "us-east4-a",

"namespace_name": "kube-system",

"project_id": "c7e-prod",

"pod_name": "gke-metrics-agent-bmss7",

"cluster_name": "us-east4-a",

"container_name": "gke-metrics-agent"

}

},

"timestamp": "2024-02-05T22:38:10.057149815Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "6bccd476f",

"k8s-pod/pod-template-generation": "21",

"compute.googleapis.com/resource_name": "gke-us-east4-a-nap-e2-highmem-4-4nbvk-ea1fc62c-plqs",

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/k8s-app": "gke-metrics-agent"

},

"logName": "projects/c7e-prod/logs/stderr",

"receiveTimestamp": "2024-02-05T22:38:12.868037001Z"

}


Did you manage to get rid of them?


I am seeing the same errors flooding the log with 1.27.7-gke.1121002 and autopilot. Has anybody managed to figure out what is going on and how to address it?


Could you paste one log entry showing which endpoint failed to be scraped?


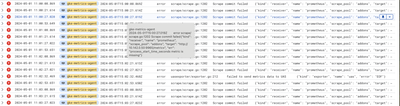

Sure! Would this be sufficient?


Add exclusion filters for now as they won't cause any issues. This issue (spam logs around gke_metadata_server) has been reported internally and the team is currently figuring out & rolling out the appropriate fix.


We are seeing the same errors plus additional ones that seem related. This is on a new Autopilot cluster

```

{

"insertId": "61ztn0mr4hn580go",

"jsonPayload": {

"stacktrace": "google3/cloud/kubernetes/metrics/components/collector/collector.runScrapeLoop\n\tcloud/kubernetes/metrics/components/collector/collector.go:86\ngoogle3/cloud/kubernetes/metrics/components/collector/collector.Run\n\tcloud/kubernetes/metrics/components/collector/collector.go:62\nmain.main\n\tcloud/kubernetes/metrics/components/collector/main.go:40\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:267",

"caller": "collector/collector.go:86",

"error": "failed to process 70 (out of 1313) input lines",

"msg": "Failed to process metrics",

"scrape_target": "http://localhost:9990/metrics",

"level": "error",

"ts": 1713527688.3943982

},

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "*****",

"cluster_name": "*****",

"location": "us-east1",

"namespace_name": "kube-system",

"pod_name": "anetd-g7f9p",

"container_name": "cilium-agent-metrics-collector"

}

},

"timestamp": "2024-04-19T11:54:48.394748813Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "56b47ff86",

"k8s-pod/k8s-app": "cilium",

"k8s-pod/pod-template-generation": "1",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-3e896fe4-v7gq"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-19T11:54:50.753109177Z"

}

```


Thanks for reporting this! What's your GKE cluster version?


I believe I was on 1.26, but after upgrading to 1.29 this morning most of the errors have gone away. After the upgrade, I went from a few hundred thousand of these errors to a few thousand. The most frequent errors now are:

{

"textPayload": "2024-04-19T13:59:53.651Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_pool\": \"addons\", \"target\": \"http://10.142.0.52:9965/metrics\", \"err\": \"process_start_time_seconds metric is missing\"}",

"insertId": "b2i0irp6br8bsy47",

"resource": {

"type": "k8s_container",

"labels": {

"container_name": "gke-metrics-agent",

"cluster_name": "*****",

"pod_name": "gke-metrics-agent-zw9mn",

"project_id": "*****",

"namespace_name": "kube-system",

"location": "us-east1"

}

},

"timestamp": "2024-04-19T13:59:53.653623276Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "gke-metrics-agent",

"k8s-pod/controller-revision-hash": "77f87b67bb",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-3e896fe4-v7gq",

"k8s-pod/component": "gke-metrics-agent"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-19T13:59:57.734292060Z"

}

And

{

"insertId": "9krsksykukai5amv",

"jsonPayload": {

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]",

"level": "error",

"msg": "Failed to export metrics to Cloud Monitoring",

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"caller": "gcm/export.go:434",

"ts": 1713535108.3320804

},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "******",

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1",

"container_name": "image-package-extractor",

"project_id": "******",

"namespace_name": "kube-system"

}

},

"timestamp": "2024-04-19T13:58:28.332394487Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-******-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/pod-template-generation": "2"

},

"logName": "projects/******/logs/stderr",

"receiveTimestamp": "2024-04-19T13:58:32.985203921Z"

}

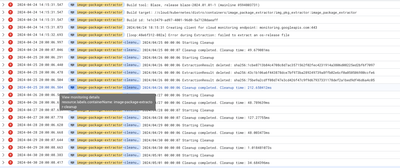

Chart After Upgrade


> I believe i was on 1.26 but after upgrading to 1.29 this morning most of the errors have gone away.

Yes, we are aware of the log-spam issue and are rolling out the fix to production.

> "textPayload": "2024-04-19T13:59:53.651Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_pool\": \"addons\", \"target\": \"http://10.142.0.52:9965/metrics\", \"err\": \"process_start_time_seconds metric is missing\"}",

This one seems new. I'd like to figure out which component serves `http://10.142.0.52:9965/metrics`. Could you share more error logs from gke-metrics-agent (after removing sensitive information)?

> image-package-extractor-nzrmb

I'm checking this one.
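For anyone trying to identify an unknown scrape target such as `10.142.0.52:9965` themselves, here is a small sketch of two checks. It assumes, based only on the error text, that the scraper expects the target to expose a `process_start_time_seconds` sample; that reading is not confirmed in this thread. Both helper names are hypothetical. Note that a host-network pod reports its node's IP, so the lookup may return several pods.

```bash
# Returns 0 if the Prometheus text exposition on stdin contains a
# process_start_time_seconds sample (with or without labels), 1 otherwise.
has_start_time_metric() {
  grep -Eq '^process_start_time_seconds(\{[^}]*\})?[[:space:]]+[^[:space:]]+'
}

# pods_by_ip_command IP
# Prints (does not run) a kubectl command listing pods that report this IP.
pods_by_ip_command() {
  printf 'kubectl get pods --all-namespaces -o wide --field-selector status.podIP=%s\n' "$1"
}

pods_by_ip_command 10.142.0.52
```

With network access to the target (for example from a debug pod on the same node), something like `curl -s http://10.142.0.52:9965/metrics | has_start_time_metric && echo present || echo missing` would show whether the endpoint exposes the metric.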


Here is a sample so you can see the frequency:

[

{

"insertId": "b1w9bdlet471bcgw",

"jsonPayload": {

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"ts": 1713807879.8436852,

"level": "error",

"caller": "gcm/export.go:434",

"msg": "Failed to export metrics to Cloud Monitoring",

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]"

},

"resource": {

"type": "k8s_container",

"labels": {

"namespace_name": "kube-system",

"project_id": "*****",

"container_name": "image-package-extractor",

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1",

"cluster_name": "*****-cluster"

}

},

"timestamp": "2024-04-22T17:44:39.843964020Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:44:42.986227404Z"

},

{

"insertId": "ydu9m7s90t1jzemf",

"jsonPayload": {

"pid": "1",

"message": "[loop:111494d1-7ffc] Failed to flush metrics: 1 error occurred:"

},

"resource": {

"type": "k8s_container",

"labels": {

"location": "us-east1",

"pod_name": "image-package-extractor-nzrmb",

"cluster_name": "*****-cluster",

"project_id": "*****",

"container_name": "image-package-extractor",

"namespace_name": "kube-system"

}

},

"timestamp": "2024-04-22T17:44:39.844018024Z",

"severity": "ERROR",

"labels": {

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/pod-template-generation": "2"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T17:44:42.986227404Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "bjd2lrzhvl1a79rf",

"resource": {

"type": "k8s_container",

"labels": {

"namespace_name": "kube-system",

"container_name": "image-package-extractor",

"project_id": "*****",

"location": "us-east1",

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb"

}

},

"timestamp": "2024-04-22T17:44:39.844026764Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/pod-template-generation": "2"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:44:42.986227404Z"

},

{

"insertId": "mphzrjb69j8jkwic",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"namespace_name": "kube-system",

"cluster_name": "*****-cluster",

"location": "us-east1",

"project_id": "*****",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T17:44:39.844036633Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:44:42.986227404Z"

},

{

"insertId": "4yaee9or924mwznn",

"jsonPayload": {

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]",

"caller": "gcm/export.go:434",

"ts": 1713808179.8788006,

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"msg": "Failed to export metrics to Cloud Monitoring",

"level": "error"

},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1",

"namespace_name": "kube-system",

"project_id": "*****",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T17:49:39.879074866Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:49:42.986718157Z"

},

{

"insertId": "h6rb4crynhaoycqv",

"jsonPayload": {

"pid": "1",

"message": "[loop:1640181e-2cd5] Failed to flush metrics: 1 error occurred:"

},

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"project_id": "*****",

"cluster_name": "*****-cluster",

"container_name": "image-package-extractor",

"namespace_name": "kube-system",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T17:49:39.879130090Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T17:49:42.986718157Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "36rsvn83qmp6t5ev",

"resource": {

"type": "k8s_container",

"labels": {

"namespace_name": "kube-system",

"container_name": "image-package-extractor",

"project_id": "*****",

"location": "us-east1",

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb"

}

},

"timestamp": "2024-04-22T17:49:39.879138850Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:49:42.986718157Z"

},

{

"insertId": "4s678m0emtkqu3fl",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "*****",

"namespace_name": "kube-system",

"cluster_name": "*****-cluster",

"container_name": "image-package-extractor",

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T17:49:39.879143766Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:49:42.986718157Z"

},

{

"insertId": "5u2y2i6q2z6re20c",

"jsonPayload": {

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]",

"ts": 1713808479.9243226,

"caller": "gcm/export.go:434",

"msg": "Failed to export metrics to Cloud Monitoring",

"level": "error"

},

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"project_id": "*****",

"location": "us-east1",

"namespace_name": "kube-system",

"cluster_name": "*****-cluster",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T17:54:39.924640468Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:54:42.993583135Z"

},

{

"insertId": "0v66ob3147msmsy5",

"jsonPayload": {

"pid": "1",

"message": "[loop:22111037-6d9a] Failed to flush metrics: 1 error occurred:"

},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb",

"container_name": "image-package-extractor",

"project_id": "*****",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T17:54:39.924724111Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T17:54:42.993583135Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "et7p0lyt920kmxit",

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"project_id": "*****",

"cluster_name": "*****-cluster",

"namespace_name": "kube-system",

"container_name": "image-package-extractor",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T17:54:39.924734378Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:54:42.993583135Z"

},

{

"insertId": "a43qcsqwbwm262t5",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"project_id": "*****",

"cluster_name": "*****-cluster",

"namespace_name": "kube-system",

"location": "us-east1",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T17:54:39.924739335Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:54:42.993583135Z"

},

{

"insertId": "ztr6tvtpsmfhjor1",

"jsonPayload": {

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"ts": 1713808779.9680266,

"caller": "gcm/export.go:434",

"level": "error",

"msg": "Failed to export metrics to Cloud Monitoring",

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]"

},

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"project_id": "*****",

"namespace_name": "kube-system",

"container_name": "image-package-extractor",

"cluster_name": "*****-cluster",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T17:59:39.968348780Z",

"severity": "ERROR",

"labels": {

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/pod-template-generation": "2"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:59:42.988893049Z"

},

{

"insertId": "c1zxforrv0zr9tuz",

"jsonPayload": {

"message": "[loop:3dded954-e4b0] Failed to flush metrics: 1 error occurred:",

"pid": "1"

},

"resource": {

"type": "k8s_container",

"labels": {

"namespace_name": "kube-system",

"project_id": "*****",

"location": "us-east1",

"container_name": "image-package-extractor",

"pod_name": "image-package-extractor-nzrmb",

"cluster_name": "*****-cluster"

}

},

"timestamp": "2024-04-22T17:59:39.968439845Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T17:59:42.988893049Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "vv41dscsqfkn7za6",

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb",

"project_id": "*****",

"container_name": "image-package-extractor",

"namespace_name": "kube-system",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T17:59:39.968450187Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:59:42.988893049Z"

},

{

"insertId": "8izqbm8k7nlmoejc",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"location": "us-east1",

"container_name": "image-package-extractor",

"project_id": "*****",

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb"

}

},

"timestamp": "2024-04-22T17:59:39.968455206Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T17:59:42.988893049Z"

},

{

"insertId": "neh8mj4ec79h0uj8",

"jsonPayload": {

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]",

"ts": 1713809080.0101368,

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"caller": "gcm/export.go:434",

"msg": "Failed to export metrics to Cloud Monitoring",

"level": "error"

},

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "*****",

"pod_name": "image-package-extractor-nzrmb",

"namespace_name": "kube-system",

"location": "us-east1",

"container_name": "image-package-extractor",

"cluster_name": "*****-cluster"

}

},

"timestamp": "2024-04-22T18:04:40.010484201Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:04:42.994832182Z"

},

{

"insertId": "4lsp95tguou8ewcs",

"jsonPayload": {

"pid": "1",

"message": "[loop:21d34b1e-f234] Failed to flush metrics: 1 error occurred:"

},

"resource": {

"type": "k8s_container",

"labels": {

"location": "us-east1",

"project_id": "*****",

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb",

"container_name": "image-package-extractor",

"cluster_name": "*****-cluster"

}

},

"timestamp": "2024-04-22T18:04:40.010521030Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T18:04:42.994832182Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "8ib99nqtonro7kiy",

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "*****",

"location": "us-east1",

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb",

"container_name": "image-package-extractor",

"cluster_name": "*****-cluster"

}

},

"timestamp": "2024-04-22T18:04:40.010528693Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:04:42.994832182Z"

},

{

"insertId": "jsslo91ry32jmlq8",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"namespace_name": "kube-system",

"cluster_name": "*****-cluster",

"location": "us-east1",

"container_name": "image-package-extractor",

"project_id": "*****"

}

},

"timestamp": "2024-04-22T18:04:40.010534447Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:04:42.994832182Z"

},

{

"insertId": "wcsefs3iq7uwupk2",

"jsonPayload": {

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"msg": "Failed to export metrics to Cloud Monitoring",

"level": "error",

"caller": "gcm/export.go:434",

"ts": 1713809380.0458107,

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]"

},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"location": "us-east1",

"namespace_name": "kube-system",

"container_name": "image-package-extractor",

"pod_name": "image-package-extractor-nzrmb",

"project_id": "*****"

}

},

"timestamp": "2024-04-22T18:09:40.046175396Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:09:42.988626981Z"

},

{

"insertId": "86vyr695dn6jq96x",

"jsonPayload": {

"message": "[loop:d2b39755-5f2a] Failed to flush metrics: 1 error occurred:",

"pid": "1"

},

"resource": {

"type": "k8s_container",

"labels": {

"container_name": "image-package-extractor",

"cluster_name": "*****-cluster",

"project_id": "*****",

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T18:09:40.046271116Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T18:09:42.988626981Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "wt8414psuwhrrdem",

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "*****",

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb",

"cluster_name": "*****-cluster",

"location": "us-east1",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T18:09:40.046282226Z",

"severity": "ERROR",

"labels": {

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:09:42.988626981Z"

},

{

"insertId": "13bdwwt51bjimcm6",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb",

"namespace_name": "kube-system",

"location": "us-east1",

"project_id": "*****",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T18:09:40.046288181Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:09:42.988626981Z"

},

{

"insertId": "rjsa6rob8m9jvp30",

"jsonPayload": {

"level": "error",

"msg": "Failed to export metrics to Cloud Monitoring",

"ts": 1713809680.083746,

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]",

"caller": "gcm/export.go:434",

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271"

},

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "*****",

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1",

"cluster_name": "*****-cluster",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T18:14:40.084068359Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:14:43.022195699Z"

},

{

"insertId": "7g3bgek7l949dkji",

"jsonPayload": {

"pid": "1",

"message": "[loop:01943047-af33] Failed to flush metrics: 1 error occurred:"

},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"project_id": "*****",

"pod_name": "image-package-extractor-nzrmb",

"namespace_name": "kube-system",

"container_name": "image-package-extractor",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T18:14:40.084146529Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T18:14:43.022195699Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "aqcmnhat2e5yyzg9",

"resource": {

"type": "k8s_container",

"labels": {

"namespace_name": "kube-system",

"location": "us-east1",

"project_id": "*****",

"pod_name": "image-package-extractor-nzrmb",

"container_name": "image-package-extractor",

"cluster_name": "*****-cluster"

}

},

"timestamp": "2024-04-22T18:14:40.084156969Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:14:43.022195699Z"

},

{

"insertId": "h78ab5gfion9ywff",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"namespace_name": "kube-system",

"pod_name": "image-package-extractor-nzrmb",

"container_name": "image-package-extractor",

"cluster_name": "*****-cluster",

"location": "us-east1",

"project_id": "*****"

}

},

"timestamp": "2024-04-22T18:14:40.084162372Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:14:43.022195699Z"

},

{

"insertId": "ack8v1zvhukgvntg",

"jsonPayload": {

"msg": "Failed to export metrics to Cloud Monitoring",

"ts": 1713809980.1258762,

"caller": "gcm/export.go:434",

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]",

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"level": "error"

},

"resource": {

"type": "k8s_container",

"labels": {

"container_name": "image-package-extractor",

"project_id": "*****",

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1",

"cluster_name": "*****-cluster",

"namespace_name": "kube-system"

}

},

"timestamp": "2024-04-22T18:19:40.126177999Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:19:43.017486897Z"

},

{

"insertId": "5zkvqz36irrwmste",

"jsonPayload": {

"pid": "1",

"message": "[loop:6e93391b-630b] Failed to flush metrics: 1 error occurred:"

},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"namespace_name": "kube-system",

"location": "us-east1",

"project_id": "*****",

"pod_name": "image-package-extractor-nzrmb",

"container_name": "image-package-extractor"

}

},

"timestamp": "2024-04-22T18:19:40.126326022Z",

"severity": "ERROR",

"labels": {

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T18:19:43.017486897Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "u26fgold7yjnwd3g",

"resource": {

"type": "k8s_container",

"labels": {

"pod_name": "image-package-extractor-nzrmb",

"location": "us-east1",

"project_id": "*****",

"cluster_name": "*****-cluster",

"container_name": "image-package-extractor",

"namespace_name": "kube-system"

}

},

"timestamp": "2024-04-22T18:19:40.126369973Z",

"severity": "ERROR",

"labels": {

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:19:43.017486897Z"

},

{

"insertId": "scccxinf1wvssux5",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb",

"container_name": "image-package-extractor",

"namespace_name": "kube-system",

"project_id": "*****",

"location": "us-east1"

}

},

"timestamp": "2024-04-22T18:19:40.126376485Z",

"severity": "ERROR",

"labels": {

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:19:43.017486897Z"

},

{

"insertId": "0o6qx49iuhmq40g1",

"jsonPayload": {

"stacktrace": "google3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).exportBuffer\n\tcloud/kubernetes/metrics/common/gcm/export.go:434\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:383\ngoogle3/cloud/kubernetes/metrics/common/gcm/gcm.(*exporter).Flush\n\tcloud/kubernetes/metrics/common/gcm/export.go:369\ngoogle3/cloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.ExportPushMetrics\n\tcloud/kubernetes/distro/containers/image_package_extractor/pkg/metrics/metrics.go:193\nmain.main\n\tcloud/kubernetes/distro/containers/image_package_extractor/img_pkg_extractor/main.go:112\nruntime.main\n\tthird_party/go/gc/src/runtime/proc.go:271",

"caller": "gcm/export.go:434",

"level": "error",

"error": "rpc error: code = InvalidArgument desc = One or more TimeSeries could not be written: Unrecognized metric labels: [scanning_mode]",

"ts": 1713810280.1703477,

"msg": "Failed to export metrics to Cloud Monitoring"

},

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "*****",

"container_name": "image-package-extractor",

"location": "us-east1",

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb",

"namespace_name": "kube-system"

}

},

"timestamp": "2024-04-22T18:24:40.170653238Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:24:43.000020124Z"

},

{

"insertId": "xw1v5sq1zl3oils6",

"jsonPayload": {

"pid": "1",

"message": "[loop:7f6cd67d-4fb9] Failed to flush metrics: 1 error occurred:"

},

"resource": {

"type": "k8s_container",

"labels": {

"location": "us-east1",

"container_name": "image-package-extractor",

"project_id": "*****",

"namespace_name": "kube-system",

"cluster_name": "*****-cluster",

"pod_name": "image-package-extractor-nzrmb"

}

},

"timestamp": "2024-04-22T18:24:40.170731390Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"k8s-pod/k8s-app": "image-package-extractor",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"sourceLocation": {

"file": "metrics.go",

"line": "194"

},

"receiveTimestamp": "2024-04-22T18:24:43.000020124Z"

},

{

"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring",

"insertId": "ymmg2nquq0tdijkn",

"resource": {

"type": "k8s_container",

"labels": {

"container_name": "image-package-extractor",

"location": "us-east1",

"namespace_name": "kube-system",

"project_id": "*****",

"pod_name": "image-package-extractor-nzrmb",

"cluster_name": "*****-cluster"

}

},

"timestamp": "2024-04-22T18:24:40.170740978Z",

"severity": "ERROR",

"labels": {

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/controller-revision-hash": "76b5dd6d95",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/pod-template-generation": "2"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:24:43.000020124Z"

},

{

"insertId": "94kdn77debuwo579",

"jsonPayload": {},

"resource": {

"type": "k8s_container",

"labels": {

"cluster_name": "*****-cluster",

"location": "us-east1",

"namespace_name": "kube-system",

"project_id": "*****",

"container_name": "image-package-extractor",

"pod_name": "image-package-extractor-nzrmb"

}

},

"timestamp": "2024-04-22T18:24:40.170746767Z",

"severity": "ERROR",

"labels": {

"k8s-pod/pod-template-generation": "2",

"compute.googleapis.com/resource_name": "gk3-*****-clust-pool-2-d073bcf5-sk6j",

"k8s-pod/k8s-app": "image-package-extractor",

"k8s-pod/controller-revision-hash": "76b5dd6d95"

},

"logName": "projects/*****/logs/stderr",

"receiveTimestamp": "2024-04-22T18:24:43.000020124Z"

}

]
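In the portion of the export shown above, the same four entries repeat roughly every five minutes. A minimal sketch for collapsing such an export into its distinct messages is below. It assumes the entries are loaded as a list of dicts with the `jsonPayload` / `textPayload` shapes seen above; `entry_text` and `summarize` are hypothetical helper names, and the sample list is trimmed from the entries in this thread.

```python
import re
from collections import Counter

# Per-run prefix such as "[loop:22111037-6d9a] " seen in the flush messages.
LOOP_PREFIX = re.compile(r"^\[loop:[0-9a-f-]+\]\s*")


def entry_text(entry: dict) -> str:
    """Pick the most descriptive text field of one exported log entry."""
    payload = entry.get("jsonPayload") or {}
    text = (
        payload.get("error")
        or payload.get("message")
        or payload.get("msg")
        or entry.get("textPayload", "")
    )
    # Drop surrounding whitespace and the per-run loop ID so repeats collapse.
    text = LOOP_PREFIX.sub("", text.strip())
    return text or "<empty payload>"


def summarize(entries: list) -> Counter:
    """Count how often each distinct message appears."""
    return Counter(entry_text(e) for e in entries)


# Trimmed copies of entries from the export above (other fields omitted).
sample_entries = [
    {"jsonPayload": {
        "error": "rpc error: code = InvalidArgument desc = One or more TimeSeries "
                 "could not be written: Unrecognized metric labels: [scanning_mode]",
        "msg": "Failed to export metrics to Cloud Monitoring",
    }},
    {"jsonPayload": {"pid": "1", "message": "[loop:22111037-6d9a] Failed to flush metrics: 1 error occurred:"}},
    {"jsonPayload": {"pid": "1", "message": "[loop:3dded954-e4b0] Failed to flush metrics: 1 error occurred:"}},
    {"textPayload": "\t* failed to export 1 (out of 1) batches of metrics to Cloud Monitoring"},
    {"jsonPayload": {}},
]

counts = summarize(sample_entries)
for message, count in counts.most_common():
    print(count, message)
```

Grouping this way makes it easier to see that the export contains one underlying failure (the `scanning_mode` label rejection) plus its follow-up lines, rather than many unrelated errors.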

Ack, thanks for sharing the `image-package-extractor` error logs. I'm checking with internal teams.

Could you check whether these ERROR logs still exist?

I checked with internal teams, and this issue should be fixed.

It looks like we are still getting those errors

Maybe we need to go through a maintenance window for an upgrade?

We are also getting some other errors that look like they should maybe be labeled as ordinary info logs.

> It looks like we are still getting those errors

Okay. Will contact internal teams to double check.

> Maybe we need to go through a maintenance window for an upgrade?

This fix doesn't need a cluster upgrade. Will double check.

> "textPayload": "2024-04-19T13:59:53.651Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_pool\": \"addons\", \"target\": \"http://10.142.0.52:9965/metrics\", \"err\": \"process_start_time_seconds metric is missing\"}",

This seems to be a new one. I'd like to figure out which component serves `http://10.142.0.52:9965/metrics`. Could you share more error logs from gke-metrics-agent (after removing sensitive information)?
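For anyone collecting these lines, here is a minimal sketch for pulling the scrape target and error out of a `textPayload`. It assumes the payload always has the five tab-separated fields seen in the quoted line (timestamp, level, caller, message, JSON context); `parse_agent_payload` is a hypothetical helper name, not part of any GKE tooling.

```python
import json
from urllib.parse import urlsplit


def parse_agent_payload(text_payload: str) -> dict:
    """Split a tab-separated gke-metrics-agent line into named fields."""
    # maxsplit=4 keeps the trailing JSON context intact.
    timestamp, level, caller, message, context = text_payload.split("\t", 4)
    return {
        "timestamp": timestamp,
        "level": level,
        "caller": caller,
        "message": message,
        "context": json.loads(context),
    }


# The line quoted above, after JSON unescaping.
sample = (
    "2024-04-19T13:59:53.651Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t"
    '{"kind": "receiver", "name": "prometheus", "scrape_pool": "addons", '
    '"target": "http://10.142.0.52:9965/metrics", '
    '"err": "process_start_time_seconds metric is missing"}'
)

parsed = parse_agent_payload(sample)
target = urlsplit(parsed["context"]["target"])
print(target.hostname, target.port, parsed["context"]["err"])
```

The host and port extracted this way can then be matched against pod IPs and container ports in the cluster to narrow down which component is being scraped.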

> We are also getting some other errors that look like they should maybe be labeled as ordinary info logs.

Yes, the internal team is aware of this one and fixing it.

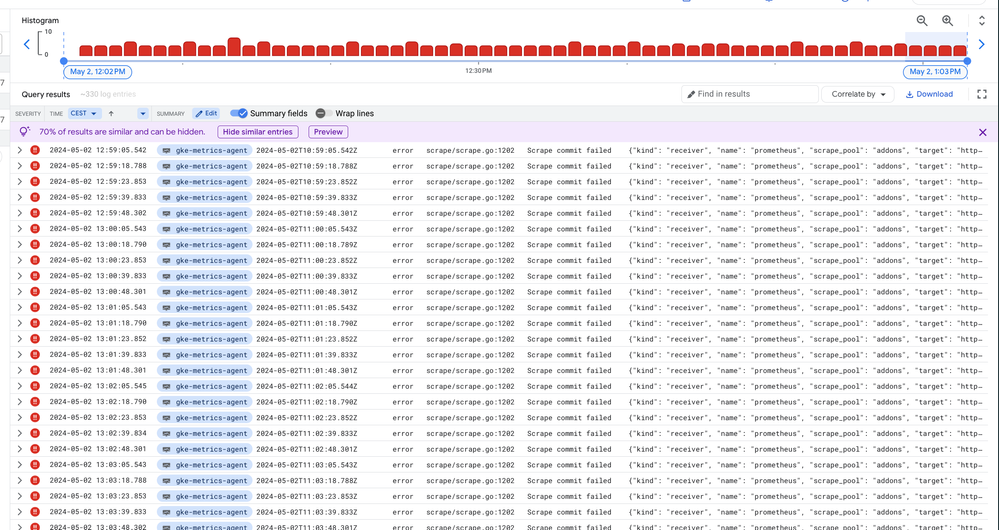

What I see now is that it has changed from oscillating between two error messages to only one (this is on 1.29.3-gke.1093000).

Could you share one detailed log after removing sensitive data?

{

"textPayload": "2024-05-02T13:59:50.187Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t{\"kind\": \"receiver\", \"name\": \"prometheus\", \"scrape_pool\": \"addons\", \"target\": \"http://10.0.0.93:9965/metrics\", \"err\": \"process_start_time_seconds metric is missing\"}",

"insertId": "fo6lo23qkkc58dgb",

"resource": {

"type": "k8s_container",

"labels": {

"project_id": "…",

"cluster_name": "main",

"pod_name": "gke-metrics-agent-hsg56",

"namespace_name": "kube-system",

"location": "us-central1",

"container_name": "gke-metrics-agent"

}

},

"timestamp": "2024-05-02T13:59:50.187923826Z",

"severity": "ERROR",

"labels": {

"k8s-pod/k8s-app": "gke-metrics-agent",

"k8s-pod/controller-revision-hash": "6dc8695bb6",

"compute.googleapis.com/resource_name": "gk3-main-pool-3-765257ff-t8dy",

"k8s-pod/component": "gke-metrics-agent",

"k8s-pod/pod-template-generation": "6"

},

"logName": "projects/…/logs/stderr",

"receiveTimestamp": "2024-05-02T13:59:51.744537996Z"

}

> \"addons\", \"target\": \"http://10.0.0.93:9965/metrics\",

I'd like to figure out which component this target is.

Could you try hiding these similar logs and check whether another log entry indicates the target?
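If the logs are exported as JSON, hiding the similar entries can also be done offline. A minimal sketch, assuming the export is a list of dicts shaped like the entry posted above; `hide_similar` is a hypothetical helper, and the second sample entry is a placeholder rather than a real log line.

```python
def hide_similar(entries: list, noisy_substring: str) -> list:
    """Keep only entries whose textPayload lacks noisy_substring.

    Entries without a textPayload (for example jsonPayload-only ones)
    are kept, since they cannot match the noisy text.
    """
    return [
        entry for entry in entries
        if noisy_substring not in entry.get("textPayload", "")
    ]


entries = [
    # Trimmed from the entry posted in this thread.
    {"textPayload": (
        "2024-05-02T13:59:50.187Z\terror\tscrape/scrape.go:1202\tScrape commit failed\t"
        '{"kind": "receiver", "name": "prometheus", "scrape_pool": "addons", '
        '"target": "http://10.0.0.93:9965/metrics", '
        '"err": "process_start_time_seconds metric is missing"}'
    )},
    # Placeholder standing in for any other gke-metrics-agent line.
    {"textPayload": "PLACEHOLDER: some other gke-metrics-agent log line"},
]

remaining = hide_similar(entries, "process_start_time_seconds metric is missing")
for entry in remaining:
    print(entry["textPayload"])
```

Whatever is left after filtering is the place to look for a line that names the scrape target or the addon behind it.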
