- Intermittent connection Issues with Cloud DNS and ...

We've been encountering intermittent issues with our app (URL Removed by Staff). The problem seems to stem from the IP address associated with our load balancer. While this issue initially surfaced about 6-7 months ago, its frequency has recently escalated significantly.

- Labels: Cloud DNS, Cloud Load Balancing, Networking

Hi @akashfp ,

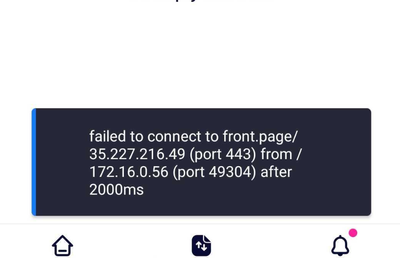

The error appears to indicate a problem with your app's connection to the front end hosted at IP address 35.227.216.49 on port 443 (typically used for HTTPS traffic). It also shows the connection being attempted from 172.16.0.56 on port 49304, which looks like a randomly assigned source port.

It would help to check the logs of both the backend and the load balancer, as they contain information that can help diagnose the issue. You can refer to this documentation on how to view the logs for the load balancer and backend.

I would also recommend checking the load balancer configuration, especially whether the health checks are working correctly.

@akashfp wrote:

While this issue initially surfaced about 6-7 months ago, its frequency has recently escalated significantly.

For this case, the backend servers may be overloaded, or there could be issues with the application code running on the backend servers.

Again, the logs from the load balancer and backend will help us pinpoint the cause of the connection error. For now, what I have provided are general steps and areas you can check to isolate the issue.

Hi @Marvin_Lucero, thanks for the reply,

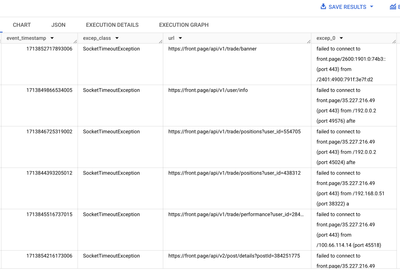

We have checked the logs on both the load balancer (resource.type="http_load_balancer") and the servers (k8s pods), but we are not able to find any entries related to these issues. Also, these requests fail intermittently for random APIs. Here are some examples (we track these API errors from our front end in BigQuery).
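One way to double-check that a failed request really left no trace is to search the load balancer logs in a narrow window around the timestamp the client recorded. The sketch below only builds a Cloud Logging filter string; the function name, the 30-second window, and the `/v1/orders` path are hypothetical, and it assumes the client timestamp is timezone-aware and reasonably accurate.

```python
from datetime import datetime, timedelta, timezone


def build_lb_window_filter(error_time, window_seconds=30, url_fragment=None):
    """Build a Cloud Logging filter for load balancer entries near error_time.

    error_time must be a timezone-aware datetime (for example, the failure
    timestamp the client wrote to BigQuery). url_fragment is an optional
    substring of the request URL to narrow the search.
    """
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    utc_time = error_time.astimezone(timezone.utc)
    start = utc_time - timedelta(seconds=window_seconds)
    end = utc_time + timedelta(seconds=window_seconds)
    clauses = [
        'resource.type="http_load_balancer"',
        f'timestamp>="{start.strftime(fmt)}"',
        f'timestamp<="{end.strftime(fmt)}"',
    ]
    if url_fragment:
        # ":" is the substring ("has") operator in the Logging query language.
        clauses.append(f'httpRequest.requestUrl:"{url_fragment}"')
    return " AND ".join(clauses)


# Hypothetical failure time and API path, for illustration only.
example_time = datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
print(build_lb_window_filter(example_time, url_fragment="/v1/orders"))
```

If a filter like this returns nothing for a request the client reported as failed, that would be consistent with the connection failing before any HTTP request reached the load balancer, although clock skew on the device could also explain a miss.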

I don't have full access to your logs and network info, though I can ping both the IPv4 and IPv6 addresses of front.page from my network. I do have a question: it seems you have a backend on a private network and your frontend on a public network. If so, why are you trying to connect the two over public networks? Why not stay private?

That being said, check the firewalls and routes on the backend networks and make sure traffic can get out. Also, make sure those log entries are not all coming from one single node. If they are, kill it and bring up another one; it could have gotten stuck.
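The "is it all one node?" check can be sketched as a simple tally over exported error records. The record shape and the `node` field below are hypothetical; substitute whatever column identifies the source in your own data.

```python
from collections import Counter


def share_by_key(records, key):
    """Return (value, count, fraction) tuples for `key`, most frequent first.

    Records missing the key are counted under "unknown".
    """
    counts = Counter(record.get(key, "unknown") for record in records)
    total = sum(counts.values())
    return [(value, n, n / total) for value, n in counts.most_common()]


# Hypothetical error records, for illustration only.
sample_errors = [
    {"node": "node-a", "api": "/login"},
    {"node": "node-a", "api": "/profile"},
    {"node": "node-a", "api": "/orders"},
    {"node": "node-b", "api": "/login"},
]

for value, count, fraction in share_by_key(sample_errors, "node"):
    print(f"{value}: {count} errors ({fraction:.0%})")
```

A heavily skewed distribution would point at a single stuck source, while an even spread would suggest looking elsewhere.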

Hi @IveGotIt, actually our frontend is an Android app, and the above logs are sent from Android to BigQuery using Firebase Crashlytics; that is, they are not from our backend infra.

Coming to your second point, I've checked the firewall rules and Cloud Armor policies and tried testing them from my own IP. The errors they produce are different from this issue, and they seem to be working fine.

Moreover, these problems are only intermittent; after a few retries it works on the devices, so I don't think the problem lies in any routing on the backend networks.
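To put a number on "intermittent", one option is to probe the endpoint repeatedly from an affected network and record how often the connection fails. This is a minimal sketch in Python rather than Android code, meant to be run from a laptop on the same network as an affected device; the function names are hypothetical, and it only exercises DNS resolution and the TCP handshake, not TLS or the HTTP request itself.

```python
import socket
import time


def probe_tcp(host, port, attempts=5, timeout=3.0, pause=0.0):
    """Attempt `attempts` TCP connections and record the outcome of each."""
    results = []
    for _ in range(attempts):
        started = time.monotonic()
        try:
            # create_connection resolves the host name and performs the TCP
            # handshake; DNS errors and connection errors are both OSError.
            with socket.create_connection((host, port), timeout=timeout):
                ok, error = True, None
        except OSError as exc:
            ok, error = False, repr(exc)
        results.append(
            {"ok": ok, "seconds": time.monotonic() - started, "error": error}
        )
        if pause:
            time.sleep(pause)
    return results


def failure_rate(results):
    """Fraction of failed probes (0.0 when there are no results)."""
    if not results:
        return 0.0
    return sum(1 for r in results if not r["ok"]) / len(results)
```

For example, `failure_rate(probe_tcp("app.example.com", 443, attempts=20, pause=1.0))` (with a placeholder host name) gives a rough failure fraction, and the `error` field of each failed probe shows whether it was a name resolution error, a timeout, or a refused connection.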
