Author: Hafez Mohamed

Deep Dive into UDM Parsing – How I learned to stop worrying and love the "log"

This guide explores UDM (Unified Data Model) parsing, focusing on transforming JSON logs into the Google UDM schema. We'll cover log schema analysis, custom parser creation, and leveraging Jinja templates for efficient development.

Problem Formulation

Log parsing involves transforming various log formats (free-text, XML, CSV, JSON) into UDM's structured JSON format for Events and Entities. UDM requires specific field types and nested structures, not just simple text placeholders. In short, UDM is a schema!

Prologue

Logs arrive at the SIEM in different formats:

- Free-text

- XML

- CSV

- JSON

These formats are eventually transformed into one of two JSON formats of the Unified Data Model (UDM) https://cloud.google.com/chronicle/docs/reference/udm-field-list, either for Events or for Entities. This happens through a series of GoStash https://cloud.google.com/chronicle/docs/reference/parser-syntax statements (Google's version of Logstash) within the log parsing process.

UDM Events are what you see as raw attributes in SIEM UDM search describing the event logs. Entities are what you see as enriched attributes in UDM search and as entity objects in the entities explorer.

We are going to cover both use cases.

Parser Design Workflow

This is a detailed high-level workflow for the parser design process.

- Identify Log Type and Key Fields: Determine the log source and its essential fields (e.g., timestamp, severity, user).

- Analyze Schema and Format: Examine the log structure (JSON, free-text, etc.), identify data types, and note recurring/permanent fields.

- Define Pre-Tokenization Conditions: Specify conditions for parsing (e.g., only logs with WARN severity or higher).

- Tokenize Required Fields: Extract the identified fields using Gostash (Google's Logstash).

- Apply Post-Tokenization Conditions: Add attributes based on extracted data (e.g., "internal" for corporate users).

- Map to UDM: Assign extracted tokens to the corresponding UDM schema fields.

- Validate the Parser: Test the parser using the SIEM's validation feature (1k logs preferred). For smaller samples, use the ingestion API https://cloud.google.com/chronicle/docs/reference/ingestion-api or the CBN tool https://github.com/chronicle/cbn-tool.

- Document and Backup: Maintain a version-controlled backup of your parsers for tracking changes and rollback capabilities.

- Monitor Performance: Regularly monitor parser performance, especially after vendor updates that might alter log formats.

UI Navigation

- Creating a new parser: Go to Settings > SIEM Settings > Parser Settings > Add Parser > select "UDM" (or the ingestion label of your data source; if it is not present, raise a case with Support to add it) > Create.

Approach

This series uses a practical, use-case-driven approach, focusing on common JSON log parsing scenarios and regular expressions. CSV parsing is straightforward, while key-value pairs require different techniques. The process involves tokenization (capturing data) and mapping (assigning to UDM).

Useful Tools

- LLMs: Generate sample logs for testing and practice.

- Jinja Templates: Simplify parser development by using Jinja's text generation capabilities. (Note: This series won't be a full Jinja tutorial.)

- JSONPath: Understanding JSONPath can aid in clarifying UDM syntax (optional).

- JSON Tree Viewers: Visualize JSON log structures for schema understanding.

Tokenization

Tokenization is the process of capturing fields from the log message and assigning them to a new field name (the target token).

We used GenAI to generate the sample log message used throughout this guide:

```json
{
  "timestamp": "2025-01-11T12:00:00Z",
  "event_type": "user_activity",
  "user": {
    "id": 12345,
    "username": "johndoe",
    "profile": {
      "email": "john.doe@example.com",
      "location": "New York",
      "VIP": true
    },
    "sessions": [
      {
        "session_id": "abc-123",
        "start_time": "2025-01-11T11:30:00Z",
        "actions": [
          {"action_type": "login", "timestamp": "2025-01-11T11:30:00Z", "targetIP": "10.0.0.10"},
          {"action_type": "search", "query": "weather", "timestamp": "2025-01-11T11:35:00Z", "targetIP": "10.0.0.10"},
          {"action_type": "logout", "timestamp": "2025-01-11T11:45:00Z", "targetIP": "10.0.0.11"}
        ]
      },
      {
        "session_id": "def-456",
        "start_time": "2025-01-11T12:00:00Z",
        "actions": [
          {"action_type": "login", "timestamp": "2025-01-11T12:00:00Z", "targetIP": "192.168.1.10"}
        ]
      }
    ]
  },
  "system": {
    "hostname": "server-001",
    "ip_address": "192.168.1.100"
  },
  "Tags": ["login_logs", "dev"]
}
```

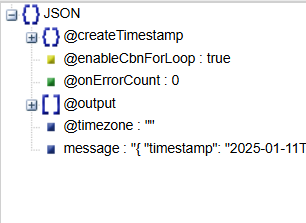

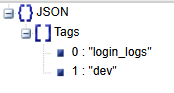

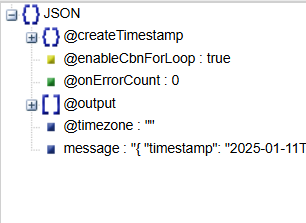

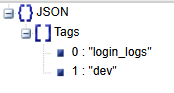

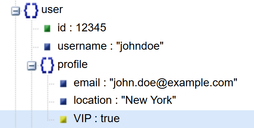

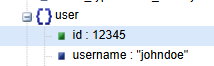

It is very useful to view JSON logs as a tree structure. Multiple tools are available, including VSCode. In this guide I will be using the online viewer at https://jsonviewer.stack.hu/. Make sure you only paste sanitized logs, or use JSON viewer tools approved internally.
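If your logs cannot leave your machine, a few lines of Python can print a comparable tree locally. This is an illustrative sketch, not a feature of any viewer mentioned here. The tree_lines helper is hypothetical. It marks objects with {} and lists with [], the same notation this guide later uses for composite and repeated fields.

```python
import json

# A trimmed copy of the sample log from this guide.
SAMPLE = '''{"timestamp": "2025-01-11T12:00:00Z", "event_type": "user_activity",
 "system": {"hostname": "server-001", "ip_address": "192.168.1.100"},
 "Tags": ["login_logs", "dev"]}'''


def tree_lines(node, name="$", depth=0):
    """Return one indented line per node: objects get {}, lists get []."""
    pad = "  " * depth
    if isinstance(node, dict):
        lines = [f"{pad}{name}{{}}"]
        for key, value in node.items():
            lines += tree_lines(value, key, depth + 1)
    elif isinstance(node, list):
        lines = [f"{pad}{name}[]"]
        for index, value in enumerate(node):
            lines += tree_lines(value, str(index), depth + 1)
    else:
        # Leaf: print the value as JSON so strings keep their quotes
        lines = [f"{pad}{name}: {json.dumps(node)}"]
    return lines


print("\n".join(tree_lines(json.loads(SAMPLE))))
```

Strings keep their double quotes in the output, which makes it easy to tell "12345" (a string) from 12345 (an integer).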

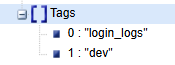

The JSON tree for the log sample above is:

Schema Identification

To optimize log parsing, correctly define the field types. Schema inference tools streamline this, especially for large datasets. When a JSON log is loaded in Python, each JSON object becomes a dictionary whose keys are the field names, which makes the structure easy to process.

For the example above, we identify the types of several data fields:

Each field is described by its data type, multiplicity (single-valued or repeated) and hierarchy (flat or nested), followed by notes where relevant.

event_type

- Data type: Primitive (String)
- Multiplicity: Single-valued
- Hierarchy: Flat

timestamp

- Data type: Primitive (Date, stored as a string)
- Multiplicity: Single-valued
- Hierarchy: Flat

The field is flat (simple) because it is on the topmost level of the JSON log, i.e. it is not nested under other fields.

All date fields are strings that follow a particular date format. In this example, the date field follows the ISO 8601 format:

- 2025-01-11 → Date in YYYY-MM-DD format
- T → Separator between date and time
- 12:00:00 → Time in HH:MM:SS (24-hour format)
- Z → Zulu time (UTC, Coordinated Universal Time)

system{}

- Data type: Composite (object or dictionary)
- Multiplicity: Single-valued
- Hierarchy: Nested

The field is composite because its value is an object holding two subfields, "hostname" and "ip_address".

These fields have a hierarchy, which makes the JSON a tree structure.

Notice the curly brackets next to the field name. They indicate that this field is composite.

To access the child nodes, we use JSON dot notation: "system.hostname" and "system.ip_address" are how we access the subfield nodes of "system".

|

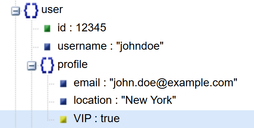

user{}

|

Composite (Object or dictionary)

|

Single-valued

|

Nested

|

Composite because, like "system", "User" is composed of different fields underneath.

Nested fields values are fields that contain other json fields ({id:..., username:...,...}) in a hierarchy.

In Python this is analogous to a key whose value is a dictionary.

|

user.profile.VIP

- Data type: Primitive (Boolean)
- Multiplicity: Single-valued
- Hierarchy: Flat

Boolean values are either true or false, strictly lowercase and without quotes. Because they have no enclosing double quotes "", Boolean fields are not strings.

The field "user.profile.VIP" is a subfield of "user.profile", which is in turn a subfield of "user".

It has no subfield nodes of its own, so it is flat (simple) in terms of hierarchy, even though it is accessed through its parent nodes.

A nested hierarchy in JSON is accessed with dot notation, which expresses the parent-subfield relationship. Here the path is "user.profile.VIP", which reaches "VIP" through its parent nodes "user" and "profile". Field names are case sensitive, so "user.profile.vip" would not match.

user.id

- Data type: Primitive (Integer)
- Multiplicity: Single-valued
- Hierarchy: Flat

Integer values are digits without double quotes.

Integer fields (e.g. 12345) are handled differently from string fields surrounded by double quotes: "12345" != 12345. The left is a string of digits, while the right is an integer.

The field "user.id" is flat because it has no subfield nodes, even though it has the parent node "user".

|

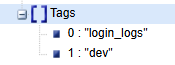

Tags[*]

|

Primitive-Integer-Repeated

|

Mutli-valued (Repeated, List)

|

Flat

|

Flat because it has no untested JSON fields, notice that "Tags" value is a list, not a dictionary (unlike "system")

Each element in the list structure a "String", so its data type is string but repeated, so we can consider this field a Repeated-Atomic field.

Notice the brackets "[ ]" on the left of the field name, this is an indicator that this field is not composite but a repeated one.

Repeated fields are accessed slightly different than composite fields, for example the second tag is accessed as "tags[1]" not "tags.1"

|

user.sessions{}[*]

- Data type: Composite (dictionary), repeated
- Multiplicity: Multi-valued (repeated, list)
- Hierarchy: Nested

This is the most complex field type. It combines all the sub-types discussed:

- Repeated, as it is composed of 2 session objects (0 and 1, both branching from the bracket next to the "sessions" field name).
- Each element in the list is a dictionary (object), so its data type is dictionary-repeated, or Repeated-Composite.
- Nested, because each element in the list has nested fields, such as "user.sessions[0].actions[1]".

user.sessions{}[*].actions{}[*].action_type

- Data type: Primitive (String)
- Multiplicity: Single-valued (mandatory)
- Hierarchy: Flat

Mandatory fields appear in ALL of their parents' instances, i.e. every "actions" object has an "action_type".

user.sessions{}[*].actions{}[*].query

- Data type: Primitive (String)
- Multiplicity: Single-valued (optional)
- Hierarchy: Flat

Optional fields appear in only SOME of their parents' instances. Not every "actions" object has a "query"; only the "search" actions have it.

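The classification above can be partly automated. The Python below is a rough sketch. The helpers type_name, infer_schema and report are hypothetical and do not represent any specific schema inference tool. The script walks a trimmed copy of the sample log and records the value types seen at each path, using the [*] notation. It marks a field Optional when the field occurs fewer times than its parent object. The parent heuristic is deliberately naive and is only meant for small samples like this one.

```python
import json
from collections import Counter

# A trimmed copy of the sample log: two actions, only one of which has "query".
SAMPLE = json.loads('''{"timestamp": "2025-01-11T12:00:00Z",
 "user": {"id": 12345, "profile": {"VIP": true},
          "sessions": [{"session_id": "abc-123",
                        "actions": [{"action_type": "login"},
                                    {"action_type": "search", "query": "weather"}]}]},
 "Tags": ["login_logs", "dev"]}''')


def type_name(value):
    """Name a JSON value's type; bool is checked first because it subclasses int."""
    if isinstance(value, bool):
        return "Boolean"
    if isinstance(value, int):
        return "Integer"
    if isinstance(value, str):
        return "String"
    return "Composite" if isinstance(value, dict) else type(value).__name__


def infer_schema(node, path="$", types=None, counts=None):
    """Record the types seen at each path (list items become [*]) and path occurrences."""
    if types is None:
        types, counts = {}, Counter()
    counts[path] += 1
    if isinstance(node, list):
        for item in node:
            infer_schema(item, f"{path}[*]", types, counts)
        return types, counts
    types.setdefault(path, set()).add(type_name(node))
    if isinstance(node, dict):
        for key, value in node.items():
            infer_schema(value, f"{path}.{key}", types, counts)
    return types, counts


def report(types, counts):
    """One row per path: type(s), multiplicity and mandatory/optional presence."""
    rows = []
    for path, seen in types.items():
        parent = path.rsplit(".", 1)[0]
        presence = "Optional" if counts[path] < counts[parent] else "Mandatory"
        multiplicity = "Repeated" if path.endswith("[*]") else "Single-valued"
        rows.append(f"{path}: {'/'.join(sorted(seen))}, {multiplicity}, {presence}")
    return rows


for row in report(*infer_schema(SAMPLE)):
    print(row)
```

On this trimmed sample, the script reports $.user.id as Integer, $.Tags[*] as a repeated String and the query field as Optional, which matches the descriptions above.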

Exporting Logs from Python Scripts

Tip: When exporting logs from Python scripts, always make sure to:

- Switch single quotes (') to double quotes (").

- Write the Boolean values true/false in lowercase (Python prints them as True/False).

- Replace None or Null values with strings such as "None" or "Null".

- Pasting logs with formatting errors (like incorrect JSON or unbalanced brackets) into the parser UI will result in an error message.
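The first two tips are handled by Python's standard json module: json.dumps always writes double quotes and lowercase true/false. By default, however, it turns None into null, so the None tip needs an explicit substitution. This is a minimal sketch, and the helper name to_secops_json is hypothetical.

```python
import json


def to_secops_json(record):
    """Hypothetical helper: serialize a Python dict following the tips above."""
    def replace_none(value):
        # Swap None for the string "None" (plain json.dumps would write null)
        if value is None:
            return "None"
        if isinstance(value, dict):
            return {key: replace_none(item) for key, item in value.items()}
        if isinstance(value, list):
            return [replace_none(item) for item in value]
        return value
    # json.dumps emits double quotes and lowercase true/false
    return json.dumps(replace_none(record))


record = {'user': 'johndoe', 'VIP': True, 'manager': None}
print(str(record))             # Python repr: single quotes, True, None -> not valid JSON
print(to_secops_json(record))  # {"user": "johndoe", "VIP": true, "manager": "None"}
```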

If the logs are in the correct JSON format, they will appear like this in the UI:

JSON Path Basics

This section covers the fundamentals of JSON Path, a query language for navigating and extracting data from JSON documents. Paste sample log messages into a JSON Path evaluator and construct queries to select specific JSON fields. This will help you visualize JSON schemas and understand the distinctions between JSON Path and the Google GoStash parser syntax.

In GoStash, the first steps in any JSON parser are:

- Use the json parse clause and flatten the array (repeated) fields (more on that later):

```
filter {
  json { source => "message" array_function => "split_columns"}
  statedump {}
}
```

- GoStash uses distinct syntaxes for field referencing, determined by the operator (e.g., assignment, conditional, loop, merge). This section covers the 'replace' operator, loops and if-conditionals, given how often they are used. The 'merge' operator will be addressed in a dedicated section.

There are many JSON Path evaluators. In this guide we will use https://jsonpath.com/, developed by “Hamasaki Kazuki”.
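To experiment offline, or just to see what an evaluator does under the hood, here is a deliberately tiny Python sketch. It supports only the pieces used in this guide: $, .name, [n] and [*]. The jsonpath function below is hypothetical and is not the jsonpath.com implementation; full JSON Path supports many more operators.

```python
import json
import re

LOG = json.loads('''{"event_type": "user_activity",
  "user": {"id": 12345, "username": "johndoe"},
  "system": {"hostname": "server-001", "ip_address": "192.168.1.100"},
  "Tags": ["login_logs", "dev"]}''')

# One path step: .name, [n] or [*]
STEP = re.compile(r"\.(\w+)|\[(\d+)\]|\[\*\]")


def jsonpath(doc, path):
    """Evaluate a tiny JSON Path subset; like jsonpath.com, it always returns a list."""
    if not path.startswith("$"):
        raise ValueError("path must start at the root, $")
    matches = [doc]                       # $ selects the root node
    for step in STEP.finditer(path[1:]):
        name, index = step.group(1), step.group(2)
        selected = []
        for node in matches:
            if name is not None:          # .name -> child of an object
                if isinstance(node, dict) and name in node:
                    selected.append(node[name])
            elif index is not None:       # [n] -> n-th element of a list
                if isinstance(node, list) and int(index) < len(node):
                    selected.append(node[int(index)])
            elif isinstance(node, dict):  # [*] on an object -> all of its values
                selected.extend(node.values())
            elif isinstance(node, list):  # [*] on a list -> all of its elements
                selected.extend(node)
        matches = selected
    return matches


for query in ["$.event_type", "$.user.id", "$.Tags[1]", "$.Tags[*]", "$.system", "$.system[*]"]:
    print(query, "->", jsonpath(LOG, query))
```

The queries in the loop mirror the sections that follow, so you can compare each printed result with the GoStash reference syntax shown there.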

Select The Whole JSON

JSON Path: $

- Operator $ selects the root node of the JSON document; it represents the root of a JSON object.

- It returns a list with one element: the JSON log message.

- In GoStash, input log messages fall under a default root field called "message". The "message" field is therefore the GoStash equivalent of the JSON Path $.

Note: JSON Path returns a list by default, i.e. querying a log message with $ returns [ …<log message content>... ]. For the sake of simplicity, we will assume it returns just the log message (without the list) when comparing GoStash and JSON Path.

For example, selecting the whole JSON log {"user": "Alex"} with "$" actually returns [{"user": "Alex"}], not {"user": "Alex"}. For simplicity, we will ignore this in the following sections.

Select a Simple field

JSON Path: $.event_type

The first operator $ selects the root JSON log. The dot operator "." moves the selection one level down to the subfield nodes, and "event_type" picks the subfield node "event_type". In effect, this selects the value of the event_type field, which is “user_activity”.

In GoStash, to reference the same field:

- Assignment operator: use "%{event_type}".

```
filter {
  json { source => "message" array_function => "split_columns"}
  mutate { replace => { "myVariable" => "%{event_type}" }}
}
```

- IF conditional: use [event_type]

```
filter {
  json { source => "message" array_function => "split_columns"}
  if [event_type] == "abc" {
  }
}
```

- Loop: Not supported for simple fields.

Select a subfield

JSON Path: $.user.id

As above, $ selects the root JSON log and "." moves one level down to the subfield nodes. "user" picks the subfield node "user", and we then move one step further to reference the second-level field "id".

In GoStash, to reference the same field:

- Assignment operator:

- For non-string fields: Not supported. For example, user.id is an integer, not a string, so the following

```
filter {
  json { source => "message" array_function => "split_columns"}
  mutate { replace => { "myVariable" => "%{user.id}" }}
}
```

will give an error, as "user.id" is an integer field, not a string field (as highlighted earlier).

- For string fields: Supported. For example, "user.username" is a string field, referenced by %{user.username}:

```
filter {
  json { source => "message" array_function => "split_columns"}
  mutate { replace => { "myVariable" => "%{user.username}" }}
}
```

- IF conditional: use [user][id] or [user][username]

```
filter {
  json { source => "message" array_function => "split_columns"}
  if [user][id] == 13 {
  }
}
```

IF conditionals in GoStash, as in Logstash, use bracket notation instead of dot notation to reference nested fields.

IF conditionals support both string and non-string data types.

- Loop: Not supported for simple fields.

Select a Repeated field

JSON Path: $.Tags[*]

The syntax is similar to the above cases, but you append "[*]" to query ALL the values of the repeated field.

To access the second tag, the JSON Path is $.Tags[1].

In GoStash, to reference the second tag:

- Assignment operator:

- With "array_function":

- Accessing all values of a repeated field: Not supported.

In GoStash, you cannot use wildcard syntax (like %{Tags.*}) to access all items in a repeated field. You must reference specific elements by their index, such as the first or second tag. The following does not work:

```
filter {
  json { source => "message" array_function => "split_columns"}
  # Not supported: a wildcard cannot address all elements of a repeated field
  mutate { replace => { "myVariable" => "%{Tags.*}" }}
  statedump {}
}
```

- Accessing a specific value of a repeated field: Supported.

"%{Tags.1}" is supported:

```
filter {
  json { source => "message" array_function => "split_columns"}
  mutate { replace => { "myVariable" => "%{Tags.1}" }}
  statedump {}
}
```

- Without "array_function": Not supported. Repeated fields won't be accessible, i.e. %{Tags.1} is not possible if the JSON is parsed without "array_function":

```
filter {
  json { source => "message"}
  # Fails: without array_function, Tags.1 cannot be resolved
  mutate { replace => { "myVariable" => "%{Tags.1}" }}
  statedump {}
}
```

"array_function" should always be used to allow access to repeated fields.

- IF conditional: supported with "array_function", using bracket notation:

```
filter {
  json { source => "message" array_function => "split_columns"}
  if [Tags][1] == "dev" {
    statedump {}
  }
}
```

Without array_function: not supported, and will give an error:

```
filter {
  json { source => "message" }
  if [Tags][1] == "dev" {
    statedump {}
  }
}
```

- Loop:

- With array_function: Supported:

```
filter {
  json { source => "message" array_function => "split_columns"}
  for index, _tag in Tags {
    statedump {}
  }
}
```

The loop will execute twice, once for each of the two values in the 'Tags' field. Note the use of 'Tags' without the '%{}' syntax; we'll cover the reason for this in a later section.

GoStash's syntax might allow you to write loops referencing specific indexes of repeated fields (e.g., Tags.0). However, this results in a logical error, and the loop's content will be skipped.

```
filter {
  json { source => "message" array_function => "split_columns"}
  for index, _tag in Tags.0 {
    statedump {}
  }
}
```

- Without array_function: the loop won't produce a syntax error, but it will fail to execute. This is a logical error: the loop is syntactically valid but does not run as intended.

```
filter {
  json { source => "message" } # array_function => "split_columns" deliberately omitted
  for index, _tag in Tags {
    statedump {}
  }
}
```

Select a Composite Field

JSON Path: $.system or $.system[*]

This example highlights a particular distinction between JSON Path and GoStash. In JSON Path, you can select the composite field the same way as a simple field, using $.system, but the result is a list of objects:

```json
[
  {
    "hostname": "server-001",
    "ip_address": "192.168.1.100"
  }
]
```

Appending [*] to the end, as in $.system[*], flattens the JSON object inside the list into its values:

```json
[
  "server-001",
  "192.168.1.100"
]
```

In GoStash, there is no direct equivalent to $.system[*]. A subfield of a composite field is referenced explicitly, the same way as in the subfield examples, e.g. %{user.username}. There is no syntax like %{user.*}.

Looping is supported for composite fields, so you can loop over each subfield:

- Assignment operator: Supported for an explicit subfield (as indicated earlier).

```
filter {
  json { source => "message"}
  mutate { replace => { "myVariable" => "%{system.hostname}" }}
  statedump {}
}
```

- IF conditional: Supported using bracket notation (as indicated earlier).

```
filter {
  json { source => "message"}
  if [system][hostname] == "server-001" {
    statedump {}
  }
}
```

- Loop: Looping through a composite field is supported using the "map" keyword.

```
filter {
  json { source => "message"}
  for index, systemDetail in system map {
    statedump {}
  }
}
```

This is a distinction from looping through repeated fields, which does not require the "map" keyword. This will be discussed later in more detail.
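The difference between the two loop forms can be pictured with a rough Python analogy. This describes Python behavior, not GoStash semantics. A loop over a repeated field behaves like iterating over a list, while the "map" form iterates over an object's key/value pairs, as dict.items() does. The variable names are illustrative.

```python
# Python analogy only: a list stands in for a repeated field, a dict for a composite field.
tags = ["login_logs", "dev"]
system = {"hostname": "server-001", "ip_address": "192.168.1.100"}

# Roughly "for index, _tag in Tags { ... }": one pass per list element.
# (enumerate starts at 0; this says nothing about the index values GoStash assigns.)
tag_passes = []
for index, tag in enumerate(tags):
    tag_passes.append((index, tag))

# Roughly "for index, systemDetail in system map { ... }": one pass per key/value pair
system_passes = []
for key, detail in system.items():
    system_passes.append((key, detail))

print(len(tag_passes), len(system_passes))  # 2 2
```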


Capturing Basic JSON fields

The main body of a GoStash parser is enclosed within a filter {...} statement, like this:

```
filter {
}
```

In the following section, we will examine common JSON transformation operations available in GoStash. For each operation, we will provide a detailed explanation of its syntax, demonstrate its effect on JSON data using 'statedump' debug output snippets, and include notes to highlight important aspects and potential considerations.

JSON Transformation Pseudocode

In the SecOps environment, incoming log messages are initially stored within a field named 'message'. To standardize and analyze this data, we need to convert it into the Unified Data Model (UDM) schema. To effectively describe the transformation process, we will employ a pseudocode that draws inspiration from the JSONPath syntax you've learned earlier. It's crucial to remember that this pseudocode serves as a descriptive tool for illustrating the transformations and is not a formal syntax that needs to be strictly adhered to.

- To illustrate JSON transformations, we'll employ a pseudocode with clear assignment operations. The arrow symbol (←) will denote assigning a value to a variable or field. For instance, x ← 5 signifies assigning the integer 5 to the variable 'x'. In the context of JSON, $.fixedToken ← "constantString" represents creating a new field named 'fixedToken' directly under the root node ('$') and assigning it the string value 'constantString'.

- Our pseudocode can also express copying values between fields. To copy by reference, we use M[...] to indicate accessing the value of a variable or field, so x ← M[y] means "take the value currently stored in y and assign it to x". This concept extends to JSON structures. For instance, $.myToken ← $.message.username signifies creating a new field 'myToken' at the root level and assigning it the value found in the 'username' field, which is itself nested inside the 'message' field. This copies only the value, not the entire structure.

For example:

Input Log:

```json
{ "event_type": "user_activity" }
```

Transformation:

```
$.myToken ← $.message.event_type
```

Output (target schema):

```
{..., "myToken": "user_activity",... }
```
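For readers who think in Python, the notation maps onto ordinary dictionary operations. This is an analogy only. The sketch assumes the log under "message" has already been parsed into a dict (in GoStash, that is the json filter's job, covered below). The token names are the illustrative ones from the pseudocode.

```python
# The target schema as a plain dict; the log sits under "message" (already parsed here).
state = {"message": {"event_type": "user_activity",
                     "system": {"hostname": "server-001", "ip_address": "192.168.1.100"}}}

# $.fixedToken ← "constantString": assign a constant at the root
state["fixedToken"] = "constantString"

# $.myToken ← M[$.message.event_type]: read the value at that path and copy it
state["myToken"] = state["message"]["event_type"]

# $.message.system{}.hostname: dot notation walks down one composite level per step
hostname = state["message"]["system"]["hostname"]

print(state["myToken"], hostname)  # user_activity server-001
```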

- In our pseudocode, composite fields, which are essentially objects containing other fields, are denoted using curly braces {}. This visually represents the nested structure. For example, a field named 'system' that has subfields like 'hostname' and 'ip_address' would be represented as $.system{}. For brevity, we can also simply write $.system, bearing in mind that “system” has a nested structure.

This distinction between composite and simple fields is particularly important in GoStash. To make this clear in our pseudocode, we'll add {} after composite field names (like $.system{}). This helps you visualize the structure and apply GoStash transformations correctly, however you can drop this notation once you get more fluent.

- To access a field nested within a composite field, you can use the dot notation, which is a common convention in JSONPath. For instance, to reference the 'hostname' field within the 'system' field, you would use $.system.hostname. Alternatively, you can explicitly denote the composite field using {}, like this: $.system{}.hostname. Both notations achieve the same result; the latter simply provides a visual cue that system is a composite field containing other fields.

- To represent a repeated field (a field containing a list of values), we'll use square brackets []. For example, the field Tags with multiple values would be shown as $.Tags or $.Tags[*].

For fields containing a list of composite values (objects), we'll combine both notations, {}[]. For example, a 'sessions' field containing a list of session objects, each with 'session_id', 'start_time', and 'actions', would be shown as $.user.sessions[*] or, more explicitly, $.user{}.sessions{}[*].

JSON Flattening in Go-Stash

- Flattening is a common operation in JSON parsing that converts a repeated field, which is essentially an array, into a composite field with numbered keys. This transformation makes it easier to access individual elements within the repeated field. For example, if you have a repeated field $.Tags[*] with the values ["login_logs", "dev"], flattening it would result in $.Tags{} with the structure {"0": "login_logs", "1": "dev"}. After flattening, you can reference the first tag using $.Tags.0, the second tag using $.Tags.1, and so on. This provides a convenient way to work with individual elements within a previously repeated field.

- As we describe transformations, our pseudocode will use a flexible approach to referencing fields. Sometimes, it will directly reference fields within the original input log structure, which is always nested under the 'message' field (e.g., $.message.somefield). Other times, the pseudocode might reference fields that have already been created or modified within the target schema during earlier transformation steps (e.g., $.some_new_field). Both approaches are valid and serve to illustrate the flow of data transformation.
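The flattening described above can be sketched in Python. The code mimics the effect of array_function => "split_columns" as this guide describes it: lists become objects keyed "0", "1", and so on. It is an illustration of the idea, not GoStash's implementation, and the flatten_lists name is hypothetical.

```python
def flatten_lists(node):
    """Recursively replace every list with an object keyed "0", "1", ..."""
    if isinstance(node, list):
        return {str(index): flatten_lists(item) for index, item in enumerate(node)}
    if isinstance(node, dict):
        return {key: flatten_lists(value) for key, value in node.items()}
    return node


log = {"Tags": ["login_logs", "dev"],
       "user": {"sessions": [{"session_id": "abc-123"}, {"session_id": "def-456"}]}}
flat = flatten_lists(log)
print(flat["Tags"])                                 # {'0': 'login_logs', '1': 'dev'}
print(flat["user"]["sessions"]["1"]["session_id"])  # def-456
```

After flattening, the path $.Tags.1 becomes an ordinary key lookup, just like $.system.hostname.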

Print Tokens Captured

```
filter {
  statedump {}
}
```

Snippet from statedump output:

```
{
  "@createTimestamp": {
    "nanos": 0,
    "seconds": 1736558676
  },
  "@enableCbnForLoop": true,
  "@onErrorCount": 0,
  "@output": [],
  "@timezone": "",
  "message": "{\n \"timestamp\": \"2025-01-11T12:00:00Z\", \n \".....
```

- The statedump operation is a powerful debugging tool within the GoStash parser. It allows you to examine the internal state of the parser by printing all captured tokens at the point of its execution. This output is displayed on the right side of the parser UI, under the 'Internal State' section.

- You'll notice that some tokens are pre-created by the parser, such as @output and @enableCbnForLoop. These are internal tokens used by GoStash for various purposes.

- It's important to observe that your input log messages, which are initially contained within the message field, are treated as raw strings at this stage. This is indicated by the escape character \ preceding the double quotes within the message field. This raw string format signifies that the JSON data within the message field has not yet been parsed into a structured JSON object.


Parse the JSON Schema and Flatten Repeated Fields

```
filter {
  json { source => "message" array_function => "split_columns"}
  statedump {}
}
```

Snippet from statedump output:

```
{
  "@createTimestamp": {
    "nanos": 0,
    "seconds": 1736558735
  },
  "@enableCbnForLoop": true,
  "@onErrorCount": 0,
  "@output": [],
  "@timezone": "",
  "event_type": "user_activity",
  "message": "{\n
  …..
  "system": {
    "hostname": "server-001",
    "ip_address": "192.168.1.100"
  },
  "timestamp": "2025-01-11T12:00:00Z",
  "user": {.....
```

- This code converts the unparsed JSON string in the "message" field into a JSON schema.

- In the SecOps environment, incoming log messages are initially placed within a 'message' field. This means that from the perspective of the SIEM, your log data is structured as follows:

```
{ "message": {...your log data... } }
```

- This 'message' field acts as a container for your actual log data. To parse and use the information in your logs, you need to convert this raw string representation into a structured JSON object. This is where the json filter comes in. It parses the content of the message field and transforms it into a usable JSON format, allowing you to access individual fields and values within your logs.

- The initial stage of our log parsing process involves setting up the input and output structures and performing some basic transformations.

- Input: We define the entry point for our input logs using the pseudocode Input: $.message{} = {... }. This indicates that the incoming log messages are nested under the message field, as is the convention in SecOps SIEM.

- Output: We declare the target schema, which will eventually be converted to the UDM format, using Output: $. This signifies that the root level ($) of our output structure will be where we construct the UDM schema.

- Moving Fields: We then move all the first-level fields from the input log schema (under message) to the root level of the output schema. This is represented by the pseudocode $ ← $.message{}.

- Flattening: Finally, we apply a flattening operation to all repeated fields within the data. This is denoted by $ ← flatten($.*). Flattening converts repeated fields (arrays) into a composite format, making them easier to access and manipulate.

- To understand how json works, compare the JSON tree before and after. You'll see that fields like 'event_type' are no longer under 'message'; they're now at the same level as 'message'.

Without the json parse clause, "event_type" remains a subfield of the "message" field.

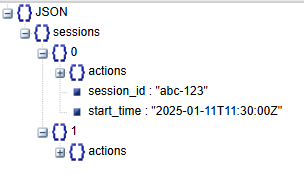

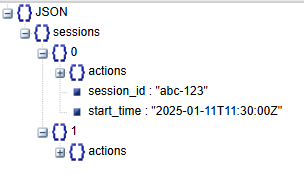

- The array_function plays a key role in simplifying complex JSON structures by flattening repeated fields. Let's break down how it works:

- Before Flattening: Imagine a user object with a sessions field that contains a list of session objects, like this:

```
"user": {
  "sessions": [
    { "session_id": "abc-123",... },
    { "session_id": "def-456",... }
  ]
}
```

- After Flattening: The array_function transforms this structure by converting the sessions list into an object with numbered keys:

```
"user": {
  "sessions": {
    "0": { "session_id": "abc-123",... },
    "1": { "session_id": "def-456",... }
  }
}
```

This makes it much easier to access individual session objects. For example, you can now directly reference the first session as $.user.sessions.0.

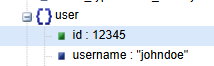

Before:

After:

The effect of flattening on the repeated Tags field:

- Before Flattening: The Tags field is a list of values, represented as $.Tags[*]:

```
"Tags": ["login_logs", "dev"]
```

- After Flattening: The array_function converts this list into an object with numbered keys, represented as $.Tags{}:

```
"Tags": {
  "0": "login_logs",
  "1": "dev"
}
```

Now you can easily access specific tags. For example, $.Tags.0 refers to "login_logs", and $.Tags.1 refers to "dev".

Before

After

This conversion allows using dot notation to access repeated fields the same way as the subfields of parent nodes of composite fields.
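Putting the pieces of this step together, the json filter can be modeled in Python in three moves. First, parse the raw message string. Second, optionally flatten lists. Third, copy the first-level fields to the root, next to "message", which stays a raw string. The json_filter and flatten helpers are hypothetical stand-ins for the behavior the statedump output shows, not GoStash source code.

```python
import json


def flatten(node):
    """Turn every list into an object keyed "0", "1", ... (the split_columns idea)."""
    if isinstance(node, list):
        return {str(i): flatten(v) for i, v in enumerate(node)}
    if isinstance(node, dict):
        return {k: flatten(v) for k, v in node.items()}
    return node


def json_filter(state, source="message", split_columns=True):
    """Hypothetical model of: json { source => "message" array_function => "split_columns" }"""
    parsed = json.loads(state[source])  # the raw string becomes a structured object
    if split_columns:
        parsed = flatten(parsed)        # $ ← flatten($.*)
    state.update(parsed)                # $ ← $.message{}: first-level fields move to the root
    return state                        # "message" itself is left as the raw string


raw = '{"event_type": "user_activity", "Tags": ["login_logs", "dev"]}'
with_split = json_filter({"message": raw})
without_split = json_filter({"message": raw}, split_columns=False)
print(with_split["Tags"], without_split["Tags"])
```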


Tips

- Always use "array_function" in the JSON parse clause, whether or not you need to parse repeated fields.

```
filter {
  json { source => "message" array_function => "split_columns"}
  for index, _tag in Tags map {
    statedump {}
  }
}
```

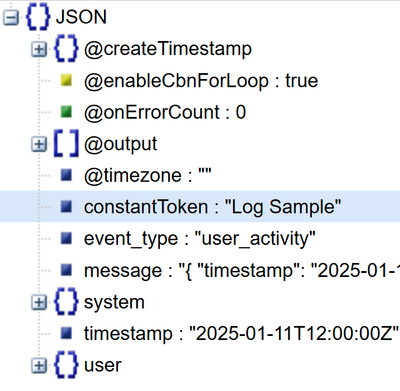

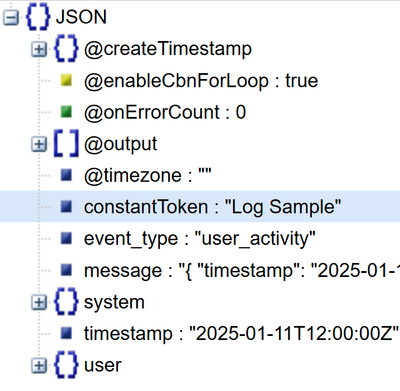

Assign a String Constant

Task: Assign the string “Log Sample” to a new token (variable) “constantToken”.

```
filter {
  mutate { replace => { "constantToken" => "Log Sample" }}
  statedump {}
}
```

Snippet from statedump output:

```
….
"constantToken": "Log Sample",
….
```

- You can use the replace operation to assign constant values to tokens. In this case, $.constantToken ← "Log Sample" creates a new token called 'constantToken' and assigns it the string value 'Log Sample'.

Capture a Simple String Token

Task: Capture the value of $.event_type into a new token “myEventClass”.

```
filter {
  json { source => "message" array_function => "split_columns"}
  mutate { replace => { "myEventClass" => "%{event_type}" }}
  statedump {}
}
```

Snippet from statedump output:

```
…
"myEventClass": "user_activity",
….
```

- The equivalent variable assignment is similar to this:

```
$ ← flatten($.message{})
$.myEventClass ← $.event_type
```

It's important to be aware of which schema you're referencing. If you're still working with the original input structure, the equivalent of the second line would be $.myEventClass ← $.message{}.event_type, because the event_type field would still be nested under message.

- The replace operation can also be used to copy values from existing fields into new tokens. In this example, $.myEventClass ← $.event_type performs the following:

- Creates a token: If a token named 'myEventClass' doesn't exist, it's created.

- Copies the value: The value from the 'event_type' field is copied into the 'myEventClass' token.

This effectively creates a copy of the 'event_type' field's value under a new name ('myEventClass').

- Token names are case sensitive, "event_type" is not the same as "Event_type".
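The replace behavior described in these tasks can be modeled in a few lines of Python. This is a hypothetical sketch, not GoStash's implementation: mutate_replace and resolve are made-up names. %{...} placeholders are resolved by walking a dotted path. Non-string values raise an error, which mirrors this guide's observation that %{user.id} fails.

```python
import re

PLACEHOLDER = re.compile(r"%\{([^}]+)\}")


def resolve(state, dotted):
    """Walk a dotted token path such as "user.username"; names are case sensitive."""
    node = state
    for part in dotted.split("."):
        if not isinstance(node, dict) or part not in node:
            raise KeyError(dotted)
        node = node[part]
    return node


def mutate_replace(state, target, template):
    """Hypothetical model of: mutate { replace => { target => template } }"""
    def substitute(match):
        value = resolve(state, match.group(1))
        if not isinstance(value, str):
            # Mirrors the observation that %{user.id} (an integer) gives an error
            raise TypeError(f"{match.group(1)} is not a string field")
        return value
    state[target] = PLACEHOLDER.sub(substitute, template)  # creates or overwrites the token
    return state


state = {"event_type": "user_activity", "user": {"id": 12345, "username": "johndoe"}}
mutate_replace(state, "constantToken", "Log Sample")    # constant value
mutate_replace(state, "myEventClass", "%{event_type}")  # copy a top-level field
mutate_replace(state, "myUser", "%{user.username}")     # copy a nested field
print(state["constantToken"], state["myEventClass"], state["myUser"])
```

In this model, asking for %{Event_type} raises a KeyError, which reflects the case sensitivity noted above.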


Capture a subfield String Token

Task: Capture the value of $.user{}.username into a new token “myUser”.

```
filter {
  json { source => "message" array_function => "split_columns"}
  mutate { replace => { "myUser" => "%{user.username}" }}
  statedump {}
}
```

Snippet from statedump output:

```
…
"myUser": "johndoe"
….
```

- The equivalent variable assignment is similar to this:

```
$ ← flatten($.message{})
$.myUser ← $.user{}.username
```

This assigns the value from the nested 'username' field (within 'user') to a new token called 'myUser'.

Capture a Repeated String Field with Specific Order

Task: Capture the value of the first session ID $.user{}.sessions{}[0].session_id and the second tag $.Tags[1].

```
filter {
  json { source => "message" array_function => "split_columns"}
  mutate { replace => { "firstSession" => "%{user.sessions.0.session_id}" }}
  mutate { replace => { "secondTag" => "%{Tags.1}" }}
  statedump {}
}
```

Snippet from statedump output:

```
…
"firstSession": "abc-123",
….
```

- Equivalent to:

```
$ ← flatten($.message{})
$.firstSession ← $.user{}.sessions{}.0.session_id
$.secondTag ← $.Tags{}.1
```

The array_function in GoStash introduces a key difference in how you work with repeated fields compared to JSONPath.

- JSONPath: In JSONPath, you would use $.Tags[*] to access all elements of the Tags array.

- GoStash: In GoStash, because array_function flattens the array, you can access individual elements using dot notation, like %{Tags.1} for the second element.

- Without array_function, the assignment fails: %{Tags.1} is supported only when the json filter includes the "array_function" option.
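The effect of array_function => "split_columns" can be sketched in Python. The sketch assumes, as the dotted references above suggest, that each array becomes an object keyed by element position ("0", "1", ...). The helper names and the action_type value are hypothetical. The tag and session values come from this guide's sample log.

```python
import json

# Illustrative log; "example_action" is a hypothetical placeholder value.
RAW_LOG = (
    '{"Tags": ["login_logs", "dev"], "user": {"sessions": '
    '[{"session_id": "abc-123", "actions": [{"action_type": "example_action"}]}]}}'
)


def split_columns(node):
    """Rough model of array_function => "split_columns": every list becomes
    a dict keyed by the element's position as a string ("0", "1", ...)."""
    if isinstance(node, list):
        return {str(i): split_columns(v) for i, v in enumerate(node)}
    if isinstance(node, dict):
        return {k: split_columns(v) for k, v in node.items()}
    return node


def resolve(tokens, dotted_path):
    """Walk a dotted path such as 'Tags.1'; only dict levels can be entered."""
    node = tokens
    for key in dotted_path.split("."):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(dotted_path)
        node = node[key]
    return node


raw = json.loads(RAW_LOG)
flattened = split_columns(raw)

second_tag = resolve(flattened, "Tags.1")                       # %{Tags.1}
first_session = resolve(flattened, "user.sessions.0.session_id")

# Without split_columns, Tags is still a list, so 'Tags.1' cannot be resolved.
try:
    resolve(raw, "Tags.1")
    resolved_without_split = True
except KeyError:
    resolved_without_split = False
```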

Task: Capture the action type of the first action in the first session, $.user{}.sessions{}[0].actions{}[0].action_type, into “firstActionType”

filter {

json { source => "message" array_function => "split_columns"}

mutate { replace => { "firstActionType" => "%{user.sessions.0.actions.0.action_type}" }}

statedump {}

}


Snippet from statedump output:

…

"event_type": "user_activity",

….

Equivalent to:

$ ← flatten($.message{})

$.firstActionType ← $.user{}.sessions{}[0].actions{}[0].action_type

Initialize an empty field

Task: Declare an empty token “emptyPlaceholder”, or clear it if it already exists

filter {

json { source => "message" array_function => "split_columns"}

mutate { replace => { "emptyPlaceholder" => "" }}

statedump {}

}


Snippet from statedump output:

…

"emptyPlaceholder": "",

….

- Equivalent to:

$ ← flatten($.message{})

$.emptyPlaceholder ← ""

- In previous examples, we directly assigned values to tokens without explicitly initializing them. However, there are specific scenarios where initialization becomes crucial:

- Looping: When using loops to iterate over data, you often need to initialize a token to store values or accumulate results within the loop.

- Looped Concatenation: If you're combining values within a loop, you need an initialized token to store the concatenated result.

- Repeated Lists: When working with repeated lists (arrays), initializing a token can help you manage and manipulate the elements effectively.

- Deduplication: Removing duplicate entries from a list often requires an initialized token to track unique values.

In these cases, initializing the token ensures that it exists and is ready to be used in the intended operation. We'll explore these scenarios in more detail in the following sections.
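Here is a minimal Python model of the looped-concatenation case. It assumes the concatenation is written as a replace whose template references the token itself, for example "%{allTags}%{field_};". The token name allTags, the ';' separator, and the helpers are hypothetical. Without the initial empty assignment, the first iteration tries to read a token that does not exist yet, and the statement fails.

```python
import re


def resolve(tokens, dotted_path):
    """Walk a dotted path through nested dicts; missing keys raise KeyError."""
    node = tokens
    for key in dotted_path.split("."):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(dotted_path)
        node = node[key]
    return node


def replace(tokens, target, template):
    """Rough model of mutate { replace => { target => template } }:
    every %{path} in the template must resolve, otherwise KeyError."""
    tokens[target] = re.sub(
        r"%\{([^}]+)\}", lambda m: str(resolve(tokens, m.group(1))), template
    )


def concatenate_tags(tokens, initialize):
    if initialize:
        replace(tokens, "allTags", "")  # like replace => { "allTags" => "" }
    for index_, field_ in tokens["Tags"].items():
        tokens["field_"] = field_
        # Appends the current element; reads allTags, so it must already exist.
        replace(tokens, "allTags", "%{allTags}%{field_};")
    return tokens["allTags"]


# Flattened Tags from this guide's sample log.
sample = {"Tags": {"0": "login_logs", "1": "dev"}}
joined = concatenate_tags(dict(sample), initialize=True)

try:
    concatenate_tags(dict(sample), initialize=False)
    uninitialized_ok = True
except KeyError:
    uninitialized_ok = False
```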

Capture a field, if it exists, with Exception Handling

Task: Capture the third tag if it exists, without raising a parsing error if it does not.

filter {

json { source => "message" array_function => "split_columns"}

mutate { replace => { "my3rdTag" => "%{Tags.2}"} on_error => "3rdTag_isAbsent"}

statedump {}

}


Snippet from statedump output:

…

"3rdTag_isAbsent": true,

…

- Equivalent to:

$ ← flatten($.message{})

$.my3rdTag ← $.Tags[2], ifError raiseFlag: $.3rdTag_isAbsent ← true

- This scenario demonstrates GoStash's robust error handling when dealing with repeated fields.

- Accessing Non-Existent Elements: With on_error set, attempting to access an element that doesn't exist within a flattened repeated field (e.g., the third tag when there are only two) does not halt the parsing process.

- Raising a Flag: Instead, it raises a flag to indicate that the element was not found. In this case, the flag is named '3rdTag_isAbsent'. This allows you to identify potential issues in your data or parsing logic.

- Continuing Execution: Importantly, GoStash continues to execute the remaining lines of your parsing configuration. This ensures that even if there are minor inconsistencies in your data, the parsing process can still proceed and extract valuable information from other parts of the logs.

- The on_error clause is a powerful feature in GoStash that provides several benefits:

- Exception Handling: It allows you to gracefully handle exceptions or errors that might occur during parsing, such as trying to access a non-existent field.

- Field Existence Detection: You can use on_error to check whether a recurring or optional field is present in the data.

- Debugging Probe: It can act as a debugging tool, allowing you to raise flags or perform actions when specific conditions are met or not met.

In this example, without on_error, accessing the non-existent third tag produces an error that stops the parser: mutate { replace => { "my3rdTag" => "%{Tags.2}"}} on its own fails because this log has no third tag. With on_error, GoStash instead sets a flag named '3rdTag_isAbsent' to true and continues executing. This is how on_error prevents a complete parser failure and provides more robust error handling.

- By combining on_error with conditionals, you can create 'smart' parsing rules that adapt to different log formats. For instance, you can check if a field like 'query' is present and then trigger specific actions based on its existence.
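The control flow of on_error can be modeled in Python as a try/except around the lookup. This is a sketch, not GoStash. The helpers and the fallback value "none" are hypothetical. The model sets the flag to False on success, mirroring the false value of userId_conversionError in this guide's non-string example.

```python
import re


def resolve(tokens, dotted_path):
    node = tokens
    for key in dotted_path.split("."):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(dotted_path)
        node = node[key]
    return node


def replace(tokens, target, template, on_error=None):
    """Rough model of mutate { replace => {...} on_error => "flag" }."""
    try:
        value = re.sub(
            r"%\{([^}]+)\}", lambda m: str(resolve(tokens, m.group(1))), template
        )
    except KeyError:
        if on_error is None:
            raise  # no on_error: the failure propagates and parsing stops
        tokens[on_error] = True  # flag the problem and keep going
        return
    tokens[target] = value
    if on_error is not None:
        tokens[on_error] = False  # the statement succeeded


# Flattened Tags from this guide's sample log: only two elements.
tokens = {"Tags": {"0": "login_logs", "1": "dev"}}
replace(tokens, "my3rdTag", "%{Tags.2}", on_error="3rdTag_isAbsent")
replace(tokens, "my2ndTag", "%{Tags.1}", on_error="2ndTag_isAbsent")

# The flag can then drive a conditional, e.g. a hypothetical fallback value.
if tokens["3rdTag_isAbsent"]:
    tokens["my3rdTag"] = "none"

try:
    replace(tokens, "my4thTag", "%{Tags.3}")  # no on_error
    halted = False
except KeyError:
    halted = True
```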

Capture a subfield Non-String Field

Task: Capture the integer field $.user{}.id

filter {

json { source => "message" array_function => "split_columns"}

mutate {convert => {"user.id" => "string"} on_error => "userId_conversionError"}

mutate { replace => { "myUserId" => "%{user.id}" }}

mutate {convert => {"myUserId" => "integer"}}

statedump {}

}


Snippet from statedump output:

…

"myUserId": 12345,

"userId_conversionError": false

….

- Equivalent to:

$ ← flatten($.message{})

$.user{}.id ← string($.user{}.id), ifError raiseFlag: $.userId_conversionError ← true

$.myUserId ← $.user{}.id

$.myUserId ← integer($.myUserId)

- The replace operator in GoStash is primarily designed for working with string fields (textual data). If you need to capture a field that is not a string (e.g., a number, boolean, or date), you'll need to follow a specific process:

- Convert to String: Use the appropriate conversion function to convert the non-string field into a string representation.

- Assign with replace: Use the replace operator to assign the converted string value to a token.

- Convert Back to Original Type: Use another conversion function to convert the token back to its original data type.

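- While both convert and replace work with fields, they reference those fields differently: convert takes the bare field name ("user.id"), while replace reads a field's value through the %{...} interpolation syntax ("%{user.id}").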
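The three-step round trip can be modeled in Python. The model assumes, as this section describes, that replace only interpolates string values, so it raises a TypeError for the integer. convert here supports only the string and integer targets, and all helper names are hypothetical.

```python
import re


def resolve(tokens, dotted_path):
    node = tokens
    for key in dotted_path.split("."):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(dotted_path)
        node = node[key]
    return node


def interpolate(tokens, template):
    def lookup(match):
        value = resolve(tokens, match.group(1))
        if not isinstance(value, str):
            # Model assumption: replace only accepts string values.
            raise TypeError(f"{match.group(1)} is not a string")
        return value

    return re.sub(r"%\{([^}]+)\}", lookup, template)


def convert(tokens, dotted_path, target_type):
    """Rough model of mutate { convert => { path => type } } for two target
    types; the field is named directly, without %{...}."""
    casters = {"string": str, "integer": int}
    parent, _, leaf = dotted_path.rpartition(".")
    node = resolve(tokens, parent) if parent else tokens
    node[leaf] = casters[target_type](node[leaf])


tokens = {"user": {"id": 12345}}  # user.id is an integer in the sample log

try:
    interpolate(tokens, "%{user.id}")  # replace before converting
    direct_ok = True
except TypeError:
    direct_ok = False

convert(tokens, "user.id", "string")                    # 1. convert to string
tokens["myUserId"] = interpolate(tokens, "%{user.id}")  # 2. assign with replace
convert(tokens, "myUserId", "integer")                  # 3. convert back
```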

Sample Token Types:

Assume the following input message is processed by the parser below:

{ "booleanField": true,

"booleanField2": 1,

"floatField": 14.01,

"integerField": -3,

"uintegerField": 5,

"stringField": "any single-line string is here"}


filter {

json { source => "message"}

statedump {}

}


Snippet from statedump output:

{

"booleanField": true,

"booleanField2": 1,

"floatField": 14.01,

"integerField": -3,

"stringField": "any single-line string is here",

"uintegerField": 5

}


- In the example, GoStash kept each value's JSON type: true stays a boolean, the numbers stay numeric, and the text stays a string. Note that booleanField2 holds the number 1 rather than a boolean; a field's name does not determine its type.

- The supported target data types for the convert statement are listed in the documentation (https://cloud.google.com/chronicle/docs/reference/parser-syntax#convert_functions):

boolean

float

hash

integer

ipaddress

macaddress

string

uinteger

hextodec

hextoascii
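Python's standard json module makes the same type distinctions, so it offers a quick way to predict how a sample message's values will be typed. This illustrates JSON typing only, not GoStash internals. Python has no separate unsigned integer type, so uintegerField decodes as a plain int.

```python
import json

# The sample input message from this section.
SAMPLE = """{ "booleanField": true, "booleanField2": 1, "floatField": 14.01,
  "integerField": -3, "uintegerField": 5,
  "stringField": "any single-line string is here"}"""

decoded = json.loads(SAMPLE)
# Map each field name to the Python type its JSON value decodes to.
types = {name: type(value).__name__ for name, value in decoded.items()}
```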


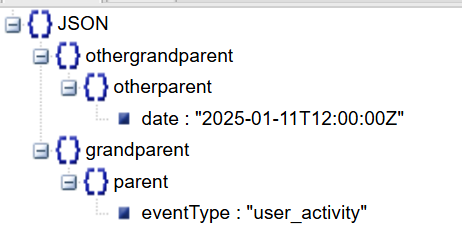

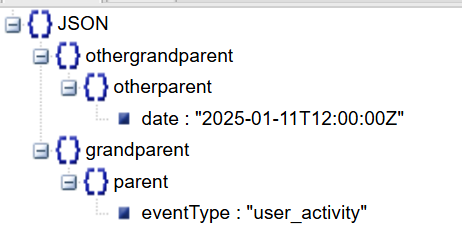

Constructing Nested String Elements

Task: Capture $.event_type and $.timestamp into the hierarchical tokens “grandparent.parent.eventType” and “othergrandparent.otherparent.date”

filter {

json { source => "message" array_function => "split_columns"}

mutate { replace => { "grandparent.parent.eventType" => "%{event_type}" }}

mutate { replace => { "myTimestamp" => "%{timestamp}" }}

mutate { rename => { "myTimestamp" => "othergrandparent.otherparent.date" }}

statedump {}

}

Snippet from statedump output:

…

"grandparent": {

"parent": {

"eventType": "user_activity"

}

},

"othergrandparent": {

"otherparent": {

"date": "2025-01-11T12:00:00Z"

}

},

…

- Equivalent to:

$ ← flatten($.message{})

$.grandparent{}.parent{}.eventType ← $.event_type

$.myTimestamp ← $.timestamp

Rename $.myTimestamp ⇒ $.othergrandparent.otherparent.date

- When capturing string fields using the replace operator, you have the ability to add any number of hierarchical parent levels to the captured token. This allows you to organize your data into a nested structure.

- Creating Nested Structures: The first line, $.grandparent{}.parent{}.eventType ← $.event_type, shows how to create nested fields using the replace operator. It creates a hierarchy of fields named 'grandparent' and 'parent' and places the 'eventType' field inside this nested structure.

- Renaming and Moving Fields: The next two lines show how to rename and move a field using the rename operator.

- $.myTimestamp ← $.timestamp first creates a copy of the 'timestamp' field named 'myTimestamp'.

- Rename $.myTimestamp ⇒ $.othergrandparent.otherparent.date then renames 'myTimestamp' to 'date' and moves it under a new nested structure with 'othergrandparent' and 'otherparent' levels.

- Both approaches produce the same nested result, but rename is the more flexible of the two: it can move existing fields and tokens anywhere within the JSON hierarchy, giving you more control over the final structure. We'll explore the capabilities of rename in a later section.
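In Python terms, a dotted replace target behaves like setting a path that creates any missing parents. A rename behaves like removing a value from one path and setting it at another. The sketch below works under that assumption. set_path, pop_path, and rename are hypothetical helpers, and the values come from the statedump above.

```python
def set_path(tokens, dotted_path, value):
    """Create any missing parent levels, then set the leaf value."""
    *parents, leaf = dotted_path.split(".")
    node = tokens
    for key in parents:
        node = node.setdefault(key, {})
    node[leaf] = value


def pop_path(tokens, dotted_path):
    """Remove and return the value stored at a dotted path."""
    *parents, leaf = dotted_path.split(".")
    node = tokens
    for key in parents:
        node = node[key]
    return node.pop(leaf)


def rename(tokens, source, target):
    """Rough model of mutate { rename => { source => target } }."""
    set_path(tokens, target, pop_path(tokens, source))


tokens = {"event_type": "user_activity", "timestamp": "2025-01-11T12:00:00Z"}
set_path(tokens, "grandparent.parent.eventType", tokens["event_type"])
tokens["myTimestamp"] = tokens["timestamp"]  # $.myTimestamp ← $.timestamp
rename(tokens, "myTimestamp", "othergrandparent.otherparent.date")
```

After the rename, myTimestamp no longer exists at the top level; only the nested copy remains.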

Loops

This section covers loops, which let you iterate over tokenized fields so you can manipulate them.

Loop through Composite Fields

Task: Loop through the subfields of the composite field $.system{}.

filter {

json { source => "message" array_function => "split_columns"}

for index_, field_ in system map {

statedump {}

}

}


Snippet from statedump output:

First Loop Iteration:

{….

"field_": "server-001",

"index_": "hostname",

"iter": {

"system-3": 0

},

"iter-3-33": {

"keys": [

"hostname",

"ip_address"

]

},

…}

Second Loop Iteration:

{...

"field_": "192.168.1.100",

"index_": "ip_address",

"iter": {

"system-3": 1

},

"iter-3-33": {

"keys": [

"hostname",

"ip_address"

]

},

…}

- Equivalent to:

$ ← flatten($.message{})

For index_, field_ in $.system{} :

Print All Fields

# Output:

# index_ = hostname in first run, ip_address in second run.

# field_ = server-001 in first run, then 192.168.1.100 in second run

- This loop provides a way to iterate over the fields within a composite field, such as 'system' in this case.

- index_: When looping over a composite field with map, this variable holds the name (key) of the current subfield. In the first iteration, index_ will be 'hostname', and in the second iteration, it will be 'ip_address'.

- field_: This variable stores the actual value of the field corresponding to the current index_. So, in the first run, field_ will hold the value 'server-001', and in the second run, it will hold '192.168.1.100'.

- The map keyword in GoStash provides a way to iterate over the fields within a composite field. Here's how it works:

- map keyword: Indicates that you want to loop through the subfields of a composite field.

- index_ variable: Instead of holding a numerical index (as with repeated fields, discussed later), index_ stores the actual name of each field within the composite field. For example, if your composite field is 'system' with subfields 'hostname' and 'ip_address', index_ will be 'hostname' in the first iteration and 'ip_address' in the second.

- Values: Within the loop, you can access the value of each field using the field_ variable, as usual.

The loop variables take these values:

| index_ | field_ |
|---|---|
| "hostname" | "server-001" |
| "ip_address" | "192.168.1.100" |

- If map is omitted, as in:

for index_, field_ in system {

You will get an error.
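Here is a minimal Python model of the map loop. It assumes a composite field behaves like a dictionary whose keys become index_ and whose values become field_. The loop_map helper is hypothetical. Looking up a missing field returns an empty container, which reproduces the silent, no-iteration behavior described in this section's tips.

```python
def loop_map(tokens, field_name):
    """Rough model of: for index_, field_ in <field_name> map { ... }
    Yields (index_, field_) pairs; index_ is the subfield name."""
    # A missing field yields nothing: no error, just zero iterations.
    container = tokens.get(field_name, {})
    for key, value in container.items():
        yield str(key), value


tokens = {"system": {"hostname": "server-001", "ip_address": "192.168.1.100"}}
pairs = list(loop_map(tokens, "system"))
missing = list(loop_map(tokens, "sytem"))  # typo in the field name
```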


Tips:

- The loop body must not be empty; otherwise you get a "Failed to initialize filter" error:

filter {

json { source => "message" array_function => "split_columns"}

for index_, field_ in Tags {

}

statedump {}

}

- Watch out for logical errors when using loops: looping through a field that doesn't exist raises no syntax or compilation error, and the loop silently does nothing. This can be tricky to debug, so double-check the field name and make sure the field exists:

filter {

json { source => "message" array_function => "split_columns"}

for index_, field_ in nonExistentField {

statedump {}

}

}

Loop through Repeated Flattened Fields

Task: Loop through the repeated field $.Tags[].

# Loop through the Tags repeated field

filter {

json { source => "message" array_function => "split_columns"}

for index_, field_ in Tags {

statedump {}

}

}


Snippet from statedump output:

First Loop Iteration:

{….

"field_": "login_logs",

"index_": 0,

"iter": {

"Tags-4": 0

},

…}

Second Loop Iteration:

{...

"field_": "dev",

"index_": 1,

"iter": {

"Tags-4": 1

},

…}

- Equivalent to:

$ ← flatten($.message{})

For index_, field_ in $.Tags[*] : # $.message{}.Tags[*] before flattening

Print All Fields

# index_ = 0 in the first run then 1 in the second run (both are integers)

# field_ = "login_logs" in the first run then "dev" in the second run.

- This loop provides a way to iterate over the elements within a repeated field, such as 'Tags' in this case.

- index_: This variable acts as a counter or index, holding the numerical position of the current element being processed within the loop. In the first iteration, index_ will be 0, and in the second iteration, it will be 1.

- field_: This variable stores the actual value of the element corresponding to the current index_. So, in the first run, field_ will hold the value 'login_logs', and in the second run, it will hold 'dev'.

- The ‘Tags’ field is accessed in JSONPath as $.Tags[*].

- Looping through flattened repeated fields is slightly different from looping through composite fields:

- No map keyword: You don't need to use the map keyword when looping through flattened repeated fields. The array_function handles the flattening, making the repeated field accessible like a composite field with numerical indices.

- index_ as numerical index: The index_ variable will hold integer values (0, 1, 2, etc.) representing the position of each element in the flattened repeated field.

- Example: If your flattened repeated field is 'Tags' with values 'login_logs' and 'dev', index_ will be 0 in the first iteration (for 'login_logs') and 1 in the second iteration (for 'dev')

The loop variables take these values:

| index_ | field_ |
|---|---|
| 0 | "login_logs" |
| 1 | "dev" |

- If map is used, i.e.:

for index_, field_ in Tags map {

The field_ values stay the same, but index_ becomes a string instead of an integer:

| index_ | field_ |
|---|---|
| "0" | "login_logs" |
| "1" | "dev" |

- When using numeric operators like <, <=, >, or >= on the index of a repeated field in GoStash, do not use the map keyword:

- map for field names: The map keyword is specifically designed for iterating over the names of fields within a composite field.

- Numeric operators for numbers: Numeric operators are used for comparing numerical values, such as the indices of elements within a repeated field.

- Avoid map with numeric comparisons: If you need to perform numeric comparisons within a loop that iterates over a repeated field, do not use the map keyword. Instead, rely on the numerical indices of the elements.


Tips

- When using loops in GoStash, the map keyword is generally recommended for both composite and repeated fields. This is because:

- Versatility: map works with both field names (in composite fields) and element positions (in flattened repeated fields), although with map those positions arrive as strings ("0", "1", ...).

- Consistency: Using map consistently can make your code easier to read and understand.

- Exception for Numeric Comparisons: The only scenario where you should avoid using map is when you need to perform numeric comparisons (using operators like <, <=, >, or >=) with the index of a repeated field. In these cases, you'll need to rely on the numerical index directly.
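The index-type difference, and the reason numeric comparisons are safer without map, can be sketched in Python. The loop helper and the twelve tag values are hypothetical. The sketch compares string indices as text to show one way such a comparison goes wrong ("10" sorts before "2"). It makes no claim about how GoStash itself evaluates a comparison between a string and a number.

```python
def loop(tokens, field_name, use_map):
    """Rough model of looping over a flattened repeated field whose
    positions are stored as "0", "1", ...: without map, index_ is an
    integer; with map, index_ is a string."""
    for key, value in tokens.get(field_name, {}).items():
        yield (str(key) if use_map else int(key)), value


# The two tags from this guide's sample log.
sample = {"Tags": {"0": "login_logs", "1": "dev"}}
plain = list(loop(sample, "Tags", use_map=False))
mapped = list(loop(sample, "Tags", use_map=True))

# Hypothetical log with twelve tags: keep only the first two.
many = {"Tags": {str(i): f"tag{i}" for i in range(12)}}
first_two_int = [f for i, f in loop(many, "Tags", use_map=False) if i < 2]
first_two_str = [f for i, f in loop(many, "Tags", use_map=True) if i < "2"]
```

The integer version keeps only tag0 and tag1. The text comparison also lets "10" and "11" through.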

Writing A Simple UDM Event

Write Some Simple Fields into a UDM Event

Task: Capture some string fields, such as $.user{}.profile{}.email, into non-repeated UDM fields

filter {

json { source => "message" array_function => "split_columns"}

mutate {replace => {"event1.idm.read_only_udm.metadata.event_type" =>

"GENERIC_EVENT"}}

mutate {replace => {"event1.idm.read_only_udm.metadata.vendor_name" =>

"myVendor"}}

mutate {replace => {"event1.idm.read_only_udm.metadata.product_name" =>

"myProduct"}}

mutate {replace => {"event1.idm.read_only_udm.principal.application" =>

"%{event_type}"}}

mutate {replace => {"event1.idm.read_only_udm.principal.administrative_domain" =>

"%{user.profile.location}"}}

mutate {replace => {"event1.idm.read_only_udm.principal.email" =>

"%{user.profile.email}"}}

mutate {replace => {"event1.idm.read_only_udm.principal.platform_version" =>

"V1"}}

mutate {convert => {"user.id" => "string"}}

mutate {replace => {"event1.idm.read_only_udm.principal.user.employee_id" =>

"%{user.id}"}}

mutate {merge => { "@output" => "event1" }}

#statedump {label => "end"}

}


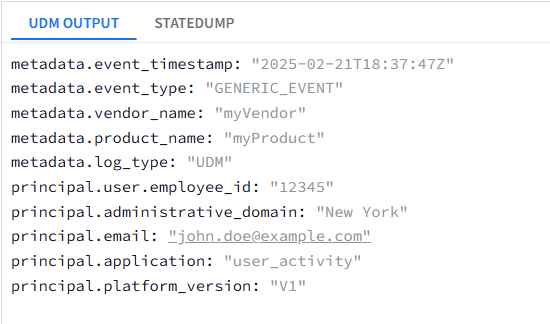

Snippet from statedump output:

"@output": [

{

"idm": {

"read_only_udm": {

"metadata": {

"event_type": "GENERIC_EVENT",

"product_name": "myProduct",

"vendor_name": "myVendor"

},

"principal": {

"administrative_domain": "New York",

"application": "user_activity",

"email": "john.doe@example.com",

"platform_version": "V1",

"user": {

"employee_id": "12345"

}

}

}

}

}

],


- When defining your UDM target schema in GoStash, you have the freedom to choose the name of the top-level field that will contain your event data.

- Common Choice: The field name 'event' is the usual choice.

- Customization: However, you can customize this to any value that suits your needs. In this example, we're using 'event1' to demonstrate this flexibility.

- Non-Reserved Keyword: 'event' is not a reserved keyword, so nothing forces you to use it; any name works as long as you use it consistently in the UDM field paths and in the @output merge.

- When defining your UDM target schema in GoStash, it's essential to adhere to the UDM field name format to ensure compatibility and consistency.

- Top-Level Field: The name you choose for your top-level event field (e.g., 'event1') must be followed by '.idm.read_only_udm'. This creates the complete root node for your UDM schema (e.g., 'event1.idm.read_only_udm').

- UDM Field List: Refer to the official UDM field list documentation https://cloud.google.com/chronicle/docs/reference/udm-field-list#field_name_format_for_parsers for detailed information on valid field names and their formats.

- UDM Event Data Model: The overall hierarchy of your UDM schema should follow the structure defined in the UDM event data model documentation [insert hyperlink here]. This ensures that your data is organized according to the UDM standard.

- When building your UDM schema in GoStash, it's crucial to follow the hierarchical structure defined in the UDM event data model. This ensures that your data is organized correctly and can be interpreted by Chronicle.

- To select suitable fields for your UDM target schema, you'll need to navigate the UDM Event data model hierarchy, which is documented here: [link to UDM event data model].

- Starting Point: Begin with the 'Event' data model, not the 'Entity' data model. These two models have different structures and purposes.

- Hierarchy: The UDM Event data model defines a hierarchical structure for your fields. Start at the root node (e.g., 'event1.idm.read_only_udm' in our example) and follow the defined hierarchy to choose appropriate fields.

- Field Selection Criteria: When selecting fields, consider the following:

- Non-repeated fields: Choose fields that are single-valued, not repeated (i.e., not lists or arrays).

- String field type: Select fields that have a string data type.

- Field Type and Multiplicity: The documentation provides information about the field type (whether it's a primitive type like string or integer, or a composite object) and the multiplicity (whether it's repeated or single-valued). Use this information to guide your field selection.

- In the UDM schema, the event_type field plays a crucial role. It's a mandatory field that categorizes the event and determines the requirements for other fields within the schema.

- Location: The event_type field is located within the metadata object, creating the path metadata.event_type. You can find detailed information about this field in the documentation:

- Enumerated String: The event_type field is an enumerated string, meaning it can only hold specific predefined values. The allowed values are listed in the documentation.

- Mandatory and Optional Fields: The value you choose for event_type determines which other fields in the schema are mandatory or optional. This information is crucial for ensuring that your UDM events are valid and contain the necessary information for analysis. You can find the field requirements for each event_type in the UDM usage documentation: https://cloud.google.com/chronicle/docs/unified-data-model/udm-usage#required_and_optional_fields

Here's how the event_type influences mandatory fields:

- Example: HTTP Events: When parsing logs related to HTTP activity, you would set the event_type field to NETWORK_HTTP. This signals to Chronicle that the event is related to web traffic.

- Mandatory Fields: By setting event_type to NETWORK_HTTP, certain other fields become mandatory to provide a complete picture of the HTTP event. For example, the network.http.method field, which indicates the HTTP method used (GET, POST, PUT, etc.), is now required.

- Reference: You can find the specific mandatory fields for NETWORK_HTTP events in the UDM documentation: https://cloud.google.com/chronicle/docs/unified-data-model/udm-usage#network_http

This example demonstrates how the event_type acts as a key that unlocks specific requirements for other fields within the UDM schema. By understanding these requirements, you can ensure that your GoStash configurations produce valid and informative UDM events.

- Using 'GENERIC_EVENT' as your event_type is a good way to learn the basics of UDM without getting bogged down in complex field requirements. You can add more specific fields later as you become more familiar with the schema.

- To assign the value 'GENERIC_EVENT' to the event_type field in your UDM schema, you'll need to follow the hierarchical structure defined by UDM and utilize the concept of "Constructing Nested String Elements."

- A step-by-step approach to mapping simple, non-repeated string fields to your UDM schema in GoStash:

- Identify a Suitable Parent: Start by identifying a relevant parent field from the UDM field list (e.g., 'about', 'additional', 'target').

- Check the Object Type: Refer to the 'Type' column in the UDM documentation to understand the structure and composition of the parent field.

- Select a Non-Repeated Field: Choose a suitable field within the parent that is not repeated (i.e., not a list or array).

- Continue Drilling Down: Repeat the previous two steps until you reach a non-repeated leaf field, such as a string or an enumerated string.

- Use replace to Build the Hierarchy: Use the replace operator to construct the full path from the root of your UDM schema ('event1.idm.read_only_udm') to the selected UDM field. This involves creating any necessary intermediate parent levels along the way.

This approach ensures that your data is mapped correctly to the UDM schema, following its hierarchical structure and field type requirements.

The final steps in your GoStash configuration involve writing the transformed data to the output and preparing the parser for production use:

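- Writing to Output: The statement mutate {merge => { "@output" => "event1" }} (roughly @output ← $.event1) appends the data structured under the event1 field to the parser's output. This makes the transformed data available for consumption by Chronicle or other systems.

- Disabling Debug Output: The statedump statement is commented out (#statedump {label => "end"}). statedump is a debugging aid for inspecting the parser's internal state, so it is disabled once the parser is ready for production.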
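The mapping from the example above can be mirrored in Python to show how dotted UDM paths build the nested event and how merge appends it to @output. The sketch rests on a few assumptions: set_path is a hypothetical helper, @output is modeled as a plain list, and the input values are the ones visible in the statedump.

```python
def set_path(tokens, dotted_path, value):
    """Create any missing parent levels, then set the leaf value."""
    *parents, leaf = dotted_path.split(".")
    node = tokens
    for key in parents:
        node = node.setdefault(key, {})
    node[leaf] = value


UDM_ROOT = "event1.idm.read_only_udm"

# Parsed log fields used by the example (values taken from its statedump).
tokens = {
    "event_type": "user_activity",
    "user": {"id": 12345,
             "profile": {"location": "New York", "email": "john.doe@example.com"}},
}

mappings = {
    "metadata.event_type": "GENERIC_EVENT",
    "metadata.vendor_name": "myVendor",
    "metadata.product_name": "myProduct",
    "principal.application": tokens["event_type"],
    "principal.administrative_domain": tokens["user"]["profile"]["location"],
    "principal.email": tokens["user"]["profile"]["email"],
    "principal.platform_version": "V1",
    "principal.user.employee_id": str(tokens["user"]["id"]),  # string first
}
for udm_field, value in mappings.items():
    set_path(tokens, f"{UDM_ROOT}.{udm_field}", value)

# merge => { "@output" => "event1" }: append the event to the output list.
tokens.setdefault("@output", []).append(tokens["event1"])
```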

Snippet from UDM Event Output:

Conclusion

This concludes Part 1 of our GoStash guide, where we've covered the foundational concepts of parsing and transforming log data into the Unified Data Model (UDM) format. We explored the key components of GoStash syntax, including field types, operators, and looping statements. Additionally, we delved into the intricacies of JSONPath and its relationship to GoStash, highlighting the differences and similarities between the two.

By now, you should have a good understanding of how to structure basic GoStash configurations, manipulate data fields, and create a valid UDM schema. Remember that practice is key to mastering GoStash. Experiment with different configurations, explore the GoStash documentation, and don't hesitate to seek help from the community if you encounter challenges.

In Part 2, we'll delve deeper into more advanced GoStash techniques, such as complex conditional logic and repeated fields. We'll also explore how to handle various log formats and troubleshoot common parsing issues. Stay tuned for more exciting insights and practical examples that will empower you to become a GoStash expert!
