Looking at Multi-Cluster Apigee Deployment Strateg...

With the flexibility to attach Apigee environments to different instances in Apigee X, or to selectively deploy environments to different clusters in Apigee hybrid, API platform provisioning has gained an additional dimension that needs to be considered when planning your API deployment topology. This article explains the benefits of different multi-cluster deployment topologies and gives practical suggestions for implementing them.

Why even deploy your API gateways to multiple clusters?

Historically, and especially with Apigee Edge, Apigee has recommended a symmetric active-active deployment model, which effectively meant replicating the same environment topology to another region to improve availability and reduce latency. Availability and latency are still the primary motivations for deploying API proxies to multiple clusters. Additional redundancy in the API exposure layer helps prevent outages during a regional failure, or simply during scheduled maintenance and cluster upgrades. In addition, the closer the API deployments are to the consumers and the target backends, the less the proxy adds to the end-to-end latency of API calls. For example, if a consumer in Europe has to call an API proxy located in the US just to reach a backend that is also located in Europe, the latency adds up.

Beyond low latency and high availability, many customers also look at multi-cluster deployment topologies for other benefits. In some scenarios, traffic egress cost is an important consideration. In a suboptimal case where an Apigee X or hybrid deployment is located in a different region than an egress-heavy backend, the egress traffic is effectively charged twice: once for the backend and once for the API proxy. In other scenarios, customers are concerned about API runtime traffic passing through gateways located in a different jurisdiction. For example, if data resides in a backend within the EU and a consumer accesses this backend via an API proxy located in the US, that traffic traverses an unintended geographical location. In this case, the goal is to route the traffic through an API proxy located within the EU region.

Implicit vs Explicit Multi-Cluster Routing

Given the requirements listed above, multi-cluster routing can be designed either implicitly or explicitly. Implicit routing sends traffic intended for api.example.com to the API proxy in a specific region based on either DNS or Anycast IP addresses. Explicit routing, for example, adds a location prefix to the hostname, such as us.api.example.com. This lets consumers target a specific region of an API proxy directly, with the downside of leaking implementation details to the clients.

Explicit routing is better suited for situations where traffic needs to be pinned to a specific region. If, for example, customer records are stored only in a regional service, a request for us.api.example.com/customers/abc would return a different result than eu.api.example.com/customers/abc. In this case, implicit routing that balances traffic between the two services based on availability or latency is not desirable. On the other hand, if every regional service served all global customers, implicit routing would be preferable because it simplifies the client logic and handles failures transparently.
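To make the difference concrete, here is a minimal Python sketch of the routing decision under both models. It assumes a hypothetical set of region prefixes (`us`, `eu`, `apac`), a set of currently healthy regions, and a naive "prefer the client's region, otherwise fall back in a fixed order" policy standing in for latency-based DNS or Anycast. `pick_region` is an illustrative helper, not part of Apigee.

```python
# Hypothetical region prefixes; the hostnames follow the examples in the text.
REGIONS = ("us", "eu", "apac")
BASE_DOMAIN = "api.example.com"


def pick_region(host: str, client_region: str, healthy: set[str]) -> str:
    """Return the region that should serve a request addressed to `host`."""
    if host == BASE_DOMAIN:
        # Implicit routing: the client does not choose. Prefer the client's own
        # region (a stand-in for latency-based DNS/Anycast), then fail over.
        if client_region in healthy:
            return client_region
        for region in REGIONS:
            if region in healthy:
                return region
        raise RuntimeError("no healthy region available")

    # Explicit routing: the region is encoded as a hostname prefix,
    # e.g. eu.api.example.com. The request is pinned and never fails over,
    # because another region may hold different data.
    prefix, _, rest = host.partition(".")
    if rest != BASE_DOMAIN or prefix not in REGIONS:
        raise ValueError(f"unknown host: {host}")
    return prefix


# Explicit: pinned to the EU even though only the US is currently healthy.
print(pick_region("eu.api.example.com", "us", healthy={"us"}))  # eu
# Implicit: served locally while the client's region is healthy ...
print(pick_region("api.example.com", "eu", healthy={"us", "eu"}))  # eu
# ... and transparently failed over when it is not.
print(pick_region("api.example.com", "eu", healthy={"us", "apac"}))  # us
```

The pinned case deliberately returns an unhealthy region: with regional data, failing the request is usually better than silently answering from the wrong dataset.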

API Proxy Deployment Scenarios

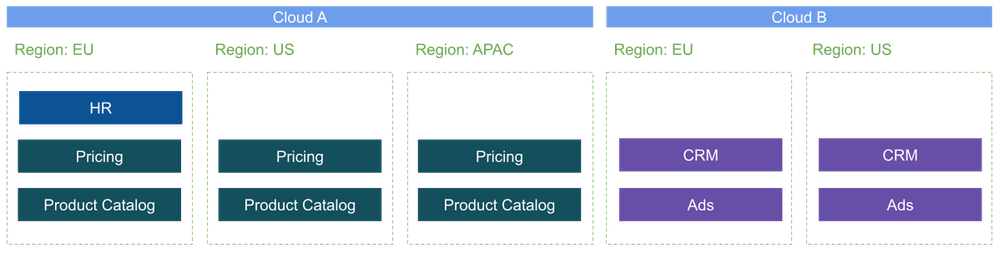

To illustrate how API Management can be used in a scenario where backends are asymmetrically distributed across multiple geographical regions and across different cloud providers, let us consider the following example setup:

A global enterprise has five services that it wants to expose via an API management platform. Its services are distributed across two cloud providers and three regions. For regulatory reasons, the HR system is deployed only in the EU region of Cloud A. The e-commerce services for pricing and the product catalog are deployed across all three regions of Cloud A. The enterprise also runs a CRM service and an Ads service in Cloud B, distributed across two regions.

The APIs provided by these services are to be exposed for global consumption from both external and internal networks.

We now explore three deployment scenarios that show different options for placing and configuring the API proxies. Because we consider a multi-cloud deployment, we use Apigee hybrid in this example. The same concepts apply to a deployment based on Apigee X if only regional traffic routing is considered.

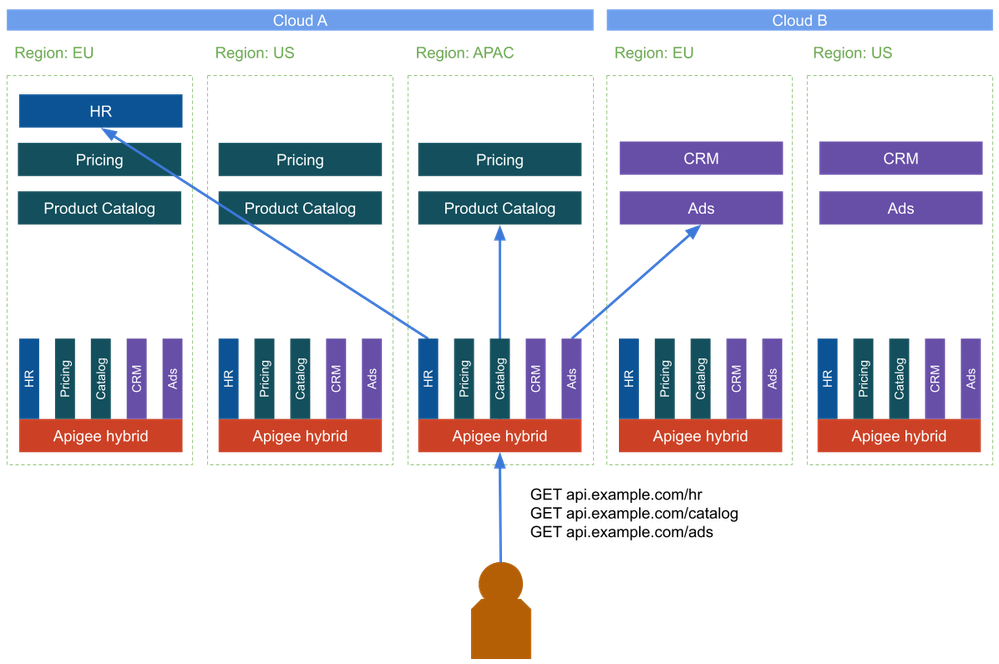

Scenario A: Symmetric Active-Active Deployment

In this first topology, we deploy identical runtime components across all regions. This gives us the latency and availability benefits described above. The deployment mirrors how multi-region deployments were achieved in Apigee Edge.

The premise here is that every API gateway deployment can reach every backend service. Of course, the backend services can and should be fronted by a load balancer to decouple the API exposure layer from the implementation.

Because the example scenario uses two different cloud providers, the services either have to be exposed to the public internet or require interconnection between the networks across the cloud providers.

Considerations

| Advantages | Disadvantages |
| --- | --- |

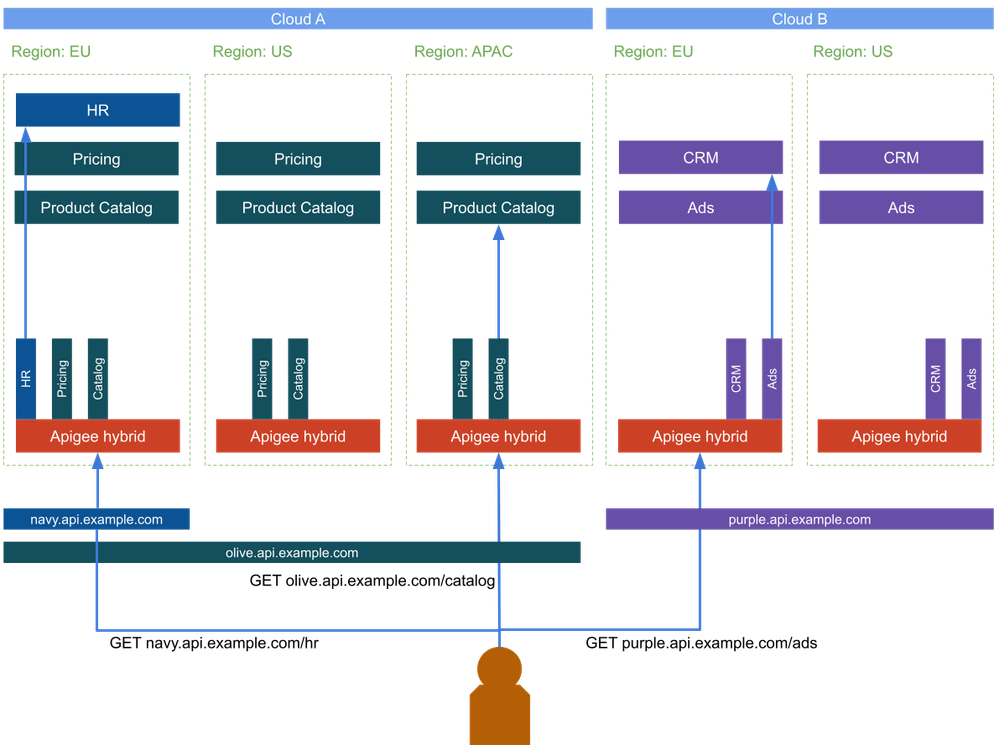

Scenario B: Local Proxy Deployment

Our second scenario takes a different approach and exposes only the local services on each local gateway. In this case, the only way to reach the HR system is through the Apigee deployment in Cloud A in the EU region. The proposed design uses environment groups with dedicated hostnames to designate where traffic should go. The HR system is mapped to the navy environment group, whose hostname resolves only to the ingress of the hybrid deployment in Cloud A EU.

The product catalog can be served from three regions in Cloud A. It is mapped to the olive environment group, whose hostname points to the Apigee instances in all three regions. If all components are available, a request originating from APAC reaches the Apigee hybrid deployment in APAC and is then routed to the local service. The default Apigee routing does not take the health of the target services into account. It is therefore advisable to add regional load balancing so that the APAC gateway can use the service in another region if its intended target in the same region is unavailable or overloaded.
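The request flow in this scenario can be sketched as a small Python model. It is illustrative only: the `navy.api.example.com` hostname and the third region (`us`) are assumptions, since the text names only the EU and APAC regions and the olive hostname, and `route` is a hypothetical helper. It shows two decisions: DNS picks a gateway among the regions where the environment group is deployed, and an assumed regional load balancer picks a healthy backend, because default Apigee routing does not check target health.

```python
# Hypothetical model of Scenario B. The "us" region and the navy hostname are
# assumptions; the text only names EU, APAC and olive.api.example.com.
ENV_GROUPS = {
    "navy": {"hostname": "navy.api.example.com", "regions": ["eu"]},  # HR system
    "olive": {  # pricing and product catalog
        "hostname": "olive.api.example.com",
        "regions": ["apac", "eu", "us"],
    },
}


def route(env_group: str, client_region: str,
          healthy_backend_regions: set[str]) -> tuple[str, str]:
    """Return (gateway_region, backend_region) for a request in Scenario B."""
    regions = ENV_GROUPS[env_group]["regions"]
    # The env group hostname only resolves to gateways in `regions`; assume
    # DNS prefers the client's own region whenever a gateway exists there.
    gateway = client_region if client_region in regions else regions[0]
    # The gateway calls its local backend by default ...
    if gateway in healthy_backend_regions:
        return gateway, gateway
    # ... and an (assumed) regional load balancer fails over to another region.
    for region in regions:
        if region in healthy_backend_regions:
            return gateway, region
    raise RuntimeError(f"no healthy backend for env group {env_group!r}")


print(route("navy", "apac", {"eu"}))                 # ('eu', 'eu')
print(route("olive", "apac", {"apac", "eu", "us"}))  # ('apac', 'apac')
print(route("olive", "apac", {"eu", "us"}))          # ('apac', 'eu')
```

Note that an APAC client calling the HR API always lands on the EU gateway, which is exactly the regional pinning the navy environment group is meant to enforce.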

Considerations

| Advantages | Disadvantages |
| --- | --- |

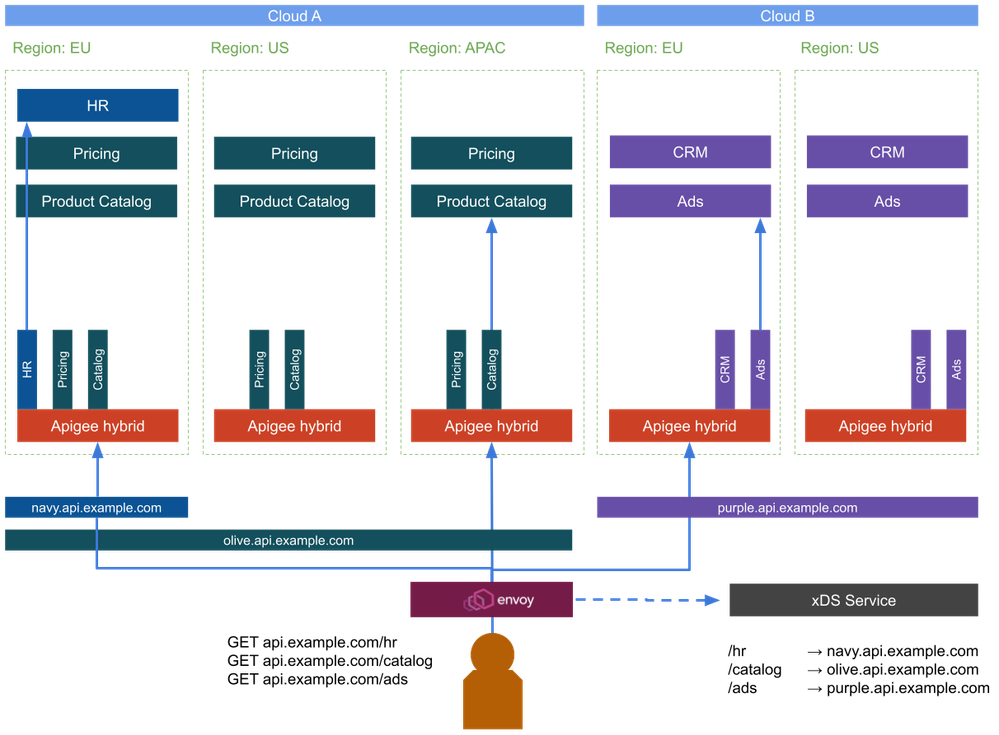

Scenario C: Multi-Cluster Dispatcher

Our third and final scenario builds on the previous ones but reintroduces the single-hostname topology to simplify the client logic. As before, we have localized gateway deployments that expose only the services available in the same region. For the client to call a single endpoint and still reach the distributed services, we introduce a new component that we call a dispatcher proxy. The dispatcher proxy knows which API proxy is deployed to which environment group and routes traffic to the correct hostname accordingly. The dispatcher is deployed in all regions, close to the consumers.

As an example, a call for the product catalog reaches the dispatcher proxy. The mapping within the dispatcher proxy tells it that traffic for /catalog is intended for the environment group with the hostname olive.api.example.com. The request is then sent to an Apigee instance that has a deployment of this API proxy. The earlier comments about internal load balancing of the backend services for failover apply here as well.
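The lookup the dispatcher performs can be sketched in a few lines of Python. This is a minimal illustration, not Apigee or Envoy code: apart from the /catalog entry, the routing table is hypothetical, and `dispatch` uses longest-prefix matching on whole path segments, which is one reasonable choice when base paths may overlap.

```python
# Hypothetical dispatcher routing table (base path -> environment group hostname).
# /catalog -> olive.api.example.com follows the text; the other entries are
# illustrative assumptions.
ROUTES = {
    "/catalog": "olive.api.example.com",
    "/pricing": "olive.api.example.com",
    "/hr": "navy.api.example.com",
}


def dispatch(path: str) -> str:
    """Return the upstream hostname for `path` using longest-prefix matching."""
    best_prefix = None
    for prefix in ROUTES:
        # Match on whole path segments so that /catalog does not match /catalogue.
        if path == prefix or path.startswith(prefix + "/"):
            if best_prefix is None or len(prefix) > len(best_prefix):
                best_prefix = prefix
    if best_prefix is None:
        raise LookupError(f"no route for {path}")
    return ROUTES[best_prefix]


print(dispatch("/catalog/v0/items"))  # olive.api.example.com
print(dispatch("/hr/v0/employees"))   # navy.api.example.com
```

In a real dispatcher, the request would then be forwarded to the returned hostname with its original path, and DNS for that hostname would select one of the regional Apigee instances.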

The main question now is how to configure the dispatcher proxy to route traffic to the right environment group as efficiently as possible. Where the API topology remains static, this configuration could be fed into a simple proxy, such as Envoy, as a static configuration that maps paths to hosts. A more automated option is to provide a dynamic configuration endpoint in the form of an Envoy xDS service. The xDS service can be a gRPC or REST/JSON API that feeds the required mapping configuration to the Envoy instances.
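For the static option, the path-to-host mapping could be rendered into an Envoy route configuration. The Python sketch below builds a v3 `RouteConfiguration` as a plain dictionary using the `match.prefix`, `route.cluster`, and `route.host_rewrite_literal` fields. The mapping itself, the `dispatcher_routes` and `api` names, and the convention of naming each upstream cluster after its hostname are assumptions for illustration; the clusters would still have to be defined separately.

```python
import json

# Hypothetical path -> hostname mapping, e.g. derived from deployed base paths.
PATH_TO_HOST = {
    "/catalog/v0": "olive.api.example.com",
    "/pricing/v0": "olive.api.example.com",
    "/hr/v0": "navy.api.example.com",
}


def envoy_route_config(mapping: dict[str, str], name: str = "dispatcher_routes") -> dict:
    """Build an Envoy v3 RouteConfiguration (as a dict) that sends each base
    path to an upstream cluster named after its hostname and rewrites Host."""
    routes = []
    # Envoy evaluates routes in order and uses the first match, so put the
    # longest prefixes first to approximate longest-prefix matching.
    for prefix in sorted(mapping, key=len, reverse=True):
        host = mapping[prefix]
        routes.append({
            "match": {"prefix": prefix},
            "route": {
                # Assumes a cluster with this name exists (static or via CDS)
                # and points at the environment group's ingress.
                "cluster": host,
                "host_rewrite_literal": host,
            },
        })
    return {
        "name": name,
        "virtual_hosts": [
            {"name": "api", "domains": ["api.example.com"], "routes": routes}
        ],
    }


print(json.dumps(envoy_route_config(PATH_TO_HOST), indent=2))
```

The same structure could be embedded in a static Envoy bootstrap file or returned as a route (RDS) resource by an xDS server, which is where the dynamic option becomes useful once the mapping changes frequently.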

Routing Configuration: Example from the Apigee Ingress Router

A similar service is used by the Apigee watcher component to configure the routing of the Apigee ingress. It can be queried as follows:

```shell
curl -H "Authorization: Bearer $APIGEE_TOKEN" \
  "https://apigee.googleapis.com/v1/organizations/$ORG_NAME/deployedIngressConfig"
```

The response body contains the information needed to construct the reverse mapping of base paths to hostnames. A similar service would have to be implemented to act as the xDS server for the dispatcher proxy.

```json
{
  "name": "organizations/MY_ORG/deployedIngressConfig",
  "revisionCreateTime": "...",
  "environmentGroups": [
    {
      "name": "organizations/MY_ORG/envgroups/olive",
      "hostnames": [
        "olive.api.example.com"
      ],
      "routingRules": [
        {
          "basepath": "/pricing/v0",
          "environment": "organizations/MY_ORG/environments/ecom1"
        },
        {
          "basepath": "/catalog/v0",
          "environment": "organizations/MY_ORG/environments/ecom2"
        }
      ],
      "uid": "...",
      "revisionId": "10"
    },
    // ...
  ]
}
```

Disclaimer: This API is intended to serve Apigee's internal watcher component and is not meant to be consumed outside of that use case. Relying on such a low-level API always carries the risk of breaking changes, which could force you to change how the xDS for the dispatcher proxy is configured.
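Given the disclaimer, treat the following as an illustration rather than a supported integration. The Python sketch fetches the configuration with the same request as the curl command above and inverts the per-environment-group routing rules into a base path to hostnames map, which is the shape a dispatcher xDS service would need. It relies only on the response fields shown in the sample response; `fetch_ingress_config` and `basepath_to_hostnames` are hypothetical helper names.

```python
import json
import urllib.request

INGRESS_CONFIG_URL = (
    "https://apigee.googleapis.com/v1/organizations/{org}/deployedIngressConfig"
)


def fetch_ingress_config(org: str, token: str) -> dict:
    """Fetch the deployed ingress config (same request as the curl example)."""
    req = urllib.request.Request(
        INGRESS_CONFIG_URL.format(org=org),
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def basepath_to_hostnames(config: dict) -> dict[str, list[str]]:
    """Invert per-environment-group routing rules into basepath -> hostnames."""
    mapping: dict[str, list[str]] = {}
    for group in config.get("environmentGroups", []):
        for rule in group.get("routingRules", []):
            hosts = mapping.setdefault(rule["basepath"], [])
            # A base path can be deployed in several env groups, so keep a list.
            for host in group.get("hostnames", []):
                if host not in hosts:
                    hosts.append(host)
    return mapping


# Offline example using the sample response shown above.
sample = {
    "environmentGroups": [
        {
            "name": "organizations/MY_ORG/envgroups/olive",
            "hostnames": ["olive.api.example.com"],
            "routingRules": [
                {"basepath": "/pricing/v0",
                 "environment": "organizations/MY_ORG/environments/ecom1"},
                {"basepath": "/catalog/v0",
                 "environment": "organizations/MY_ORG/environments/ecom2"},
            ],
        }
    ]
}
print(basepath_to_hostnames(sample))
# {'/pricing/v0': ['olive.api.example.com'], '/catalog/v0': ['olive.api.example.com']}
```

An xDS service for the dispatcher could poll `fetch_ingress_config` periodically (for example, whenever `revisionId` changes) and push the resulting mapping to Envoy as route configuration.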

Considerations

| Advantages | Disadvantages |
| --- | --- |

Thanks to Omid Tahouri and Ozan Seymen for their feedback on drafts of this article!

