Multi-cluster load balancing with GKE using standalone network endpoint groups in Google Cloud

In this article, we'll show step by step how to configure multi-cluster load balancing with GKE (Google Kubernetes Engine) using standalone network endpoint groups (NEGs). Let's start by defining the key terms.

Defining key terms

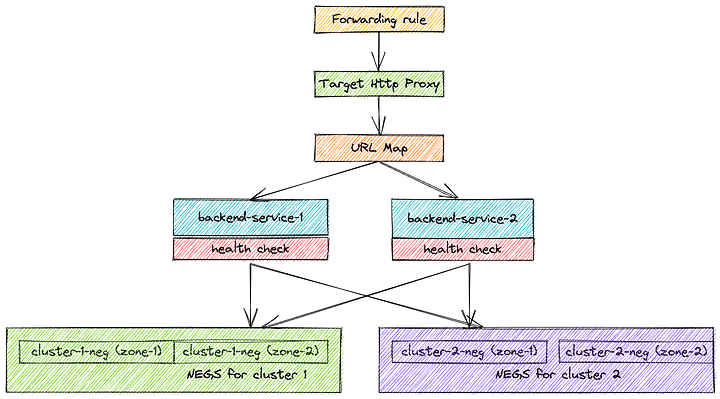

Forwarding Rule: Each rule is associated with a specific IP and port. Given we're talking about global HTTP load balancing, this will be a global anycast IP address (optionally a reserved static IP). The associated port is the port on which the load balancer accepts traffic from external clients. The port can be 80 or 8080 if the target is an HTTP proxy, or 443 in the case of an HTTPS proxy.

Target HTTP(S) Proxy: Traffic is then terminated based on the Target Proxy configuration. Each Target Proxy is linked to exactly one URL Map (N:1 relationship). In the case of an HTTPS proxy, you'll also have to attach at least one SSL certificate and configure an SSL Policy.

URL Map: This is the core traffic management component and allows you to route incoming traffic between different Backend Services (including Cloud Storage buckets). Basic routing is hostname and path-based, but more advanced traffic management is possible as well - URL redirects, URL rewriting and header- and query parameter-based routing. Each rule directs traffic to one Backend Service.

Backend Service: This is a logical grouping of backends for the same service and relevant configuration options, such as traffic distribution between individual Backends, protocols, session affinity, or features like Cloud CDN, Cloud Armor, or IAP. Each Backend Service is also associated with a Health Check.

Health Check: Determines how individual backend endpoints are checked for being alive; the results are used to compute the overall health state of each Backend. Protocol and port have to be specified when creating one, along with some optional parameters like check interval, healthy and unhealthy thresholds, or timeout. An important point to note is that firewall rules must allow health-check traffic from Google's health check probe ranges (130.211.0.0/22 and 35.191.0.0/16).

Backend: Represents a group of individual endpoints in a given location. In the case of GKE, our backends will be NEGs, one per zone of our GKE cluster (GKE NEGs are zonal, but some backend types are regional).

Backend Endpoint: A combination of IP address and port. In the case of GKE with container-native load balancing, each endpoint points to an individual Pod.

Steps to configure

Now that we've defined key terms, let's walk through the steps to configure multi-cluster load balancing with GKE using standalone NEGs.

1. Create two clusters in the two regions across which we want to distribute the load.

Let's say the load needs to be distributed between a cluster in the Toronto region (northamerica-northeast2) and a cluster in the Montreal region (northamerica-northeast1).

gcloud container clusters create toronto-cluster \
    --project [PROJECT_ID] \
    --region northamerica-northeast2 \
    --num-nodes [NUM_NODES]

gcloud container clusters create montreal-cluster \
    --project [PROJECT_ID] \
    --region northamerica-northeast1 \
    --num-nodes [NUM_NODES]

2. Get the credentials for both clusters, running each command in a separate terminal tab.

gcloud container clusters get-credentials montreal-cluster \
    --region northamerica-northeast1 \
    --project my-project-12345

gcloud container clusters get-credentials toronto-cluster \
    --region northamerica-northeast2 \
    --project my-project-12345

3. In each terminal (cluster), run the following commands, starting from this step:

kubectl create deployment hello-world --image=quay.io/stepanstipl/k8s-demo-app:latest

This command deploys a Docker image whose web page, served on port 8080, shows the cluster name, region, and similar details.

The app also serves two pages, at /foo and /bar, which we treat as two services. This boils down to creating two backend services.

In other words, a single image contains the complete app with both endpoints. By the end of this blog, you'll understand how traffic for the foo and bar services is routed to different clusters using RATE as the balancing mode. We can configure session affinity as well, which I will cover in a subsequent tutorial.

4. Create a file called service.yaml and add the following content:

apiVersion: v1
kind: Service
metadata:
  name: hello-world
  annotations:
    cloud.google.com/neg: '{"ingress": true, "exposed_ports": {"8080":{}}}'
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: hello-world
  type: ClusterIP

Run the following command to expose the deployment through a Service that carries the cloud.google.com/neg annotation, which means the NEGs will be created automatically.

kubectl apply -f service.yaml

Now that the service is exposed, it's time to set up the global HTTP (L7) load balancer.
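Steps 3 and 4 have to be applied to both clusters. As an alternative to switching between terminal tabs, the sketch below targets each cluster through its kubeconfig context. It assumes the context names follow the `gke_<project>_<location>_<cluster>` pattern that `get-credentials` normally writes; the helper names `gke_context` and `on_both_clusters` are hypothetical, and the script only prints the commands unless `KUBECTL=kubectl` is set.

```shell
# Dry run by default: commands are printed, not executed.
# Set KUBECTL=kubectl in the environment to run them for real.
KUBECTL="${KUBECTL:-echo kubectl}"

# Build the kubeconfig context name (assumed pattern, see lead-in).
# Arguments: project, location, cluster name.
gke_context() {
  echo "gke_${1}_${2}_${3}"
}

# Run the same kubectl arguments against both clusters from this article.
# First argument is the project ID; the rest is passed to kubectl.
on_both_clusters() {
  project="$1"; shift
  $KUBECTL --context "$(gke_context "$project" northamerica-northeast1 montreal-cluster)" "$@"
  $KUBECTL --context "$(gke_context "$project" northamerica-northeast2 toronto-cluster)" "$@"
}

# Example:
#   on_both_clusters my-project-12345 apply -f service.yaml
```

Running `kubectl config get-contexts` shows the actual context names if they differ from the assumed pattern.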

5. Run the following steps only once, not once per cluster as in steps 2 to 4.

gcloud compute health-checks create http health-check-foobar \
    --use-serving-port \
    --request-path="/healthz"

gcloud compute backend-services create backend-service-foo \
    --global \
    --health-checks health-check-foobar

gcloud compute backend-services create backend-service-bar \
    --global \
    --health-checks health-check-foobar

gcloud compute backend-services create backend-service-default \
    --global

gcloud compute url-maps create foobar-url-map \
    --global \
    --default-service backend-service-default

gcloud compute url-maps add-path-matcher foobar-url-map \
    --global \
    --path-matcher-name=foo-bar-matcher \
    --default-service=backend-service-default \
    --backend-service-path-rules='/foo/*=backend-service-foo,/bar/*=backend-service-bar'

gcloud compute target-http-proxies create foobar-http-proxy \
    --global \
    --url-map foobar-url-map \
    --global-url-map

gcloud compute forwarding-rules create foobar-forwarding-rule \
    --global \
    --target-http-proxy foobar-http-proxy \
    --ports 8080

This sets up the baseline for the global load balancer. Now it's time to tell the load balancer to send its traffic to the NEGs, which have been created automatically by now.

6. Check the NEGs

kubectl get svc \
    -o custom-columns='NAME:.metadata.name,NEG:.metadata.annotations.cloud\.google\.com/neg-status'

Note down the NEG name and its associated zones in each cluster's terminal tab.
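The neg-status annotation is a small JSON document, so the values don't have to be copied by hand. The following is a minimal sketch, assuming the annotation is compact single-line JSON of the form `{"network_endpoint_groups":{"8080":"<neg-name>"},"zones":["<zone>", ...]}`. The helper names `neg_name` and `neg_zones` are hypothetical, and a real JSON tool such as `jq` would be more robust than `sed` if it is available.

```shell
# Hypothetical helpers: both read the neg-status JSON from stdin.
# They assume the compact, single-line layout described in the lead-in.

# Print the NEG name registered for the given port (default 8080).
neg_name() {
  port="${1:-8080}"
  sed -n 's/.*"'"$port"'":"\([^"]*\)".*/\1/p'
}

# Print the zones that hold a NEG, one zone per line.
neg_zones() {
  sed -n 's/.*"zones":\[\([^]]*\)].*/\1/p' | tr ',' '\n' | tr -d '"'
}

# Usage against the current cluster (requires kubectl):
#   status=$(kubectl get svc hello-world \
#     -o jsonpath='{.metadata.annotations.cloud\.google\.com/neg-status}')
#   echo "$status" | neg_name 8080
#   echo "$status" | neg_zones
```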

7. Configure the load balancer

gcloud compute backend-services add-backend backend-service-foo \
    --global \
    --network-endpoint-group k8s1-31be30d1-default-hello-world-8080-640bb695 \
    --network-endpoint-group-zone=northamerica-northeast1-c \
    --balancing-mode=RATE \
    --max-rate-per-endpoint=100

gcloud compute backend-services add-backend backend-service-bar \
    --global \
    --network-endpoint-group k8s1-31be30d1-default-hello-world-8080-640bb695 \
    --network-endpoint-group-zone=northamerica-northeast1-c \
    --balancing-mode=RATE \
    --max-rate-per-endpoint=100

gcloud compute backend-services add-backend backend-service-foo \
    --global \
    --network-endpoint-group k8s1-42ea20f2-default-hello-world-8080-693ebc13 \
    --network-endpoint-group-zone=northamerica-northeast2-c \
    --balancing-mode=RATE \
    --max-rate-per-endpoint=100

gcloud compute backend-services add-backend backend-service-bar \
    --global \
    --network-endpoint-group k8s1-42ea20f2-default-hello-world-8080-693ebc13 \
    --network-endpoint-group-zone=northamerica-northeast2-c \
    --balancing-mode=RATE \
    --max-rate-per-endpoint=100
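The commands above register one zone per cluster. A regional cluster usually spans several zones, and GKE creates a NEG with the same name in each zone that is listed in neg-status, so the same add-backend call has to be repeated per zone and per backend service. A rough sketch of that loop follows; the function name `add_neg_backends` is hypothetical, the flags are the ones used above, and the script only prints the commands unless `GCLOUD=gcloud` is set.

```shell
# Dry run by default: commands are printed, not executed.
# Set GCLOUD=gcloud in the environment to run them for real.
GCLOUD="${GCLOUD:-echo gcloud}"

# Attach one NEG name, in every given zone, to both backend services.
# Arguments: NEG name, then one or more zones.
add_neg_backends() {
  neg="$1"; shift
  for zone in "$@"; do
    for svc in backend-service-foo backend-service-bar; do
      $GCLOUD compute backend-services add-backend "$svc" \
        --global \
        --network-endpoint-group "$neg" \
        --network-endpoint-group-zone="$zone" \
        --balancing-mode=RATE \
        --max-rate-per-endpoint=100
    done
  done
}

# Example (the zone list is illustrative; use the zones from neg-status):
#   add_neg_backends k8s1-31be30d1-default-hello-world-8080-640bb695 \
#     northamerica-northeast1-a northamerica-northeast1-b northamerica-northeast1-c
```

Once backends are attached, `gcloud compute backend-services get-health backend-service-foo --global` reports whether the endpoints pass the health check.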

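gcloud compute firewall-rules create fw-allow-gclb \
    --network=default \
    --action=allow \
    --direction=ingress \
    --source-ranges=130.211.0.0/22,35.191.0.0/16 \
    --rules=tcp:8080

The source ranges above are the ranges Google's load balancer and health check probes connect from, so port 8080 doesn't need to be opened to 0.0.0.0/0.

Note: In Google Cloud, the balancing-mode parameter determines how the load balancer measures the load on a backend and decides when that backend has reached its target capacity. The available balancing modes are: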

- RATE: Sets a target maximum number of HTTP(S) requests per second, specified per backend, per instance, or per endpoint (as with --max-rate-per-endpoint above). This is the mode to use for NEG backends behind an HTTP(S) load balancer.

- UTILIZATION: Sets a target backend (CPU) utilization. It applies only to instance group backends, not to NEGs.

- CONNECTION: Sets a target maximum number of concurrent connections. It is used by load balancers that handle TCP connections rather than HTTP requests.

Session affinity, such as client IP affinity, is a separate backend service setting and not a balancing mode.

When adding a backend to a backend service with gcloud, you specify the desired mode with the --balancing-mode flag, followed by one of the modes listed above.
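To get a feel for what --max-rate-per-endpoint=100 means in practice, the sketch below works through the arithmetic under a deliberately simplified model: a NEG's target capacity is the per-endpoint rate multiplied by its number of endpoints, and demand above that target is what would spill over to another backend. The function names are hypothetical, and the real load balancer also weighs client proximity and endpoint health; the rate is a target, not a hard cap.

```shell
# Target capacity of one NEG backend, in requests per second.
# Arguments: max-rate-per-endpoint, number of endpoints in the NEG.
neg_capacity() {
  echo $(( $1 * $2 ))
}

# Requests per second above the target capacity (0 if demand fits).
# Arguments: demand in requests per second, target capacity.
overflow_rps() {
  demand="$1"; capacity="$2"
  if [ "$demand" -gt "$capacity" ]; then
    echo $(( demand - capacity ))
  else
    echo 0
  fi
}

neg_capacity 100 3      # 3 Pods at 100 requests/s each: prints 300
overflow_rps 450 300    # 450 requests/s against that NEG: prints 150
```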

You've now configured multi-cluster load balancing with GKE using standalone NEGs in Google Cloud.

Testing

To test this, open:

http://<external IP of frontend LB>:8080/foo

You can get the <external IP of frontend LB> from the Google Cloud console: search for "Load balancing", open your load balancer, and look at the Frontend tab.

Keep hitting the endpoints continuously to generate traffic and watch the cluster name change in the responses. Then bring down one cluster and test again.
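Generating traffic by hand gets tedious, so here is a small sketch that tallies which cluster answered. It assumes the response body contains the cluster name literally (toronto-cluster or montreal-cluster), which matches the description of the demo app above but is not verified here; `tally_clusters` and `LB_IP` are hypothetical names.

```shell
# Count how many responses mention each cluster name.
# Reads response bodies from stdin, prints "<cluster> <count>" per line.
tally_clusters() {
  grep -o -E 'toronto-cluster|montreal-cluster' | sort | uniq -c | awk '{print $2, $1}'
}

# Usage (requires gcloud and curl):
#   LB_IP=$(gcloud compute forwarding-rules describe foobar-forwarding-rule \
#     --global --format='value(IPAddress)')
#   for i in $(seq 1 50); do
#     curl -s "http://$LB_IP:8080/foo"; echo
#   done | tally_clusters
```

Running the loop again after taking one cluster down should show all responses coming from the remaining cluster.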
