
Reducing costs with Storage Transfer Service from Amazon S3 to Cloud Storage (S3 to GCS)


Cloud migration is not only about what data is migrated, but also about how it is migrated. Common challenges include moving the data out of another cloud provider, such as AWS, and managing the costs incurred as a result.

This article will address these challenges - diving into how to migrate objects from sources like Amazon S3 to Cloud Storage using Storage Transfer Service, and how you'll reduce costs while doing so.

What is Storage Transfer Service?

Storage Transfer Service is a solution provided by Google Cloud to help transfer data quickly and securely between object and file storage across Google Cloud, Amazon, Azure, on-premises, and more.

What's new in Storage Transfer Service?

One of the newest source options for the Storage Transfer Service is called S3-compatible object storage. This is used for any object storage sources that are compatible with the Amazon S3 API.

Here, instead of including just the Amazon S3 bucket name, you also need to provide the endpoint where the bucket is located. This will enable you to use S3 interface endpoints that are needed to access S3 in a private way, provided you have a VPN/Interconnect connection between AWS and Google Cloud networks.

To use this source, you need to set up agent pools, whose agents run as containers on Compute Engine VMs with Docker installed, on Cloud Run, or on Google Kubernetes Engine (GKE). It is recommended to deploy the agents close to the source, so deploying them on Amazon EC2 also works.

Why do we need S3-compatible object storage as a source, when we have Amazon S3 as a source?

Currently, the Amazon S3 source transfers data to Cloud Storage over the internet. There are two caveats to this:

- While the throughput is strong and the transfer is encrypted, internet-based transfers always raise a concern. This option cannot tunnel the transfer through VPN/Interconnect channels.

- When migrating a large number of objects, AWS egress costs can be significant. An Interconnect setup can provide discounts on AWS egress charges, depending on the vendor chosen, provided the data leaves AWS via Interconnect to Google Cloud.

Considering the above scenarios and use cases, we can leverage S3-compatible object sources.
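To make the cost trade-off concrete, a rough back-of-the-envelope comparison could look like the sketch below. The function name `egress_cost` and every number in it are hypothetical placeholders, not actual AWS or Google Cloud prices. Substitute the rates from your own pricing pages and Interconnect contract.

```shell
#!/bin/sh
# Rough egress cost estimate: data size (GB) multiplied by a per-GB rate.
# Every rate below is a made-up placeholder, not a real price.
egress_cost() {
  # $1 = data size in GB, $2 = egress rate in USD per GB
  awk -v gb="$1" -v rate="$2" 'BEGIN { printf "%.2f\n", gb * rate }'
}

# Hypothetical inputs, for illustration only.
DATA_GB=10000            # amount of data to migrate
INTERNET_RATE=0.10       # placeholder internet egress rate (USD/GB)
INTERCONNECT_RATE=0.02   # placeholder discounted egress rate (USD/GB)
AGENT_VM_COST=50         # placeholder cost of running the agent VM (USD)

internet=$(egress_cost "$DATA_GB" "$INTERNET_RATE")
private=$(egress_cost "$DATA_GB" "$INTERCONNECT_RATE")
# The private path also pays for the agent VM.
private_total=$(awk -v a="$private" -v b="$AGENT_VM_COST" \
  'BEGIN { printf "%.2f\n", a + b }')

echo "Internet path: USD $internet"
echo "Private path:  USD $private_total (including agent VM)"
```

The private path only pays off when the egress discount outweighs the cost of running the agent VM, so it is worth rerunning the estimate with your real figures before committing to a setup.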

How does Storage Transfer Service work with S3-compatible object sources?

Storage Transfer Service accesses your data in S3-compatible sources using transfer agents deployed on VMs close to the data source. These agents run in a Docker container and belong to an agent pool, which is a collection of agents using the same configuration and that collectively move your data in parallel.

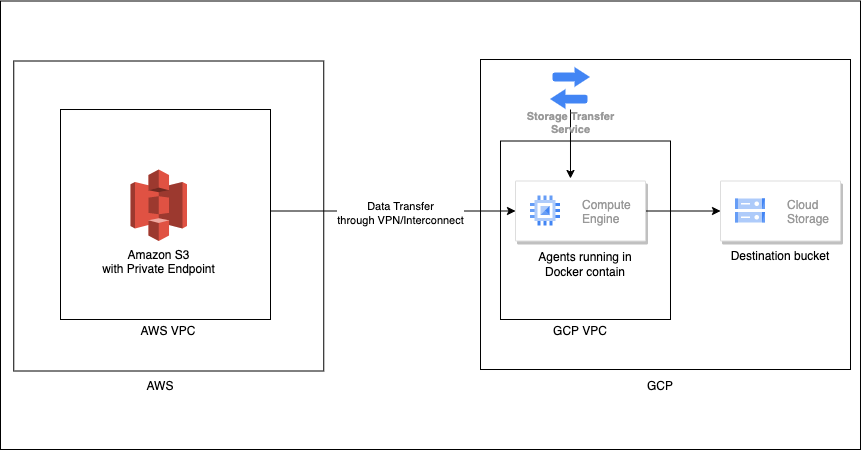

Because of this, you can create an interface endpoint for Amazon S3 in AWS, which is a private link to S3, and migrate data from the S3 bucket to Cloud Storage through it. Doing so requires connectivity between AWS and Google Cloud over a VPN or Interconnect tunnel. The S3 bucket sends the data over the private channel to the agent, which runs in Google Cloud, and the data is then stored in the Cloud Storage bucket.

Optionally, you can enable Private Google Access in the VPC so that traffic from the agent VM to Cloud Storage also goes over a private connection.
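As a minimal sketch, Private Google Access can be turned on for the agent VM's subnet from the command line. The subnet name and region below are placeholders, and the small `run` helper is my own addition: it only prints the command unless you set DRY_RUN=0, so you can review it first.

```shell
#!/bin/sh
# Enable Private Google Access on the subnet that hosts the agent VM.
# SUBNET and REGION are placeholders; replace them with your own values.
SUBNET="agent-subnet"
REGION="us-central1"

# Helper (not part of gcloud): print the command by default,
# execute it only when DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

run gcloud compute networks subnets update "$SUBNET" \
  --region="$REGION" \
  --enable-private-ip-google-access
```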

Steps required to set up the Storage Transfer Service environment and to initiate data transfer

The prerequisites are as follows:

- Ensure there is a connection between the AWS and Google Cloud environments using a VPN or Interconnect.

- Enable the Storage Transfer API.

- Have a Google Cloud user account/service account with Storage Transfer Admin Role. If you are using a Google Cloud service account, you need to export the service account key into a JSON file. You will have to store this service account key file in the Compute Engine instance where the agents will be installed.

- Have the AWS Access Key ID and Secret Access Key handy. The key should have at least read permissions on the S3 bucket.

- Set up a Compute Engine instance in the same Google Cloud VPC that is connected to the AWS environment using VPN/Interconnect, and run the following commands (ensure gcloud is installed on the instance):

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

sudo systemctl enable docker

sudo docker run -ti --name gcloud-config google/cloud-sdk gcloud auth application-default login

The above commands install Docker on the Compute Engine instance and let you authenticate Docker with your Google Cloud credentials.

- Create an interface endpoint for Amazon S3 in AWS. This gives you an endpoint that looks like vpce-xxxx-xxxx.s3.*aws-region*.vpce.amazonaws.com. Refer to the AWS documentation for creating a VPC endpoint. Note that the endpoint must be for the S3 service, and it must be an interface endpoint, not a gateway endpoint, attached to the AWS VPC that is tunneled with Google Cloud.

- Test the endpoint to see if it's accessible via Google Cloud on port 443. You can use telnet as follows:

telnet *bucketname*.bucket.vpce-xxxx-xxxx.s3.*region-name*.vpce.amazonaws.com 443

You should immediately get a response similar to this:

Trying 172.31.35.33...

Connected to *bucketname*.bucket.vpce-xxxx-xxxx.s3.*region-name*.vpce.amazonaws.com.

Escape character is '^]'.

This verifies that the tunnel is working and that you can use the private link to access the S3 bucket from Google Cloud. If you do not receive this output, verify that the tunnel connection is working properly.

Note: The hostname used in this test has the word bucket between the bucket name and the rest of the endpoint URL. Keep that word, but drop the bucket name, when you enter the endpoint during transfer job creation. The endpoint will then look like bucket.vpce-xxxx-xxxx.s3.*region-name*.vpce.amazonaws.com.
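The two hostname forms are easy to mix up, so here is a small sketch that builds both and checks port 443 without telnet. The function names are hypothetical helpers of my own, and `check_443` assumes nc (netcat) is installed on the VM.

```shell
#!/bin/sh
# Build the two hostnames derived from an S3 interface endpoint.
# vpce-xxxx-xxxx and the region are placeholders, as in the text above.

# Endpoint to enter in the transfer job (no bucket name in front).
job_endpoint() {
  # $1 = VPC endpoint ID part, $2 = AWS region
  echo "bucket.$1.s3.$2.vpce.amazonaws.com"
}

# Bucket-specific hostname used for the connectivity test.
bucket_host() {
  # $1 = bucket name, $2 = VPC endpoint ID part, $3 = AWS region
  echo "$1.$(job_endpoint "$2" "$3")"
}

# Succeeds if TCP port 443 is reachable within 5 seconds.
# Assumes nc (netcat) is available on the agent VM.
check_443() {
  nc -z -w 5 "$1" 443
}

# Example, to be run on the agent VM:
#   check_443 "$(bucket_host my-bucket vpce-xxxx-xxxx us-east-1)" && echo reachable
```

Unlike telnet, this exits on its own with a status code, which makes it easier to use in a startup script.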

Installing the transfer agents

- In the Google Cloud console, navigate to Data Transfer > Agent Pools. Either choose Install Agents in Default Pool or create another agent pool. Creating additional pools lets you set custom details and, if required, a bandwidth limit for the transfer. However, it's recommended to start with the default pool, because it lets you create the Pub/Sub topics needed for communication in the next step.

- After selecting the pool, click Install Agent. A panel opens on the right. In the section about creating Cloud Pub/Sub topics and subscriptions, click Create. Storage Transfer Service uses these topics and subscriptions to provide updates on job creation and progress. Note: This option is currently visible only if you choose the transfer_service_default pool, but because it is a one-time task, it also covers any pools you create later.

- You will also see options below for parameters. Set Storage Type as S3-compatible object storage. Number of agents to install provides the number of Docker containers that will run in the agent VM. Note that the higher the number of containers, the higher the CPU utilization would be. Agent ID prefix is to set a prefix for the container name. You will also see a parameter for inputting the Access Key ID and Secret Access Key for the AWS credentials.

Under the advanced settings, you can choose the default Google Cloud credentials or use a service account file by giving the absolute path of the service account key file. Based on these options, the console generates a set of commands like this example:

export AWS_ACCESS_KEY_ID=XXX

export AWS_SECRET_ACCESS_KEY=XXX

gcloud transfer agents install --pool=transfer_service_default --id-prefix=demo- --creds-file="/home/user/sa.json" --s3-compatible-mode

Note: I added export before the AWS environment variables because, without it, the agents could not use the AWS credentials. Since this feature is pre-GA as of now, this issue can occur for some users.

- Run the above commands on the VM where you installed Docker. This starts the containers and the agents inside them. You can check the containers by using the following command:

sudo docker ps
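If you install several agents, a quick count can confirm that all of them came up. The sketch below assumes, as described above, that the container names start with the agent ID prefix (demo- in the example command). `count_agents` is a hypothetical helper, not part of Docker or gcloud.

```shell
#!/bin/sh
# Count running agent containers by container-name prefix.
# Assumption: container names begin with the --id-prefix value (demo-).
count_agents() {
  # Reads container names on stdin, one per line; $1 = prefix to match.
  # grep -c exits non-zero when the count is 0, hence the "|| true".
  grep -c "^$1" || true
}

# On the agent VM:
#   sudo docker ps --format '{{.Names}}' | count_agents demo-
# To inspect a single agent's output:
#   sudo docker logs <container-name>
```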

Creating a transfer job

- In Data Transfer, create a transfer job, choose S3-compatible object storage as the source type, and click Next step.

- In Choose a source, select the agent pool where the agents are installed. In Bucket or folder, type the name of the S3 bucket. In Endpoint, enter the endpoint in the format bucket.vpce-xxxx-xxxx.s3.*region-name*.vpce.amazonaws.com. In Signing region, type the AWS region where the bucket resides, and then go to the next step.

- In Choose a destination, choose your destination bucket and the folder path.

- In the next steps, you can choose whether to run this job immediately or on a schedule. Optionally, you can adjust the same settings as for normal transfer jobs, such as overwriting objects or deleting source objects. Once you have your desired settings, click Create to initiate the transfer.

- You can click on the job and check the status of the transfer. You will notice that the objects will be discovered and checksummed and the data transfer will be in progress depending on the size of the objects in the source bucket.
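The same job can also be created from the command line. The following is a sketch, not a definitive recipe: bucket names, endpoint, and region are placeholders, and the flags reflect my understanding of `gcloud transfer jobs create`, so confirm them with `gcloud transfer jobs create --help` for your gcloud version. The `run` helper is my own and only prints the command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch: create the transfer job from the CLI instead of the console.
# All values below are placeholders.
SRC_BUCKET="my-s3-bucket"
DST_BUCKET="my-gcs-bucket"
POOL="transfer_service_default"
ENDPOINT="bucket.vpce-xxxx-xxxx.s3.us-east-1.vpce.amazonaws.com"
SIGNING_REGION="us-east-1"

# Helper (not part of gcloud): print the command by default,
# execute it only when DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

run gcloud transfer jobs create "s3://$SRC_BUCKET" "gs://$DST_BUCKET" \
  --source-agent-pool="$POOL" \
  --source-endpoint="$ENDPOINT" \
  --source-signing-region="$SIGNING_REGION"
```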

Any current limitations?

- The speed of the transfer depends on the maximum bandwidth set for the pool, the size of the Compute Engine instance, the maximum network throughput it supports, and the maximum bandwidth capacity of the VPN/Interconnect tunnel.

- While the AWS egress cost can be reduced, provided the Interconnect setup offers AWS egress discounts, the trade-off is the running cost of the agent VM. However, that cost is typically lower than the egress cost.

- Object read rates can limit how fast the transfer goes. The recommendation is to split large transfers into multiple jobs, each covering different prefixes (or folder paths).
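One way to follow the multiple-prefix recommendation is to generate one job per prefix. The sketch below only prints the commands so you can review them. The prefixes, bucket names, and the `job_cmd` helper are hypothetical, and the flags should be checked against `gcloud transfer jobs create --help`.

```shell
#!/bin/sh
# One transfer job per prefix, so that object reads are spread across jobs.
# All values are placeholders.
POOL="transfer_service_default"
ENDPOINT="bucket.vpce-xxxx-xxxx.s3.us-east-1.vpce.amazonaws.com"
SIGNING_REGION="us-east-1"

# Print (not run) the job-creation command for a single prefix.
job_cmd() {
  # $1 = source bucket, $2 = destination bucket, $3 = object prefix
  echo "gcloud transfer jobs create s3://$1 gs://$2" \
       "--source-agent-pool=$POOL --source-endpoint=$ENDPOINT" \
       "--source-signing-region=$SIGNING_REGION --include-prefixes=$3"
}

# Hypothetical prefixes; list the top-level folders of your own bucket.
for prefix in logs/2022/ logs/2023/ images/; do
  job_cmd my-s3-bucket my-gcs-bucket "$prefix"
done
```

Once the printed commands look right, they can be piped to sh or pasted into the terminal on a machine where gcloud is authenticated.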

Conclusion

By using S3-compatible object storage as the source option for Amazon S3 buckets, we can now tunnel the transfer through VPN/Interconnect and migrate large volumes of objects to Cloud Storage. Using Interconnect can save a significant share of the AWS egress cost and makes the transfer more secure.

Thanks for reading! 😊
