- Error in Dataflow pipeline - reading json and writ...

Hi everyone,

I'm running a basic ETL pipeline in Dataflow. The Python script is on my default Cloud Shell VM, which is where I run it from. I pass the arguments as shown below:

python3 my_pipeline.py --project centon-data-pim-dev --region us-central1 --stagingLocation gs://sample_practice_hrp/staging --tempLocation gs://sample_practice_hrp/temp --runner DataflowRunner

Link to the sample file used:

https://github.com/GoogleCloudPlatform/training-data-analyst/blob/master/quests/dataflow_python/1_Ba...

while the other options are as below,

For diagnosing such issues, you might consider the following strategies:

-

Use a structured approach to error solving: Start with the error messages you receive. Even if they seem cryptic at first, they often provide a starting point for your investigation. Then, check the prerequisites for your pipeline (like whether the required datasets and tables exist, whether the data is in the expected format, etc.).

-

Check permissions: Ensure that the Dataflow service account has the necessary permissions to both the source (GCS) and the destination (BigQuery). It requires

storage.objects.getandstorage.objects.listfor GCS, andbigquery.dataEditorandbigquery.jobUserfor BigQuery. -

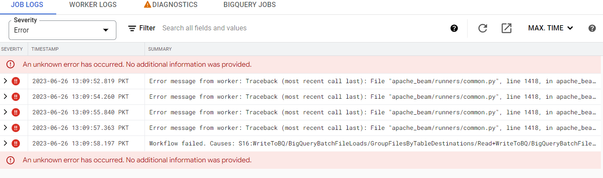

Examine the logs: Cloud Logging is an invaluable tool for understanding what's happening in your pipeline. The logs for Dataflow jobs can be lengthy, but they're often the best place to find detailed error messages. You can filter the logs by the job ID or other parameters to narrow down the volume of logs you need to examine.

-

Validate your data: Make sure the input data is in the expected format and schema. Any discrepancies in data format or schema can cause your pipeline to fail.

-

Use error handling in your code: Consider adding try/except blocks around parts of your code where errors may occur. This can provide more detailed error messages and make it easier to identify the cause of the issue.
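
To make the last two points concrete, here is a minimal sketch of validating JSON lines with try/except before they reach BigQuery. It uses only the Python standard library. The field names in REQUIRED_FIELDS and the helper names parse_record and split_records are hypothetical and not taken from your script, so adjust them to whatever your table schema actually expects.

```python
import json

# Hypothetical required fields (an assumption for illustration);
# replace with the columns your BigQuery table expects.
REQUIRED_FIELDS = ("ip", "user_id", "timestamp")


def parse_record(line, required_fields=REQUIRED_FIELDS):
    """Return (record, None) for a valid JSON line, or (None, reason) otherwise."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        # Keep the parser's message so the bad line can be diagnosed later.
        return None, f"invalid JSON: {exc.msg}"
    if not isinstance(record, dict):
        return None, "expected a JSON object"
    missing = [name for name in required_fields if name not in record]
    if missing:
        return None, "missing fields: " + ", ".join(missing)
    return record, None


def split_records(lines, required_fields=REQUIRED_FIELDS):
    """Separate valid records from rejected lines, keeping the rejection reason."""
    good, bad = [], []
    for line in lines:
        record, error = parse_record(line, required_fields)
        if error is None:
            good.append(record)
        else:
            bad.append({"line": line, "error": error})
    return good, bad
```

Running split_records over a small sample of your input file locally can show whether malformed lines or missing fields are the problem before you submit the job. In a pipeline, the same parse_record logic could probably be called from the parsing step, with rejected lines logged or written somewhere separate instead of failing the whole job.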
