- Google Cloud

- Cloud Forums

- Databases

- Re: Connecting SQL database to Airflow in Google C...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hope all are well. I have a DAG running in the Google Cloud Composer. But, I am having an issue connecting SQL DB for the DAG.

One way I tried was to export my local SQLite db file to Google Cloud Bucket. Then add the URL of this to the Airflow Web UI connections by selecting type as SQLite and host as link to the db file in bucket. But the DAG is getting failed stating that it can’t find the database. Where test button in the connections tab in Airflow Web UI is saying that it cannot open the database.

Another way I tried was by launching a Google Cloud SQL instance and importing the db to that. However, I am not sure what option to select in the Airflow Web UI connections tab for this.

- Labels:

-

Cloud SQL for MySQL

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Connecting an SQLite database to Airflow in Google Cloud Composer

-

Not recommended: To connect an SQLite database to Airflow in Google Cloud Composer, you can export the database file to Google Cloud Storage (GCS) and then create an Airflow connection with the following information:

- Type: SQLite

- Host: The URL of the SQLite database file in GCS

- Username: Empty string

- Password: Empty string

-

Recommended: Instead of using the above approach, it is recommended to copy the SQLite database file from GCS to the local filesystem of the Airflow worker before any operations. This will allow Airflow to access the database file directly.

Connecting a Google Cloud SQL database to Airflow in Google Cloud Composer

-

To connect a Google Cloud SQL database to Airflow in Google Cloud Composer, you can create an Airflow connection with the following information:

- Type: The type of database you are using (e.g., MySQL,PostgreSQL)

- Host: The IP address or domain name of the Cloud SQL instance

- Username: The username for the Cloud SQL instance

- Password: The password for the Cloud SQL instance

-

Note: If using the Cloud SQL Proxy, the "Host" field should typically be

127.0.0.1.

Troubleshooting

If you are still having trouble connecting your SQL database to Airflow, here are a few things to check:

- Make sure that the database connection information is correct.

- Make sure that the Airflow user has permission to access the database.

- Try restarting the Airflow scheduler and workers.

- If you are still having trouble,contact Google Cloud support for assistance.

Additional information

- When connecting to a Google Cloud SQL database, it is important to ensure that the necessary Python packages/drivers are installed in the Airflow environment.

- You can use the Airflow "Secret Manager" connection type to securely store and manage your database connection credentials in Google Cloud Secret Manager.

- Once you have connected your SQL database to Airflow, you can use Airflow operators to access and manipulate data in the database.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey, thank you for the reply. Now, I am trying to add the connection in the Airflow Web UI. I selected the type as MySQL since that's the type of my cloud sql instance and database. Added the public IP of this instance as host. And when tried to test it getting the error:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you are getting the error message "connect() argument 2 must be str, not None" when testing a MySQL connection in Airflow, it means that the Airflow connection tester is trying to pass a value of type None to the second argument of the connect() method. This can happen for a few reasons:

- A required field is missing or not set correctly.

- The username or password is incorrect.

- There is a problem with the MySQL server or database.

To troubleshoot the error, you can try the following:

- Check all required fields. Make sure that all of the required fields in the Airflow connection settings are filled out correctly.

- Try using a different username and password. If you are using a passwordless authentication setup, you can try using a different username or password.

- Check the MySQL server and database. Make sure that the MySQL server is running and that the database is accessible.

If you are still having trouble, you can try the following:

- Try creating a new connection. If you are able to create a new connection without any errors, then the problem is likely with your existing connection settings.

- Try restarting the Airflow scheduler and workers. This can sometimes fix connection problems.

Steps to edit an existing MySQL connection in Airflow

- Go to the Airflow Web UI and navigate to the Connections tab.

- Click on the name of the connection that you want to edit.

- Make the necessary changes to the connection settings.

- Click the "Save" button to save the changes.

Once you have made the necessary changes, try testing the connection again. If the connection test is successful, you should be able to use the Airflow connection to access your MySQL database.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I created the Cloud SQL instance without any password. So, what should I add for login and password in the Airflow Web UI adding connection page?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you created your Cloud SQL instance without a password, you will need to use a different authentication method to connect to it from Airflow.

One option is to use the Cloud SQL Proxy. To do this, you will need to set the Host field in the Airflow connection settings to 127.0.0.1. You can leave the Username and Password fields blank.

Another option is to use a service account to authenticate the Cloud SQL Proxy. To do this, you will need to create a service account and grant it the necessary permissions to access your Cloud SQL instance. You can then use the service account's credentials to configure the Cloud SQL Proxy.

Once the Cloud SQL Proxy is configured, you can use it to connect to your Cloud SQL instance from Airflow. To do this, you will need to set the Host field in the Airflow connection settings to 127.0.0.1. The Username field should contain the database user's username (e.g., root), and the Password field should be left blank if no password is set.

It is important to note that running a database without password protection, even behind a proxy, is a security risk. It is recommended that you set up authentication for your Cloud SQL instance.

Here are the steps to create an Airflow connection to your Cloud SQL instance using the Cloud SQL Proxy:

- Go to the Airflow Web UI and navigate to the Connections tab.

- Click the "Create" button to create a new connection.

- Select the "MySQL" connection type.

- Enter

127.0.0.1in the "Host" field. - Set the "Username" and "Password" fields to blank.

- Click the "Test" button to test the connection.

If the connection test is successful, you should be able to use the Airflow connection to access your Cloud SQL database.

Here are the steps to create an Airflow connection to your Cloud SQL instance using a service account:

- Go to the Airflow Web UI and navigate to the Connections tab.

- Click the "Create" button to create a new connection.

- Select the "MySQL" connection type.

- Enter

127.0.0.1in the "Host" field. - Enter the database user's username (e.g., root) in the "Username" field.

- Leave the "Password" field blank.

- Click the "Test" button to test the connection.

If the connection test is successful, you should be able to use the Airflow connection to access your Cloud SQL database.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've added the Username from the database user name and set the host to 127.0.0.1, the dag is still failing to connect:

MySQLdb.OperationalError: (2003, "Can't connect to MySQL server on '127.0.0.1:3306' (111)")

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If your DAG is still failing to connect to your Cloud SQL instance, consider the following troubleshooting steps:

-

Cloud SQL Instance Status: Ensure your Cloud SQL instance is running and the database is accessible.

-

Airflow Connection Settings: Verify the connection settings in Airflow, including the database username, host (

127.0.0.1if using Cloud SQL Proxy), and port (3306for MySQL). -

Restart Airflow Services: Consider restarting the Airflow scheduler and workers, as this can sometimes resolve connection issues.

-

Test Connection with Another Tool: Try connecting to the database using a different tool, such as MySQL Workbench, to isolate the issue.

-

Cloud SQL Proxy: Ensure the Cloud SQL Proxy is running on the same machine as Airflow and is correctly configured. If using a service account with the proxy, verify it has the necessary permissions.

-

Firewall Rules: Check the firewall rules for your Cloud SQL instance to ensure they allow traffic from the Airflow server.

-

Database Engine: Ensure the database type specified in Airflow matches the type of your Cloud SQL instance.

If you've checked all of the above and still face issues, please provide more details about your setup, such as the Airflow version and the exact error message. This will help in providing additional advice.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If there isn't a Cloud SQL proxy, then the public IP of that instance is the host the airflow has to connect to, right?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, that is correct. If you are not using the Cloud SQL Proxy, then you will need to set the "Host" field in the Airflow connection settings to the public IP address of your Cloud SQL instance.

However, connecting directly to a Cloud SQL instance using its public IP can introduce security risks. While you can encrypt direct connections using SSL/TLS and restrict access with firewall rules, the Cloud SQL Proxy offers several advantages:

-

Encryption: The proxy automatically encrypts traffic between Airflow and your Cloud SQL instance, eliminating the need to manage SSL/TLS certificates.

-

Authentication and Authorization: The proxy integrates with Google Cloud IAM, simplifying access management and ensuring only authorized applications and users can access the database.

-

No Public IP Required: With the proxy, you can connect to Cloud SQL instances without a public IP, further reducing exposure.

If security is a concern, it's recommended to use the Cloud SQL Proxy. For more detailed information on connecting to Cloud SQL securely, consider reviewing Google Cloud's documentation or consulting with your IT or security team.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Security isn't a concern, for now. But I am still not able to connect to the SQL instance with public IP as host.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

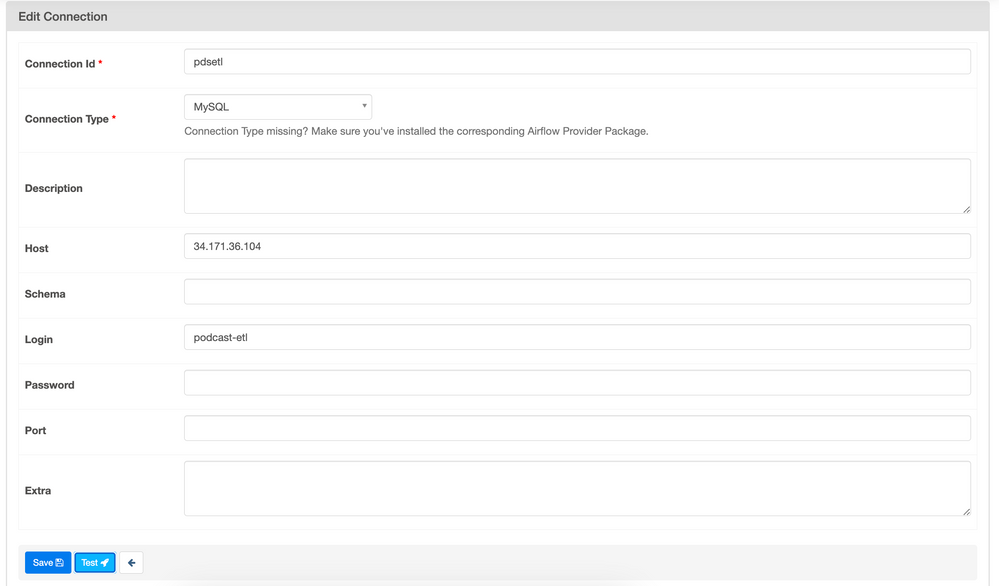

This is how it looks. With the host having Cloud SQL public IP and login having the service account username that has access to the SQL instance. The test doesn't return anything. Just stuck.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I understand your frustration. When connecting to a Cloud SQL instance directly, especially from tools like Airflow, there are several factors to consider:

-

Firewall Rules: Ensure that the firewall rules for your Cloud SQL instance allow traffic from the IP address of your Airflow server.

-

Database User vs. Service Account: In the Airflow connection settings, the "login" should be a database user (like

rootor another user you've created within the SQL instance), not a service account username. Service accounts are typically used for Cloud SQL Proxy authentication or other Google Cloud services. -

Test with Another Tool: Try connecting to the database using a different tool, such as MySQL Workbench. This can help isolate whether the issue is with Airflow or the Cloud SQL instance itself.

-

Airflow Restart: Consider restarting the Airflow scheduler, workers, and web server. Sometimes, this can resolve transient connection issues.

-

New Airflow Connection: Try creating a new connection in Airflow with the same settings to see if it behaves differently.

-

Cloud SQL Proxy: If direct connection continues to be problematic, consider using the Cloud SQL Proxy for a more secure and reliable connection method. Remember to use a service account with the appropriate permissions to authenticate the proxy.

-

Logs: Check both Airflow and Cloud SQL logs for any error messages or warnings. These can provide more detailed information about the connection issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, I wanted to know if can we connect MySQL directly from the airflow in Cloud Composer. [no cloud SQL instance]

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, you can connect to a MySQL database directly from Airflow in Google Cloud Composer, even if the database is not a Cloud SQL instance. To do this, you will need to set up a connection in Airflow with the appropriate credentials and connection details for your MySQL database. Here are the general steps:

-

Database Information: Ensure you have the following information about your MySQL database:

- Hostname or IP address

- Port (default is 3306 for MySQL)

- Database name

- Username and password for the database

-

Create Airflow Connection:

- Go to the Airflow Web UI in your Cloud Composer environment.

- Navigate to

Admin>Connections. - Click on "Create" to add a new connection.

- Fill in the connection details:

- Conn Id: Give a unique name for your connection.

- Conn Type: Select

MySQL. - Host: Enter the hostname or IP address of your MySQL server.

- Schema: Enter the name of your database.

- Login: Enter the username for the database.

- Password: Enter the password for the database.

- Port: Enter the port number (default is 3306).

-

Test the Connection:

- After setting up the connection, use the "Test" button to check if Airflow can successfully connect to the MySQL database.

-

Use in DAGs:

- In your DAGs, refer to this connection by the

Conn Idyou specified to perform operations on your MySQL database.

- In your DAGs, refer to this connection by the

Remember, when connecting to an external database, ensure that your network settings (like firewalls and VPC rules) allow traffic between your Cloud Composer environment and the MySQL server. If the MySQL server is outside Google Cloud, you might need to configure additional network settings for connectivity.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your response, I have done the same way, but getting this error : (pymysql.err.OperationalError) (2003, "Can't connect to MySQL server on '10.245.8.38' (timed out)")

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The error (pymysql.err.OperationalError) (2003, "Can't connect to MySQL server on '10.245.8.38' (timed out)") indicates that Cloud Composer is unable to establish a connection to the MySQL server at the specified IP address within the allotted time. This issue is typically related to network connectivity or configuration. Here are some steps to troubleshoot and resolve this issue:

-

Check Network Accessibility:

- Ensure that the MySQL server at

10.245.8.38is accessible from the network where your Cloud Composer instance is running. If Cloud Composer is running in Google Cloud and the MySQL server is outside of Google Cloud, you need to ensure that the network settings allow external connections.

- Ensure that the MySQL server at

-

Verify IP Address and Port:

- Double-check that the IP address

10.245.8.38and the port (default is 3306 for MySQL) are correct. Ensure that the MySQL server is listening on the specified port.

- Double-check that the IP address

-

Firewall and Security Groups:

- Verify that any firewalls or security groups are configured to allow inbound connections to the MySQL server on the relevant port (usually 3306) from the IP range of your Cloud Composer environment.

-

MySQL Server Configuration:

- Check the MySQL server configuration to ensure it's set up to accept connections from your Cloud Composer's IP address. If the MySQL server is configured to listen only on

localhostor a specific IP, it won't accept connections from other IPs.

- Check the MySQL server configuration to ensure it's set up to accept connections from your Cloud Composer's IP address. If the MySQL server is configured to listen only on

-

Test Connectivity:

- Try to connect to the MySQL server using the same credentials and network path from a different client (like a MySQL client tool) to rule out issues specific to Cloud Composer.

-

Timeout Settings:

- Consider increasing the timeout setting in the Cloud Composer connection configuration if the default timeout is too short.

-

Logs and Monitoring:

- Check the logs of both the MySQL server and the Cloud Composer instance for any additional error messages or clues.

-

VPN or Network Peering:

- If the MySQL server is in a different network (especially in a different cloud or on-premises), ensure that there is proper VPN or network peering set up between the networks.

Twitter

Twitter