- Looker & Looker Studio

- Looker Forums

- Developing Applications

- Writing a simple data dictionary to csv using the ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

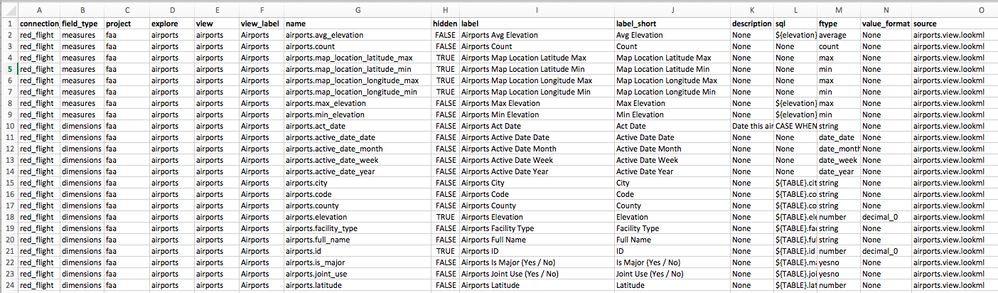

There are many reasons to come up with a document that explains what fields/explores/models are available or exposed in our Looker application. This script is used to parse through the model definition and print out a csv of fields. I use the Looker API to get the model Metadata.

I use python, and while you can use the Looker SDK, I prefer to use the python requests library.

With some other changes, you can easily figure out how fields are set up and audit the model for items such as:

- Do we follow a consistent naming convention?

- Do we have redundant or similar fields?

- Did we do a good job annotating fields via descriptions?

We’ve already done this into an interactive webpage using the Ruby SDK. You can see that here:

https://discourse.looker.com/t/creating-a-data-dictionary-using-lookers-api/3589

endpoints

To get this started, I need to have endpoints for authentication, get_model and get_explore endpoints. I do that with an API class:

class LookerApi(object):

def __init__(self, token, secret, host):

self.token = token

self.secret = secret

self.host = host

self.session = requests.Session()

self.session.verify = False

self.auth()

def auth(self):

url = '{}{}'.format(self.host,'login')

params = {'client_id':self.token,

'client_secret':self.secret

}

r = self.session.post(url,params=params)

access_token = r.json().get('access_token')

# print access_token

self.session.headers.update({'Authorization': 'token {}'.format(access_token)})

# GET /lookml_models/{{NAME}}

def get_model(self,model_name=None,fields={}):

url = '{}{}/{}'.format(self.host,'lookml_models', model_name)

# print url

params = fields

r = self.session.get(url,params=params)

if r.status_code == requests.codes.ok:

return r.json()

# GET /lookml_models/{{NAME}}/explores/{{NAME}}

def get_explore(self,model_name=None,explore_name=None,fields={}):

url = '{}{}/{}/{}/{}'.format(self.host,'lookml_models', model_name, 'explores', explore_name)

print url

params = fields

r = self.session.get(url,params=params)

if r.status_code == requests.codes.ok:

return r.json()

csv writing

Once we can call those endpoints, The script should call for all models, and parse through each explore: calling for all the field information in a loop. We then will write each field and it’s metadata to a new row. For each row, I have created a function to call:

def write_fields(explore, fields):

### First, compile the fields you need for your row

explore_fields=explore['fields']

try:

connection_name = str(explore['connection_name'])

except:

connection_name = ''

for dimension in explore_fields[fields]:

# print dimension

field_type = fields

project = str(dimension['project_name'])

explore = str(explore_def['name'])

view=str(dimension['view'])

view_label=str(dimension['view_label'])

name=str(dimension['name'])

hidden=str(dimension['hidden'])

label=str(dimension['label'])

label_short=str(dimension['label_short'])

description=str(dimension['description'])

sql=str(dimension['sql'])

ftype=str(dimension['type'])

value_format=str(dimension['value_format'])

source = str(dimension['source_file'])

### compile the line - this is possible to combine above, but here to keep things simple

rowout = []

rowout.append(connection_name)

rowout.append(field_type)

rowout.append(project)

rowout.append(explore)

rowout.append(view)

rowout.append(view_label)

rowout.append(name)

rowout.append(hidden)

rowout.append(label)

rowout.append(label_short)

rowout.append(description)

rowout.append(sql)

rowout.append(ftype)

rowout.append(value_format)

rowout.append(source)

w.writerow(rowout)

csv formatting

Then all I need is to instantiate the API, open a CSV, write the header, and then iterate through my models. using the csv library we can start a csv with this code:

csvfile= open('dictionary.csv', 'wb')

w = csv.writer(csvfile, delimiter=',', quotechar='"', quoting=csv.QUOTE_MINIMAL)

header = ['connection_name',

'field_type',

'project',

'explore',

'view',

'view_label',

'name',

'hidden',

'label',

'label_short',

'description',

'sql',

'ftype',

'value_format',

'source']

w.writerow(header)

Parse the model.

The rest of the script looks like this:

-

Get looker API 3.0 Credentials

-

Call for the model

-

Parse through the model and write each field as a row into our csv

-

close the file

f = open(‘config.yml’)

params = yaml.load(f)

f.close()hostname = ‘localhost’

my_host = params[‘hosts’][hostname][‘host’]

my_secret = params[‘hosts’][hostname][‘secret’]

my_token = params[‘hosts’][hostname][‘token’]looker = LookerApi(host=my_host,

token=my_token,

secret = my_secret)--------- API Calls -------------

– Get all models –

models = looker.get_model("")

pp(models)

for model in models:

model_name = model[‘name’]## -- Get single model -- model_def = looker.get_model(model_name) # pp(model_def) ## -- Get single explore -- for explore_def in model_def['explores']: explore=looker.get_explore(model_name, explore_def['name']) # pp(explore) ## -- parse explore -- try: write_fields(explore,'measures') except: print 'Problem measure fields in ', explore_def['name'] try: write_fields(explore,'dimensions') except: print 'Problem dimension fields in ', explore_def['name']

The end result of executing this file is a csv file called “dictionary.csv”

Check out the full script called get_data_dictionary.py here: https://github.com/llooker/python_api_samples

Note: the link will use a LookerAPI.py file to hold the class, and a configuration file for keys. Check the readme for setting this up.

-

access grant

1 -

actionhub

6 -

Actions

103 -

Admin

3 -

AI ML General

1 -

alert

1 -

Analytics Block

33 -

API

159 -

Authentication

8 -

BigQuery

7 -

blocks

2 -

boards

4 -

Bug

23 -

cache

2 -

Components

67 -

connection

5 -

connection database

2 -

content access

1 -

content-validator

3 -

customdimension

1 -

Dashboards

92 -

Data

4 -

Data Sources

1 -

Database

3 -

deprecated

1 -

derivedtable

2 -

develop

2 -

development

2 -

done

3 -

downloading

1 -

drilling

3 -

dynamic

2 -

embed

60 -

Embedding

71 -

Errors

6 -

etl

2 -

explore

4 -

Explores

4 -

Extensions

15 -

feature-requests

2 -

filed

1 -

Filter

35 -

folders

2 -

Gemini

1 -

git

7 -

Google Sheets

1 -

googlesheets

1 -

i__looker

1 -

Integrations

1 -

javascript

5 -

json

2 -

links

1 -

liquid

1 -

Looker

1 -

Looker API

144 -

LookerStudio

1 -

LookML

38 -

lookml dashboard

3 -

looks

11 -

Marketplace

24 -

merge

2 -

modeling

1 -

mysql

1 -

Networking

1 -

on-hold

1 -

parameter

2 -

pdf

3 -

pdt

2 -

permission management

3 -

postgresql

1 -

Projects

1 -

python

26 -

pythonsdk

7 -

Query

11 -

query manager

2 -

quickstart

1 -

ReactJS

10 -

redshift

3 -

release

6 -

schedule

16 -

schedule delivery

2 -

sdk

56 -

server configuration

1 -

sharing

1 -

snowflake

2 -

SQL

7 -

SSO

7 -

System Activity

2 -

tests

1 -

Ui

1 -

user access management

3 -

user management

5 -

user-attributes

12 -

Vertex AI Platform

1 -

Views

2 -

Visualizations

44 -

watch

1 -

webhook

6

- « Previous

- Next »

Twitter

Twitter