How to disable concurrent execution of a batch job

I'm currently using a Google Cloud workflow to create and run a Batch job every 30 minutes. However, new jobs are sometimes created while earlier ones are still running. How can I disable concurrency so that only one job runs at a time and no new job is created while a previous one is still running? Any guidance on how to achieve this within the workflow setup would be greatly appreciated. The step that creates the job is:

```yaml
- createAndRunBatchJob:
    call: http.post
    args:
      url: ##{batchApiUrl}
      query:
        job_id: ##{jobId}
      headers:
        Content-Type: application/json
      auth:
        type: OAuth2
      body:
        taskGroups:
          taskSpec:
            runnables:
              - container:
                  imageUri: ##{imageUri}
                  commands:
                    - "--script-location"
                    - "/mnt/disks/batch-scripts-${project_sha}/google_tv_aep/batch/code"
                environment:
                  variables:
                    job_id: ##{jobId}
                    secret: projects/${project}/secrets/${secret_nm}/versions/latest
                    local_path_1: /mnt/disks/${local_path_1}
                    local_path_2: /mnt/disks/${local_path_2}
                    query_file: "tv_mkt_campaign_events_load_query.sql"
                    output_dataset_name: "tv_mkt_campaign_events_incremental_data.json"
                    project: "${project}"
                    aep_sandbox: "${aep_sandbox}"
                    aep_dataset_name: "TV mkt campaign Events"
                    load_mode: "incremental"
                    aep_connection_id: "${aep_connection_id}"
                    checkpoint_file: "mkt_campaign_checkpoint.param"
                    aep_flow_id: "${aep_flow_id}"
                    checkpoint_field: timestamp
            volumes:
              - mountPath: /mnt/disks/batch-scripts-${project_sha}
                gcs:
                  remotePath: batch-scripts-${project_sha}
              - mountPath: /mnt/disks/${bucket_nm}
                gcs:
                  remotePath: ${bucket_nm}
            computeResource:
              cpuMilli: 2000
              memoryMib: 16384
          taskCount: 1
          parallelism: 2
        allocationPolicy:
          network:
            networkInterfaces:
              network: projects/${project}/global/networks/spark-network
              subnetwork: projects/${project}/regions/${location}/subnetworks/spark-subnet-pr
              noExternalIpAddress: true
          serviceAccount:
            email: ${builder}
        logsPolicy:
          destination: CLOUD_LOGGING
    result: createAndRunBatchJobResponse
```

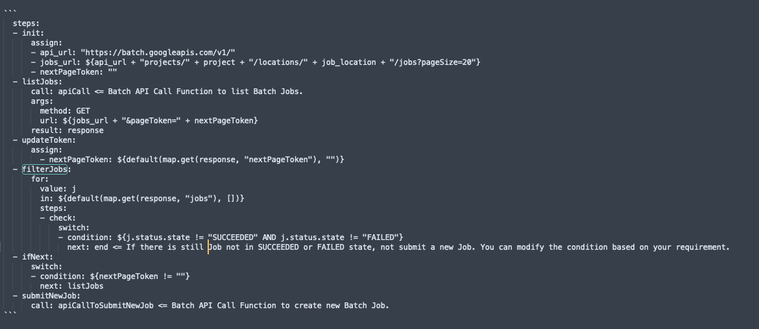

Hi @sugesh,

Below is one example of submitting a new job only after all existing jobs in the list have completed.

Ref:

- https://cloud.google.com/batch/docs/reference/rest/v1/projects.locations.jobs#State

- https://cloud.google.com/workflows/docs/reference/syntax/conditions#yaml

Hope this helps,

Wenyan
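The check described in the answer could be sketched in Workflows YAML roughly as follows. This is a minimal sketch, not the answerer's exact code, and it rests on several assumptions: `project` and `location` are available as Workflows variables; every job this workflow creates has an ID starting with a hypothetical prefix stored in `jobPrefix`; and only the first page of the `jobs.list` response is inspected (a region with many jobs would also need to follow `nextPageToken`). `QUEUED`, `SCHEDULED` and `RUNNING` are treated as "still active", based on the job `State` values in the Batch reference linked above. The expressions use plain `${...}` syntax; in a templated file like the one in the question they would presumably need the same `##{...}` escaping used for `jobId`.

```yaml
# Hypothetical guard steps, placed in the same steps list just before createAndRunBatchJob.
- initGuard:
    assign:
      - jobPrefix: "tv-mkt-campaign-events"  # hypothetical: must match how jobId is built
      - activeStates: ["QUEUED", "SCHEDULED", "RUNNING"]
      - activeCount: 0
- listBatchJobs:
    # Batch REST API projects.locations.jobs.list; only the first page is read here
    call: http.get
    args:
      url: ${"https://batch.googleapis.com/v1/projects/" + project + "/locations/" + location + "/jobs"}
      auth:
        type: OAuth2
    result: listResponse
- countActiveJobs:
    for:
      value: job
      # Fall back to an empty list if the response body has no "jobs" key
      in: ${default(map.get(listResponse.body, "jobs"), [])}
      steps:
        - checkJob:
            switch:
              # Count only jobs whose name contains this workflow's prefix and that have not finished
              - condition: ${text.match_regex(job.name, ".*/jobs/" + jobPrefix + ".*") and job.status.state in activeStates}
                steps:
                  - incrementActive:
                      assign:
                        - activeCount: ${activeCount + 1}
- skipIfActive:
    switch:
      - condition: ${activeCount > 0}
        steps:
          - logSkip:
              call: sys.log
              args:
                text: ${"Skipping this run; active Batch jobs found: " + string(activeCount)}
                severity: INFO
          - endExecution:
              # return inside main ends this execution without creating a job
              return: "skipped"
# If no active job was found, execution falls through to createAndRunBatchJob.
```

With the 30-minute schedule, a run that finds an active job simply ends, and the next scheduled run checks again. Note that this narrows the overlap rather than acting as a hard lock: two executions starting at almost the same moment could both see zero active jobs.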
