- Google Cloud

- Cloud Forums

- Integration Services

- Re: Store Shopify Data to BigQuery using Shopify C...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I am trying to fetch and store the Shopify's data to BigQuery using Shopify's connector. I have added two connectors, one of them has a REST schema and the second one has a GRAPHQL schema. Both connectors have an active status. How can I fetch and store the Shopify's data using this connector? Is it possible to do so using Google API?

Kind regards, Iana

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

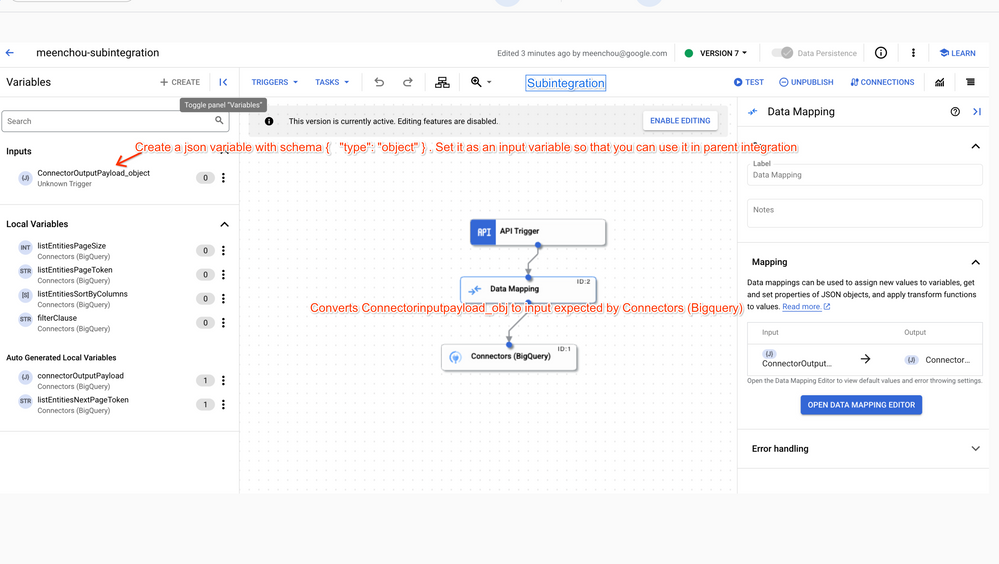

@Meenchou helped to create the sub-integration and connect it to the main integration. That solved an issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

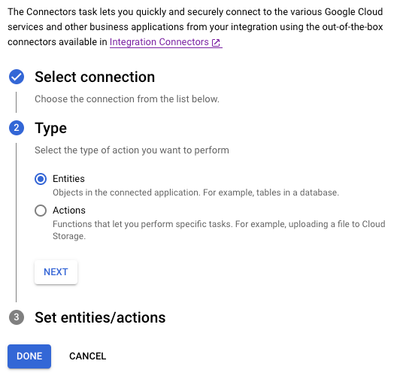

Kindly select the Shopify connection configured with REST schema and configure LIST operation in Connector task in Application Integration. LIST operation allows to fetch data records from Shopify. Example listed below can be helpful for step wise explanation

https://cloud.google.com/integration-connectors/docs/perform-crud-operation-mysql-database

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Madhuvandhini ,

Thank you for your response. I added the Shopify connector in the Application Integration and it gives the required results in a form of array. Also I added a BigQuery connector in order to pass the fetched data to the warehouse. There are some instructions given here: https://cloud.google.com/application-integration/docs/insert-data-bigquery-for-each-parallel-task?_g.... However, the data fetched from the Shopify's connector does not pass as an input to the BigQuery connector as BigQuery connector requires an input in a form of an object. Could you suggest how an array can be passed an an object to BigQuery connector to store the data there? Is there some proprocessing needed.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ianala

you can use data mapping task to convert your array to an object (store it in a json variable)

try this mapping in the data mapping editor

arrayVar.TO_JSON() -> jsonVar

you should be able to pass jsonVar as an input to the BigQuery connector

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

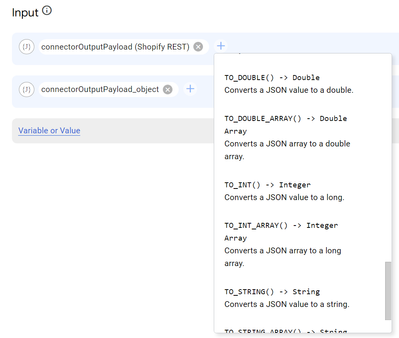

Hi @Meenchou ,

Thanks a lot for your comment. I've converted the output of Shopify's connector to string array and then TO_JSON and created an variable with the object with the following JSON schema: {

"type": "object"

}. Consequently, the created variable (connectorOutputPayload_object) is added as an input and the `ConnectorInputPayload (BigQuery)` is added as an output.When I test the integration, it throws the same error saying "Message: instance type (array) does not match any allowed primitive type (allowed: ["object"]) Schema Path:"". Could you guide what the issue could be with the convertation?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you try this

ConnectorOutputPayload (Shopify Rest). TO_JSON() -> ConnectorOutputPayload_object

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To_JSON() is not displayed in the list of possible functions to apply to ConnectorOutputPayload (Shopify Rest).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Kindly send private message to me with details of project name and integration name. We will look into it and revert back

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've sent you a PM.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Meenchou helped to create the sub-integration and connect it to the main integration. That solved an issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

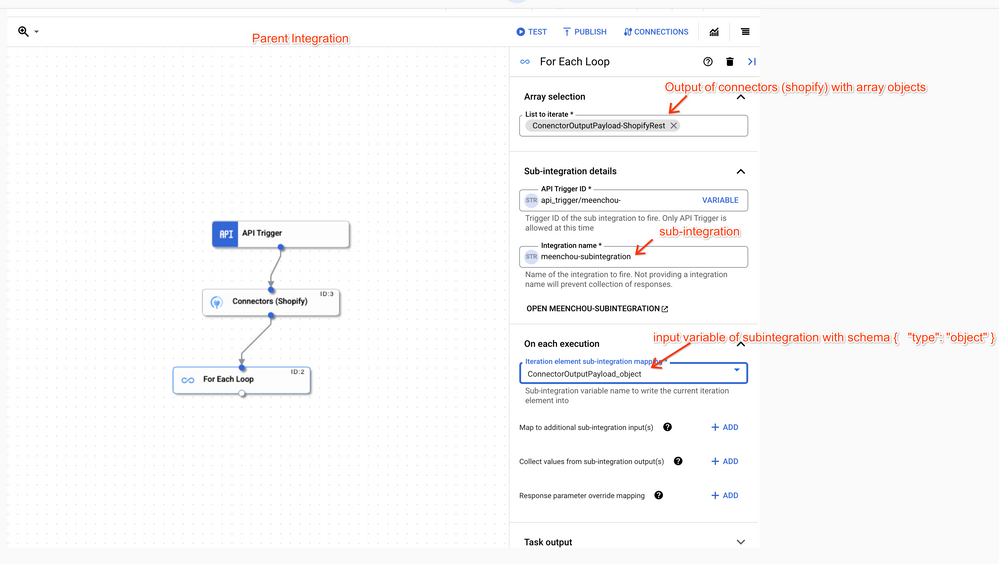

It seems like bigQuery connector input payload is expecting a single object and not an array

you can use foreach loop task to loop through that array and call the subintegration which would eventually call big query connector and the output from the task can be collected in your parent integration

Note:Publish the subintegration first and then refresh your parent integration

If you want to test the flow, you should directly invoke the parent integration and the value will be automatically set from parent integration

If you just want to invoke your subintegration for testing purpose, then you need to provide the input value

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is it possible to get more details of how the data mapping was configured?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

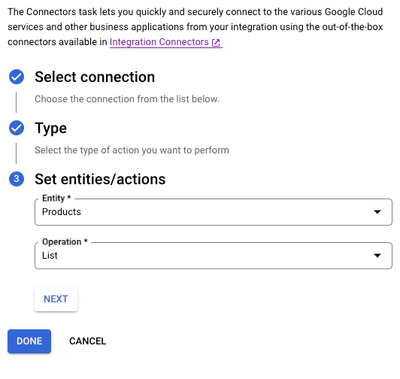

Shouldnt we use Create operation for Bigquery connector here instead of List since we want to store the data in Bigquery. Whats the point of keeping it as list operation here? I'm confused

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You are correct, the LIST operation is like a SELECT * FROM Table Where Filter ; operation, so it will return a list of results. You would want to use the Create operation to insert a row into BigQuery (in a loop for many rows)...

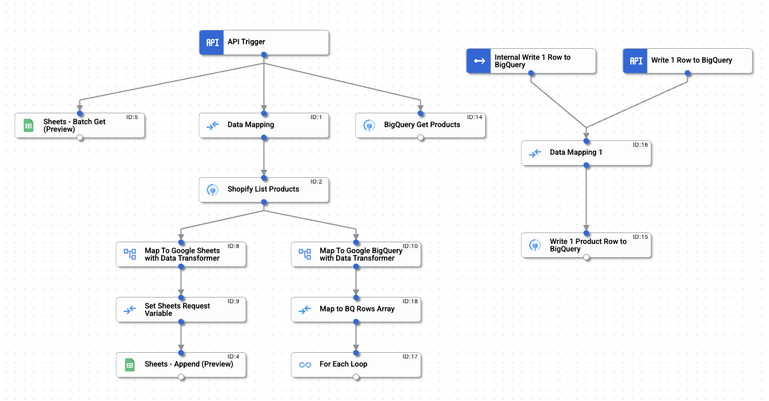

Here is a flow that does Shopify to Sheets and to BigQuery for the Product object in Shopify. I have a couple of extra branches which do a list of the existing data in Sheets and in BigQuery, so that I can view that in the execution logs to see the before and after my inserts. These are completely optional and can be removed if you want. I've found it helpful to do a LIST operation just so I can get some sample data in the logs and that helps me to figure out the business meaning of each of the fields I need to map (and can be used as the structure for my Data Transformer task's script).

You will see that the BigQuery connector is in the second integration flow to the right, and it is called by the For Each Loop (ID:17). There is a way to do batch updates with a job, etc..., but I just wanted something simple when I was developing this, so I did a loop with the Create operation on the BigQuery Connector, which takes one row at a time. Also note that I have 2 triggers on the sub-integration to write a row to BigQuery. This is also optional. The recommended trigger to use is the Private Trigger. The API trigger is there in case I want to call this sub-integration as an API from some external code someday in the future. The Private trigger can only be called by another integration in the same project, while an API Trigger will always create a Public API (secured by IAM) (for example, this can be called from an Apigee Proxy).

Here are the Shopify connection task configurations:

I used the new Data Transformer Task (Preview) for all of my main data mappings from Shopify to Sheets and BigQuery formats. If there is interest, I can share these details as well. I found the Shopify data structure a bit challenging because some of their JSON substructures had stringified JSON in them, so I had to add an extra parseJson command for those sub structures.

Hope that helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And here is the BigQuery Connection configuration (Task ID:15) ... I used the "Entity" option, and selected my dataset "shopify.products" and the "Create" operation

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As an alternative, you can use the Skyvia (URL Removed by Staff) connector for integration

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@shaaland Hey! I'm following a similar method to the one you shared above. However, I have a lot of records to pull from Shopify so I would like to use the method of a while loop and page tokens to go pull the data from each page, insert into bigquery and repeat.

Currently, I have found the issue that whenever I assign the value from ListEntitiesNextPageToken to ListEntitiesPageToken, I get an error.

Similarly, whenever I try to add a default value for listEntitiesSortByColumns I also get an error. Do you know why this could be? I am adding the data in as a string

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Send me a DM and we can try to troubleshoot it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks so much for your reply- I don't seem to have the DM option yet on Google Cloud as I joined not long ago.

I think the issue is when assigning the listEntititesNextPageToken to the ListEntitiesPageToken for Shopify it looks like it's not able to process the token. I keep getting the error 400.

For example, even when simplifying to an integration as below:

the first task of returning 10 runs fine, so does the data mapping but after assigning the nextpagetoken to the page token there seems to be some kind of processing error:

Logs:

The other variables are part of another integration* and it doesn't matter the length of time I assign to test this- the error code comes back nonetheless

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for the details. I'll raise this with engineering and see if we can replicate this and figure it out...hopefully it is something simple. It does look like the Next page token does have a value which you successfully mapped to the List page token input....

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you! My only other thought was it could be an issue with how I set the Shopify connector up but not sure if thats relevant

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I saw there was a request for the Data mapping in this thread....so here is my data transformer script for mapping to BigQuery....I'm sure it can be improved from here, but it gives you a flavor for how the JSonnet script works in the Data Transformer task. I highly recommend using the Data Transformer Playground developed by one of our Customer Engineers -- I found it very helpful when developing this.

local f = import 'functions'; // Import additional functions

// TEMPLATE OUTPUT

// Json Object is expected as on output. The key of the object would be the variable whose value needs to be set.

// Example:

// {

// hello: "world"

// }

local ShopifyProducts = std.extVar('`Task_2_connectorOutputPayload`');

local c = 0;

local parseJson(d) = std.parseJson( std.strReplace(std.stripChars(d, "\n \r\n" ),"\n", " " ));

local parseNumber(str) = std.parseInt( std.substr( str, 0, std.length(str)-3));

local createBQRow(product) =

local variant = parseJson(product.Variants)[0];

local image = parseJson(product.Images)[0];

{

"Handle": product.Handle,

"Title": product.Title,

"Body_HTML": product.BodyHtml,

"Vendor": product.Vendor,

"Product_Category": "",

"Type": product.ProductType,

"Tags": product.Tags,

"Published": (if product.PublishedScope == "global" then true else false),

"Option1_Name": "Title",

"Option1_Value": variant.option1,

"Option2_Name": variant.option2,

"Option2_Value": variant.option2,

"Option3_Name": variant.option3,

"Option3_Value": variant.option3,

"Variant_SKU": (if variant.sku == null then "" else variant.sku),

"Variant_Grams": variant.grams,

"Variant_Inventory_Tracker": variant.inventory_management,

"Variant_Inventory_Qty": variant.inventory_quantity,

"Variant_Inventory_Policy": variant.inventory_policy,

"Variant_Fulfillment_Service": variant.fulfillment_service,

"Variant_Price": parseNumber(variant.price),

"Variant_Compare_At_Price": parseNumber(variant.compare_at_price),

"Variant_Requires_Shipping": variant.requires_shipping,

"Variant_Taxable": variant.taxable,

"Variant_Barcode": (if variant.barcode == null then "" else variant.barcode),

"Image_Src": image.src,

"Image_Position": image.position,

"Image_Alt_Text": image.alt,

"Gift_Card": "", //gift card isn't in the API"

"SEO_Title": "", //seo_title isn't in the API output"

"SEO_Description": "", //seo_description isn't in the API output"

"Google_Shopping_Google_Product_Category": "", //google shopping category"

"Google_Shopping_Gender": "", //google shopping gender"

"Google_Shopping_Age_Group": "", //google shopping / age group"

"Google_Shopping_MPN": "", //google shopping / MPN"

"Google_Shopping_Condition": "", //google shopping / Condition"

"Google_Shopping_Custom_Product": "", //google shopping / Custom Product"

"Google_Shopping_Custom_Label_0": "", //google shopping / Custom Label 0"

"Google_Shopping_Custom_Label_1": "", //google shopping / Custom Label 1"

"Google_Shopping_Custom_Label_2": "", //google shopping / Custom Label 2"

"Google_Shopping_Custom_Label_3": "", //google shopping / Custom Label 3"

"Google_Shopping_Custom_Label_4": "", //google shopping / Custom Label 4"

"Variant_Image": variant.image_id,

"Variant_Weight_Unit": variant.weight_unit,

"Variant_Tax_Code": "", //variant tax code"

"Cost_per_item": "", //cost per item"

"Included_United_States": true, //included / united states"

"Price_United_States": "", //price / united states"

"Compare_At_Price_United_States": "", //compare at United states"

"Included_International": true, //included / Interntional"

"Price_International": "", //price / International"

"Compare_At_Price_International": "", //Compare at price / International"

"Status": product.Status,

};

{

// Add mapping here

BigQueryRequest: {

rows: [createBQRow(product) for product in ShopifyProducts]

},

}

I

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @shaaland I referred Data Transformer Playground

the site (https://datatransformer-playground.web.app/) is not found.

Is JSonnet is still in use? It seems it is not maintained.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @muhasin-gauger ,

Apologies...it looks like the playground is offline. However, the Data Transformer task in Application Integration still has full support for JSonnet. I will investigate if we can get the playground back up and running...it is simply a tool to aid developers in being able to pre-test their JSonnet scripts.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Another alternative is a third party connector like Windsor.ai, which provides a free Shopify connector that handles technical complexities and schema management, eliminating the need to custom code or manage infrastructure, and if you're integrating multiple data sources, it can consolidate data into a unified BigQuery destination.

-

Apigee General

1 -

Application Integration

484 -

Eventarc

16 -

Integration Connectors

203 -

Scheduler

20 -

Tasks

62 -

Workflows

48

Twitter

Twitter