Defining JSON Objects as LookML Dimensions

When you come across JSON objects in Postgres and Snowflake, the obvious thing to do is to use a JSON parsing function to select JSON keys as LookML dimensions. You’ll be able to create a lot of dimensions without any issues, but there are some nuances to note. This article will outline the process of defining dimensions and iron out some issues you may encounter in the process.

Step 1: Select the raw JSON dimension to see what key-value pairs are included

Say there is a dimension defined as the following:

Postgres or Snowflake:

- dimension: test_json_object

sql: ${TABLE}.test_json_object

If you select this dimension in an explore, you’ll get something like this:

{"key1":"abc","key2":"a44g6jX3","key3":"12345","key4":"2015-01-01 12:33:24"}

Now we know that our new dimensions are key1 through key4, and that the values range from plain strings to a number and a timestamp, all stored as JSON strings.
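If the object has many keys, a query can list them for you. The following is a minimal sketch for Postgres only, run outside Looker, with the sample object pasted in as a literal in place of ${TABLE}.test_json_object; the aliases kv, json_type and raw_value are just illustrative names.

```sql
-- Postgres: list each key in the sample object together with its JSON type.
-- The literal below stands in for ${TABLE}.test_json_object.
SELECT
  kv.key,
  json_typeof(kv.value) AS json_type,
  kv.value              AS raw_value
FROM json_each(
  '{"key1":"abc","key2":"a44g6jX3","key3":"12345","key4":"2015-01-01 12:33:24"}'::json
) AS kv;
```

In this sample every value is quoted, so key3 and key4 are JSON strings rather than a native number or timestamp, which is why the casts in the later steps matter.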

Step 2: Manually define each key using a JSON parsing function or a single colon (':')

To define key2 in the above-described example, you would write the following:

Postgres:

- dimension: key2

sql: json_extract_path(${TABLE}.test_json_object, 'key2')

Snowflake:

- dimension: key2

sql: ${TABLE}.test_json_object:key2

By default this returns the value as JSON (a VARIANT in Snowflake) rather than as plain text, so a string still comes back in quotes:

"a44g6jX3"
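To see why the quotes are there, you can check the type of the extracted value directly in the database. This is a small Postgres sketch using a literal in place of the real column; obj and sample are illustrative aliases.

```sql
-- Postgres: json_extract_path returns a json value, not text,
-- so a string element keeps its surrounding double quotes.
SELECT
  json_extract_path(obj, 'key2')            AS key2_value,
  pg_typeof(json_extract_path(obj, 'key2')) AS key2_type
FROM (
  SELECT '{"key1":"abc","key2":"a44g6jX3"}'::json AS obj
) AS sample;
```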

Step 3: Cast data types for each dimension

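Now that we have a baseline list of dimensions defined, we'll explicitly cast each one to an appropriate data type in its sql parameter. In Postgres, extract the value as text with JSON_EXTRACT_PATH_TEXT before casting, because a value that is still JSON keeps its quotes: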

Postgres:

- dimension: key2

sql: CAST(JSON_EXTRACT_PATH_TEXT(${TABLE}.test_json_object, 'key2') AS text)

Snowflake:

- dimension: key2

sql: ${TABLE}.test_json_object:key2::string

This will now result in quotes being removed:

a44g6jX3
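The effect of the cast can be checked in Postgres outside Looker. The sketch below assumes the same sample object as a literal, and it uses json_extract_path_text, which returns text rather than json, before casting key3 to an integer. The column aliases are illustrative.

```sql
-- Postgres: compare the json result with the text result, then cast.
SELECT
  json_extract_path(obj, 'key2')                       AS key2_json,  -- still quoted
  json_extract_path_text(obj, 'key2')                  AS key2_text,  -- quotes removed
  CAST(json_extract_path_text(obj, 'key3') AS integer) AS key3_int
FROM (
  SELECT '{"key2":"a44g6jX3","key3":"12345"}'::json AS obj
) AS sample;
```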

Simply declaring a LookML dimension type (string, number, etc.) may NOT remove the quotes, specifically in Snowflake. Even worse, if you have an integer dimension defined as follows (type declared, but not explicitly cast)…

Snowflake:

- dimension: key3

type: number

sql: ${TABLE}.test_json_object:key3

… you risk obtaining nulls for that dimension.

As such, explicitly casting data types at this stage is crucial.
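For Snowflake, a quick way to inspect what a path expression returns before and after casting is sketched below. It assumes a literal parsed with PARSE_JSON in place of the real column; v, t and the column aliases are illustrative names.

```sql
-- Snowflake: inspect the raw VARIANT and its explicit casts for key3.
SELECT
  v:key3         AS raw_variant,   -- VARIANT holding a JSON string
  TYPEOF(v:key3) AS variant_type,  -- type of the value stored inside the VARIANT
  v:key3::string AS as_string,     -- explicit cast to a string
  v:key3::number AS as_number      -- explicit cast to a number
FROM (
  SELECT PARSE_JSON('{"key3":"12345"}') AS v
) AS t;
```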

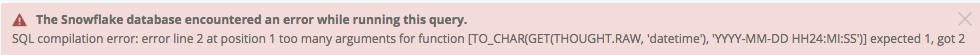

Step 4: Make sure timestamps come through correctly

This is an extension of step 3. LookML offers native timestamp conversion through a dimension_group with a type: time declaration:

Postgres and Snowflake:

- dimension_group: test

type: time

timeframes: []

While this might work for dates in Snowflake, you will most likely see errors cropping up when you try to select Time, Month, Year, etc.

Rather than assuming Looker will handle the timestamp correctly, explicitly cast the new dimension group in its sql parameter, in this case as follows:

Postgres:

- dimension_group: new_time

type: time

timeframes: [time, date, week, month, year]

sql: CAST(JSON_EXTRACT_PATH_TEXT(${TABLE}.test_json_object, 'key4') AS timestamp)

Snowflake:

- dimension_group: new_time

type: time

timeframes: [time, date, week, month, year]

sql: ${TABLE}.test_json_object:key4::timestamp
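Before relying on the timeframes, it can help to confirm in the database that the cast value behaves like a real timestamp. This Postgres sketch assumes the sample key4 value as a literal and applies a few date operations roughly comparable to the date, month and year timeframes. It does not reproduce the SQL Looker itself generates, and the aliases are illustrative.

```sql
-- Postgres: cast key4 to a timestamp, then apply date operations to it.
SELECT
  ts,
  CAST(ts AS date)        AS ts_date,
  date_trunc('month', ts) AS ts_month,
  EXTRACT(YEAR FROM ts)   AS ts_year
FROM (
  SELECT CAST(json_extract_path_text(obj, 'key4') AS timestamp) AS ts
  FROM (
    SELECT '{"key4":"2015-01-01 12:33:24"}'::json AS obj
  ) AS sample
) AS parsed;
```

If any of these expressions fails on real data, the stored strings are probably not in a format the database can cast directly.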
