- Looker & Looker Studio

- Looker Forums

- Modeling

- Re: Rolling Average Daily Active Users - Redshift ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Note: there is a newer version of this analytical pattern available: [Analytic Block] Daily, Weekly, Monthly Active Users. Check it out for a more detailed walkthrough and additional features!

We derive two tables, a simple date table with one column of just dates and a second table with two columns: activity_date and user_id.

We cross join these two tables to create a table with three columns, user_id, activity_date and window_date. In the destination table, there is a row in the table for every day in the 30 days following a transaction.

Some Notes:

- Redshift is generate_series is broken. Hack one using an arbitrary table and ROW_NUMBER()

- Create a simple daily_use table of date/user_id for each day a user did something

- Cross join the daily_use table with the prior 30 days to get a table that looks like [user_id, active_date, window_date]

- This table can get large, so we provide filters to limit the range of values we have to query ({% condition %} expands to the a date range filter against the dates).

Uses the common Ecommerce Views

- include: ecommerce.view.lookml

- view: dates

derived_table:

sql: |

-- ## 1) Create a Date table with a row for each date.

SELECT '2001-01-01'::DATE + d AS date

FROM

-- generate series should work, but it doesn't in redshift

-- generate_series(0,20000) d

(SELECT ROW_NUMBER() OVER(ORDER BY id) -1 AS d FROM orders ORDER BY id LIMIT 20000) AS d

sortkeys: [date]

persist_for: 10 minutes

- explore: use_rolling_30_day_window

joins:

- join: users

foreign_key: user_id

- view: use_rolling_30_day_window

derived_table:

sql: |

WITH daily_use AS (

-- ## 2 ) Create a table of days and activity by user id

SELECT

user_id

, DATE_TRUNC('day', created_at) as activity_date

FROM orders

)

-- ## 3) Cross join activity and dates to get a row for each

-- date in the window.

SELECT

daily_use.user_id

, wd.date as window_date

, daily_use.activity_date

FROM daily_use, ${dates.SQL_TABLE_NAME} AS wd

WHERE

wd.date >= daily_use.activity_date

AND wd.date < daily_use.activity_date + interval '30 day'

-- ## 4) Filter the range of dates we use so don't have to do all of history

AND {% condition activity_date %} daily_use.activity_date {% endcondition %}

AND {% condition window_date %} wd.date {% endcondition %}

fields:

- dimension_group: activity_date

type: date

sql: ${TABLE}.activity_date

- dimension_group: window_date

type: date

sql: ${TABLE}.window_date

- dimension: user_id

- dimension: active_day

type: yesno

sql: ${TABLE}.activity_date = ${TABLE}.window_date

- measure: user_count_active_30_days

type: count_distinct

sql: ${user_id}

detail: [users.id, users.name]

- measure: user_count_active_this_day

type: count_distinct

sql: ${user_id}

detail: [users.id, users.name]

filters:

active_day: yes

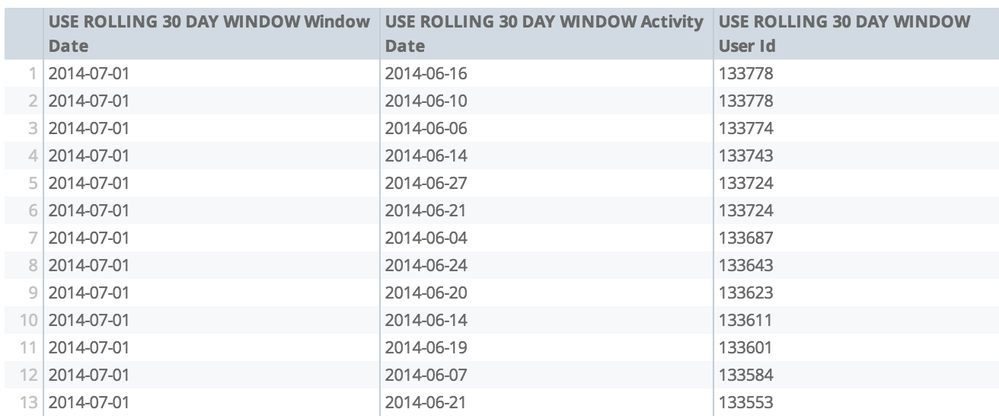

Detailed Look at date 2014-07-01

Count users as active on this date if they have bought anything in the last 30 days. Raw output from the rolling window table.

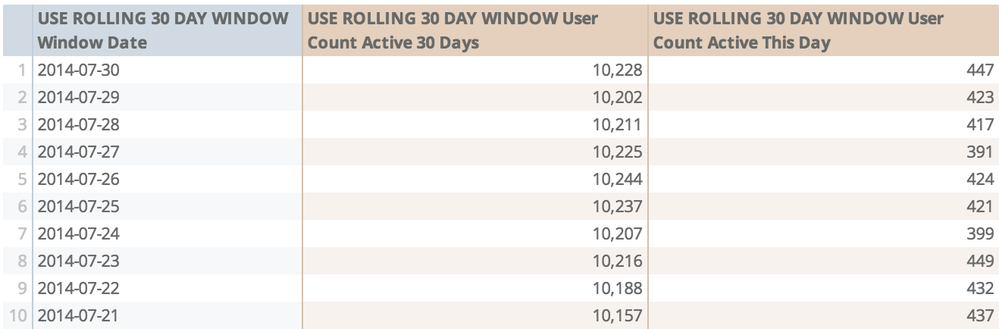

Rolling 30 Day Average Count of Users Placing Orders

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@errows asked how we might add a 7 day rolling average to this pattern.

This can be done by adding a couple of lookML fields.

- dimension: active_7_day

type: yesno

sql: (${TABLE}.window_date::date - ${TABLE}.activity_date::date) < 7

- measure: user_count_active_7_days

type: count_distinct

sql: ${user_id}

drill_fields: [users.id, users.name]

filters:

active_7_day: yes

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Lloyd,

We’ve used this exact approach but we’ve noticed that the numbers we get as outputs for MAU, WAU and DAU are changing from report to report. For example, we’ll get our MAU figure on Monday of 50,000 and then later in the week that number is +/- 5-10%.

We are racking our brains about what could cause that on our side, but can you think of anything that would cause those changes in terms of how the SQL is designed?

Cheers,

Justin

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

To debug this, I would group by activity_date and window_date and user_count and make sure I’m seeing what I expect. Is it possible you are looking forward instead of a backward window?

The other thing that can be weird is timezones. If you have timezones turned on, truncating to dates it is possible that you might have records that show up in a window, but when you look at the normal transactions the values don’t line up. When truncating to date in a derived table, you need to be careful with timezones.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I am new to looker and have been trying to make the 30 day rolling count distinct of user id. I tried following along these instructions but I am stuck. I know it is an old post but I would appreciate some input on how it can be done in Snowflake.

I know of the newer analytical block for MAU and DAU but that one calculated these as dimensions. I want to have them as measures for my end users. The table shown in the screenshot at the end of this post is exactly what i want to achieve.

Thanks,

Fizza

-

access grant

6 -

actionhub

1 -

Actions

8 -

Admin

7 -

Analytics Block

48 -

API

25 -

Authentication

2 -

bestpractice

7 -

BigQuery

69 -

blocks

11 -

Bug

60 -

cache

7 -

case

12 -

Certification

2 -

chart

1 -

cohort

5 -

connection

14 -

connection database

4 -

content access

2 -

content-validator

5 -

count

5 -

custom dimension

5 -

custom field

11 -

custom measure

13 -

customdimension

8 -

Customizing LookML

228 -

Dashboards

144 -

Data

7 -

Data Sources

3 -

data tab

1 -

Database

13 -

datagroup

5 -

date-formatting

12 -

dates

16 -

derivedtable

51 -

develop

4 -

development

7 -

dialect

2 -

dimension

46 -

done

9 -

download

5 -

downloading

1 -

drilling

28 -

dynamic

17 -

embed

5 -

Errors

16 -

etl

2 -

explore

58 -

Explores

5 -

extends

17 -

Extensions

9 -

feature-requests

6 -

Filter

220 -

formatting

13 -

git

19 -

googlesheets

2 -

graph

1 -

group by

7 -

help

1 -

Hiring

2 -

html

19 -

IDE

1 -

imported project

8 -

Integrations

1 -

internal db

2 -

javascript

2 -

join

16 -

json

7 -

label

6 -

link

17 -

links

8 -

liquid

154 -

Looker Studio Pro

1 -

looker_sdk

1 -

LookerStudio

3 -

LookML

858 -

lookml dashboard

20 -

LookML Foundations

114 -

looks

33 -

manage projects

1 -

map

14 -

map_layer

6 -

Marketplace

2 -

measure

22 -

merge

7 -

model

7 -

modeling

26 -

multiple select

2 -

mysql

3 -

nativederivedtable

9 -

ndt

6 -

Optimizing Performance

54 -

parameter

70 -

pdt

35 -

Performance

11 -

periodoverperiod

16 -

persistence

2 -

pivot

3 -

postgresql

2 -

Projects

7 -

python

2 -

Query

3 -

quickstart

5 -

ReactJS

1 -

redshift

10 -

release

18 -

rendering

3 -

Reporting

2 -

schedule

5 -

schedule delivery

1 -

sdk

5 -

singlevalue

1 -

snowflake

16 -

SQL

248 -

System Activity

3 -

table chart

1 -

tablecalcs

53 -

tests

7 -

time

8 -

time zone

4 -

totals

7 -

user access management

3 -

user-attributes

9 -

value_format

5 -

view

24 -

Views

5 -

Visualizations

166 -

watch

1 -

webhook

1 -

日本語

3

- « Previous

- Next »

Twitter

Twitter