- Re: How to find duplicate events ingestion into ch...

Hi All,

Is there any way to find duplicate events ingested into Chronicle? If so, could you please share more information?

With Regards,

Shaik Shaheer

Labels: Ingestion


@jstoner @mikewilusz @manthavish, could you please help me identify duplicate logs in Chronicle?


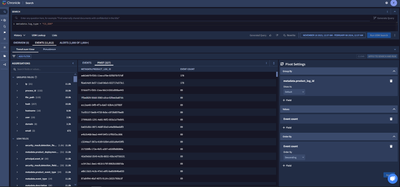

This should be possible using a pivot table, provided the log contains a unique identifier (Global Event ID, Event ID, Log ID, etc.). In the following example we use Google Chronicle's demo instance and the 'Crowdstrike Falcon' log source, where the UDM field holding each event's unique identifier is "metadata.product_log_id".

[1] - First, search for the log type you want. For 'Crowdstrike Falcon', the search is: metadata.log_type = "CS_EDR"

[2] - Navigate to 'Pivot'.

[3] - Apply the Pivot settings as in the screenshot (grouping by the unique identifier).

[4] - Click the ⋮ (more options) menu, export the data to a .csv, and remove every row whose count equals "1" (if you sort in descending order, these will be at the bottom) :).

This shows the event count for each value of the grouped UDM field (here we assume that metadata.product_log_id uniquely identifies each 'CS_EDR' log), so any value with a count above 1 points to a duplicate. Depending on your needs, creating a dashboard may be better suited.
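If the exported pivot CSV has to be filtered regularly, the manual step of removing rows with a count of 1 can be scripted. The following is a minimal Python sketch, assuming the export has one column holding the grouped identifier and one holding the event count; the column names `metadata.product_log_id` and `event_count` used in the demo are hypothetical, so check the header row of your own export.

```python
import csv
import io

def find_duplicate_ids(csv_text, id_column, count_column):
    """Return (id, count) pairs with a count above 1, highest count first."""
    reader = csv.DictReader(io.StringIO(csv_text))
    duplicates = []
    for row in reader:
        # Strip thousands separators such as "1,200" in case the export includes them
        count = int(row[count_column].replace(",", "").strip())
        if count > 1:
            duplicates.append((row[id_column], count))
    # Highest counts first; ties broken by ID so the output order is stable
    duplicates.sort(key=lambda pair: (-pair[1], pair[0]))
    return duplicates

if __name__ == "__main__":
    # Hypothetical export; real column names depend on your pivot settings
    sample = (
        "metadata.product_log_id,event_count\n"
        "abc-1,1\n"
        "abc-2,3\n"
        "abc-3,2\n"
    )
    for log_id, count in find_duplicate_ids(sample, "metadata.product_log_id", "event_count"):
        print(f"{log_id}: {count}")
```

For a real export, read the file with `open(path, newline="").read()` and pass the text in; the demo prints `abc-2: 3` and `abc-3: 2`, dropping the single-count row.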

Hope this helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Ayman C,

Greetings...!!!

Thank you for your suggestion; we tried this method. However, it is tedious for analysts, who have to export the data manually and check the logs individually. Is there an alternative, automated process available?

With Regards,

Shaik Shaheer


Hi Shaik,

Google Chronicle SIEM customers can leverage several automation strategies to check for duplicate ingested data. Here's a breakdown:

1. Hash-Based Deduplication

- Mechanism:
  - Calculate a cryptographic hash (e.g., MD5, SHA-256) of the essential components of each event (consider a combination of timestamp, source IP, key fields).
  - Store the hash values in a fast-access data structure (like a Bloom filter or a hash table).
  - Before ingesting a new event, check its hash against the stored values. If a match is found, it's likely a duplicate.
- Pros:
  - Reliable for detecting exact duplicates.
  - Can be implemented at the pipeline level.
- Cons:
  - Minor changes to an event will produce a different hash, potentially leading to false negatives.
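The hash-based mechanism above can be sketched in a few lines of Python. This is an illustration under assumptions, not a Chronicle feature: the field names in `KEY_FIELDS` are hypothetical, and a plain Python `set` stands in for the hash table (a Bloom filter would use less memory at the cost of occasional false positives).

```python
import hashlib
import json

# Hypothetical field names; pick whichever fields identify an event in your data
KEY_FIELDS = ("timestamp", "source_ip", "user", "action")

def event_fingerprint(event, key_fields=KEY_FIELDS):
    """Return a SHA-256 hex digest of the selected fields in canonical form."""
    subset = {field: event.get(field) for field in key_fields}
    # sort_keys and fixed separators make the encoding independent of dict order
    canonical = json.dumps(subset, sort_keys=True, separators=(",", ":"), default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

class HashDeduplicator:
    """Exact deduplication using an in-memory hash table (Python set) of fingerprints."""

    def __init__(self, key_fields=KEY_FIELDS):
        self.key_fields = key_fields
        self.seen = set()

    def is_duplicate(self, event):
        """Return True if this fingerprint was already seen; otherwise record it."""
        digest = event_fingerprint(event, self.key_fields)
        if digest in self.seen:
            return True
        self.seen.add(digest)
        return False

if __name__ == "__main__":
    dedup = HashDeduplicator()
    events = [
        {"timestamp": "2024-01-01T00:00:00Z", "source_ip": "10.0.0.5", "user": "alice", "action": "login"},
        {"action": "login", "user": "alice", "source_ip": "10.0.0.5", "timestamp": "2024-01-01T00:00:00Z"},
        {"timestamp": "2024-01-01T00:00:01Z", "source_ip": "10.0.0.5", "user": "alice", "action": "login"},
    ]
    for event in events:
        print(dedup.is_duplicate(event))
```

The demo prints `False`, `True`, `False`: the second event has the same fields in a different order, so it hashes identically, while the third differs by one second and gets a new hash, which is exactly the false-negative case listed under Cons.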

2. Similarity Detection with Chronicle Rules

- Mechanism:
  - Create Chronicle detection rules that compare essential event fields using similarity matching thresholds. Consider features like:
    - Near-matching timestamps
    - Similar IP addresses (perhaps within the same subnet)
    - Matching key fields (e.g., usernames, file names)
  - Optionally, use fuzzy matching or string comparison algorithms (Levenshtein distance) for more flexible comparisons.
- Pros:
  - Detects duplicates even with slight modifications.
  - Leverages Chronicle's built-in rule engine, making it accessible to security analysts.
- Cons:
  - Can be resource-intensive with large data volumes.
  - Requires careful rule tuning to avoid false positives.
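The similarity features listed above can be illustrated outside Chronicle. The Python sketch below is not YARA-L rule syntax; it only shows the kind of comparison such a rule would encode. The field names (`timestamp`, `source_ip`, `user`, `file_name`) and thresholds (2-second skew, /24 subnet, edit distance of 2) are assumptions chosen for illustration.

```python
import ipaddress
from datetime import datetime, timedelta

def levenshtein(a, b):
    """Edit distance (insertions, deletions, substitutions) via dynamic programming."""
    if len(a) < len(b):
        a, b = b, a  # keep the DP row as short as possible
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution
            ))
        previous = current
    return previous[-1]

def same_subnet(ip_a, ip_b, prefix=24):
    """True if ip_b falls inside the network of ip_a with the given prefix length."""
    network = ipaddress.ip_network(f"{ip_a}/{prefix}", strict=False)
    return ipaddress.ip_address(ip_b) in network

def likely_duplicates(a, b, max_skew=timedelta(seconds=2), prefix=24, max_edit=2):
    """Heuristic near-duplicate test; every threshold here is an illustrative assumption."""
    if abs(a["timestamp"] - b["timestamp"]) > max_skew:
        return False  # timestamps too far apart
    if not same_subnet(a["source_ip"], b["source_ip"], prefix):
        return False  # different subnets
    if a["user"] != b["user"]:
        return False  # key field must match exactly
    # Fuzzy match on the file name to tolerate small modifications
    return levenshtein(a["file_name"], b["file_name"]) <= max_edit

if __name__ == "__main__":
    base = datetime(2024, 1, 1, 12, 0, 0)
    first = {"timestamp": base, "source_ip": "10.1.2.3", "user": "alice", "file_name": "report.docx"}
    second = {"timestamp": base + timedelta(seconds=1), "source_ip": "10.1.2.77",
              "user": "alice", "file_name": "report.doc"}
    print(likely_duplicates(first, second))  # True
```

Pairwise comparison like this grows quadratically with the number of candidate events, which is one concrete reason the approach can become resource-intensive at large volumes.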

3. External Data Deduplication

- Mechanism:
  - Send a stream of normalized events or pre-calculated hashes to a dedicated deduplication service or utilize a log management/SIEM platform that has deduplication features natively.
- Pros:
  - Offloads computation from Chronicle.
  - Potentially more advanced deduplication algorithms and centralized management.
- Cons:
  - Adds complexity to the data pipeline.
  - Might introduce latency.
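For the external approach, a deduplication stage placed in front of the forwarder only needs to remember pre-calculated hashes for a bounded time window. The following Python sketch is one possible design, assuming the keys are digests computed upstream and that a 300-second window (a hypothetical default) is long enough to catch repeats; it is not part of any Chronicle component.

```python
import time
from collections import OrderedDict

class WindowedDeduplicator:
    """Forward a key only if it was not already seen within the last window_seconds."""

    def __init__(self, window_seconds=300.0, clock=time.monotonic):
        self.window = window_seconds
        self.clock = clock
        self._seen = OrderedDict()  # key -> first-seen time, oldest entry first

    def _evict_expired(self, now):
        # Entries were inserted in time order, so stop at the first one still in the window
        while self._seen:
            _, first_seen = next(iter(self._seen.items()))
            if now - first_seen <= self.window:
                break
            self._seen.popitem(last=False)

    def accept(self, key):
        """Return True to forward the event, False to drop it as a duplicate."""
        now = self.clock()
        self._evict_expired(now)
        if key in self._seen:
            return False
        self._seen[key] = now
        return True

if __name__ == "__main__":
    fake_now = [0.0]  # controllable clock for the demo
    dedup = WindowedDeduplicator(window_seconds=60, clock=lambda: fake_now[0])
    print(dedup.accept("digest-1"))  # True: first sighting
    fake_now[0] = 30.0
    print(dedup.accept("digest-1"))  # False: repeat inside the window
    fake_now[0] = 61.0
    print(dedup.accept("digest-1"))  # True: window expired
```

Because each key is timestamped only when first seen and entries are evicted oldest-first, memory is bounded by the number of distinct events inside the window. The trade-off is that a repeat arriving after the window expires is forwarded again.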


Jumping into this discussion to ask: does Chronicle have any built-in, automated, or default mechanism that prevents ingesting the same log within a given time frame? For example, when pulling O365 audit logs, will Chronicle know not to ingest a log that was already ingested in a previous pull?


Ben,

I am curious whether any of your customers have used the hash-based (hash table) method for deduplication. I would be interested in hearing more about this sort of setup.

Thanks,

Austin


Hi Shaik,

I think there are numerous ways you could solve this. You could create a dashboard with a scheduled delivery that contains the data you need. You receive this data as a CSV, which you can then review or feed into further automation (depending on your goal). This works if the source attaches a vendor-specific identifier that uniquely identifies each event; Office 365 logs are one example of a vendor that provides this. In the screenshot below I'm using the public Google Chronicle SIEM instance to test this concept, with 'WORKSPACE_ACTIVITY' as the example log source for identifying duplicate logs. Feel free to use the dashboard and remove the filters within the table to fit your needs!

https://demo.backstory.chronicle.security/dashboards?name=7432

To use this, save the dashboard and then import it into the SIEM Dashboard (legacy dashboard) on your Chronicle instance.

Once the dashboard is imported, and modified to suit your needs, set up scheduled reporting!


Hi, how and where can we test or implement this feature? I have one log source that randomly duplicates logs (same timestamp, etc.). Assuming we cannot solve this at the source, I would like it solved before alerts are generated. Is this currently possible?


Hello,

Please open a support case with the feed ID - they will fix this.


Oh, it's not a problem with the feed. I just have a log source that sometimes sends duplicates (via syslog). I think this is due to the way it reads from the file and/or some artifact of the HA setup it runs on. While I will try to solve it at the source, I was wondering if there is a way to deduplicate in the log ingestion pipeline.
