Prevent Page Indexing on Google Sites

Is there currently no way to prevent web crawlers from indexing selected pages on a Google Site?

This is a problem because we often want to create page "templates" on Google Sites for re-use, but these get indexed.

There is no guarantee that excluding these pages via robots.txt will keep them out of the index. According to Google Search Central, the only reliable way is the noindex meta tag, which is not currently available in Google Sites: https://developers.google.com/search/docs/crawling-indexing/robots/intro?hl=en
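
For reference, the noindex rule mentioned above is an ordinary `<meta name="robots" content="noindex">` tag in a page's head. Below is a minimal Python sketch for checking whether a given page's HTML carries it. It assumes you already have the HTML as a string; the class and function names (`RobotsMetaParser`, `has_noindex`) and the sample pages are hypothetical, and it does not inspect the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. a bare attribute), so default to "".
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical sample pages for illustration only.
blocked_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
plain_page = "<html><head><title>Template</title></head></html>"

print(has_noindex(blocked_page))
print(has_noindex(plain_page))
```

A check like this only tells you what a page currently declares. It cannot add the tag to a Google Site, which is the limitation described above.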

Perhaps it would be best not to click "Publish" on the site templates at all, and instead share them via Drive only with the people who need access to them?

Thanks. Having already published, is there a way to unpublish a specific page? I can't see any documentation for that capability.

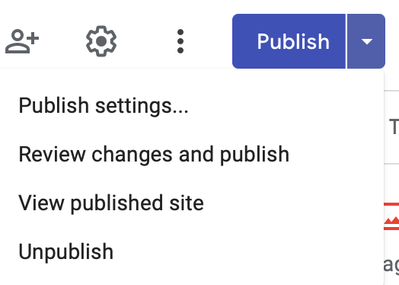

Click the down-arrow at the right side of the publish button and choose "unpublish":
