Questions about Machine Learning in AppSheet Predi...

Hello!

May I ask some questions about machine learning in AppSheet Prediction?

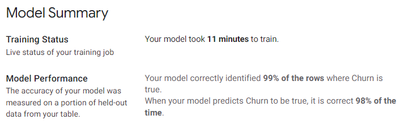

1) For the result from Classification (Target col = Yes/No), I have the following result:

a) Is the first statement (i.e. “Your model correctly identified 99% of the rows where Churn is true.”) actually the “Recall” value in machine learning, or is it “Accuracy”?

b) What about the second statement (i.e. “When your model predicts Churn to be true, it is correct 98% of the time”)? Is this “Precision” in machine learning?
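For reference, the standard definitions can be written out from confusion-matrix counts. The wording of the first statement matches the usual definition of recall and the second matches precision, but that is a reading of the wording, not something confirmed from AppSheet's documentation. The function name and the counts below are hypothetical, chosen only to show that recall, precision and accuracy generally differ.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Binary-classification metrics from confusion-matrix counts,
    with "Churn is true" treated as the positive class."""
    return {
        # Of the rows where Churn really is true, the share the model flagged.
        "recall": tp / (tp + fn),
        # Of the rows the model flagged as Churn = true, the share that were right.
        "precision": tp / (tp + fp),
        # Share of all rows, of either class, that the model got right.
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }


# Hypothetical counts, not taken from the model in this post.
metrics = classification_metrics(tp=990, fp=20, fn=10, tn=180)
# recall = 0.99, precision ≈ 0.980, accuracy = 0.975
```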

2) For classification (target column = Yes/No), when the target column is imbalanced (e.g. 80% Yes and 20% No), does the AppSheet predictive model apply any balancing method to address this?

The result shown in Question 1 came from about 5,600 rows of data, with 83% Yes and 17% No in the target column. The result looks too good to me, which makes me wonder whether it is genuine or an effect of the imbalanced data.
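A quick way to judge whether high scores come from the imbalance is to compare them with a trivial baseline that always predicts the most common label. The sketch below is generic Python, not an AppSheet feature; the function name is hypothetical, and treating "Yes" as "Churn is true" is an assumption.

```python
def majority_baseline(labels: list[str], positive: str) -> dict[str, float]:
    """Scores of a trivial 'model' that always predicts the most common label."""
    majority = max(set(labels), key=labels.count)
    # Every prediction equals `majority`, so the confusion counts follow directly.
    tp = sum(1 for y in labels if y == positive and majority == positive)
    fp = sum(1 for y in labels if y != positive and majority == positive)
    fn = sum(1 for y in labels if y == positive and majority != positive)
    return {
        "accuracy": labels.count(majority) / len(labels),
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


# Same 83% / 17% split as described above; "Yes" assumed to mean Churn is true.
labels = ["Yes"] * 83 + ["No"] * 17
baseline = majority_baseline(labels, positive="Yes")
```

With an 83/17 split and Yes as the positive class, this baseline already scores 83% accuracy, 100% recall and 83% precision, so that is the bar a trained model should be compared against. If the positive class were the minority instead, the same baseline would have 0% recall.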

3) If AppSheet models do not handle imbalanced data, do you have any suggestions for handling it manually before loading the data into AppSheet, given that we really don't have enough data for the minority class?
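One common manual option is random oversampling: duplicating randomly chosen rows of the minority class until every class has as many rows as the largest one. Random undersampling of the majority class is the mirror-image alternative when there is data to spare. This is a generic technique, not something AppSheet is known to do; the function name, the rows-as-dicts layout and the `Churn` column are assumptions for illustration.

```python
import random
from collections import Counter


def random_oversample(rows: list[dict], target: str, seed: int = 0) -> list[dict]:
    """Duplicate randomly chosen rows of smaller classes until every class
    has as many rows as the largest class (random oversampling)."""
    rng = random.Random(seed)
    counts = Counter(row[target] for row in rows)
    largest = max(counts.values())
    balanced = list(rows)
    for label, n in counts.items():
        members = [row for row in rows if row[target] == label]
        # Sample with replacement to fill this class's shortfall (0 for the largest class).
        balanced.extend(rng.choices(members, k=largest - n))
    rng.shuffle(balanced)
    return balanced


# Hypothetical rows with the same 83/17 split as the churn data.
rows = [{"id": i, "Churn": "Yes"} for i in range(83)] + [
    {"id": i, "Churn": "No"} for i in range(83, 100)
]
balanced = random_oversample(rows, target="Churn")
```

After this, both classes have 83 rows. One caveat: if the tool splits the uploaded data into training and test sets itself, duplicated minority rows can end up on both sides of the split, which tends to inflate the reported metrics rather than improve the model.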

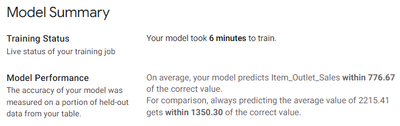

4) For Regression result as follows:

Can I check whether 776.67 is actually the value Mean Absolute Error (MAE)?
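If actual and predicted values are available for a set of rows, one way to check which metric a reported figure corresponds to is to compute the common candidates side by side. The sketch below shows mean absolute error (MAE) and root mean squared error (RMSE); both are in the target column's units, and RMSE is always at least as large as MAE because it weights large errors more heavily. Which metric AppSheet actually reports is the open question here; the values below are hypothetical.

```python
import math


def mae(actual: list[float], predicted: list[float]) -> float:
    """Mean absolute error: the average size of the errors."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: list[float], predicted: list[float]) -> float:
    """Root mean squared error: penalises large errors more than MAE does."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


# Hypothetical values, not from the model in this post.
actual = [1000.0, 1500.0, 2000.0, 2500.0]
predicted = [1100.0, 1400.0, 2300.0, 2500.0]
# mae(actual, predicted) = 125.0, rmse(actual, predicted) ≈ 165.83
```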

5) Do the models in AppSheet remove or otherwise handle outliers during training?
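If outliers are not handled during training, one common manual approach is to drop extreme values of a numeric column before loading the data, for example with Tukey's fences: values more than k times the interquartile range (IQR) below the first quartile or above the third. This is a generic technique, not a description of what AppSheet does internally; the function name and the conventional k = 1.5 default are illustrative, and whether a value is a genuine outlier is ultimately a domain decision.

```python
import statistics


def iqr_filter(values: list[float], k: float = 1.5) -> list[float]:
    """Keep values inside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    # Preserve the original order of the values that pass the filter.
    return [v for v in values if low <= v <= high]


# Hypothetical column with one extreme value.
values = [10.0, 12.0, 11.0, 13.0, 12.0, 11.0, 10.0, 95.0]
kept = iqr_filter(values)
```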

Thank you so much in advance! 😊

Labels: Intelligence
