Site Reliability Engineering (SRE) Fundamentals

Site Reliability Engineering (SRE) was born out of an internal need at Google in the early 2000s and has since evolved to become the industry-leading practice for service reliability.

In our latest Community event, Technical Account Manager Pamella Canova (@pamcanova) shared fundamental SRE principles and practices you can apply in your organization to make your systems more scalable, reliable, and efficient.

In this article, we share key takeaways from the presentation, the session recording, slides, and supporting resources so you can refer back to them at any time.

If you have any further questions, please add a comment below and we’d be happy to help!

It's our goal to provide a trusted space where you can receive support and guidance along your cloud journey. So if you have any feedback or topic requests for our next sessions, please let us know in the comments, or by submitting the feedback form.

You can keep an eye on upcoming sessions led by Google Cloud Technical Account Managers in the Community here. Thank you!

- Session recording

- What is SRE?

- Key principles of SRE: Error budgets, SLIs, SLOs, and SLAs

- Error budgets

- SLIs, SLOs, and SLAs

- SRE areas of practice

- Metrics, monitoring, and alerts

- Demand forecasting and capacity planning

- Change management

- Emergency response

- Culture and toil management

- How to get started with SRE

- Supporting resources and how to get SRE help

Session recording

See the session in Portuguese here. Plus, see the slides in English and Portuguese attached at the bottom of this article.

In this article, we’ll cover five key areas of SRE:

- What is SRE? The core problem that SRE solves and the high-level organizational structures that facilitate the practice of SRE.

- Key principles of SRE. The guiding principles SREs use to keep systems reliable.

- SRE practice areas. The areas of responsibility and expertise SREs have.

- How to get started with SRE. The summary of next steps to adopt SRE within your organization.

- Supporting resources. The resources, training, and certifications Google provides to help you achieve your reliability goals with SRE.

What is SRE?

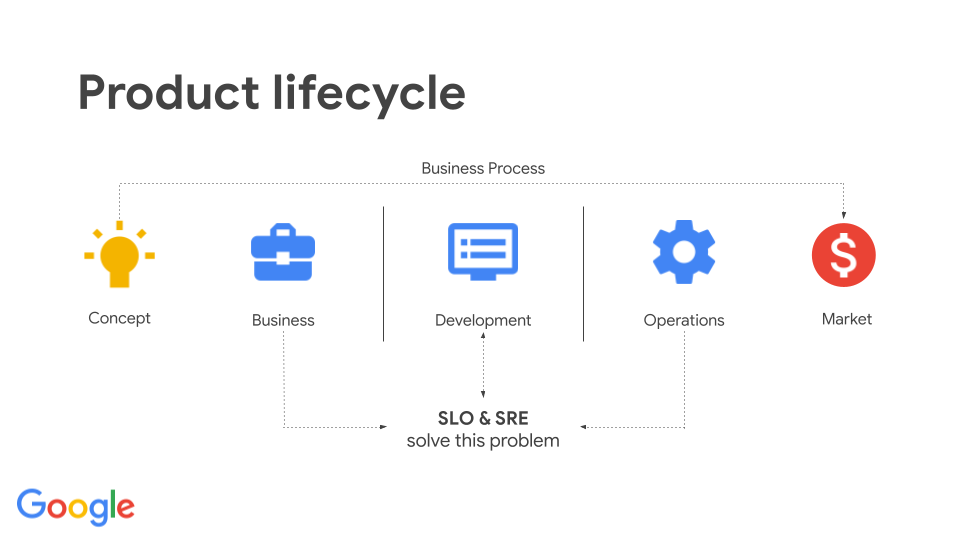

Typically in software engineering, developers build new features, pass them along to operators, and then move on to the next thing. Operators, in turn, are responsible for everything from scalability and reliability to maintenance of the software.

In other words, developers want to go as fast as they can, while operators are looking to slow things down to reduce risks.

Since each group's priorities are misaligned with the needs of the business, this kind of setup often creates tension between developers and operators, and ultimately, slows down the product lifecycle.

SRE was born at Google in the early 2000s, when a team of software engineers led by Ben Treynor Sloss was tasked with making Google’s already large-scale sites more reliable, efficient, and scalable. The practices they developed met Google’s needs so well that other big tech companies adopted them too and brought new practices to the table.

Site Reliability Engineering (SRE), as it has come to be generally defined at Google, is what happens when you ask a software engineer to solve an operational problem. SRE is an essential part of engineering at Google. It’s a mindset, a job role, and a set of practices, metrics, and prescriptive ways to ensure system reliability.

Key principles of SRE: Error budgets, SLIs, SLOs, and SLAs

There’s a common misconception that 100% reliability should be the goal for your systems - that they’re available 100% of the time. However, having a 100% availability requirement slows down developers’ ability to deliver new features, updates, and improvements to a system.

Rather than a 100% reliability target, developers, product management, and SRE teams should define a mutually acceptable availability target that meets the needs of your users. This is where error budgets come into play.

Error budgets

The error budget is the amount of unavailability you’re willing to tolerate, which is used to achieve a balance between system stability and developer agility. As a general rule of thumb:

- When an adequate error budget is available, you can innovate rapidly and improve the product or add product features.

- When the error budget is diminished, slow down and focus on reliability features.
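The rule of thumb above can be written down as a simple policy. This is a minimal sketch, not a Google tool: the function name `release_focus` and the 25% `freeze_threshold` are hypothetical, and each team should agree on its own cutoff.

```python
def release_focus(remaining_budget_fraction: float, freeze_threshold: float = 0.25) -> str:
    """Suggest where a team should focus, given the fraction of error budget left.

    remaining_budget_fraction: 1.0 means the full budget is unused; 0.0 or below
    means it is used up (or overspent).
    freeze_threshold: hypothetical cutoff; agree on your own value with your
    product, development, and SRE teams.
    """
    if remaining_budget_fraction > freeze_threshold:
        return "ship features"
    return "focus on reliability"


print(release_focus(0.80))  # plenty of budget left
print(release_focus(0.10))  # budget nearly exhausted
```

In practice, teams usually capture this decision in a written error budget policy rather than in code, but making the threshold explicit removes ambiguity about when to switch focus.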

SLIs, SLOs, and SLAs

Hand in hand with error budgets are SLIs, SLOs, and SLAs.

- SLI (Service Level Indicator): a metric indicating how well your service is doing at any given moment (e.g., availability, latency, or throughput)

- SLO (Service Level Objective): the target value for your SLI over a window of time, which is agreed upon by the business, developers, and operators.

- SLA (Service Level Agreement): an agreement with your users that defines the consequences if you fail to meet your objectives (e.g., refunding money)
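To make the SLI/SLO relationship concrete, here is a sketch of a common availability SLI: the proportion of valid requests served successfully, compared against an SLO target. The request counts are hypothetical, and the SLA side (contractual consequences) is deliberately not modeled.

```python
def availability_sli(good_requests: int, total_requests: int) -> float:
    """Availability SLI: proportion of valid requests that were served successfully."""
    if total_requests == 0:
        return 1.0  # no traffic in the window: treated as meeting the objective (a policy choice)
    return good_requests / total_requests


def meets_slo(sli: float, slo: float) -> bool:
    """True if the measured SLI is at or above the SLO target."""
    return sli >= slo


# Hypothetical 30-day window: 1,000,000 valid requests, 500 of them failed.
sli = availability_sli(good_requests=999_500, total_requests=1_000_000)
print(f"SLI = {sli:.4%}, meets 99.9% SLO: {meets_slo(sli, 0.999)}")
```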

Your error budget is 100% minus your SLO, measured over a period of time. It shows whether your system has been more or less reliable than needed during that period, and how many minutes of downtime are allowed.

For example, if your availability SLO is 99.9%, your error budget over a 30-day period is (1 - 0.999) x 30 days x 24 hours x 60 minutes = 43.2 minutes. If your system started the 30-day period with the full 43.2-minute budget and has had 10 minutes of downtime since, the remaining error budget is 33.2 minutes.
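The same arithmetic as a small sketch, assuming a time-based error budget (downtime minutes) as in the example; request-based budgets work the same way with event counts instead of minutes. The function names are illustrative.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Allowed downtime in minutes: (1 - SLO) x window length in minutes."""
    return (1 - slo) * window_days * 24 * 60


def remaining_budget_minutes(slo: float, downtime_minutes: float,
                             window_days: int = 30) -> float:
    """Budget left after subtracting the downtime already observed in the window."""
    return error_budget_minutes(slo, window_days) - downtime_minutes


budget = error_budget_minutes(0.999)
remaining = remaining_budget_minutes(0.999, downtime_minutes=10)
print(f"budget: {budget:.1f} min, remaining: {remaining:.1f} min")
# budget: 43.2 min, remaining: 33.2 min
```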


SRE areas of practice

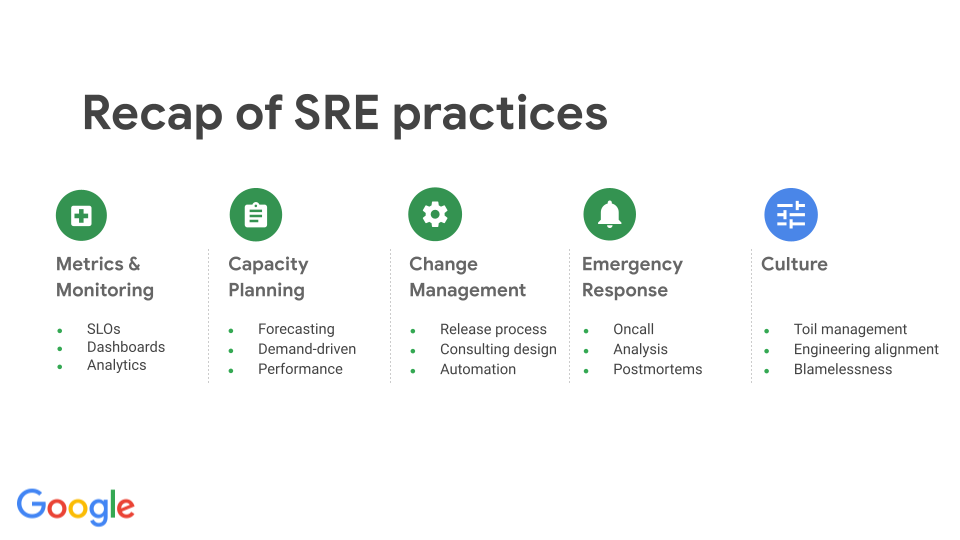

The concepts of SLOs and error budgets are all well and good, but how do we actually put them into practice and defend them day to day? We need to work in a few key areas of practice:

- Metrics, monitoring, and alerts

- Demand forecasting and capacity planning

- Change management

- Emergency response

- Culture and toil management

Metrics, monitoring, and alerts

To maintain reliable systems, it’s important to measure how well you’re doing against your targets and to create alerts that signal when your error budget is threatened.

Google Cloud provides an SLO Monitoring feature that helps reduce the complexity of monitoring and alerting. With SLO Monitoring, you select the desired SLIs (reliability metrics) and SLOs (target values), and the framework handles the calculations required to monitor SLOs and error budgets.
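One of those calculations is the burn rate: how fast you are consuming the error budget relative to the SLO. The sketch below shows only the underlying arithmetic of burn-rate alerting, a common generic technique; it does not use or mirror the Cloud Monitoring API, and the alert threshold of 10 and the request counts are illustrative values.

```python
def burn_rate(bad_events: int, total_events: int, slo: float) -> float:
    """How fast the error budget is being consumed relative to the SLO.

    1.0 means the budget would be used up exactly at the end of the SLO window;
    higher values mean it will run out sooner.
    """
    if total_events == 0:
        return 0.0
    error_rate = bad_events / total_events
    return error_rate / (1 - slo)


def should_alert(rate: float, threshold: float = 10.0) -> bool:
    # threshold is an illustrative value, not a recommended default
    return rate >= threshold


# Hypothetical last hour: 120 failed requests out of 10,000 against a 99.9% SLO.
rate = burn_rate(bad_events=120, total_events=10_000, slo=0.999)
print(f"burn rate: {rate:.1f}, alert: {should_alert(rate)}")
```

Alerting on burn rate rather than on raw error counts ties the page directly to the threat to your error budget.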

Demand forecasting and capacity planning

Demand forecasting and capacity planning are critical to make sure you have enough resources available to meet demand and your reliability goals, considering both organic growth (increased product adoption and usage by end users/customers) and inorganic growth (launches and new features that consume more resources).

Not only is it important to make sure you have enough capacity, but your CFO surely cares a lot about the cost and efficiency of running your service as well. If you over-provision resources, your service runs well, but you’re spending unnecessarily on capacity you don’t need. If you under-provision resources, you save money, but to the detriment of your service performance and user experience.
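A back-of-the-envelope capacity estimate combines organic and inorganic growth into a forecast peak, then adds headroom and a spare instance for redundancy. This is a simplified sketch: the growth figures, the 20% headroom, the per-instance throughput, and the N+1 spare are all hypothetical inputs.

```python
import math


def required_instances(forecast_peak_qps: float, qps_per_instance: float,
                       headroom: float = 0.2, spare_instances: int = 1) -> int:
    """Instances needed to serve the forecast peak with headroom, plus spares for redundancy."""
    needed_for_load = math.ceil(forecast_peak_qps * (1 + headroom) / qps_per_instance)
    return needed_for_load + spare_instances


# Hypothetical forecast: organic growth plus an upcoming feature launch (inorganic).
current_peak_qps = 8_000
organic_growth = 0.15       # 15% expected increase in usage
launch_extra_qps = 1_500    # extra load from the new feature
forecast = current_peak_qps * (1 + organic_growth) + launch_extra_qps
print(required_instances(forecast, qps_per_instance=500))
```

The `headroom` parameter is where the cost trade-off shows up: raising it protects reliability against forecast error but pays for idle capacity, while lowering it saves money at the risk of under-provisioning.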

Check out this article, Strategic Cloud Capacity Planning, for best practices based on the Google Cloud Architecture Framework.

Change management

Good change management is about managing risk - even with the smallest changes - in a routine, programmatic way. The business, developers, and operators should be aligned on change management processes, considering the following best practices:

- Implement progressive rollouts, rather than global changes

- Quickly and accurately detect problems using monitoring and alerting tools

- Roll back changes safely when problems arise

- Remove humans from the loop with automation to reduce errors and fatigue and improve velocity
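The practices above fit together in an automated progressive rollout: expose a change to a growing fraction of traffic, check health at each stage, and roll back automatically on the first failure. This is a minimal sketch; `deploy`, `is_healthy`, and `rollback` are hypothetical callbacks standing in for your deployment and monitoring tooling, and a real rollout would also wait a bake period at each stage before checking health.

```python
from typing import Callable, Sequence


def progressive_rollout(
    stages: Sequence[float],
    deploy: Callable[[float], None],
    is_healthy: Callable[[], bool],
    rollback: Callable[[], None],
) -> bool:
    """Roll out a change in increasing traffic fractions.

    Stops and rolls back at the first stage whose health check fails.
    Returns True if every stage passed, False if the change was rolled back.
    """
    for fraction in stages:
        deploy(fraction)       # send `fraction` of traffic to the new version
        if not is_healthy():   # e.g. compare the new version's SLIs against the baseline
            rollback()         # automated, so no human has to decide under pressure
            return False
    return True


# Usage with stand-in callbacks; a real system would call your deployment tooling.
events = []
completed = progressive_rollout(
    stages=[0.01, 0.10, 0.50, 1.00],
    deploy=lambda fraction: events.append(f"deploy {fraction:.0%}"),
    is_healthy=lambda: True,
    rollback=lambda: events.append("rollback"),
)
print(completed, events)
```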

Emergency response

It’s unrealistic to expect you’ll never have something break. So when it does, you need to have a well-defined process in place.

Both developers and SREs should know the emergency response processes and practice them in advance, so that when you’re faced with a real incident, you don’t panic and can focus on mitigating the issue and restoring the service to a healthy state. Once that’s done, you can turn to the longer-term work of troubleshooting and fixing the underlying issues so they don’t happen again.

Before an incident occurs, you need to define your incident threshold - at what point do you declare an incident? When do you bring in other responders to help?

Typically, your incident threshold is determined by how much damage is done to your SLO (how much of your error budget you've consumed). If you're about to run out of your entire error budget for the quarter in the next few hours, you probably want to declare an incident. On the other hand, if you have it under control and your error budget isn't at risk, then you can spend a few more hours to look at what's happening on your own.

Define these thresholds ahead of time so that when things go wrong, people don't have to wonder or worry about whether to declare an incident.
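One way to write such a threshold down is to project how many hours remain before the error budget runs out at the current rate of consumption, and declare an incident below an agreed limit. This sketch is illustrative: the six-hour `threshold_hours` and the function names are hypothetical, and `burn_minutes_per_hour` means minutes of error budget consumed per hour of wall-clock time.

```python
def hours_until_exhausted(remaining_budget_minutes: float,
                          burn_minutes_per_hour: float) -> float:
    """Projected hours until the error budget is used up at the current burn."""
    if remaining_budget_minutes <= 0:
        return 0.0              # budget already exhausted
    if burn_minutes_per_hour <= 0:
        return float("inf")     # nothing is being consumed right now
    return remaining_budget_minutes / burn_minutes_per_hour


def should_declare_incident(remaining_budget_minutes: float,
                            burn_minutes_per_hour: float,
                            threshold_hours: float = 6.0) -> bool:
    """Declare once the budget is projected to run out within threshold_hours.

    threshold_hours is a hypothetical value: agree on yours before an incident.
    """
    return hours_until_exhausted(remaining_budget_minutes,
                                 burn_minutes_per_hour) <= threshold_hours


# 33.2 minutes of budget left (as in the 99.9% SLO example), at two burn rates.
print(should_declare_incident(33.2, burn_minutes_per_hour=10.0))  # about 3.3 hours left
print(should_declare_incident(33.2, burn_minutes_per_hour=0.5))   # about 66 hours left
```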

After an incident is resolved, your focus should be on the postmortem: making sure you’ve identified the root cause of the issue, preventing it from happening again, and having an action plan in place to improve for future scenarios. See a detailed postmortem checklist from Google Cloud here.

As a final note, postmortems must be grounded in a culture of blamelessness:

- Postmortems must focus on identifying the contributing causes without singling out any individual or team

- A blamelessly-written postmortem assumes that everyone involved in an incident had good intentions

- "Human" errors are systems problems. You can’t “fix” people, but you can fix systems and processes to better support people in making the right choices

- If a culture of finger pointing prevails, people will not bring issues to light for fear of punishment

Culture and toil management

The last key practice area of SRE is a cultural one - the ability for SREs to regulate their operational workload, also known as toil management.

We define operational work as the things you need to do to run a service that are manual, repetitive, automatable, reactive, without enduring value (no long-term system improvements), or that scale linearly (grow with user count or service size).

Operational work is important because not everything can be automated, and it provides the hands-on experience you need to improve your service, design better systems, and know what can be automated.

However, you don’t want all of your SREs’ time spent on operational work. At Google, we have a policy of capping operational work at 50% of each SRE’s time.
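Enforcing a cap like this requires measuring it. The sketch below assumes a team logs its hours and tags each entry as operational work or not; the `WorkEntry` record and the sample week are hypothetical, and only the 50% cap comes from the policy described above.

```python
from dataclasses import dataclass

TOIL_CAP = 0.5  # the 50% cap on operational work described above


@dataclass
class WorkEntry:
    hours: float
    is_toil: bool  # manual, repetitive, automatable work with no enduring value


def toil_fraction(entries: list[WorkEntry]) -> float:
    """Share of logged hours spent on operational work."""
    total = sum(e.hours for e in entries)
    if total == 0:
        return 0.0
    return sum(e.hours for e in entries if e.is_toil) / total


def over_toil_cap(entries: list[WorkEntry], cap: float = TOIL_CAP) -> bool:
    return toil_fraction(entries) > cap


# Hypothetical week for one SRE.
week = [
    WorkEntry(hours=12, is_toil=True),   # handling repetitive tickets
    WorkEntry(hours=8, is_toil=True),    # manual deployments
    WorkEntry(hours=20, is_toil=False),  # automating those deployments
]
print(f"{toil_fraction(week):.0%} toil, over cap: {over_toil_cap(week)}")
```

A team that consistently exceeds the cap has a signal to push back on new operational load or to prioritize automation, which is the self-regulating feedback loop the next section describes.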

A good team culture with the right mix of skills and workload amongst team members helps alleviate operational burden and empower SREs to focus on work that delivers value for the business.

How to get started with SRE

If you’re relatively new to the concept of SRE or are just beginning to stand up a culture of SRE in your organization, we recommend you start with these four things:

- Start with Service Level Objectives (SLOs). If you don't have a measure of reliability, you have no idea how reliable your service is.

- Hire people who write software. You need to hire people on your operations team who write software or who can upskill people on that team - they'll be instrumental in replacing manual work with automation.

- Ensure parity of respect. People working in operations need to be enthusiastic about automation and have parity of respect with the product development and software engineering team.

- Provide a feedback loop for self-regulation. You need to have people who can be strategic and selective about their work - people who are able to push back and say, "we can't really support this new product right now because we're overwhelmed with what we have now."

As a final piece of advice, start small and grow from there. Pick one service to run according to the SRE model, empower the team with strong executive sponsorship and support, provide a culture of psychological safety, measure your progress, and aim for improvements over time - not perfection.

Supporting resources and how to get SRE help

- Google SRE Resources (books, articles, trainings, tutorials, and more)

- How to Maximize Service Reliability with the Google Cloud Architecture Framework

- SRE vs. DevOps: Competing standards or close friends?

- How SRE teams are organized, and how to get started

- Do you have an SRE team yet? How to start and assess your journey

- Site Reliability Engineering: Measuring and Managing Reliability (Coursera)
